Implementing an LSTM in TensorFlow without tf.nn.rnn_cell

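This post improves on the code from my previous post by replacing the plain RNN with an LSTM.

The changes are in roughly the following places.

1. Implementing lstm_cell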

def lstm_cell(rnn_input, pre_output, memory):
    # Input gate and candidate memory
    with tf.variable_scope('input_gate', reuse=tf.AUTO_REUSE):
        wi = tf.get_variable('wi', shape=[num_classes+memory_size, memory_size])
        bi = tf.get_variable('bi', shape=[memory_size], initializer=tf.constant_initializer(0.0))
        wc = tf.get_variable('wc', shape=[num_classes+memory_size, memory_size])
        ci = tf.get_variable('ci', shape=[memory_size], initializer=tf.constant_initializer(0.0))
        # shape: [batch_size, num_classes] => concat => [batch_size, num_classes+memory_size] => matmul and bias => [batch_size, memory_size]
        it = tf.sigmoid(tf.matmul(tf.concat([rnn_input, pre_output], 1), wi) + bi)
        ic = tf.tanh(tf.matmul(tf.concat([rnn_input, pre_output], 1), wc) + ci)
    # Forget gate
    with tf.variable_scope('forget_gate', reuse=tf.AUTO_REUSE):
        wf = tf.get_variable('wf', shape=[num_classes+memory_size, memory_size])
        bf = tf.get_variable('bf', shape=[memory_size], initializer=tf.constant_initializer(0.0))
        ft = tf.sigmoid(tf.matmul(tf.concat([rnn_input, pre_output], 1), wf) + bf)
    # Update the memory
    with tf.variable_scope('memory', reuse=tf.AUTO_REUSE):
        c = memory*ft + ic*it
    # Output gate
    with tf.variable_scope('output_gate', reuse=tf.AUTO_REUSE):
        wo = tf.get_variable('wo', shape=[num_classes+memory_size, memory_size])
        bo = tf.get_variable('bo', shape=[memory_size], initializer=tf.constant_initializer(0.0))
        ot = tf.sigmoid(tf.matmul(tf.concat([rnn_input, pre_output], 1), wo) + bo)
        ht = ot*tf.tanh(c)
    # Return the output and the updated memory; both are inputs to the next lstm_cell call
    return ht, c

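This is a simple LSTM cell. To build a multi-layer LSTM, you can stack several of these cells; each layer then needs its own variable scope, because with reuse=tf.AUTO_REUSE identical scope names would make the layers share weights.

The implementation follows my earlier post 《lstm的理解》 (Understanding LSTM).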
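To make "stacking" concrete, here is a minimal NumPy sketch of two layers, assuming the same gate equations as lstm_cell. The helpers init_params and lstm_step are hypothetical names introduced for illustration, not part of the post's code. The point it tries to show is that layer 2 takes layer 1's output as its input, so its weight matrices have memory_size+memory_size rows instead of num_classes+memory_size.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(input_size, memory_size, rng):
    # Hypothetical helper: a (weights, bias) pair for each of the four gates of one layer.
    shape = (input_size + memory_size, memory_size)
    return {name: (rng.normal(scale=0.1, size=shape), np.zeros(memory_size))
            for name in ("i", "c", "f", "o")}

def lstm_step(x, h_prev, c_prev, params):
    # Same equations as lstm_cell, written with NumPy.
    xh = np.concatenate([x, h_prev], axis=1)
    it = sigmoid(xh @ params["i"][0] + params["i"][1])  # input gate
    ic = np.tanh(xh @ params["c"][0] + params["c"][1])  # candidate memory
    ft = sigmoid(xh @ params["f"][0] + params["f"][1])  # forget gate
    ot = sigmoid(xh @ params["o"][0] + params["o"][1])  # output gate
    c = c_prev * ft + ic * it
    return ot * np.tanh(c), c

batch_size, num_classes, memory_size = 3, 2, 8
rng = np.random.default_rng(0)
layer1 = init_params(num_classes, memory_size, rng)  # sees the one-hot input
layer2 = init_params(memory_size, memory_size, rng)  # sees layer 1's output

x = np.eye(num_classes)[[0, 1, 1]]                   # one-hot batch, [3, 2]
h1, c1 = np.zeros((batch_size, memory_size)), np.zeros((batch_size, memory_size))
h2, c2 = np.zeros((batch_size, memory_size)), np.zeros((batch_size, memory_size))

h1, c1 = lstm_step(x, h1, c1, layer1)
h2, c2 = lstm_step(h1, h2, c2, layer2)               # layer 2 consumes h1
print(h2.shape)
```

In the TensorFlow version the same idea would mean wrapping each layer's call in a distinct outer variable scope and sizing the second layer's weights for a memory_size-wide input.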

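Inside each gate, the shapes change roughly as follows:

shape:

=> [batch_size, num_classes]: the shape of the input at one time step

=> [batch_size, num_classes+memory_size]: after concatenating the input with the previous time step's output

=> [batch_size, memory_size]: after the matrix multiplication with w

=> [batch_size, memory_size]: after adding the bias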
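The shape chain above can be checked with a few lines of NumPy. This is only a sketch: it uses the hyperparameter values that appear later in the post (batch_size=3, num_classes=2, memory_size=8) and random weights in place of the TensorFlow variables.

```python
import numpy as np

# Same sizes as the hyperparameters used later in this post.
batch_size, num_classes, memory_size = 3, 2, 8

rng = np.random.default_rng(0)
rnn_input = np.eye(num_classes)[rng.integers(0, num_classes, size=batch_size)]  # one-hot input
pre_output = np.zeros((batch_size, memory_size))               # previous output
w = rng.normal(size=(num_classes + memory_size, memory_size))  # stand-in for wi, wc, wf or wo
b = np.zeros(memory_size)                                      # stand-in for the bias

concat = np.concatenate([rnn_input, pre_output], axis=1)  # input joined with previous output
product = concat @ w                                      # matrix multiplication with w
pre_activation = product + b                              # b is broadcast over the batch

shapes = [rnn_input.shape, concat.shape, product.shape, pre_activation.shape]
print(shapes)  # [(3, 2), (3, 10), (3, 8), (3, 8)]
```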

2. Running the cell on the input at every time step

memory = init_memory
lstm_outputs = []
memory_outputs = []
for rnn_input in rnn_inputs:
    pre_output, memory = lstm_cell(rnn_input, pre_output, memory)
    lstm_outputs.append(pre_output)
    memory_outputs.append(memory)
final_state = memory_outputs[-1]  # keep this batch's last memory as the starting memory for the next batch
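The loop above threads the output and the memory through every time step, and final_state lets the next batch continue from where this one stopped. The NumPy sketch below illustrates that idea under stated assumptions: init_params, lstm_step and unroll are hypothetical helpers that mirror the gate equations, not the post's TensorFlow graph. It also compares carrying both the output and the memory with carrying the memory alone. The post's code does the latter, since only init_memory is fed between batches while pre_output starts from zeros each time.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(input_size, memory_size, rng):
    # Hypothetical helper: a (weights, bias) pair for each of the four gates.
    shape = (input_size + memory_size, memory_size)
    return {name: (rng.normal(scale=0.5, size=shape), np.zeros(memory_size))
            for name in ("i", "c", "f", "o")}

def lstm_step(x, h_prev, c_prev, params):
    # Same equations as lstm_cell, written with NumPy.
    xh = np.concatenate([x, h_prev], axis=1)
    it = sigmoid(xh @ params["i"][0] + params["i"][1])
    ic = np.tanh(xh @ params["c"][0] + params["c"][1])
    ft = sigmoid(xh @ params["f"][0] + params["f"][1])
    ot = sigmoid(xh @ params["o"][0] + params["o"][1])
    c = c_prev * ft + ic * it
    return ot * np.tanh(c), c

def unroll(inputs, h, c, params):
    # Mirrors the for-loop above: thread (h, c) through every time step.
    outputs = []
    for x in inputs:
        h, c = lstm_step(x, h, c, params)
        outputs.append(h)
    return outputs, h, c

batch_size, num_classes, memory_size, num_steps = 3, 2, 8, 10
rng = np.random.default_rng(0)
params = init_params(num_classes, memory_size, rng)
zeros = np.zeros((batch_size, memory_size))
# Two consecutive batches' worth of one-hot inputs.
inputs = [np.eye(num_classes)[rng.integers(0, num_classes, size=batch_size)]
          for _ in range(2 * num_steps)]

# Reference: process all 2 * num_steps inputs in one pass.
full_out, _, _ = unroll(inputs, zeros, zeros, params)
# Split into two batches and carry state from the first into the second.
_, h_mid, c_mid = unroll(inputs[:num_steps], zeros, zeros, params)
both_out, _, _ = unroll(inputs[num_steps:], h_mid, c_mid, params)
only_c_out, _, _ = unroll(inputs[num_steps:], zeros, c_mid, params)

carry_both_matches = np.allclose(full_out[num_steps:], both_out)
carry_c_only_matches = np.allclose(full_out[num_steps:], only_c_out)
print(carry_both_matches, carry_c_only_matches)
```

Carrying both values reproduces the single long pass, while carrying only the memory gives slightly different outputs at the start of the second batch. Whether that difference matters for training is not something this sketch measures.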

3. The complete code

# Written for TensorFlow 1.x (uses tf.placeholder, tf.Session and tf.variable_scope)
import tensorflow as tf
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt

def get_data(size = 40000):
    # X is a random binary sequence; Y depends on X three and eight steps earlier
    X = np.array(np.random.choice(2, size=(size)))
    Y = []
    for i in range(size):
        threshold = 0.5
        if X[i-3] == 1:
            threshold += 0.5
        if X[i-8] == 1:
            threshold -= 0.25
        if np.random.rand() > threshold:
            Y.append(0)
        else:
            Y.append(1)
    return X,np.array(Y)

def get_batch(x,y,batch_size,seq_length):
    # Trim the data to a whole number of batches, lay it out as batch_size rows,
    # then yield consecutive windows of seq_length columns
    lens = len(x)
    batches = lens//(batch_size*seq_length)
    x = x[:batches*batch_size*seq_length]
    y = y[:batches*batch_size*seq_length]
    x = x.reshape(batch_size, -1)
    print(x.shape)
    y = y.reshape(batch_size,-1)
    for i in range(0,(batches-1)*seq_length,seq_length):
        raw_x = x[:,i:i+seq_length]
        raw_y = y[:,i:i+seq_length]
        yield (raw_x, raw_y)

def get_epochs(n, num_steps):
    # Yield one batch generator per epoch, over the same data each time
    x,y=get_data()
    for i in range(n):
        yield get_batch(x,y, batch_size, num_steps)

batch_size = 3
num_classes = 2
state_size = 4
memory_size = 8
num_steps = 10
learning_rate = 0.1

"""

tf +
>>> import tensorflow as tf
>>> import numpy as np
>>> a = tf.constant(np.array([[1,2,3],[4,5,6]]))
>>> b = tf.constant(np.array([0,3,6]))
>>> c = a+b
>>> sess=tf.Session()
>>> sess.run(c)
array([[ 1,  5,  9],
       [ 4,  8, 12]])

tf.unstack
>>> import numpy as np
>>> import tensorflow as tf
>>> sess = tf.Session()
>>> b = tf.constant(np.array([[0,1,2],[3,4,5]]))
>>> c = tf.one_hot(b,6)
>>> sess.run(c)
array([[[1., 0., 0., 0., 0., 0.],
        [0., 1., 0., 0., 0., 0.],
        [0., 0., 1., 0., 0., 0.]],

       [[0., 0., 0., 1., 0., 0.],
        [0., 0., 0., 0., 1., 0.],
        [0., 0., 0., 0., 0., 1.]]], dtype=float32)
>>> d = tf.unstack(c,axis=1)
>>> sess.run(d)
[array([[1., 0., 0., 0., 0., 0.],
       [0., 0., 0., 1., 0., 0.]], dtype=float32), array([[0., 1., 0., 0., 0., 0.],
       [0., 0., 0., 0., 1., 0.]], dtype=float32), array([[0., 0., 1., 0., 0., 0.],
       [0., 0., 0., 0., 0., 1.]], dtype=float32)]
>>> e = tf.unstack(c,axis=0)
>>> sess.run(e)
[array([[1., 0., 0., 0., 0., 0.],
       [0., 1., 0., 0., 0., 0.],
       [0., 0., 1., 0., 0., 0.]], dtype=float32), array([[0., 0., 0., 1., 0., 0.],
       [0., 0., 0., 0., 1., 0.],
       [0., 0., 0., 0., 0., 1.]], dtype=float32)]
>>> f = tf.unstack(c,axis=2)
>>> sess.run(f)
[array([[1., 0., 0.],
       [0., 0., 0.]], dtype=float32), array([[0., 1., 0.],
       [0., 0., 0.]], dtype=float32), array([[0., 0., 1.],
       [0., 0., 0.]], dtype=float32), array([[0., 0., 0.],
       [1., 0., 0.]], dtype=float32), array([[0., 0., 0.],
       [0., 1., 0.]], dtype=float32), array([[0., 0., 0.],
       [0., 0., 1.]], dtype=float32)]

"""

x = tf.placeholder(tf.int32, [batch_size, num_steps], name='inputs')  # [batch_size, num_steps]
y = tf.placeholder(tf.int32, [batch_size, num_steps], name='targets')
init_state = tf.zeros([batch_size, state_size])  # not used by the LSTM code below
init_memory = tf.zeros([batch_size,memory_size])
pre_output = tf.zeros([batch_size, memory_size])
x_onehot = tf.one_hot(x, num_classes)  # [batch_size, num_steps, num_classes]
rnn_inputs = tf.unstack(x_onehot, axis=1)  # split along the time axis: a list of num_steps tensors, each [batch_size, num_classes]

def lstm_cell(rnn_input, pre_output, memory):
    with tf.variable_scope('input_gate', reuse=tf.AUTO_REUSE):
        wi = tf.get_variable('wi', shape=[num_classes+memory_size, memory_size])
        bi = tf.get_variable('bi', shape=[memory_size], initializer=tf.constant_initializer(0.0))
        wc = tf.get_variable('wc', shape=[num_classes+memory_size, memory_size])
        ci = tf.get_variable('ci', shape=[memory_size], initializer=tf.constant_initializer(0.0))
        it = tf.sigmoid(tf.matmul(tf.concat([rnn_input, pre_output], 1), wi) + bi)
        ic = tf.tanh(tf.matmul(tf.concat([rnn_input, pre_output], 1), wc) + ci)
    with tf.variable_scope('forget_gate', reuse=tf.AUTO_REUSE):
        wf = tf.get_variable('wf', shape=[num_classes+memory_size, memory_size])
        bf = tf.get_variable('bf', shape=[memory_size], initializer=tf.constant_initializer(0.0))
        ft = tf.sigmoid(tf.matmul(tf.concat([rnn_input, pre_output], 1), wf) + bf)
    with tf.variable_scope('memory', reuse=tf.AUTO_REUSE):
        c = memory*ft + ic*it
    with tf.variable_scope('output_gate', reuse=tf.AUTO_REUSE):
        # ot is multiplied element-wise with tanh(c), so its shape must match the memory's
        wo = tf.get_variable('wo', shape=[num_classes+memory_size, memory_size])
        bo = tf.get_variable('bo', shape=[memory_size], initializer=tf.constant_initializer(0.0))
        ot = tf.sigmoid(tf.matmul(tf.concat([rnn_input, pre_output], 1), wo) + bo)
        ht = ot*tf.tanh(c)
    return ht, c

memory = init_memory
lstm_outputs = []
memory_outputs = []
for rnn_input in rnn_inputs:
    pre_output, memory = lstm_cell(rnn_input, pre_output, memory)
    lstm_outputs.append(pre_output)
    memory_outputs.append(memory)
final_state = memory_outputs[-1]

# softmax
with tf.variable_scope('softmax'):
    W = tf.get_variable('W', [memory_size, num_classes])
    b = tf.get_variable('b', [num_classes], initializer=tf.constant_initializer(0.0))

# Note: each of the num_steps outputs is projected separately, then softmax turns it into a prediction
logits = [tf.matmul(lstm_output, W) + b for lstm_output in lstm_outputs]
predictions = [tf.nn.softmax(logit) for logit in logits]

# Turn our y placeholder into a list of labels
y_as_list = tf.unstack(y, num=num_steps, axis=1)

# losses and train_step
losses = [tf.nn.sparse_softmax_cross_entropy_with_logits(labels=label, logits=logit)
          for logit, label in zip(logits, y_as_list)]
total_loss = tf.reduce_mean(losses)
train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(total_loss)

def train_network(num_epochs, num_steps, state_size=4, verbose=True):
    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        training_losses = []
        # get_epochs yields one batch generator per epoch; with num_epochs == 5 the outer loop runs five times
        for idx, epoch in enumerate(get_epochs(num_epochs, num_steps)):
            training_loss = 0
            # Holds the final memory of each run so that it can be passed to the next run
            training_memory = np.zeros((batch_size, memory_size))
            if verbose:
                print("\nEPOCH", idx)
            # This is where the batches are actually fetched
            for step, (X, Y) in enumerate(epoch):
                # training_memory passes each batch's last memory on to the next run
                tr_losses, training_loss_, training_memory, _ = \
                    sess.run([losses,
                              total_loss,
                              final_state,
                              train_step],
                             feed_dict={x:X, y:Y, init_memory:training_memory})
                training_loss += training_loss_
                if step % 100 == 0 and step > 0:
                    if verbose:
                        print("Average loss at step", step,
                              "for last 100 steps:", training_loss/100)
                    training_losses.append(training_loss/100)
                    training_loss = 0
    return training_losses

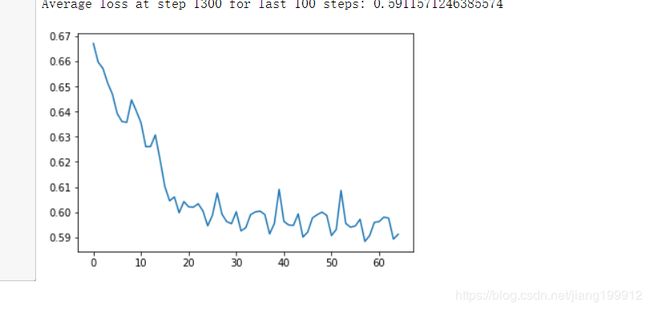

training_losses = train_network(5,num_steps)
plt.plot(training_losses)
plt.show()
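When reading the plotted loss, it may help to know what cross-entropy is reachable on this synthetic data. The sketch below derives P(Y=1) from the rule in get_data (threshold 0.5, plus 0.5 if X[i-3] is 1, minus 0.25 if X[i-8] is 1) and computes the expected loss in nats for three hypothetical cases: a model that ignores X, one that has learned only the 3-step dependency, and one that has learned both. The function binary_entropy and the three variable names are my own labels, and the numbers assume a perfectly calibrated model.

```python
import numpy as np

def binary_entropy(p):
    # Entropy in nats of a Bernoulli(p) variable, with 0 * log(0) treated as 0.
    p = np.asarray(p, dtype=float)
    q = 1.0 - p
    with np.errstate(divide="ignore", invalid="ignore"):
        terms = (np.where(p > 0, p * np.log(p), 0.0)
                 + np.where(q > 0, q * np.log(q), 0.0))
    return -terms

# P(Y=1) for each (X[i-3], X[i-8]) pair; the four pairs are equally likely.
p_y1 = {(a, b): 0.5 + 0.5 * a - 0.25 * b for a in (0, 1) for b in (0, 1)}
probs = np.array(list(p_y1.values()))

# Model ignores X entirely: it can only predict the marginal P(Y=1).
no_dependency = float(binary_entropy(probs.mean()))
# Model knows X[i-3] only: average over the unknown X[i-8].
only_t3 = float(np.mean([binary_entropy((p_y1[(a, 0)] + p_y1[(a, 1)]) / 2)
                         for a in (0, 1)]))
# Model knows both X[i-3] and X[i-8].
both = float(binary_entropy(probs).mean())

print(round(no_dependency, 3), round(only_t3, 3), round(both, 3))  # 0.662 0.519 0.454
```

A loss curve that settles near 0.52 would suggest that only the 3-step dependency was picked up, and one approaching 0.45 would suggest that both were.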