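CUDA Study Notes

Contents

- Part 1: Introduction to CUDA C
- 1.1 A first program
- 1) Calling a kernel
- 2) Passing parameters
- 3) Querying devices
- 4) Using device properties
- Part 2: Parallel Programming in CUDA C
- 1. CUDA parallel programming
- 1) Vector sums
- 2) Another example
- Part 3: Thread Cooperation
- 1. Splitting parallel blocks
- Case 1: Vector sums
- Case 2: A ripple effect using threads on the GPU
- 2. Shared memory and synchronization
- 1) Dot product
- 2) A shared-memory bitmap
- Part 4: Constant Memory and Events
- 1. Constant memory
- Case 1: Ray tracing
- 2. Measuring performance with events
- Part 5: Texture Memory
- 1. Using one-dimensional texture memory
- 2. Using two-dimensional texture memory
- Part 6: Graphics Interoperability
- Part 7: Atomics
- Case: Computing a histogram
- Part 8: Streams
- 1. Page-locked host memory
- 2. CUDA streams
- Part 9: Matrix Computation Libraries
- 1. cuSparse
- 2. cuBLAS
- 3. cuRAND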

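Part 1: Introduction to CUDA C

1.1 A first program

1) Calling a kernel

A function that executes on the GPU device is usually called a kernel.

Host: the CPU and system memory.

Device: the GPU and its memory.

- __global__ indicates that a function runs on the device rather than on the host.

- kernel<<<1,1>>>(); //calls device code. The angle brackets pass parameters to the runtime system, not to the kernel itself; they tell the runtime how to launch the device code.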
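The two points above can be put together in a minimal sketch. The helper name launch_empty_kernel is hypothetical (it is not part of the original notes); it launches an empty kernel with one block of one thread and reports whether the launch succeeded.

```cuda
#include <cuda_runtime.h>

// __global__ marks this function as device code that the host can launch.
__global__ void kernel(void) {}

// Hypothetical helper for illustration: launch one block of one thread
// and return the CUDA status of the launch and of its execution.
cudaError_t launch_empty_kernel(void) {
    kernel<<<1, 1>>>();                      // <<<blocks, threads per block>>>
    cudaError_t err = cudaGetLastError();    // errors detected at launch time
    if (err != cudaSuccess) return err;
    return cudaDeviceSynchronize();          // wait until the kernel finishes
}
```

Calling launch_empty_kernel() from main and compiling the file with nvcc should return cudaSuccess on a machine with a working CUDA device.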

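2) Passing parameters

The example below passes arguments to a kernel and shows how to allocate and free memory on the device.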

#include "../common/book.h"

__global__ void add( int a, int b, int *c ) {

*c = a + b;

}

int main( void ) {

int c;

int *dev_c;

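/* cudaMalloc() asks the CUDA runtime to allocate memory on the device.

First argument: address of the pointer that receives the newly allocated memory.

Second argument: size of the allocation in bytes.

*/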

cudaMalloc( (void**)&dev_c, sizeof(int) );

add<<<1,1>>>( 2, 7, dev_c );

HANDLE_ERROR( cudaMemcpy( &c, dev_c, sizeof(int),

cudaMemcpyDeviceToHost ) );

printf( "2 + 7 = %d\n", c );

HANDLE_ERROR( cudaFree( dev_c ) ); //free the memory on the device

return 0;

}

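CUDA C blurs the distinction between host code and device code, but host code must not dereference a pointer returned by cudaMalloc, either to read or to write memory. In other words, the host cannot touch that memory directly: host pointers may only access memory from host code, and device pointers may only access memory from device code.

- A pointer allocated with cudaMalloc can be passed to functions that execute on the device.

- Device code can read and write memory through that pointer.

- The pointer can be passed to functions that execute on the host.

- Host code cannot read or write memory through that pointer.

- The memory must be released with cudaFree.

There are two ways to access device memory:

- Use the device pointer inside device code, for example *c = a + b;

- Call cudaMemcpy(). cudaMemcpyDeviceToHost tells the runtime that the source pointer is a device pointer and the destination pointer is a host pointer; cudaMemcpyHostToDevice and cudaMemcpyDeviceToDevice cover the other directions. When both pointers are on the host, a plain memcpy is enough.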
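As a small sketch of the three cudaMemcpy directions listed above, the following helper sends one integer from the host to the device, copies it between two device buffers, and brings it back. The name round_trip and its signature are assumptions made for illustration only.

```cuda
#include <cuda_runtime.h>

// Hypothetical helper: copy `value` host -> device -> device -> host.
// Returns true on success and stores the value that came back in *out.
bool round_trip(int value, int *out) {
    int *dev_src = nullptr;
    int *dev_dst = nullptr;
    bool ok = cudaMalloc((void**)&dev_src, sizeof(int)) == cudaSuccess
           && cudaMalloc((void**)&dev_dst, sizeof(int)) == cudaSuccess
           // host pointer -> device pointer
           && cudaMemcpy(dev_src, &value, sizeof(int),
                         cudaMemcpyHostToDevice) == cudaSuccess
           // device pointer -> device pointer
           && cudaMemcpy(dev_dst, dev_src, sizeof(int),
                         cudaMemcpyDeviceToDevice) == cudaSuccess
           // device pointer -> host pointer
           && cudaMemcpy(out, dev_dst, sizeof(int),
                         cudaMemcpyDeviceToHost) == cudaSuccess;
    cudaFree(dev_src);   // cudaFree on a null pointer is a no-op
    cudaFree(dev_dst);
    return ok;
}
```

Note that the host never dereferences dev_src or dev_dst; it only hands them to runtime functions, which is exactly the rule stated in the list above.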

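3) Querying devices

A program often needs to know how much memory a device has and which features it supports. On a machine with several CUDA-capable devices, it also needs to know which processor it is using.

cudaGetDeviceCount returns the number of CUDA devices. We can then iterate over the devices and query each one; the CUDA runtime returns the information in a structure of type cudaDeviceProp, which contains the fields printed below.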

#include "../common/book.h"

int main( void ) {

cudaDeviceProp prop;

int count;

HANDLE_ERROR( cudaGetDeviceCount( &count ) );

for (int i=0; i< count; i++) {

HANDLE_ERROR( cudaGetDeviceProperties( &prop, i ) );

printf( " --- General Information for device %d ---\n", i );

printf( "Name: %s\n", prop.name );

printf( "Compute capability: %d.%d\n", prop.major, prop.minor );

printf( "Clock rate: %d\n", prop.clockRate );

printf( "Device copy overlap: " );

if (prop.deviceOverlap)

printf( "Enabled\n" );

else

printf( "Disabled\n");

printf( "Kernel execution timeout : " );

if (prop.kernelExecTimeoutEnabled)

printf( "Enabled\n" );

else

printf( "Disabled\n" );

printf( " --- Memory Information for device %d ---\n", i );

printf( "Total global mem: %ld\n", prop.totalGlobalMem );

printf( "Total constant Mem: %ld\n", prop.totalConstMem );

printf( "Max mem pitch: %ld\n", prop.memPitch );

printf( "Texture Alignment: %ld\n", prop.textureAlignment );

printf( " --- MP Information for device %d ---\n", i );

printf( "Multiprocessor count: %d\n",

prop.multiProcessorCount );

printf( "Shared mem per mp: %ld\n", prop.sharedMemPerBlock );

printf( "Registers per mp: %d\n", prop.regsPerBlock );

printf( "Threads in warp: %d\n", prop.warpSize );

printf( "Max threads per block: %d\n",

prop.maxThreadsPerBlock );

printf( "Max thread dimensions: (%d, %d, %d)\n",

prop.maxThreadsDim[0], prop.maxThreadsDim[1],

prop.maxThreadsDim[2] );

printf( "Max grid dimensions: (%d, %d, %d)\n",

prop.maxGridSize[0], prop.maxGridSize[1],

prop.maxGridSize[2] );

printf( "\n" );

}

}

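4) Using device properties

To make the software run as fast as possible: choose the GPU with the most multiprocessors.

When the kernel interacts heavily with the CPU: run the code on an integrated GPU, which can share system memory with the CPU.

When double-precision floating point is needed: compute capability 1.3 or higher.

We write the requirements into a cudaDeviceProp structure and pass it to cudaChooseDevice, which returns the ID of the device that best matches them. Passing that ID to cudaSetDevice makes all subsequent device operations run on that device.

The example below selects and sets a device on a multi-GPU system.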

#include "../common/book.h"

int main( void ) {

cudaDeviceProp prop;

int dev;

HANDLE_ERROR( cudaGetDevice( &dev ) );

printf( "ID of current CUDA device: %d\n", dev ); //0

memset( &prop, 0, sizeof( cudaDeviceProp ) );

prop.major = 1;

prop.minor = 3;

HANDLE_ERROR( cudaChooseDevice( &dev, &prop ) );

printf( "ID of CUDA device closest to revision 1.3: %d\n", dev );

HANDLE_ERROR( cudaSetDevice( dev ) );

}

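Part 2: Parallel Programming in CUDA C

1. CUDA parallel programming

So far we have only launched a kernel that runs serially on the GPU (one block with one thread). This part shows how to launch a kernel that executes in parallel.

1) Vector sums

Given two arrays, we want to add their corresponding elements pairwise.

- Vector sum on the CPU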

#include "../common/book.h"

#define N 10

void add( int *a, int *b, int *c ) {

int tid = 0; // this is CPU zero, so we start at zero

while (tid < N) {

c[tid] = a[tid] + b[tid];

tid += 1; // we have one CPU, so we increment by one

}

}

int main( void ) {

int a[N], b[N], c[N];

// fill the arrays 'a' and 'b' on the CPU

for (int i=0; i<N; i++) {

a[i] = -i;

b[i] = i * i;

}

add( a, b, c );

// display the results

for (int i=0; i<N; i++) {

printf( "%d + %d = %d\n", a[i], b[i], c[i] );

}

return 0;

}

- Vector sum on the GPU

add is rewritten as a kernel that runs on the device.

#include "../common/book.h"

#define N 10

__global__ void add( int *a, int *b, int *c ) {

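//blockIdx is a built-in variable that holds the index of the block currently executing the device code

//the N parallel blocks together form a grid; the launch below asks the runtime for a one-dimensional grid of N blocks

//each block has a different blockIdx.x: when the N blocks run in parallel, the runtime substitutes that block's index for blockIdx.x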

int tid = blockIdx.x; // this thread handles the data at its thread id

if (tid < N)

c[tid] = a[tid] + b[tid];

}

int main( void ) {

int a[N], b[N], c[N];

int *dev_a, *dev_b, *dev_c;

// allocate the memory on the GPU

HANDLE_ERROR( cudaMalloc( (void**)&dev_a, N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_b, N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_c, N * sizeof(int) ) );

// fill the arrays 'a' and 'b' on the CPU

for (int i=0; i<N; i++) {

a[i] = -i;

b[i] = i * i;

}

// copy the arrays 'a' and 'b' to the GPU

HANDLE_ERROR( cudaMemcpy( dev_a, a, N * sizeof(int),

cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMemcpy( dev_b, b, N * sizeof(int),

cudaMemcpyHostToDevice ) );

add<<<N,1>>>( dev_a, dev_b, dev_c );

// copy the array 'c' back from the GPU to the CPU

HANDLE_ERROR( cudaMemcpy( c, dev_c, N * sizeof(int),

cudaMemcpyDeviceToHost ) );

// display the results

for (int i=0; i<N; i++) {

printf( "%d + %d = %d\n", a[i], b[i], c[i] );

}

// free the memory allocated on the GPU

HANDLE_ERROR( cudaFree( dev_a ) );

HANDLE_ERROR( cudaFree( dev_b ) );

HANDLE_ERROR( cudaFree( dev_c ) );

return 0;

}

add<<<N,1>>>( dev_a, dev_b, dev_c );

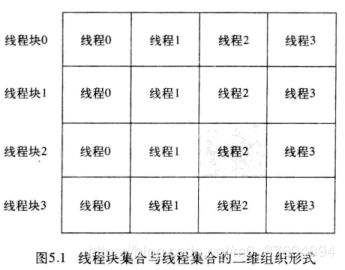

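First parameter, N: the size of the grid, i.e. the number of parallel thread blocks the device uses when executing the kernel. The runtime creates N copies of the kernel and runs them in parallel; each of these parallel execution environments is called a thread block and is identified by blockIdx.x.

Second parameter: the size of a block, i.e. the number of threads per block. It is 1 in all examples of this part.

dim3 grid(DIM,DIM);

kernel<<<grid,1>>>( dev_bitmap );

gridDim holds the size of the grid in each dimension, here (DIM,DIM).

On the hardware the book describes, no dimension of the grid may exceed 65,535 blocks; this is a hardware limit. Devices of compute capability 3.0 and later accept up to 2^31-1 blocks in the x dimension, while y and z remain limited to 65,535.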

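Common CUDA qualifiers

The execution configuration operator <<< >>> passes the launch parameters of a kernel. It takes four parameters: the first is the grid size, the second is the block size, the third is the amount of dynamically allocated shared memory in bytes (default 0), and the last is the stream in which the kernel executes (default 0).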
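To make the four launch parameters concrete, here is a minimal sketch that uses all of them. The kernel reverse_in_block and the helper reverse_on_gpu are hypothetical names introduced for this illustration, and the sketch assumes 1 <= n <= 1024 so that the data fits in a single block.

```cuda
#include <cuda_runtime.h>

// Reverses data[0..n) inside one block, staging the values in dynamic
// shared memory whose size comes from the third launch parameter.
__global__ void reverse_in_block(int *data, int n) {
    extern __shared__ int buf[];
    int t = threadIdx.x;
    if (t < n) buf[t] = data[t];
    __syncthreads();                 // all values staged before anyone reads
    if (t < n) data[t] = buf[n - 1 - t];
}

// Hypothetical helper: reverse n ints in place (assumes 1 <= n <= 1024).
bool reverse_on_gpu(int *host, int n) {
    size_t bytes = (size_t)n * sizeof(int);
    int *dev = nullptr;
    cudaStream_t stream;
    if (cudaStreamCreate(&stream) != cudaSuccess) return false;
    bool ok = cudaMalloc((void**)&dev, bytes) == cudaSuccess;
    if (ok) {
        cudaMemcpyAsync(dev, host, bytes, cudaMemcpyHostToDevice, stream);
        // grid size, block size, dynamic shared memory bytes, stream
        reverse_in_block<<<1, n, bytes, stream>>>(dev, n);
        ok = cudaGetLastError() == cudaSuccess;
        cudaMemcpyAsync(host, dev, bytes, cudaMemcpyDeviceToHost, stream);
        ok = (cudaStreamSynchronize(stream) == cudaSuccess) && ok;
    }
    cudaFree(dev);
    cudaStreamDestroy(stream);
    return ok;
}
```

Leaving out the last two parameters, as the other examples in these notes do, is equivalent to passing 0 bytes of dynamic shared memory and the default stream.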

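1 __device__: the function is called from the GPU and executes on the GPU, so it can only be called from functions qualified with __global__ or __device__. Functions with this qualifier are subject to restrictions; on the G80/GT200 architectures, for example, they cannot use recursion or function pointers.

2 __global__: the function is called from the CPU and executes on the GPU; this is the kernel. In the model described here a kernel can only be launched by the host. A kernel is not a complete program but a single data-parallel step whose instruction stream is executed by many threads.

3 __host__: the function is called from the CPU and executes on the CPU. This is the default, i.e. an ordinary C function. CUDA also allows __host__ and __device__ to be combined, in which case the function is compiled for both the host and the device.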

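Five built-in variables give device code the grid and block dimensions and the thread indices at run time:

1 gridDim: a structure with three members x, y, z that holds the size of the grid in each direction. It corresponds to the first parameter of the execution configuration. The hardware these notes were written for only supports two-dimensional grids; devices of compute capability 2.0 and later also support the third dimension.

2 blockDim: a structure with three members x, y, z that holds the size of a block in each direction. It corresponds to the second parameter of the execution configuration.

3 blockIdx: a structure with three members x, y, z that holds the index of the current thread's block within the grid in each direction.

4 threadIdx: a structure with three members x, y, z that holds the index of the current thread within its block in each direction.

5 warpSize: the number of threads in a warp, which is 32 on all current NVIDIA GPUs.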
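The sketch below shows how these built-in variables combine into a unique linear offset per thread, using the same formula as the bitmap kernels later in these notes. The names write_offsets and offsets_cover_grid are hypothetical, and the 2x2 grid of 4x4 blocks is an arbitrary choice for illustration.

```cuda
#include <cuda_runtime.h>

// Each thread derives its 2D position and linear offset from the built-ins
// and stores the offset at that position.
__global__ void write_offsets(int *out) {
    int x = threadIdx.x + blockIdx.x * blockDim.x;
    int y = threadIdx.y + blockIdx.y * blockDim.y;
    int offset = x + y * blockDim.x * gridDim.x;   // row width = blockDim.x * gridDim.x
    out[offset] = offset;
}

// Hypothetical helper: launch a 2x2 grid of 4x4 blocks (8x8 threads) and
// check that every offset 0..63 was written exactly where expected.
bool offsets_cover_grid(void) {
    const int W = 8, H = 8;
    int host[W * H];
    int *dev = nullptr;
    if (cudaMalloc((void**)&dev, sizeof(host)) != cudaSuccess) return false;
    cudaMemset(dev, 0xFF, sizeof(host));           // every int starts as -1
    dim3 grid(2, 2);
    dim3 block(4, 4);
    write_offsets<<<grid, block>>>(dev);
    cudaError_t err = cudaMemcpy(host, dev, sizeof(host),
                                 cudaMemcpyDeviceToHost);
    cudaFree(dev);
    if (err != cudaSuccess) return false;
    for (int i = 0; i < W * H; i++)
        if (host[i] != i) return false;
    return true;
}
```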

2) Another example (drawing a Julia set)

#include "../common/book.h"

#include "../common/cpu_bitmap.h"

#define DIM 1000

struct cuComplex {

float r;

float i;

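//the constructor is also marked __device__ because it is called from device code; recent nvcc versions reject the call otherwise

__device__ cuComplex( float a, float b ) : r(a), i(b) {}

//__device__ means the code runs on the GPU; such functions can only be called from __device__ or __global__ functions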

__device__ float magnitude2( void ) {

return r * r + i * i;

}

__device__ cuComplex operator*(const cuComplex& a) {

return cuComplex(r*a.r - i*a.i, i*a.r + r*a.i);

}

__device__ cuComplex operator+(const cuComplex& a) {

return cuComplex(r+a.r, i+a.i);

}

};

//__device__: the function runs on the GPU and is called from the GPU

__device__ int julia( int x, int y ) {

const float scale = 1.5;

float jx = scale * (float)(DIM/2 - x)/(DIM/2);

float jy = scale * (float)(DIM/2 - y)/(DIM/2);

cuComplex c(-0.8, 0.156);

cuComplex a(jx, jy);

int i = 0;

for (i=0; i<200; i++) {

a = a * a + c;

if (a.magnitude2() > 1000)

return 0;

}

return 1;

}

__global__ void kernel( unsigned char *ptr ) {

// map from blockIdx to pixel position

int x = blockIdx.x; //differs from block to block: the runtime fills in each block's index

int y = blockIdx.y;

int offset = x + y * gridDim.x; //gridDim.x = gridDim.y = DIM for this launch

// now calculate the value at that position

int juliaValue = julia( x, y );

ptr[offset*4 + 0] = 255 * juliaValue;

ptr[offset*4 + 1] = 0;

ptr[offset*4 + 2] = 0;

ptr[offset*4 + 3] = 255;

}

// globals needed by the update routine

struct DataBlock {

unsigned char *dev_bitmap;

};

int main( void ) {

DataBlock data;

CPUBitmap bitmap( DIM, DIM, &data );

unsigned char *dev_bitmap;

HANDLE_ERROR( cudaMalloc( (void**)&dev_bitmap, bitmap.image_size() ) );

data.dev_bitmap = dev_bitmap;

//declare a two-dimensional grid; dim3 has three components, and the unspecified third one defaults to 1

dim3 grid(DIM,DIM);

kernel<<<grid,1>>>( dev_bitmap );

HANDLE_ERROR( cudaMemcpy( bitmap.get_ptr(), dev_bitmap,

bitmap.image_size(),

cudaMemcpyDeviceToHost ) );

HANDLE_ERROR( cudaFree( dev_bitmap ) );

bitmap.display_and_exit();

}

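Part 3: Thread Cooperation

This part looks at how the parallel parts of a program cooperate to solve a problem, i.e. how the parallel copies communicate and work together.

1. Splitting parallel blocks

Case 1: Vector sums

1) Summing vectors with threads

The first parameter in the angle brackets tells the CUDA runtime to launch N parallel copies of the kernel, each of which is called a thread block. The CUDA runtime splits each block into threads;

the second parameter is the number of threads the runtime creates per block. A launch such as add<<<N,1>>> therefore starts one thread in each of N blocks. The same N threads could equally be launched as N/2 blocks of 2 threads each, add<<<N/2,2>>>.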

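//blockDim holds the number of threads along each dimension of a block; the blocks here are one-dimensional, so only blockDim.x is used

int tid = threadIdx.x + blockIdx.x*blockDim.x;

//fix the number of threads per block at 128 and round the number of blocks up

add<<<(N+127)/128, 128>>>(dev_a,dev_b,dev_c);

When N is not a multiple of 128 this launches more threads than there are elements, so the kernel guards the access:

if(tid<N) //before touching the arrays, check that the offset lies in [0, N) so that no thread reads or writes out of bounds

c[tid] = a[tid] + b[tid];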
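The fragments above can be assembled into a complete sketch. The names add_guarded and vector_add are hypothetical, and the length is passed as a parameter n instead of the compile-time constant N used in the notes, which is an assumption made so the helper works for any positive length.

```cuda
#include <cuda_runtime.h>

// One thread per element; surplus threads in the last block do nothing.
__global__ void add_guarded(const int *a, const int *b, int *c, int n) {
    int tid = threadIdx.x + blockIdx.x * blockDim.x;
    if (tid < n)
        c[tid] = a[tid] + b[tid];
}

// Hypothetical helper: c[i] = a[i] + b[i] for i in [0, n), with n > 0.
bool vector_add(const int *a, const int *b, int *c, int n) {
    const int threads = 128;
    const int blocks = (n + threads - 1) / threads;   // round up
    size_t bytes = (size_t)n * sizeof(int);
    int *dev_a = nullptr, *dev_b = nullptr, *dev_c = nullptr;
    bool ok = cudaMalloc((void**)&dev_a, bytes) == cudaSuccess
           && cudaMalloc((void**)&dev_b, bytes) == cudaSuccess
           && cudaMalloc((void**)&dev_c, bytes) == cudaSuccess
           && cudaMemcpy(dev_a, a, bytes, cudaMemcpyHostToDevice) == cudaSuccess
           && cudaMemcpy(dev_b, b, bytes, cudaMemcpyHostToDevice) == cudaSuccess;
    if (ok) {
        add_guarded<<<blocks, threads>>>(dev_a, dev_b, dev_c, n);
        ok = cudaMemcpy(c, dev_c, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }
    cudaFree(dev_a);   // cudaFree on a null pointer is a no-op
    cudaFree(dev_b);
    cudaFree(dev_c);
    return ok;
}
```

With n = 1000 the launch uses 8 blocks of 128 threads, so 24 threads in the last block fail the tid < n test and exit without touching memory.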

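3) Summing vectors of arbitrary length

With the launch above, the kernel launch fails once the vector is longer than 65535×128 = 8,388,480 elements, because the grid would need more than 65,535 blocks. In that case each thread processes several elements:

__global__ void add(int *a, int* b, int* c)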

{

int tid = threadIdx.x + blockIdx.x * blockDim.x;

while(tid < N)

{

c[tid] = a[tid] + b[tid];

tid += blockDim.x * gridDim.x; //the stride is the total number of threads running in the grid = threads per block × blocks per grid = 128×128 for the launch below

}

}

add<<<128,128>>>(dev_a, dev_b,dev_c);

Case 2: A ripple effect using threads on the GPU

Here the GPU's computing power is used to generate images.

#include "cuda.h"

#include "../common/book.h"

#include "../common/cpu_anim.h"

#define DIM 1024

#define PI 3.1415926535897932f

__global__ void kernel( unsigned char *ptr, int ticks ) {

// map from threadIdx/BlockIdx to pixel position

int x = threadIdx.x + blockIdx.x * blockDim.x;

int y = threadIdx.y + blockIdx.y * blockDim.y;

int offset = x + y * blockDim.x * gridDim.x;

// now calculate the value at that position

float fx = x - DIM/2;

float fy = y - DIM/2;

float d = sqrtf( fx * fx + fy * fy );

unsigned char grey = (unsigned char)(128.0f + 127.0f *

cos(d/10.0f - ticks/7.0f) /

(d/10.0f + 1.0f));

ptr[offset*4 + 0] = grey;

ptr[offset*4 + 1] = grey;

ptr[offset*4 + 2] = grey;

ptr[offset*4 + 3] = 255;

}

struct DataBlock {

unsigned char *dev_bitmap;

CPUAnimBitmap *bitmap;

};

void generate_frame( DataBlock *d, int ticks ) {

dim3 blocks(DIM/16,DIM/16);

dim3 threads(16,16);

kernel<<<blocks,threads>>>( d->dev_bitmap, ticks );

HANDLE_ERROR( cudaMemcpy( d->bitmap->get_ptr(),

d->dev_bitmap,

d->bitmap->image_size(),

cudaMemcpyDeviceToHost ) );

}

// clean up memory allocated on the GPU

void cleanup( DataBlock *d ) {

HANDLE_ERROR( cudaFree( d->dev_bitmap ) );

}

int main( void ) {

DataBlock data;

CPUAnimBitmap bitmap( DIM, DIM, &data );

data.bitmap = &bitmap;

HANDLE_ERROR( cudaMalloc( (void**)&data.dev_bitmap,

bitmap.image_size() ) );

bitmap.anim_and_exit( (void (*)(void*,int))generate_frame,

(void (*)(void*))cleanup );

}

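2. Shared memory and synchronization

Another, more important reason for splitting blocks into threads is shared memory. Adding the CUDA C keyword __shared__ to a variable declaration places the variable in shared memory. The CUDA compiler treats such variables specially: it creates one copy of the variable for every block launched on the GPU. All threads of a block share that copy but cannot see the copies belonging to other blocks, which lets the threads of one block communicate and cooperate. Shared memory is also faster to access than ordinary (global) memory.

Communication between threads requires a way to synchronize them.

1) Dot product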

#include "../common/book.h"

#define imin(a,b) ((a)<(b)?(a):(b))

const int N = 33 * 1024;

const int threadsPerBlock = 256;

const int blocksPerGrid =

imin( 32, (N+threadsPerBlock-1) / threadsPerBlock );

//each block runs its own copy of the kernel and has its own shared memory, so cache holds threadsPerBlock elements

__global__ void dot( float *a, float *b, float *c ) {

__shared__ float cache[threadsPerBlock]; //shared memory

int tid = threadIdx.x + blockIdx.x * blockDim.x;

int cacheIndex = threadIdx.x;

float temp = 0;

while (tid < N) {

temp += a[tid] * b[tid];

tid += blockDim.x * gridDim.x;

}

// set the cache values

cache[cacheIndex] = temp;

// synchronize threads in this block

__syncthreads(); //barrier: wait until every thread in this block has finished the code above

// for reductions, threadsPerBlock must be a power of 2

// because of the following code

int i = blockDim.x/2; //reduction: log2(threadsPerBlock) iterations, each carried out in parallel by the threads of the block

while (i != 0) {

if (cacheIndex < i)

cache[cacheIndex] += cache[cacheIndex + i];

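__syncthreads(); //every thread of the block must reach this call before any of them continues; if only some threads execute it, the others wait forever, so never put __syncthreads() inside a branch that only part of the block takes (a divergent branch)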

i /= 2;

}

if (cacheIndex == 0)

c[blockIdx.x] = cache[0]; //c has one element per block, hence the index blockIdx.x

}

int main( void ) {

float *a, *b, c, *partial_c;

float *dev_a, *dev_b, *dev_partial_c;

// allocate memory on the cpu side

a = (float*)malloc( N*sizeof(float) );

b = (float*)malloc( N*sizeof(float) );

partial_c = (float*)malloc( blocksPerGrid*sizeof(float) );

// allocate the memory on the GPU

HANDLE_ERROR( cudaMalloc( (void**)&dev_a,

N*sizeof(float) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_b,

N*sizeof(float) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_partial_c,

blocksPerGrid*sizeof(float) ) );

// fill in the host memory with data

for (int i=0; i<N; i++) {

a[i] = i;

b[i] = i*2;

}

// copy the arrays 'a' and 'b' to the GPU

HANDLE_ERROR( cudaMemcpy( dev_a, a, N*sizeof(float),

cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMemcpy( dev_b, b, N*sizeof(float),

cudaMemcpyHostToDevice ) );

dot<<<blocksPerGrid,threadsPerBlock>>>( dev_a, dev_b,

dev_partial_c );

// copy the array 'c' back from the GPU to the CPU

HANDLE_ERROR( cudaMemcpy( partial_c, dev_partial_c,

blocksPerGrid*sizeof(float),

cudaMemcpyDeviceToHost ) );

// finish up on the CPU side: the array of partial sums is too small to make good use of the GPU, so the final sum is done on the CPU

c = 0;

for (int i=0; i<blocksPerGrid; i++) {

c += partial_c[i];

}

#define sum_squares(x) (x*(x+1)*(2*x+1)/6)

printf( "Does GPU value %.6g = %.6g?\n", c,

2 * sum_squares( (float)(N - 1) ) );

// free memory on the gpu side

HANDLE_ERROR( cudaFree( dev_a ) );

HANDLE_ERROR( cudaFree( dev_b ) );

HANDLE_ERROR( cudaFree( dev_partial_c ) );

// free memory on the cpu side

free( a );

free( b );

free( partial_c );

}

2) A shared-memory bitmap

#include "cuda.h"

#include "../common/book.h"

#include "../common/cpu_bitmap.h"

#define DIM 1024

#define PI 3.1415926535897932f

__global__ void kernel( unsigned char *ptr ) {

// map from threadIdx/BlockIdx to pixel position

int x = threadIdx.x + blockIdx.x * blockDim.x;

int y = threadIdx.y + blockIdx.y * blockDim.y;

int offset = x + y * blockDim.x * gridDim.x;

__shared__ float shared[16][16];

// now calculate the value at that position

const float period = 128.0f;

shared[threadIdx.x][threadIdx.y] =

255 * (sinf(x*2.0f*PI/ period) + 1.0f) *

(sinf(y*2.0f*PI/ period) + 1.0f) / 4.0f;

// removing this syncthreads shows graphically what happens

// when it doesn't exist. this is an example of why we need it.

//when a thread reads shared[15-threadIdx.x][15-threadIdx.y] to write its pixel, the thread responsible for writing that element may not have finished yet, so the block must synchronize first

__syncthreads();

ptr[offset*4 + 0] = 0;

ptr[offset*4 + 1] = shared[15-threadIdx.x][15-threadIdx.y];

ptr[offset*4 + 2] = 0;

ptr[offset*4 + 3] = 255;

}

// globals needed by the update routine

struct DataBlock {

unsigned char *dev_bitmap;

};

int main( void ) {

DataBlock data;

CPUBitmap bitmap( DIM, DIM, &data );

unsigned char *dev_bitmap;

HANDLE_ERROR( cudaMalloc( (void**)&dev_bitmap,

bitmap.image_size() ) );

data.dev_bitmap = dev_bitmap;

dim3 grids(DIM/16,DIM/16);

dim3 threads(16,16);

kernel<<<grids,threads>>>( dev_bitmap );

HANDLE_ERROR( cudaMemcpy( bitmap.get_ptr(), dev_bitmap,

bitmap.image_size(),

cudaMemcpyDeviceToHost ) );

HANDLE_ERROR( cudaFree( dev_bitmap ) );

bitmap.display_and_exit();

}

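Part 4: Constant Memory and Events

This part covers more advanced features: using a special region of GPU memory (constant memory) to speed up an application, and using events to measure the performance of a CUDA application so that the effect of a change can be assessed quantitatively.

1. Constant memory

A GPU typically contains hundreds of arithmetic units, so the bottleneck is usually not arithmetic throughput but memory bandwidth: input data cannot be delivered fast enough to keep the arithmetic units busy. It therefore pays to reduce memory traffic.

Constant memory holds data that does not change while a kernel executes. NVIDIA hardware provides 64 KB of constant memory, and reading from it instead of global memory can, in some cases, reduce the memory bandwidth required.

Case 1: Ray tracing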

#include "cuda.h"

#include "../common/book.h"

#include "../common/cpu_bitmap.h"

#define DIM 1024

#define rnd( x ) (x * rand() / RAND_MAX)

#define INF 2e10f

struct Sphere {

float r,b,g;

float radius;

float x,y,z;

__device__ float hit( float ox, float oy, float *n ) {

float dx = ox - x;

float dy = oy - y;

if (dx*dx + dy*dy < radius*radius) {

float dz = sqrtf( radius*radius - dx*dx - dy*dy );

*n = dz / sqrtf( radius * radius );

return dz + z;

}

return -INF;

}

};

#define SPHERES 20

//declare the array in constant memory with a fixed size

//Sphere *s;   (the global-memory version used a pointer instead)

__constant__ Sphere s[SPHERES];

__global__ void kernel( unsigned char *ptr ) {

// map from threadIdx/BlockIdx to pixel position

int x = threadIdx.x + blockIdx.x * blockDim.x;

int y = threadIdx.y + blockIdx.y * blockDim.y;

int offset = x + y * blockDim.x * gridDim.x;

float ox = (x - DIM/2);

float oy = (y - DIM/2);

float r=0, g=0, b=0;

float maxz = -INF;

for(int i=0; i<SPHERES; i++) {

float n;

float t = s[i].hit( ox, oy, &n );

if (t > maxz) {

float fscale = n;

r = s[i].r * fscale;

g = s[i].g * fscale;

b = s[i].b * fscale;

maxz = t;

}

}

ptr[offset*4 + 0] = (int)(r * 255);

ptr[offset*4 + 1] = (int)(g * 255);

ptr[offset*4 + 2] = (int)(b * 255);

ptr[offset*4 + 3] = 255;

}

// globals needed by the update routine

struct DataBlock {

unsigned char *dev_bitmap;

};

int main( void ) {

DataBlock data;

// capture the start time

cudaEvent_t start, stop;

HANDLE_ERROR( cudaEventCreate( &start ) );

HANDLE_ERROR( cudaEventCreate( &stop ) );

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

CPUBitmap bitmap( DIM, DIM, &data );

unsigned char *dev_bitmap;

// allocate memory on the GPU for the output bitmap

HANDLE_ERROR( cudaMalloc( (void**)&dev_bitmap,

bitmap.image_size() ) );

// allocate temp memory, initialize it, copy to constant

// memory on the GPU, then free our temp memory

Sphere *temp_s = (Sphere*)malloc( sizeof(Sphere) * SPHERES );

for (int i=0; i<SPHERES; i++) {

temp_s[i].r = rnd( 1.0f );

temp_s[i].g = rnd( 1.0f );

temp_s[i].b = rnd( 1.0f );

temp_s[i].x = rnd( 1000.0f ) - 500;

temp_s[i].y = rnd( 1000.0f ) - 500;

temp_s[i].z = rnd( 1000.0f ) - 500;

temp_s[i].radius = rnd( 100.0f ) + 20;

}

//HANDLE_ERROR( cudaMemcpy( s, temp_s,

// sizeof(Sphere) * SPHERES,

// cudaMemcpyHostToDevice ) );

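//this is the key line: data destined for constant memory must be copied with cudaMemcpyToSymbol, a special version of cudaMemcpy. The commented-out cudaMemcpy above copies to global memory; the call below copies to constant memory.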

HANDLE_ERROR( cudaMemcpyToSymbol( s, temp_s,

sizeof(Sphere) * SPHERES) );

free( temp_s );

// generate a bitmap from our sphere data

dim3 grids(DIM/16,DIM/16);

dim3 threads(16,16);

kernel<<<grids,threads>>>( dev_bitmap );

// copy our bitmap back from the GPU for display

HANDLE_ERROR( cudaMemcpy( bitmap.get_ptr(), dev_bitmap,

bitmap.image_size(),

cudaMemcpyDeviceToHost ) );

// get stop time, and display the timing results

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) );

float elapsedTime;

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

printf( "Time to generate: %3.1f ms\n", elapsedTime );

HANDLE_ERROR( cudaEventDestroy( start ) );

HANDLE_ERROR( cudaEventDestroy( stop ) );

HANDLE_ERROR( cudaFree( dev_bitmap ) );

// display

bitmap.display_and_exit();

}

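__constant__ restricts access to the variable to read-only. Reading from constant memory can save memory bandwidth compared with global memory for two reasons:

- A single read can be broadcast to the other threads of a half-warp. A half-warp is 16 threads: when all of them read the same address, one read is issued and the other 15 threads receive the data by broadcast, so the memory traffic is 1/16 of what global memory would need. The constant data is then kept in an on-chip cache.

- When other half-warps request the same address, they are served from the constant cache, which again reduces memory traffic.

Conversely, if the threads of a half-warp read different addresses, the 16 reads are serialized and take 16 times as long to issue. The same requests to global memory could be issued together, so constant memory can be slower in that case.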
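A small sketch of the favourable access pattern described above: every thread reads the same constant-memory address in each loop iteration, so the reads can be broadcast. The polynomial example and the names coeffs, eval_poly, eval_on_gpu and DEGREE are assumptions made for illustration; they do not come from the ray tracer.

```cuda
#include <cuda_runtime.h>

#define DEGREE 3

// Polynomial coefficients live in constant memory: read-only for kernels.
__constant__ float coeffs[DEGREE + 1];

// Evaluates y = c0 + c1*x + c2*x^2 + c3*x^3 with Horner's rule.
// In each iteration all threads read the same element coeffs[k].
__global__ void eval_poly(const float *x, float *y, int n) {
    int tid = threadIdx.x + blockIdx.x * blockDim.x;
    if (tid < n) {
        float acc = 0.0f;
        for (int k = DEGREE; k >= 0; k--)
            acc = acc * x[tid] + coeffs[k];
        y[tid] = acc;
    }
}

// Hypothetical helper: h_coeffs has DEGREE + 1 entries, lowest order first.
bool eval_on_gpu(const float *h_coeffs, const float *h_x, float *h_y, int n) {
    size_t bytes = (size_t)n * sizeof(float);
    float *dev_x = nullptr, *dev_y = nullptr;
    // cudaMemcpyToSymbol is the copy that targets a __constant__ variable.
    bool ok = cudaMemcpyToSymbol(coeffs, h_coeffs,
                                 sizeof(float) * (DEGREE + 1)) == cudaSuccess
           && cudaMalloc((void**)&dev_x, bytes) == cudaSuccess
           && cudaMalloc((void**)&dev_y, bytes) == cudaSuccess
           && cudaMemcpy(dev_x, h_x, bytes, cudaMemcpyHostToDevice) == cudaSuccess;
    if (ok) {
        eval_poly<<<(n + 127) / 128, 128>>>(dev_x, dev_y, n);
        ok = cudaMemcpy(h_y, dev_y, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }
    cudaFree(dev_x);
    cudaFree(dev_y);
    return ok;
}
```

Indexing coeffs with a per-thread value such as coeffs[tid % 4] would be the unfavourable pattern, because threads of the same half-warp would then request different addresses.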

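2. Measuring performance with events

To measure how long the GPU spends on a task, we use the CUDA event API.

An event in CUDA is essentially a GPU timestamp, which avoids the problems that arise when GPU execution is timed with a CPU timer.

The procedure is to create an event and record it; to time a section of code, a second, stop event is created and recorded as well: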

// capture the start time

cudaEvent_t start, stop;

HANDLE_ERROR( cudaEventCreate( &start ) );

HANDLE_ERROR( cudaEventCreate( &stop ) );

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

//do some work on the GPU

// get stop time, and display the timing results

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) ); //blocks the host until the GPU reaches the stop event; once this call returns, all GPU work issued before stop has completed

float elapsedTime;

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

printf( "Time to generate: %3.1f ms\n", elapsedTime );

HANDLE_ERROR( cudaEventDestroy( start ) );

HANDLE_ERROR( cudaEventDestroy( stop ) );

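Note that events are implemented directly on the GPU. If the code between the two events contains anything other than kernel launches and device memory copies, the measurement is unreliable.

For the ray tracer above: 6.4 ms with global memory versus 4.1 ms with constant memory, roughly 1.5 times faster.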

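Part 5: Texture Memory

Like constant memory, texture memory is a read-only memory that can improve performance and reduce memory traffic. It can also be used for general-purpose computing.

Like constant memory, texture memory is cached on chip, so in some situations it reduces the number of requests to off-chip memory and provides higher effective bandwidth. The texture cache is designed for applications whose memory access patterns exhibit a great deal of spatial locality,

that is, where neighbouring threads read addresses that are close to one another.

The four addresses in the figure are not consecutive, so a typical CPU cache would not cache them together; the texture cache is designed to handle this access pattern.

The temperature update has a great deal of spatial locality in its memory access pattern, so it can be accelerated with textures.

1. Using one-dimensional texture memory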

#include "cuda.h"

#include "../common/book.h"

#include "../common/cpu_anim.h"

#define DIM 1024

#define PI 3.1415926535897932f

#define MAX_TEMP 1.0f

#define MIN_TEMP 0.0001f

#define SPEED 0.25f

// these exist on the GPU side

//declare the input data as references of type texture

texture<float> texConstSrc;

texture<float> texIn;

texture<float> texOut;

// this kernel takes in a 2-d array of floats

// it updates the value-of-interest by a scaled value based

// on itself and its nearest neighbors

__global__ void blend_kernel( float *dst,

bool dstOut ) {

// map from threadIdx/BlockIdx to pixel position

int x = threadIdx.x + blockIdx.x * blockDim.x;

int y = threadIdx.y + blockIdx.y * blockDim.y;

int offset = x + y * blockDim.x * gridDim.x;

int left = offset - 1;

int right = offset + 1;

if (x == 0) left++;

if (x == DIM-1) right--;

int top = offset - DIM;

int bottom = offset + DIM;

if (y == 0) top += DIM;

if (y == DIM-1) bottom -= DIM;

//the top, left, centre, right and bottom values are read with tex1Dfetch; texIn and texOut are global references

float t, l, c, r, b;

if (dstOut) {

t = tex1Dfetch(texIn,top);

l = tex1Dfetch(texIn,left);

c = tex1Dfetch(texIn,offset);

r = tex1Dfetch(texIn,right);

b = tex1Dfetch(texIn,bottom);

} else {

t = tex1Dfetch(texOut,top);

l = tex1Dfetch(texOut,left);

c = tex1Dfetch(texOut,offset);

r = tex1Dfetch(texOut,right);

b = tex1Dfetch(texOut,bottom);

}

dst[offset] = c + SPEED * (t + b + r + l - 4 * c);

}

// NOTE - texOffsetConstSrc could either be passed as a

// parameter to this function, or passed in __constant__ memory

// if we declared it as a global above, it would be

// a parameter here:

// __global__ void copy_const_kernel( float *iptr,

// size_t texOffset )

__global__ void copy_const_kernel( float *iptr ) {

// map from threadIdx/BlockIdx to pixel position

int x = threadIdx.x + blockIdx.x * blockDim.x;

int y = threadIdx.y + blockIdx.y * blockDim.y;

int offset = x + y * blockDim.x * gridDim.x;

//the value is read with tex1Dfetch; texConstSrc is a global reference

float c = tex1Dfetch(texConstSrc,offset);

if (c != 0)

iptr[offset] = c;

}

// globals needed by the update routine

struct DataBlock {

unsigned char *output_bitmap;

float *dev_inSrc;

float *dev_outSrc;

float *dev_constSrc;

CPUAnimBitmap *bitmap;

cudaEvent_t start, stop;

float totalTime;

float frames;

};

void anim_gpu( DataBlock *d, int ticks ) {

HANDLE_ERROR( cudaEventRecord( d->start, 0 ) );

dim3 blocks(DIM/16,DIM/16);

dim3 threads(16,16);

CPUAnimBitmap *bitmap = d->bitmap;

// since tex is global and bound, we have to use a flag to

// select which is in/out per iteration

volatile bool dstOut = true;

for (int i=0; i<90; i++) {

float *in, *out;

if (dstOut) {

in = d->dev_inSrc;

out = d->dev_outSrc;

} else {

out = d->dev_inSrc;

in = d->dev_outSrc;

}

copy_const_kernel<<<blocks,threads>>>( in );

blend_kernel<<<blocks,threads>>>( out, dstOut );

dstOut = !dstOut;

}

float_to_color<<<blocks,threads>>>( d->output_bitmap,

d->dev_inSrc );

HANDLE_ERROR( cudaMemcpy( bitmap->get_ptr(),

d->output_bitmap,

bitmap->image_size(),

cudaMemcpyDeviceToHost ) );

HANDLE_ERROR( cudaEventRecord( d->stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( d->stop ) );

float elapsedTime;

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

d->start, d->stop ) );

d->totalTime += elapsedTime;

++d->frames;

printf( "Average Time per frame: %3.1f ms\n",

d->totalTime/d->frames );

}

// clean up memory allocated on the GPU

void anim_exit( DataBlock *d ) {

//besides freeing the global buffers, the textures must also be unbound

cudaUnbindTexture( texIn );

cudaUnbindTexture( texOut );

cudaUnbindTexture( texConstSrc );

HANDLE_ERROR( cudaFree( d->dev_inSrc ) );

HANDLE_ERROR( cudaFree( d->dev_outSrc ) );

HANDLE_ERROR( cudaFree( d->dev_constSrc ) );

HANDLE_ERROR( cudaEventDestroy( d->start ) );

HANDLE_ERROR( cudaEventDestroy( d->stop ) );

}

int main( void ) {

DataBlock data;

CPUAnimBitmap bitmap( DIM, DIM, &data );

data.bitmap = &bitmap;

data.totalTime = 0;

data.frames = 0;

HANDLE_ERROR( cudaEventCreate( &data.start ) );

HANDLE_ERROR( cudaEventCreate( &data.stop ) );

int imageSize = bitmap.image_size();

HANDLE_ERROR( cudaMalloc( (void**)&data.output_bitmap,

imageSize ) );

// assume float == 4 chars in size (ie rgba)

HANDLE_ERROR( cudaMalloc( (void**)&data.dev_inSrc,

imageSize ) );

HANDLE_ERROR( cudaMalloc( (void**)&data.dev_outSrc,

imageSize ) );

HANDLE_ERROR( cudaMalloc( (void**)&data.dev_constSrc,

imageSize ) );

//after allocating the memory, bind it to the texture references declared earlier

HANDLE_ERROR( cudaBindTexture( NULL, texConstSrc,

data.dev_constSrc,

imageSize ) );

HANDLE_ERROR( cudaBindTexture( NULL, texIn,

data.dev_inSrc,

imageSize ) );

HANDLE_ERROR( cudaBindTexture( NULL, texOut,

data.dev_outSrc,

imageSize ) );

// initialize the constant data

float *temp = (float*)malloc( imageSize );

for (int i=0; i<DIM*DIM; i++) {

temp[i] = 0;

int x = i % DIM;

int y = i / DIM;

if ((x>300) && (x<600) && (y>310) && (y<601))

temp[i] = MAX_TEMP;

}

temp[DIM*100+100] = (MAX_TEMP + MIN_TEMP)/2;

temp[DIM*700+100] = MIN_TEMP;

temp[DIM*300+300] = MIN_TEMP;

temp[DIM*200+700] = MIN_TEMP;

for (int y=800; y<900; y++) {

for (int x=400; x<500; x++) {

temp[x+y*DIM] = MIN_TEMP;

}

}

HANDLE_ERROR( cudaMemcpy( data.dev_constSrc, temp,

imageSize,

cudaMemcpyHostToDevice ) );

// initialize the input data

for (int y=800; y<DIM; y++) {

for (int x=0; x<200; x++) {

temp[x+y*DIM] = MAX_TEMP;

}

}

HANDLE_ERROR( cudaMemcpy( data.dev_inSrc, temp,

imageSize,

cudaMemcpyHostToDevice ) );

free( temp );

bitmap.anim_and_exit( (void (*)(void*,int))anim_gpu,

(void (*)(void*))anim_exit );

}

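2. Using two-dimensional texture memory

Two-dimensional textures bring no real performance gain over one-dimensional ones, but they make some code simpler and remove the need to handle boundary cases by hand.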

#include "cuda.h"

#include "../common/book.h"

#include "../common/cpu_anim.h"

#define DIM 1024

#define PI 3.1415926535897932f

#define MAX_TEMP 1.0f

#define MIN_TEMP 0.0001f

#define SPEED 0.25f

// these exist on the GPU side

//note the added dimension argument 2

texture<float,2> texConstSrc;

texture<float,2> texIn;

texture<float,2> texOut;

__global__ void blend_kernel( float *dst,

bool dstOut ) {

// map from threadIdx/BlockIdx to pixel position

int x = threadIdx.x + blockIdx.x * blockDim.x;

int y = threadIdx.y + blockIdx.y * blockDim.y;

int offset = x + y * blockDim.x * gridDim.x;

float t, l, c, r, b;

if (dstOut) {

//tex1Dfetch is replaced by tex2D

t = tex2D(texIn,x,y-1);

l = tex2D(texIn,x-1,y);

c = tex2D(texIn,x,y);

r = tex2D(texIn,x+1,y);

b = tex2D(texIn,x,y+1);

} else {

t = tex2D(texOut,x,y-1);

l = tex2D(texOut,x-1,y);

c = tex2D(texOut,x,y);

r = tex2D(texOut,x+1,y);

b = tex2D(texOut,x,y+1);

}

dst[offset] = c + SPEED * (t + b + r + l - 4 * c);

}

__global__ void copy_const_kernel( float *iptr ) {

// map from threadIdx/BlockIdx to pixel position

int x = threadIdx.x + blockIdx.x * blockDim.x;

int y = threadIdx.y + blockIdx.y * blockDim.y;

int offset = x + y * blockDim.x * gridDim.x;

float c = tex2D(texConstSrc,x,y);

if (c != 0)

iptr[offset] = c;

}

// globals needed by the update routine

struct DataBlock {

unsigned char *output_bitmap;

float *dev_inSrc;

float *dev_outSrc;

float *dev_constSrc;

CPUAnimBitmap *bitmap;

cudaEvent_t start, stop;

float totalTime;

float frames;

};

void anim_gpu( DataBlock *d, int ticks ) {

HANDLE_ERROR( cudaEventRecord( d->start, 0 ) );

dim3 blocks(DIM/16,DIM/16);

dim3 threads(16,16);

CPUAnimBitmap *bitmap = d->bitmap;

// since tex is global and bound, we have to use a flag to

// select which is in/out per iteration

volatile bool dstOut = true;

for (int i=0; i<90; i++) {

float *in, *out;

if (dstOut) {

in = d->dev_inSrc;

out = d->dev_outSrc;

} else {

out = d->dev_inSrc;

in = d->dev_outSrc;

}

copy_const_kernel<<<blocks,threads>>>( in );

blend_kernel<<<blocks,threads>>>( out, dstOut );

dstOut = !dstOut;

}

float_to_color<<<blocks,threads>>>( d->output_bitmap,

d->dev_inSrc );

HANDLE_ERROR( cudaMemcpy( bitmap->get_ptr(),

d->output_bitmap,

bitmap->image_size(),

cudaMemcpyDeviceToHost ) );

HANDLE_ERROR( cudaEventRecord( d->stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( d->stop ) );

float elapsedTime;

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

d->start, d->stop ) );

d->totalTime += elapsedTime;

++d->frames;

printf( "Average Time per frame: %3.1f ms\n",

d->totalTime/d->frames );

}

// clean up memory allocated on the GPU

void anim_exit( DataBlock *d ) {

cudaUnbindTexture( texIn );

cudaUnbindTexture( texOut );

cudaUnbindTexture( texConstSrc );

HANDLE_ERROR( cudaFree( d->dev_inSrc ) );

HANDLE_ERROR( cudaFree( d->dev_outSrc ) );

HANDLE_ERROR( cudaFree( d->dev_constSrc ) );

HANDLE_ERROR( cudaEventDestroy( d->start ) );

HANDLE_ERROR( cudaEventDestroy( d->stop ) );

}

int main( void ) {

DataBlock data;

CPUAnimBitmap bitmap( DIM, DIM, &data );

data.bitmap = &bitmap;

data.totalTime = 0;

data.frames = 0;

HANDLE_ERROR( cudaEventCreate( &data.start ) );

HANDLE_ERROR( cudaEventCreate( &data.stop ) );

int imageSize = bitmap.image_size();

HANDLE_ERROR( cudaMalloc( (void**)&data.output_bitmap,

imageSize ) );

// assume float == 4 chars in size (ie rgba)

HANDLE_ERROR( cudaMalloc( (void**)&data.dev_inSrc,

imageSize ) );

HANDLE_ERROR( cudaMalloc( (void**)&data.dev_outSrc,

imageSize ) );

HANDLE_ERROR( cudaMalloc( (void**)&data.dev_constSrc,

imageSize ) );

//binding works slightly differently for 2D textures: a channel format descriptor, the dimensions and the pitch are required

cudaChannelFormatDesc desc = cudaCreateChannelDesc<float>();

HANDLE_ERROR( cudaBindTexture2D( NULL, texConstSrc,

data.dev_constSrc,

desc, DIM, DIM,

sizeof(float) * DIM ) );

HANDLE_ERROR( cudaBindTexture2D( NULL, texIn,

data.dev_inSrc,

desc, DIM, DIM,

sizeof(float) * DIM ) );

HANDLE_ERROR( cudaBindTexture2D( NULL, texOut,

data.dev_outSrc,

desc, DIM, DIM,

sizeof(float) * DIM ) );

// initialize the constant data

float *temp = (float*)malloc( imageSize );

for (int i=0; i<DIM*DIM; i++) {

temp[i] = 0;

int x = i % DIM;

int y = i / DIM;

if ((x>300) && (x<600) && (y>310) && (y<601))

temp[i] = MAX_TEMP;

}

temp[DIM*100+100] = (MAX_TEMP + MIN_TEMP)/2;

temp[DIM*700+100] = MIN_TEMP;

temp[DIM*300+300] = MIN_TEMP;

temp[DIM*200+700] = MIN_TEMP;

for (int y=800; y<900; y++) {

for (int x=400; x<500; x++) {

temp[x+y*DIM] = MIN_TEMP;

}

}

HANDLE_ERROR( cudaMemcpy( data.dev_constSrc, temp,

imageSize,

cudaMemcpyHostToDevice ) );

// initialize the input data

for (int y=800; y<DIM; y++) {

for (int x=0; x<200; x++) {

temp[x+y*DIM] = MAX_TEMP;

}

}

HANDLE_ERROR( cudaMemcpy( data.dev_inSrc, temp,

imageSize,

cudaMemcpyHostToDevice ) );

free( temp );

bitmap.anim_and_exit( (void (*)(void*,int))anim_gpu,

(void (*)(void*))anim_exit );

}

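Part 6: Graphics Interoperability

This part is about combining general-purpose computation with rendering, i.e. CUDA C interoperating with the two real-time rendering APIs OpenGL and DirectX. I have no use for this feature, so I skipped it.

Part 7: Atomics

Case: Computing a histogram

Using global memory: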

#define SIZE (100*1024*1024)

__global__ void histo_kernel( unsigned char *buffer,

long size,

unsigned int *histo ) {

// calculate the starting index and the offset to the next

// block that each thread will be processing

int i = threadIdx.x + blockIdx.x * blockDim.x;

int stride = blockDim.x * gridDim.x;

while (i < size) {

atomicAdd( &histo[buffer[i]], 1 );

i += stride;

}

}

int main( void ) {

unsigned char *buffer =

(unsigned char*)big_random_block( SIZE );

// capture the start time

// starting the timer here so that we include the cost of

// all of the operations on the GPU.

cudaEvent_t start, stop;

HANDLE_ERROR( cudaEventCreate( &start ) );

HANDLE_ERROR( cudaEventCreate( &stop ) );

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

// allocate memory on the GPU for the file's data

unsigned char *dev_buffer;

unsigned int *dev_histo;

HANDLE_ERROR( cudaMalloc( (void**)&dev_buffer, SIZE ) );

HANDLE_ERROR( cudaMemcpy( dev_buffer, buffer, SIZE,

cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_histo,

256 * sizeof( int ) ) );

HANDLE_ERROR( cudaMemset( dev_histo, 0,

256 * sizeof( int ) ) );

// kernel launch - 2x the number of mps gave best timing

cudaDeviceProp prop;

HANDLE_ERROR( cudaGetDeviceProperties( &prop, 0 ) );

int blocks = prop.multiProcessorCount;

histo_kernel<<<blocks*2,256>>>( dev_buffer, SIZE, dev_histo );

unsigned int histo[256];

HANDLE_ERROR( cudaMemcpy( histo, dev_histo,

256 * sizeof( int ),

cudaMemcpyDeviceToHost ) );

// get stop time, and display the timing results

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) );

float elapsedTime;

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

printf( "Time to generate: %3.1f ms\n", elapsedTime );

long histoCount = 0;

for (int i=0; i<256; i++) {

histoCount += histo[i];

}

printf( "Histogram Sum: %ld\n", histoCount );

// verify that we have the same counts via CPU

for (int i=0; i<SIZE; i++)

histo[buffer[i]]--;

for (int i=0; i<256; i++) {

if (histo[i] != 0)

printf( "Failure at %d! Off by %d\n", i, histo[i] );

}

HANDLE_ERROR( cudaEventDestroy( start ) );

HANDLE_ERROR( cudaEventDestroy( stop ) );

cudaFree( dev_histo );

cudaFree( dev_buffer );

free( buffer );

return 0;

}

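This version is actually about four times slower than the CPU. Too many threads compete for the same memory locations, so the atomic updates end up being serialized. The fix is to reduce the amount of memory contention with a two-phase approach.

Each thread block keeps a temporary histogram in shared memory. Once a block has finished counting, its temporary histogram is added into the global histogram.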

#include "../common/book.h"

#define SIZE (100*1024*1024)

__global__ void histo_kernel( unsigned char *buffer,

long size,

unsigned int *histo ) {

// clear out the accumulation buffer called temp

// since we are launched with 256 threads, it is easy

// to clear that memory with one write per thread

__shared__ unsigned int temp[256];

temp[threadIdx.x] = 0;

__syncthreads();

// calculate the starting index and the offset to the next

// block that each thread will be processing

int i = threadIdx.x + blockIdx.x * blockDim.x;

int stride = blockDim.x * gridDim.x;

while (i < size) {

atomicAdd( &temp[buffer[i]], 1 );

i += stride;

}

// sync the data from the above writes to shared memory

// then add the shared memory values to the values from

// the other thread blocks using global memory

// atomic adds

// same as before, since we have 256 threads, updating the

// global histogram is just one write per thread!

__syncthreads();

atomicAdd( &(histo[threadIdx.x]), temp[threadIdx.x] );

}

int main( void ) {

unsigned char *buffer =

(unsigned char*)big_random_block( SIZE );

// capture the start time

// starting the timer here so that we include the cost of

// all of the operations on the GPU. if the data were

// already on the GPU and we just timed the kernel

// the timing would drop from 74 ms to 15 ms. Very fast.

cudaEvent_t start, stop;

HANDLE_ERROR( cudaEventCreate( &start ) );

HANDLE_ERROR( cudaEventCreate( &stop ) );

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

// allocate memory on the GPU for the file's data

unsigned char *dev_buffer;

unsigned int *dev_histo;

HANDLE_ERROR( cudaMalloc( (void**)&dev_buffer, SIZE ) );

HANDLE_ERROR( cudaMemcpy( dev_buffer, buffer, SIZE,

cudaMemcpyHostToDevice ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_histo,

256 * sizeof( int ) ) );

HANDLE_ERROR( cudaMemset( dev_histo, 0,

256 * sizeof( int ) ) );

// kernel launch - 2x the number of mps gave best timing

cudaDeviceProp prop;

HANDLE_ERROR( cudaGetDeviceProperties( &prop, 0 ) );

int blocks = prop.multiProcessorCount;

// performance was best with twice as many blocks as the GPU has multiprocessors

histo_kernel<<<blocks*2,256>>>( dev_buffer,

SIZE, dev_histo );

unsigned int histo[256];

HANDLE_ERROR( cudaMemcpy( histo, dev_histo,

256 * sizeof( int ),

cudaMemcpyDeviceToHost ) );

// get stop time, and display the timing results

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) );

float elapsedTime;

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

printf( "Time to generate: %3.1f ms\n", elapsedTime );

long histoCount = 0;

for (int i=0; i<256; i++) {

histoCount += histo[i];

}

printf( "Histogram Sum: %ld\n", histoCount );

// verify that we have the same counts via CPU

for (int i=0; i<SIZE; i++)

histo[buffer[i]]--;

for (int i=0; i<256; i++) {

if (histo[i] != 0)

printf( "Failure at %d!\n", i );

}

HANDLE_ERROR( cudaEventDestroy( start ) );

HANDLE_ERROR( cudaEventDestroy( stop ) );

cudaFree( dev_histo );

cudaFree( dev_buffer );

free( buffer );

return 0;

}
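The two-phase idea above can also be checked on the CPU. What follows is a minimal host-only C++ sketch, not the GPU implementation: each simulated block counts its strided share of the input into a private histogram (the role played by the shared-memory temp array in the kernel), and the private histograms are then merged into the global one. The names count_block and two_phase_histogram are hypothetical, and the blocks run one after another here, so no atomic operations are needed.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Histogram = std::array<unsigned int, 256>;

// Phase 1: one simulated block counts its strided share of the input
// into a private histogram (stands in for the shared-memory temp[]).
Histogram count_block(const std::vector<std::uint8_t>& buffer,
                      std::size_t block, std::size_t num_blocks) {
    assert(num_blocks > 0 && block < num_blocks);
    Histogram local{};  // all bins start at zero
    for (std::size_t i = block; i < buffer.size(); i += num_blocks) {
        ++local[buffer[i]];
    }
    return local;
}

// Phase 2: add every private histogram into the global histogram.
Histogram two_phase_histogram(const std::vector<std::uint8_t>& buffer,
                              std::size_t num_blocks) {
    Histogram global{};
    for (std::size_t block = 0; block < num_blocks; ++block) {
        const Histogram local = count_block(buffer, block, num_blocks);
        for (std::size_t bin = 0; bin < global.size(); ++bin) {
            global[bin] += local[bin];
        }
    }
    return global;
}
```

Calling two_phase_histogram(buffer, num_blocks) on the same bytes that were sent to the GPU gives a reference result to compare the copied-back histo array against; the result does not depend on num_blocks.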

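Part 8: Streams

Streams provide task parallelism: two or more different tasks execute in parallel, as opposed to data parallelism, where the same task is applied to a large amount of data.

1. Page-Locked Host Memory

Page-locked (pinned) host memory is allocated with cudaHostAlloc instead of malloc. Copies between this kind of memory and the device are faster, but pinned memory cannot be swapped out, so the system runs short of physical memory much sooner. For that reason, use page-locked memory only for buffers that are the source or destination of cudaMemcpy calls, and free it as soon as it is no longer needed.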

#include "../common/book.h"

#define SIZE (64*1024*1024)

float cuda_malloc_test( int size, bool up ) {

cudaEvent_t start, stop;

int *a, *dev_a;

float elapsedTime;

HANDLE_ERROR( cudaEventCreate( &start ) );

HANDLE_ERROR( cudaEventCreate( &stop ) );

a = (int*)malloc( size * sizeof( *a ) );

HANDLE_NULL( a );

HANDLE_ERROR( cudaMalloc( (void**)&dev_a,

size * sizeof( *dev_a ) ) );

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

for (int i=0; i<100; i++) {

if (up)

HANDLE_ERROR( cudaMemcpy( dev_a, a,

size * sizeof( *dev_a ),

cudaMemcpyHostToDevice ) );

else

HANDLE_ERROR( cudaMemcpy( a, dev_a,

size * sizeof( *dev_a ),

cudaMemcpyDeviceToHost ) );

}

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) );

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

free( a );

HANDLE_ERROR( cudaFree( dev_a ) );

HANDLE_ERROR( cudaEventDestroy( start ) );

HANDLE_ERROR( cudaEventDestroy( stop ) );

return elapsedTime;

}

float cuda_host_alloc_test( int size, bool up ) {

cudaEvent_t start, stop;

int *a, *dev_a;

float elapsedTime;

HANDLE_ERROR( cudaEventCreate( &start ) );

HANDLE_ERROR( cudaEventCreate( &stop ) );

// the key line: allocate page-locked host memory

HANDLE_ERROR( cudaHostAlloc( (void**)&a,

size * sizeof( *a ),

cudaHostAllocDefault ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_a,

size * sizeof( *dev_a ) ) );

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

for (int i=0; i<100; i++) {

if (up)

HANDLE_ERROR( cudaMemcpy( dev_a, a,

size * sizeof( *a ),

cudaMemcpyHostToDevice ) );

else

HANDLE_ERROR( cudaMemcpy( a, dev_a,

size * sizeof( *a ),

cudaMemcpyDeviceToHost ) );

}

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) );

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

HANDLE_ERROR( cudaFreeHost( a ) );

HANDLE_ERROR( cudaFree( dev_a ) );

HANDLE_ERROR( cudaEventDestroy( start ) );

HANDLE_ERROR( cudaEventDestroy( stop ) );

return elapsedTime;

}

int main( void ) {

float elapsedTime;

float MB = (float)100*SIZE*sizeof(int)/1024/1024;

// try it with cudaMalloc

elapsedTime = cuda_malloc_test( SIZE, true );

printf( "Time using cudaMalloc: %3.1f ms\n",

elapsedTime );

printf( "\tMB/s during copy up: %3.1f\n",

MB/(elapsedTime/1000) );

elapsedTime = cuda_malloc_test( SIZE, false );

printf( "Time using cudaMalloc: %3.1f ms\n",

elapsedTime );

printf( "\tMB/s during copy down: %3.1f\n",

MB/(elapsedTime/1000) );

// now try it with cudaHostAlloc

elapsedTime = cuda_host_alloc_test( SIZE, true );

printf( "Time using cudaHostAlloc: %3.1f ms\n",

elapsedTime );

printf( "\tMB/s during copy up: %3.1f\n",

MB/(elapsedTime/1000) );

elapsedTime = cuda_host_alloc_test( SIZE, false );

printf( "Time using cudaHostAlloc: %3.1f ms\n",

elapsedTime );

printf( "\tMB/s during copy down: %3.1f\n",

MB/(elapsedTime/1000) );

}
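The throughput printed by main above is simply the total amount of data copied divided by the elapsed time. The arithmetic can be sketched in host-only C++ as follows; the helper names total_megabytes and megabytes_per_second are hypothetical and only mirror the expressions used in the listing.

```cpp
#include <cassert>
#include <cstddef>

// Total data moved, in MB (1 MB = 1024*1024 bytes, as in the listing above).
double total_megabytes(std::size_t copies, std::size_t elements,
                       std::size_t element_size) {
    return static_cast<double>(copies) * elements * element_size
           / (1024.0 * 1024.0);
}

// Throughput in MB/s for an elapsed time in milliseconds,
// the unit reported by cudaEventElapsedTime.
double megabytes_per_second(double megabytes, double elapsed_ms) {
    assert(elapsed_ms > 0.0);
    return megabytes / (elapsed_ms / 1000.0);
}
```

With 100 copies of 64*1024*1024 four-byte ints, total_megabytes gives 25600 MB. If such a run took 10000 ms (an illustrative figure, not a measurement), the throughput would be 2560 MB/s.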

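2. CUDA Streams

A CUDA stream represents a queue of GPU operations, and the operations in the queue execute in the order in which they were added. A stream can be thought of as one task on the GPU, and multiple streams can execute in parallel.

A single-stream example: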

int main( void ) {

cudaDeviceProp prop;

int whichDevice;

// the device must support overlapping copies with kernel execution

HANDLE_ERROR( cudaGetDevice( &whichDevice ) );

HANDLE_ERROR( cudaGetDeviceProperties( &prop, whichDevice ) );

if (!prop.deviceOverlap) {

printf( "Device will not handle overlaps, so no speed up from streams\n" );

return 0;

}

cudaEvent_t start, stop;

float elapsedTime;

cudaStream_t stream;

int *host_a, *host_b, *host_c;

int *dev_a, *dev_b, *dev_c;

// start the timers

HANDLE_ERROR( cudaEventCreate( &start ) );

HANDLE_ERROR( cudaEventCreate( &stop ) );

// initialize the stream

HANDLE_ERROR( cudaStreamCreate( &stream ) );

// allocate the memory on the GPU

HANDLE_ERROR( cudaMalloc( (void**)&dev_a,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_b,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_c,

N * sizeof(int) ) );

// allocate page-locked host memory, needed for the asynchronous copies in the stream

HANDLE_ERROR( cudaHostAlloc( (void**)&host_a,

FULL_DATA_SIZE * sizeof(int),

cudaHostAllocDefault ) );

HANDLE_ERROR( cudaHostAlloc( (void**)&host_b,

FULL_DATA_SIZE * sizeof(int),

cudaHostAllocDefault ) );

HANDLE_ERROR( cudaHostAlloc( (void**)&host_c,

FULL_DATA_SIZE * sizeof(int),

cudaHostAllocDefault ) );

for (int i=0; i<FULL_DATA_SIZE; i++) {

host_a[i] = rand();

host_b[i] = rand();

}

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

// now loop over full data, in bite-sized chunks: GPU memory is much smaller than host memory, so the data is processed chunk by chunk

for (int i=0; i<FULL_DATA_SIZE; i+= N) {

// copy the locked memory to the device, async: the call only enqueues the copy and returns at once, so the copy is not guaranteed to have completed when it returns

HANDLE_ERROR( cudaMemcpyAsync( dev_a, host_a+i,

N * sizeof(int),

cudaMemcpyHostToDevice,

stream ) );

HANDLE_ERROR( cudaMemcpyAsync( dev_b, host_b+i,

N * sizeof(int),

cudaMemcpyHostToDevice,

stream ) );

kernel<<<N/256,256,0,stream>>>( dev_a, dev_b, dev_c );

// copy the data from device to locked memory

HANDLE_ERROR( cudaMemcpyAsync( host_c+i, dev_c,

N * sizeof(int),

cudaMemcpyDeviceToHost,

stream ) );

}

// everything above was queued asynchronously, so synchronize the stream with the CPU before using the results

HANDLE_ERROR( cudaStreamSynchronize( stream ) );

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) );

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

printf( "Time taken: %3.1f ms\n", elapsedTime );

// cleanup the streams and memory

HANDLE_ERROR( cudaFreeHost( host_a ) );

HANDLE_ERROR( cudaFreeHost( host_b ) );

HANDLE_ERROR( cudaFreeHost( host_c ) );

HANDLE_ERROR( cudaFree( dev_a ) );

HANDLE_ERROR( cudaFree( dev_b ) );

HANDLE_ERROR( cudaFree( dev_c ) );

HANDLE_ERROR( cudaStreamDestroy( stream ) );

return 0;

}

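This way the host can carry on with other work while the GPU is busy processing the operations queued in the stream, which improves overall efficiency.

Multiple CUDA streams: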

int main( void ) {

cudaDeviceProp prop;

int whichDevice;

HANDLE_ERROR( cudaGetDevice( &whichDevice ) );

HANDLE_ERROR( cudaGetDeviceProperties( &prop, whichDevice ) );

if (!prop.deviceOverlap) {

printf( "Device will not handle overlaps, so no speed up from streams\n" );

return 0;

}

cudaEvent_t start, stop;

float elapsedTime;

cudaStream_t stream0, stream1;

int *host_a, *host_b, *host_c;

int *dev_a0, *dev_b0, *dev_c0;

int *dev_a1, *dev_b1, *dev_c1;

// start the timers

HANDLE_ERROR( cudaEventCreate( &start ) );

HANDLE_ERROR( cudaEventCreate( &stop ) );

// initialize the streams

HANDLE_ERROR( cudaStreamCreate( &stream0 ) );

HANDLE_ERROR( cudaStreamCreate( &stream1 ) );

// allocate the memory on the GPU

HANDLE_ERROR( cudaMalloc( (void**)&dev_a0,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_b0,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_c0,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_a1,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_b1,

N * sizeof(int) ) );

HANDLE_ERROR( cudaMalloc( (void**)&dev_c1,

N * sizeof(int) ) );

// allocate host locked memory, used to stream

HANDLE_ERROR( cudaHostAlloc( (void**)&host_a,

FULL_DATA_SIZE * sizeof(int),

cudaHostAllocDefault ) );

HANDLE_ERROR( cudaHostAlloc( (void**)&host_b,

FULL_DATA_SIZE * sizeof(int),

cudaHostAllocDefault ) );

HANDLE_ERROR( cudaHostAlloc( (void**)&host_c,

FULL_DATA_SIZE * sizeof(int),

cudaHostAllocDefault ) );

for (int i=0; i<FULL_DATA_SIZE; i++) {

host_a[i] = rand();

host_b[i] = rand();

}

HANDLE_ERROR( cudaEventRecord( start, 0 ) );

// now loop over full data, in bite-sized chunks

for (int i=0; i<FULL_DATA_SIZE; i+= N*2) {

// enqueue copies of a in stream0 and stream1

HANDLE_ERROR( cudaMemcpyAsync( dev_a0, host_a+i,

N * sizeof(int),

cudaMemcpyHostToDevice,

stream0 ) );

HANDLE_ERROR( cudaMemcpyAsync( dev_a1, host_a+i+N,

N * sizeof(int),

cudaMemcpyHostToDevice,

stream1 ) );

// enqueue copies of b in stream0 and stream1

HANDLE_ERROR( cudaMemcpyAsync( dev_b0, host_b+i,

N * sizeof(int),

cudaMemcpyHostToDevice,

stream0 ) );

HANDLE_ERROR( cudaMemcpyAsync( dev_b1, host_b+i+N,

N * sizeof(int),

cudaMemcpyHostToDevice,

stream1 ) );

// enqueue kernels in stream0 and stream1

kernel<<<N/256,256,0,stream0>>>( dev_a0, dev_b0, dev_c0 );

kernel<<<N/256,256,0,stream1>>>( dev_a1, dev_b1, dev_c1 );

// enqueue copies of c from device to locked memory

HANDLE_ERROR( cudaMemcpyAsync( host_c+i, dev_c0,

N * sizeof(int),

cudaMemcpyDeviceToHost,

stream0 ) );

HANDLE_ERROR( cudaMemcpyAsync( host_c+i+N, dev_c1,

N * sizeof(int),

cudaMemcpyDeviceToHost,

stream1 ) );

}

HANDLE_ERROR( cudaStreamSynchronize( stream0 ) );

HANDLE_ERROR( cudaStreamSynchronize( stream1 ) );

HANDLE_ERROR( cudaEventRecord( stop, 0 ) );

HANDLE_ERROR( cudaEventSynchronize( stop ) );

HANDLE_ERROR( cudaEventElapsedTime( &elapsedTime,

start, stop ) );

printf( "Time taken: %3.1f ms\n", elapsedTime );

// cleanup the streams and memory

HANDLE_ERROR( cudaFreeHost( host_a ) );

HANDLE_ERROR( cudaFreeHost( host_b ) );

HANDLE_ERROR( cudaFreeHost( host_c ) );

HANDLE_ERROR( cudaFree( dev_a0 ) );

HANDLE_ERROR( cudaFree( dev_b0 ) );

HANDLE_ERROR( cudaFree( dev_c0 ) );

HANDLE_ERROR( cudaFree( dev_a1 ) );

HANDLE_ERROR( cudaFree( dev_b1 ) );

HANDLE_ERROR( cudaFree( dev_c1 ) );

HANDLE_ERROR( cudaStreamDestroy( stream0 ) );

HANDLE_ERROR( cudaStreamDestroy( stream1 ) );

return 0;

}

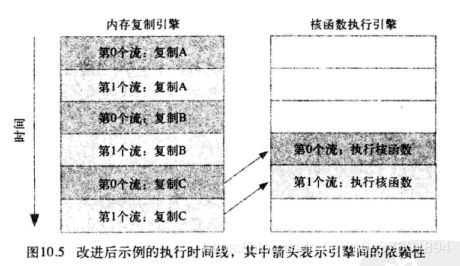

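Enqueuing the operations breadth-first works better than depth-first. The reason lies in how the GPU schedules work: it has a memory copy engine and a kernel execution engine, so breadth-first enqueuing, i.e. interleaving the operations of the two streams, runs more efficiently.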
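To make the two orderings concrete, here is a small host-only C++ sketch that only lists the order in which operations would be enqueued; it does not call CUDA and does not model the hardware engines. The function names depth_first and breadth_first and the stage labels are hypothetical.

```cpp
#include <cassert>
#include <string>
#include <vector>

// The four operations each stream performs per chunk, in dependency order.
std::vector<std::string> stages() {
    return {"copy a", "copy b", "kernel", "copy c"};
}

// Depth-first: queue every operation of one stream before the next stream.
std::vector<std::string> depth_first(int num_streams) {
    assert(num_streams > 0);
    std::vector<std::string> order;
    for (int s = 0; s < num_streams; ++s) {
        for (const std::string& stage : stages()) {
            order.push_back(stage + " -> stream" + std::to_string(s));
        }
    }
    return order;
}

// Breadth-first: queue the same stage for every stream, then the next stage.
std::vector<std::string> breadth_first(int num_streams) {
    assert(num_streams > 0);
    std::vector<std::string> order;
    for (const std::string& stage : stages()) {
        for (int s = 0; s < num_streams; ++s) {
            order.push_back(stage + " -> stream" + std::to_string(s));
        }
    }
    return order;
}
```

breadth_first(2) starts with "copy a -> stream0", "copy a -> stream1", which is the order used inside the loop of the two-stream listing, whereas depth_first(2) queues all four stream0 operations before the first stream1 operation.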

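Part 9: Matrix Computation Libraries

cuSparse and cuBLAS are matrix computation libraries that can be considerably more efficient than MKL. Their usage is introduced briefly below.

1. cuSparse

cuSparse contains many sparse linear algebra routines and supports many sparse matrix storage formats.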

#include "../common/common.h"

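#include

2. cuBLAS

cuBLAS does not support the various sparse data formats; it only provides optimized operations on dense vectors and dense matrices, so it is somewhat simpler to use than cuSparse.

cuBLAS stores matrices in column-major order, whereas cuSparse works row by row (for example in its CSR format).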
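Because C and C++ arrays are usually laid out row by row, data often has to be re-indexed before it is handed to cuBLAS. The following host-only C++ sketch shows the index arithmetic; idx_col_major follows the same formula as the IDX2C macro from the cuBLAS documentation, while the function names themselves and to_column_major are hypothetical helpers, not part of any library.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Position of element (row, col) in a column-major matrix whose
// leading dimension (number of rows in storage) is ld.
inline std::size_t idx_col_major(std::size_t row, std::size_t col,
                                 std::size_t ld) {
    return col * ld + row;
}

// Copy a rows x cols matrix from row-major to column-major layout.
std::vector<float> to_column_major(const std::vector<float>& a,
                                   std::size_t rows, std::size_t cols) {
    assert(a.size() == rows * cols);
    std::vector<float> out(a.size());
    for (std::size_t r = 0; r < rows; ++r) {
        for (std::size_t c = 0; c < cols; ++c) {
            out[idx_col_major(r, c, rows)] = a[r * cols + c];
        }
    }
    return out;
}
```

For the 2 x 3 matrix with rows (1, 2, 3) and (4, 5, 6), the row-major array {1, 2, 3, 4, 5, 6} becomes {1, 4, 2, 5, 3, 6} in column-major order.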

#include "../common/common.h"

#include

3. cuRAND

cuRAND provides several methods for generating random numbers quickly on the GPU.

#include "../common/common.h"

#include