Building a MongoDB Cluster: Sharding

1 Cluster Architecture Overview

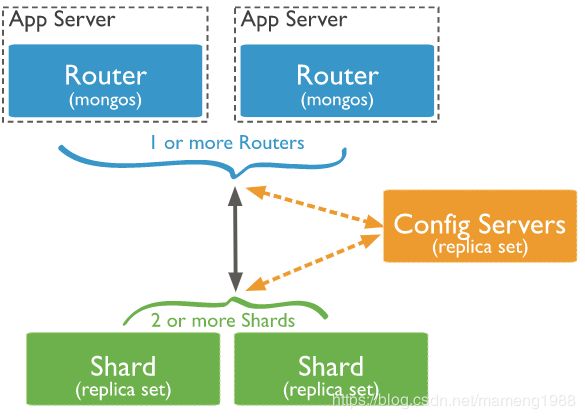

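1.1 Sharded Cluster Architecture

- shard: Each shard contains a subset of the sharded data, and each shard can be deployed as a replica set. Collections can be sharded or left unsharded; data in unsharded collections is stored on the database's primary shard. In this deployment each shard is a 3-member replica set.

- mongos: mongos acts as a query router, providing the interface between client applications and the sharded cluster. One or more mongos routers can be deployed.

- config servers: Config servers store the cluster's metadata and configuration settings. Starting with MongoDB 3.4, config servers must be deployed as a replica set; this deployment uses a 3-member replica set.

Note: applications and clients must connect to mongos to interact with the data in the cluster. Never connect to an individual shard to perform reads or writes.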

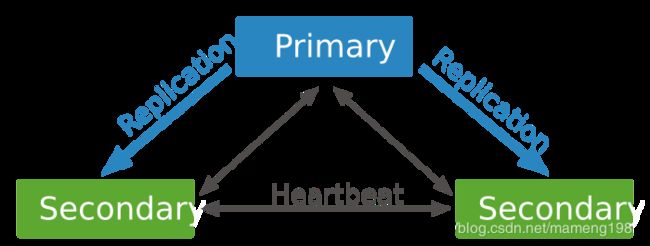

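1.2 Replica Set Architecture of a Shard

1.3 Replica Set Architecture of the Config Servers

2 Sharding Strategies

2.1 Hashed Sharding

- Hashed sharding uses a hashed index to partition data across the sharded cluster. A hashed index computes the hash of a single field's value and uses it as the index value, and this hashed value serves as the shard key.

- When resolving queries that use a hashed index, MongoDB computes the hash values automatically, so applications do not need to compute them.

- Distributing data by hash value produces a more even distribution, especially for data sets in which the shard key changes monotonically.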
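The following Node.js sketch illustrates the last point. It hashes the sequential values 1 to 10000 and counts how many land in each of four equal ranges of the signed 64-bit hash space. The range boundaries are -4611686018427387902, 0 and 4611686018427387902, which also appear in the `articledb.comment` chunk listing in section 4.

The hash function is an explicit assumption. It takes the first 8 bytes of an MD5 digest of the decimal string and reads them as a signed little-endian integer. This is only a stand-in: MongoDB computes its own 64-bit hash over the BSON value, so individual hash values will not match MongoDB's. `hash64`, `chunkIndex` and `countPerChunk` are hypothetical helper names.

```javascript
// Sketch: how strictly increasing keys spread over hash-space chunks.
// hash64 is an illustrative stand-in, NOT MongoDB's hashed-index function.
const crypto = require("crypto");

function hash64(value) {
  const digest = crypto.createHash("md5").update(String(value)).digest();
  return digest.readBigInt64LE(0); // signed 64-bit value as a BigInt
}

// Exclusive upper bounds of the first three chunks; the last chunk runs to $maxKey.
const HASH_BOUNDS = [-4611686018427387902n, 0n, 4611686018427387902n];

function chunkIndex(bounds, key) {
  // A key belongs to the first chunk whose upper bound is greater than the key.
  let i = 0;
  while (i < bounds.length && key >= bounds[i]) i++;
  return i;
}

function countPerChunk(keys, bounds) {
  const counts = new Array(bounds.length + 1).fill(0);
  for (const k of keys) counts[chunkIndex(bounds, k)]++;
  return counts;
}

const ids = Array.from({ length: 10000 }, (_, i) => i + 1); // monotonically increasing keys
const hashedCounts = countPerChunk(ids.map(hash64), HASH_BOUNDS);
console.log("documents per hash-range chunk:", hashedCounts);
```

Each of the four ranges should receive roughly a quarter of the keys, even though the keys themselves are strictly increasing.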

2.2 Ranged Sharding

3 Deployment

3.1 Environment

| Hostname | IP address | OS version | MongoDB version | Config Server port | Shard Server 1 port | Shard Server 2 port | Shard Server 3 port | Role |
|---|---|---|---|---|---|---|---|---|
| mongo1.example.com | 192.168.200.1 | CentOS 7.5 | 4.0 | 27027 (Primary) | 27017 (Primary) | 27018 (Arbiter) | 27019 (Secondary) | Config server and shard servers |
| mongo2.example.com | 192.168.200.2 | CentOS 7.5 | 4.0 | 27027 (Secondary) | 27017 (Secondary) | 27018 (Primary) | 27019 (Arbiter) | Config server and shard servers |
| mongo3.example.com | 192.168.200.3 | CentOS 7.5 | 4.0 | 27027 (Secondary) | 27017 (Arbiter) | 27018 (Secondary) | 27019 (Primary) | Config server and shard servers |
| mongo4.example.com | 192.168.200.4 | CentOS 7.5 | 4.0 | - | - | - | - | mongos on port 27017 (for client connections) |

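Note: the official recommendation is to use logical DNS hostnames. This document therefore writes the mapping between each server name and its IP address into the /etc/hosts file on every server.

3.2 Deploy MongoDB

For installing MongoDB on the four servers in this environment, see: MongoDB Installation

Create the directories required by this environment on the servers:

```bash
mkdir -p /data/mongodb/data/{configServer,shard1,shard2,shard3}
mkdir -p /data/mongodb/{log,pid}
```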

3.3 Create the Config Server Replica Set

Configuration file on each of the 3 servers: /data/mongodb/configServer.conf

On mongo1.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/configServer.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/configServer"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/configServer.pid"
net:
  bindIp: mongo1.example.com
  port: 27027
replication:
  replSetName: cs0
sharding:
  clusterRole: configsvr
```

On mongo2.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/configServer.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/configServer"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/configServer.pid"
net:
  bindIp: mongo2.example.com
  port: 27027
replication:
  replSetName: cs0
sharding:
  clusterRole: configsvr
```

On mongo3.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/configServer.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/configServer"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/configServer.pid"
net:
  bindIp: mongo3.example.com
  port: 27027
replication:
  replSetName: cs0
sharding:
  clusterRole: configsvr
```
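The three configuration files above differ only in `bindIp`. The shard files in sections 3.4 to 3.6 follow the same template, with a different name, port and `clusterRole`. This optional Node.js sketch is not part of the original procedure; it renders such a file from one template, assuming the directory layout created in 3.2. `renderMongodConf` is a hypothetical helper name.

```javascript
// Hypothetical helper: render a mongod YAML config for the layout used in this document.
// name: base name for log/data/pid paths, e.g. "configServer" or "shard1".
function renderMongodConf({ host, port, name, replSet, clusterRole }) {
  return [
    "systemLog:",
    "  destination: file",
    `  path: "/data/mongodb/log/${name}.log"`,
    "  logAppend: true",
    "storage:",
    `  dbPath: "/data/mongodb/data/${name}"`,
    "  journal:",
    "    enabled: true",
    "  wiredTiger:",
    "    engineConfig:",
    "      cacheSizeGB: 2",
    "processManagement:",
    "  fork: true",
    `  pidFilePath: "/data/mongodb/pid/${name}.pid"`,
    "net:",
    `  bindIp: ${host}`,
    `  port: ${port}`,
    "replication:",
    `  replSetName: ${replSet}`,
    "sharding:",
    `  clusterRole: ${clusterRole}`,
    "", // trailing newline
  ].join("\n");
}

// Example: the Config Server file for mongo2.example.com.
console.log(renderMongodConf({
  host: "mongo2.example.com", port: 27027,
  name: "configServer", replSet: "cs0", clusterRole: "configsvr",
}));
```

For a shard, pass for example `{ name: "shard1", replSet: "shard1", port: 27017, clusterRole: "shardsvr" }`. Save the output as /data/mongodb/<name>.conf on the matching host.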

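Start the Config Server on all three servers. From the MongoDB bin directory, run on each server:

```bash
./mongod -f /data/mongodb/configServer.conf
```

Connect to one of the Config Servers:

```bash
./mongo --host mongo1.example.com --port 27027
```

Output after a successful connection: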

MongoDB shell version v4.0.10

connecting to: mongodb://mongo1.example.com:27027/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("1a4d4252-11d0-40bb-90da-f144692be88d") }

MongoDB server version: 4.0.10

Server has startup warnings:

2020-06-14T14:28:56.013+0800 I CONTROL [initandlisten]

2020-06-14T14:28:56.013+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2020-06-14T14:28:56.013+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2020-06-14T14:28:56.013+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2020-06-14T14:28:56.013+0800 I CONTROL [initandlisten]

2020-06-14T14:28:56.013+0800 I CONTROL [initandlisten]

2020-06-14T14:28:56.013+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2020-06-14T14:28:56.013+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2020-06-14T14:28:56.014+0800 I CONTROL [initandlisten]

2020-06-14T14:28:56.014+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2020-06-14T14:28:56.014+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2020-06-14T14:28:56.014+0800 I CONTROL [initandlisten]

>

Configure the replica set:

```javascript
> rs.initiate(
  {
    _id: "cs0",
    configsvr: true,
    members: [
      { _id : 0, host : "mongo1.example.com:27027" },
      { _id : 1, host : "mongo2.example.com:27027" },
      { _id : 2, host : "mongo3.example.com:27027" }
    ]
  }
)
```

Result:

{

"ok" : 1,

"operationTime" : Timestamp(1592116308, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1592116308, 1),

"electionId" : ObjectId("000000000000000000000000")

},

"lastCommittedOpTime" : Timestamp(0, 0),

"$clusterTime" : {

"clusterTime" : Timestamp(1592116308, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Check the replica set status:

```javascript
cs0:PRIMARY> rs.status()
```

Result: the three servers are shown as 1 Primary and 2 Secondaries.

{

"set" : "cs0",

"date" : ISODate("2020-06-14T06:33:31.348Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"configsvr" : true,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1590494006, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1590494006, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1590494006, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1590494006, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1590493976, 1),

"members" : [

{

"_id" : 0,

"name" : "mongo1.example.com:27027",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 277,

"optime" : {

"ts" : Timestamp(1590494006, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-06-14T06:33:26Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1590493919, 1),

"electionDate" : ISODate("2020-06-14T06:31:59Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "mongo2.example.com:27027",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 102,

"optime" : {

"ts" : Timestamp(1590494006, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1590494006, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-06-14T06:33:26Z"),

"optimeDurableDate" : ISODate("2020-06-14T06:33:26Z"),

"lastHeartbeat" : ISODate("2020-06-14T06:33:29.385Z"),

"lastHeartbeatRecv" : ISODate("2020-06-14T06:33:29.988Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "mongo1.example.com:27027",

"syncSourceHost" : "mongo1.example.com:27027",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "mongo3.example.com:27027",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 102,

"optime" : {

"ts" : Timestamp(1590494006, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1590494006, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-06-14T06:33:26Z"),

"optimeDurableDate" : ISODate("2020-06-14T06:33:26Z"),

"lastHeartbeat" : ISODate("2020-06-14T06:33:29.384Z"),

"lastHeartbeatRecv" : ISODate("2020-06-14T06:33:29.868Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "mongo1.example.com:27027",

"syncSourceHost" : "mongo1.example.com:27027",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1590494006, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1590493908, 1),

"electionId" : ObjectId("7fffffff0000000000000001")

},

"lastCommittedOpTime" : Timestamp(1590494006, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1590494006, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Create an administrative user:

```javascript
> use admin
> db.createUser(
  {
    user: "myAdmin",
    pwd: "qwe123",
    roles: [ { role: "userAdminAnyDatabase", db: "admin" }, "readWriteAnyDatabase" ]
  }
)
```

Enable login authentication and internal authentication on the Config Servers

Internal authentication uses a keyfile. Create the keyfile on one of the servers:

```bash
openssl rand -base64 756 > /data/mongodb/keyfile
chmod 400 /data/mongodb/keyfile
```

Copy this keyfile to the other three servers and make sure its permissions are 400 on each of them.
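For reference, `openssl rand -base64 756` produces 756 random bytes encoded as base64, which is 1008 characters. openssl wraps the output over several lines. MongoDB expects keyfile contents of 6 to 1024 characters from the base64 set, ignoring whitespace.

The Node.js sketch below generates an equivalent key and checks it against those rules. `generateKey` and `isValidKeyfile` are hypothetical helper names. Writing the file with owner-read-only permissions, as `chmod 400` does, is shown only as a comment.

```javascript
// Sketch: generate a keyfile like `openssl rand -base64 756` and check it against
// MongoDB's keyfile rules (6..1024 base64 characters, whitespace ignored).
const crypto = require("crypto");

function generateKey(numBytes = 756) {
  const b64 = crypto.randomBytes(numBytes).toString("base64");
  // Wrap at 64 columns like openssl; MongoDB ignores the newlines.
  return b64.match(/.{1,64}/g).join("\n") + "\n";
}

function isValidKeyfile(contents) {
  const stripped = contents.replace(/\s+/g, "");
  return stripped.length >= 6 &&
         stripped.length <= 1024 &&
         /^[A-Za-z0-9+/=]+$/.test(stripped);
}

const key = generateKey();
console.log(key.replace(/\s+/g, "").length, isValidKeyfile(key));
// To persist it with owner-read-only permissions, for example:
// require("fs").writeFileSync("/data/mongodb/keyfile", key, { mode: 0o400 });
```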

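Enable authentication in the /data/mongodb/configServer.conf configuration file:

```yaml
security:
  keyFile: "/data/mongodb/keyfile"
  clusterAuthMode: "keyFile"
  authorization: "enabled"
```

Then shut down the two Secondaries one after the other, and then the Primary:

```bash
./mongod -f /data/mongodb/configServer.conf --shutdown
```

Start the Primary first, then the two Secondaries:

```bash
./mongod -f /data/mongodb/configServer.conf
```

Log in to mongo with the username and password:

```bash
mongo --host mongo1.example.com --port 27027 -u myAdmin --authenticationDatabase "admin" -p 'qwe123'
```

Note: the user was not granted cluster administration privileges when it was created. After logging in, it can therefore list all databases but cannot view the replica set status:

```javascript
cs0:PRIMARY> rs.status()
```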

{

"operationTime" : Timestamp(1590495861, 1),

"ok" : 0,

"errmsg" : "not authorized on admin to execute command { replSetGetStatus: 1.0, lsid: { id: UUID(\"59dd4dc0-b34f-43b9-a341-a2f43ec1dcfa\") }, $clusterTime: { clusterTime: Timestamp(1560495849, 1), signature: { hash: BinData(0, A51371EC5AA54BB1B05ED9342BFBF03CBD87F2D9), keyId: 6702270356301807629 } }, $db: \"admin\" }",

"code" : 13,

"codeName" : "Unauthorized",

"$gleStats" : {

"lastOpTime" : Timestamp(0, 0),

"electionId" : ObjectId("7fffffff0000000000000002")

},

"lastCommittedOpTime" : Timestamp(1590495861, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1590495861, 1),

"signature" : {

"hash" : BinData(0,"3UkTpXxyU8WI1TyS+u5vgewueGA="),

"keyId" : NumberLong("6702270356301807629")

}

}

}

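```javascript
cs0:PRIMARY> show dbs
admin 0.000GB
config 0.000GB
local 0.000GB
```

Grant the user cluster administration privileges:

```javascript
cs0:PRIMARY> use admin
cs0:PRIMARY> db.system.users.find()  // view the current user information
cs0:PRIMARY> db.grantRolesToUser("myAdmin", ["clusterAdmin"])
```

View the replica set status again:

```javascript
cs0:PRIMARY> rs.status()
```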

{

"set" : "cs0",

"date" : ISODate("2020-06-14T07:18:20.223Z"),

"myState" : 1,

"term" : NumberLong(2),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"configsvr" : true,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1590496690, 1),

"t" : NumberLong(2)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1590496690, 1),

"t" : NumberLong(2)

},

"appliedOpTime" : {

"ts" : Timestamp(1590496690, 1),

"t" : NumberLong(2)

},

"durableOpTime" : {

"ts" : Timestamp(1590496690, 1),

"t" : NumberLong(2)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1590496652, 1),

"members" : [

{

"_id" : 0,

"name" : "mongo1.example.com:27027",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 1123,

"optime" : {

"ts" : Timestamp(1590496690, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2020-06-14T07:18:10Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1590495590, 1),

"electionDate" : ISODate("2020-06-14T06:59:50Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "mongo2.example.com:27027",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 1113,

"optime" : {

"ts" : Timestamp(1590496690, 1),

"t" : NumberLong(2)

},

"optimeDurable" : {

"ts" : Timestamp(1590496690, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2020-06-14T07:18:10Z"),

"optimeDurableDate" : ISODate("2020-06-14T07:18:10Z"),

"lastHeartbeat" : ISODate("2020-06-14T07:18:18.974Z"),

"lastHeartbeatRecv" : ISODate("2020-06-14T07:18:19.142Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "mongo1.example.com:27027",

"syncSourceHost" : "mongo1.example.com:27027",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "mongo3.example.com:27027",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 1107,

"optime" : {

"ts" : Timestamp(1590496690, 1),

"t" : NumberLong(2)

},

"optimeDurable" : {

"ts" : Timestamp(1590496690, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2020-06-14T07:18:10Z"),

"optimeDurableDate" : ISODate("2020-06-14T07:18:10Z"),

"lastHeartbeat" : ISODate("2020-06-14T07:18:18.999Z"),

"lastHeartbeatRecv" : ISODate("2020-06-14T07:18:18.998Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "mongo2.example.com:27027",

"syncSourceHost" : "mongo2.example.com:27027",

"syncSourceId" : 1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1590496690, 1),

"$gleStats" : {

"lastOpTime" : {

"ts" : Timestamp(1590496631, 1),

"t" : NumberLong(2)

},

"electionId" : ObjectId("7fffffff0000000000000002")

},

"lastCommittedOpTime" : Timestamp(1590496690, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1590496690, 1),

"signature" : {

"hash" : BinData(0,"lHiVw7WeO81npTi2IMW16reAN84="),

"keyId" : NumberLong("6702270356301807629")

}

}

}

3.4 Deploy Shard Server 1 (Shard1) and Its Replica Set

Configuration file on each of the 3 servers: /data/mongodb/shard1.conf

On mongo1.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard1.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard1"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard1.pid"
net:
  bindIp: mongo1.example.com
  port: 27017
replication:
  replSetName: "shard1"
sharding:
  clusterRole: shardsvr
```

On mongo2.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard1.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard1"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard1.pid"
net:
  bindIp: mongo2.example.com
  port: 27017
replication:
  replSetName: "shard1"
sharding:
  clusterRole: shardsvr
```

On mongo3.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard1.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard1"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard1.pid"
net:
  bindIp: mongo3.example.com
  port: 27017
replication:
  replSetName: "shard1"
sharding:
  clusterRole: shardsvr
```

Start the shard on all three servers:

```bash
./mongod -f /data/mongodb/shard1.conf
```

Connect to the shard on the server that is to become the Primary:

```bash
./mongo --host mongo1.example.com --port 27017
```

Result:

MongoDB shell version v4.0.10

connecting to: mongodb://mongo1.example.net:27017/?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("91e76384-cdae-411f-ab88-b7a8bd4555d1") }

MongoDB server version: 4.0.10

Server has startup warnings:

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten]

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten]

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten]

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten]

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2020-06-14T15:32:39.243+0800 I CONTROL [initandlisten]

>

Configure the replica set:

```javascript
> rs.initiate(
  {
    _id : "shard1",
    members: [
      { _id : 0, host : "mongo1.example.com:27017", priority: 2 },
      { _id : 1, host : "mongo2.example.com:27017", priority: 1 },
      { _id : 2, host : "mongo3.example.com:27017", arbiterOnly: true }
    ]
  }
)
```

Note: the higher a member's priority value, the more likely it is to be elected Primary.
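The sketch below is a simplified illustration of the priority rule. It picks the data-bearing member with the highest priority from an `rs.initiate()` members array, and it assumes all members are healthy and equally up to date. Real elections also depend on replication state, votes and timing. Arbiters hold no data and can never become Primary. Members without an explicit `priority` are treated as priority 1, MongoDB's default. `expectedPrimary` is a hypothetical helper name.

```javascript
// Hypothetical helper: which member should become Primary if all members are healthy
// and caught up? Simplification: ignores optime, votes and election timing.
function expectedPrimary(members) {
  const candidates = members
    .filter((m) => !m.arbiterOnly)                   // arbiters never become Primary
    .map((m) => ({ host: m.host, priority: m.priority === undefined ? 1 : m.priority }))
    .filter((m) => m.priority > 0);                  // priority 0 members are never elected
  if (candidates.length === 0) return null;
  candidates.sort((a, b) => b.priority - a.priority); // highest priority first
  return candidates[0].host;
}

// Members from the Shard1 rs.initiate() call above.
const shard1Members = [
  { _id: 0, host: "mongo1.example.com:27017", priority: 2 },
  { _id: 1, host: "mongo2.example.com:27017", priority: 1 },
  { _id: 2, host: "mongo3.example.com:27017", arbiterOnly: true },
];
console.log(expectedPrimary(shard1Members)); // mongo1.example.com:27017
```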

Check the replica set status:

```javascript
shard1:PRIMARY> rs.status()
```

{

"set" : "shard1",

"date" : ISODate("2020-06-20T01:33:21.809Z"),

"myState" : 1,

"term" : NumberLong(2),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1590994393, 1),

"t" : NumberLong(2)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1590994393, 1),

"t" : NumberLong(2)

},

"appliedOpTime" : {

"ts" : Timestamp(1590994393, 1),

"t" : NumberLong(2)

},

"durableOpTime" : {

"ts" : Timestamp(1590994393, 1),

"t" : NumberLong(2)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1590994373, 1),

"members" : [

{

"_id" : 0,

"name" : "mongo1.example.net:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 43,

"optime" : {

"ts" : Timestamp(1590994393, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2020-06-20T01:33:13Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1590994371, 1),

"electionDate" : ISODate("2020-06-20T01:32:51Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "mongo2.example.com:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 36,

"optime" : {

"ts" : Timestamp(1590994393, 1),

"t" : NumberLong(2)

},

"optimeDurable" : {

"ts" : Timestamp(1590994393, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2020-06-20T01:33:13Z"),

"optimeDurableDate" : ISODate("2020-06-20T01:33:13Z"),

"lastHeartbeat" : ISODate("2020-06-20T01:33:19.841Z"),

"lastHeartbeatRecv" : ISODate("2020-06-20T01:33:21.164Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "mongo1.example.com:27017",

"syncSourceHost" : "mongo1.example.com:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "mongo3.example.com:27017",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 32,

"lastHeartbeat" : ISODate("2020-06-20T01:33:19.838Z"),

"lastHeartbeatRecv" : ISODate("2020-06-20T01:33:20.694Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1

}

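The output above shows the three servers as 1 Primary, 1 Secondary and 1 Arbiter.

Create an administrative user:

```javascript
> use admin
> db.createUser(
  {
    user: "myAdmin",
    pwd: "qwe123",
    roles: [ { role: "userAdminAnyDatabase", db: "admin" }, "readWriteAnyDatabase", "clusterAdmin" ]
  }
)
```

Enable login authentication and internal authentication on Shard1 by adding the following to /data/mongodb/shard1.conf:

```yaml
security:
  keyFile: "/data/mongodb/keyfile"
  clusterAuthMode: "keyFile"
  authorization: "enabled"
```

Then shut down the Arbiter, the Secondary and the Primary, in that order:

```bash
./mongod -f /data/mongodb/shard1.conf --shutdown
```

Start the Primary first, then the Secondary and the Arbiter:

```bash
./mongod -f /data/mongodb/shard1.conf
```

Log in to mongo with the username and password:

```bash
mongo --host mongo1.example.com --port 27017 -u myAdmin --authenticationDatabase "admin" -p 'qwe123'
```

3.5 Deploy Shard Server 2 (Shard2) and Its Replica Set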

Configuration file on each of the 3 servers: /data/mongodb/shard2.conf

On mongo1.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard2.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard2"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard2.pid"
net:
  bindIp: mongo1.example.com
  port: 27018
replication:
  replSetName: "shard2"
sharding:
  clusterRole: shardsvr
```

On mongo2.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard2.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard2"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard2.pid"
net:
  bindIp: mongo2.example.com
  port: 27018
replication:
  replSetName: "shard2"
sharding:
  clusterRole: shardsvr
```

On mongo3.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard2.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard2"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard2.pid"
net:
  bindIp: mongo3.example.com
  port: 27018
replication:
  replSetName: "shard2"
sharding:
  clusterRole: shardsvr
```

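Start the shard on all three servers:

```bash
./mongod -f /data/mongodb/shard2.conf
```

Connect to the shard on the server that is to become the Primary (mongo2.example.com for Shard2):

```bash
./mongo --host mongo2.example.com --port 27018
```

Configure the replica set. Note that the roles of the three servers differ from those in Shard1:

```javascript
> rs.initiate(
  {
    _id : "shard2",
    members: [
      { _id : 0, host : "mongo1.example.com:27018", arbiterOnly: true },
      { _id : 1, host : "mongo2.example.com:27018", priority: 2 },
      { _id : 2, host : "mongo3.example.com:27018", priority: 1 }
    ]
  }
)
```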
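The three shards rotate the Primary, Secondary and Arbiter roles across the hosts, so that each server hosts exactly one shard Primary and one Arbiter (see the table in 3.1). The Node.js sketch below reproduces that rotation and rebuilds the `rs.initiate()` documents used for Shard1, Shard2 and Shard3. The function name `shardInitConfig` and the rotation formula are illustrative assumptions that simply match the layout chosen in this document.

```javascript
// Illustrative sketch: rebuild the rs.initiate() documents for the three shards,
// rotating roles so each host carries exactly one shard Primary.
const HOSTS = ["mongo1.example.com", "mongo2.example.com", "mongo3.example.com"];

function shardInitConfig(shardNo) {        // shardNo: 1, 2 or 3
  const port = 27016 + shardNo;            // shard1 -> 27017, shard2 -> 27018, shard3 -> 27019
  const primary = (shardNo - 1) % 3;       // index into HOSTS
  const secondary = shardNo % 3;
  const members = HOSTS.map((host, i) => {
    const m = { _id: i, host: `${host}:${port}` };
    if (i === primary) m.priority = 2;
    else if (i === secondary) m.priority = 1;
    else m.arbiterOnly = true;             // the remaining host is the Arbiter
    return m;
  });
  return { _id: `shard${shardNo}`, members };
}

// The printed document can be passed to rs.initiate(...) on the intended Primary.
console.log(JSON.stringify(shardInitConfig(2), null, 2));
```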

Check the replica set status:

```javascript
shard2:PRIMARY> rs.status()
```

{

"set" : "shard2",

"date" : ISODate("2020-06-20T01:59:08.996Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1590995943, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1560995943, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1590995943, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1590995943, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1590995913, 1),

"members" : [

{

"_id" : 0,

"name" : "mongo1.example.com:27018",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 107,

"lastHeartbeat" : ISODate("2020-06-20T01:59:08.221Z"),

"lastHeartbeatRecv" : ISODate("2020-06-20T01:59:07.496Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 1,

"name" : "mongo2.example.com:27018",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 412,

"optime" : {

"ts" : Timestamp(1590995943, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-06-20T01:59:03Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1590995852, 1),

"electionDate" : ISODate("2020-06-20T01:57:32Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 2,

"name" : "mongo3.example.com:27018",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 107,

"optime" : {

"ts" : Timestamp(1590995943, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1590995943, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-06-20T01:59:03Z"),

"optimeDurableDate" : ISODate("2020-06-20T01:59:03Z"),

"lastHeartbeat" : ISODate("2020-06-20T01:59:08.220Z"),

"lastHeartbeatRecv" : ISODate("2020-06-20T01:59:08.716Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "mongo2.example.com:27018",

"syncSourceHost" : "mongo2.example.com:27018",

"syncSourceId" : 1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1590995943, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1590995943, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Result: the three servers are shown as 1 Primary, 1 Secondary and 1 Arbiter.

To configure login authentication and the administrative user, follow the same steps as for Shard1, using /data/mongodb/shard2.conf and port 27018.

3.6 Deploy Shard Server 3 (Shard3) and Its Replica Set

Configuration file on each of the 3 servers: /data/mongodb/shard3.conf

On mongo1.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard3.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard3"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard3.pid"
net:
  bindIp: mongo1.example.com
  port: 27019
replication:
  replSetName: "shard3"
sharding:
  clusterRole: shardsvr
```

On mongo2.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard3.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard3"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard3.pid"
net:
  bindIp: mongo2.example.com
  port: 27019
replication:
  replSetName: "shard3"
sharding:
  clusterRole: shardsvr
```

On mongo3.example.com:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard3.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard3"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard3.pid"
net:
  bindIp: mongo3.example.com
  port: 27019
replication:
  replSetName: "shard3"
sharding:
  clusterRole: shardsvr
```

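Start the shard on all three servers:

```bash
./mongod -f /data/mongodb/shard3.conf
```

Connect to the shard on the server that is to become the Primary (mongo3.example.com for Shard3):

```bash
./mongo --host mongo3.example.com --port 27019
```

Configure the replica set. Note that the roles of the three servers differ again:

```javascript
> rs.initiate(
  {
    _id : "shard3",
    members: [
      { _id : 0, host : "mongo1.example.com:27019", priority: 1 },
      { _id : 1, host : "mongo2.example.com:27019", arbiterOnly: true },
      { _id : 2, host : "mongo3.example.com:27019", priority: 2 }
    ]
  }
)
```

Check the replica set status:

```javascript
shard3:PRIMARY> rs.status()
```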

{

"set" : "shard3",

"date" : ISODate("2020-06-20T02:21:56.990Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1590997312, 2),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1590997312, 2),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1590997312, 2),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1590997312, 2),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1590997312, 1),

"members" : [

{

"_id" : 0,

"name" : "mongo1.example.com:27019",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 17,

"optime" : {

"ts" : Timestamp(1590997312, 2),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1590997312, 2),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-06-20T02:21:52Z"),

"optimeDurableDate" : ISODate("2020-06-20T02:21:52Z"),

"lastHeartbeat" : ISODate("2020-06-20T02:21:56.160Z"),

"lastHeartbeatRecv" : ISODate("2020-06-20T02:21:55.155Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "mongo3.example.com:27019",

"syncSourceHost" : "mongo3.example.com:27019",

"syncSourceId" : 2,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 1,

"name" : "mongo2.example.com:27019",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 17,

"lastHeartbeat" : ISODate("2020-06-20T02:21:56.159Z"),

"lastHeartbeatRecv" : ISODate("2020-06-20T02:21:55.021Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "mongo3.example.com:27019",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 45,

"optime" : {

"ts" : Timestamp(1590997312, 2),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2020-06-20T02:21:52Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1590997310, 1),

"electionDate" : ISODate("2020-06-20T02:21:50Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

}

],

"ok" : 1,

"operationTime" : Timestamp(1590997312, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1590997312, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

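Result: the three servers are shown as 1 Primary, 1 Secondary and 1 Arbiter.

To configure login authentication and the administrative user, follow the same steps as for Shard1, using /data/mongodb/shard3.conf and port 27019.

3.7 Configure mongos to Connect to the Sharded Cluster

On the mongos server, create the mongos configuration file /data/mongodb/mongos.conf. The table in 3.1 lists this host as mongo4.example.com, while the configuration and commands below refer to it as mongos.example.com. Make sure the name you use resolves to 192.168.200.4 in /etc/hosts.

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/mongos.log"
  logAppend: true
processManagement:
  fork: true
net:
  port: 27017
  bindIp: mongos.example.com
sharding:
  configDB: "cs0/mongo1.example.com:27027,mongo2.example.com:27027,mongo3.example.com:27027"
security:
  keyFile: "/data/mongodb/keyfile"
  clusterAuthMode: "keyFile"
```

Start the mongos service:

```bash
./mongos -f /data/mongodb/mongos.conf
```

Connect to mongos:

```bash
./mongo --host mongos.example.com --port 27017 -u myAdmin --authenticationDatabase "admin" -p 'qwe123'
```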
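Applications reach the cluster through mongos in the same way. The command-line options above map onto a standard `mongodb://` connection string, as in this minimal Node.js sketch. The hypothetical helper `mongosUri` percent-encodes the credentials, as the URI format requires for special characters. It also sets `authSource=admin` to match `--authenticationDatabase "admin"`. If several mongos routers are deployed, all of them can be listed in `hosts`.

```javascript
// Hypothetical helper: build a mongodb:// URI equivalent to
//   mongo --host <host> --port <port> -u <user> -p <pwd> --authenticationDatabase admin
function mongosUri({ hosts, user, password, authSource = "admin" }) {
  const cred = `${encodeURIComponent(user)}:${encodeURIComponent(password)}@`;
  return `mongodb://${cred}${hosts.join(",")}/?authSource=${encodeURIComponent(authSource)}`;
}

console.log(mongosUri({
  hosts: ["mongos.example.com:27017"],
  user: "myAdmin",
  password: "qwe123",
}));
// mongodb://myAdmin:qwe123@mongos.example.com:27017/?authSource=admin
```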

Check the current cluster status:

```javascript
mongos> sh.status()
```

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5d0af6ed4fa51757cd032108")

}

shards:

active mongoses:

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

Add Shard1 and Shard2 to the cluster first. Shard3 will be added after data has been inserted, to simulate scaling out.

```javascript
mongos> sh.addShard("shard1/mongo1.example.com:27017,mongo2.example.com:27017,mongo3.example.com:27017")
mongos> sh.addShard("shard2/mongo1.example.com:27018,mongo2.example.com:27018,mongo3.example.com:27018")
```
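Both `sh.addShard()` and the `configDB` setting take the same `<replicaSetName>/<host:port>,...` seed-list form. This minimal Node.js sketch builds these strings from the host list in 3.1 instead of typing them by hand. `seedList` is a hypothetical helper name.

```javascript
// Hypothetical helper: build "<replSetName>/<host>:<port>,..." seed lists.
function seedList(replSetName, hosts, port) {
  return `${replSetName}/` + hosts.map((h) => `${h}:${port}`).join(",");
}

const HOSTS = ["mongo1.example.com", "mongo2.example.com", "mongo3.example.com"];

console.log(seedList("cs0", HOSTS, 27027));    // value for sharding.configDB
console.log(seedList("shard1", HOSTS, 27017)); // argument for sh.addShard()
console.log(seedList("shard2", HOSTS, 27018));
```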

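Check the cluster status after running these commands. The shard host strings list only the data-bearing members; the arbiters are not included.

```javascript
mongos> sh.status()
```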

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5d0af6ed4fa51757cd032108")

}

shards:

{ "_id" : "shard1", "host" : "shard1/mongo1.example.com:27017,mongo2.example.com:27017", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/mongo2.example.com:27018,mongo3.example.com:27018", "state" : 1 }

active mongoses:

"4.0.10" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

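4 Testing

First, check the default chunk size (64 MB):

```javascript
mongos> use config
switched to db config
mongos> db.settings.find()
{ "_id" : "chunksize", "value" : 64 }
```

To make testing easier, set the chunk size to 1 MB:

```javascript
mongos> db.settings.save({ "_id": "chunksize", "value": 1 })
```

Create the database and enable sharding for it:

```javascript
mongos> sh.enableSharding("user_center")
```

Create the "log" collection and shard it:

```javascript
mongos> sh.shardCollection("user_center.log", { "id": 1 })
```

The log collection in the user_center database uses the shard key {"id": 1}. This shard key distributes data according to the value of the id field, which is ranged sharding.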
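With ranged sharding, each chunk owns a contiguous interval [lower, upper) of shard key values, and mongos routes a document to the chunk whose interval contains its key. The Node.js sketch below models that lookup with a sorted array of split points. The split points are made-up example values, and `findChunk` is a hypothetical helper.

The sketch also shows why a monotonically increasing key such as `id` is a poor fit. Every newly inserted id is larger than all existing split points, so all new writes land in the last chunk.

```javascript
// Sketch of ranged routing: chunk i covers [splits[i-1], splits[i]), with the
// first chunk starting at $minKey and the last ending at $maxKey.
// The split points below are hypothetical.
function findChunk(splits, key) {
  // Binary search for the number of split points <= key.
  let lo = 0, hi = splits.length;
  while (lo < hi) {
    const mid = (lo + hi) >> 1;
    if (splits[mid] <= key) lo = mid + 1;
    else hi = mid;
  }
  return lo; // index of the chunk containing key
}

const splits = [1000, 2000, 3000]; // 4 chunks
const newIds = Array.from({ length: 500 }, (_, i) => 3001 + i); // ids inserted later

const hits = new Array(splits.length + 1).fill(0);
for (const id of newIds) hits[findChunk(splits, id)]++;
console.log(hits); // every new insert goes to the last chunk
```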

Check the cluster status:

```javascript
mongos> sh.status()
```

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5ef991c0e4405bff37a72c62")

}

shards:

{ "_id" : "shard1", "host" : "shard1/mongo1.wocloud.com:27017,mongo2.wocloud.com:27017", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/mongo2.wocloud.com:27018,mongo3.wocloud.com:27018", "state" : 1 }

active mongoses:

"4.0.10" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "articledb", "primary" : "shard1", "partitioned" : true, "version" : { "uuid" : UUID("c55f15d5-d5f9-4fea-b5d2-a4cf53aac750"), "lastMod" : 1 } }

articledb.comment

shard key: { "_id" : "hashed" }

unique: false

balancing: true

chunks:

shard1 2

shard2 2

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : NumberLong("-4611686018427387902") } on : shard1 Timestamp(1, 0)

{ "_id" : NumberLong("-4611686018427387902") } -->> { "_id" : NumberLong(0) } on : shard1 Timestamp(1, 1)

{ "_id" : NumberLong(0) } -->> { "_id" : NumberLong("4611686018427387902") } on : shard2 Timestamp(1, 2)

{ "_id" : NumberLong("4611686018427387902") } -->> { "_id" : { "$maxKey" : 1 } } on : shard2 Timestamp(1, 3)

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard1 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

{ "_id" : "user_center", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("badbc29b-1c41-42ca-b06c-7803c960076e"), "lastMod" : 1 } }

user_center.log

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shard2 1

{ "id" : { "$minKey" : 1 } } -->> { "id" : { "$maxKey" : 1 } } on : shard2 Timestamp(1, 0)

Bulk-insert test data:

mongos> use user_center

mongos> for(var i=1;i<50000;i++) {db.log.save({"id":i,"message":"message"+i});}
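Saving 49,999 documents one `save()` call at a time is slow. One possible alternative, sketched below, is to build the same documents in batches and send each batch with `insertMany()`. The helper name `buildLogBatches` and the batch size of 1000 are illustrative assumptions, not part of the original procedure; the `db` branch is intended for the mongo shell (connected to mongos after `use user_center`) and has not been verified against this cluster.

```javascript
// Hypothetical helper: builds {id, message} documents for ids first..last
// and groups them into arrays of at most batchSize documents.
function buildLogBatches(first, last, batchSize) {
  var batches = [];
  var batch = [];
  for (var i = first; i <= last; i++) {
    batch.push({ id: i, message: "message" + i });
    if (batch.length === batchSize) {
      batches.push(batch);
      batch = [];
    }
  }
  if (batch.length > 0) batches.push(batch); // leftover partial batch
  return batches;
}

// Same id range as the loop above (1 to 49999).
var batches = buildLogBatches(1, 49999, 1000);

// `db` only exists inside the mongo shell; under plain Node.js this is skipped.
if (typeof db !== "undefined") {
  batches.forEach(function (b) {
    db.log.insertMany(b);
  });
}
```

Batching trades many small round trips for fewer larger ones; mongos still splits each batch by shard key and forwards the pieces to the owning shards.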

Run the following command:

mongos> db.log.stats()

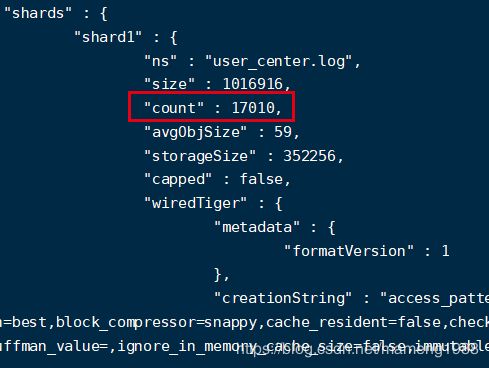

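The data is not evenly distributed across the shards. This is caused by the shard key strategy: id increases monotonically, so with ranged sharding new documents keep landing in the same top chunk. Change the sharding strategy to hashed: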

mongos> db.log.drop()

mongos> sh.shardCollection("user_center.log",{"id":"hashed"})

mongos> for(var i=1;i<50000;i++) {db.log.save({"id":i,"message":"message"+i});}

mongos> db.log.stats()
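Why does a hashed key fix the skew? The sketch below hashes the same ids 1 to 49999 and counts how many fall into each of the four hash ranges whose split points appear in the `sh.status()` output for articledb.comment. As a stand-in hash it takes the first 8 bytes of MD5 over the id's decimal string; MongoDB's real hashed index hashes the BSON-encoded value, so the exact placement differs and only the spreading effect is illustrated. It needs Node.js 12+ for `readBigInt64LE`.

```javascript
const crypto = require("crypto");

// Stand-in hash (NOT MongoDB's function): first 8 bytes of MD5 over the
// decimal string, read as a signed 64-bit integer.
function standInHash(id) {
  return crypto.createHash("md5").update(String(id)).digest().readBigInt64LE(0);
}

// Split points of the 4 initial chunks shown for articledb.comment.
const splits = [-4611686018427387902n, 0n, 4611686018427387902n];

// Index of the chunk [splits[i-1], splits[i]) that contains hash value h.
function chunkIndex(h) {
  let i = 0;
  while (i < splits.length && h >= splits[i]) i++;
  return i; // 0..3
}

const counts = [0, 0, 0, 0];
for (let id = 1; id < 50000; id++) counts[chunkIndex(standInHash(id))]++;
console.log(counts); // each count should be close to 49999 / 4
```

Under a ranged key the same ids would all sit in whichever chunk covers the top of the id range; after hashing, consecutive ids scatter across the whole 64-bit space and therefore across all chunks.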

Then run:

mongos> sh.status()

The resulting cluster status:

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5ef991c0e4405bff37a72c62")

}

shards:

{ "_id" : "shard1", "host" : "shard1/mongo1.wocloud.com:27017,mongo2.wocloud.com:27017", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/mongo2.wocloud.com:27018,mongo3.wocloud.com:27018", "state" : 1 }

active mongoses:

"4.0.10" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "articledb", "primary" : "shard1", "partitioned" : true, "version" : { "uuid" : UUID("c55f15d5-d5f9-4fea-b5d2-a4cf53aac750"), "lastMod" : 1 } }

articledb.comment

shard key: { "_id" : "hashed" }

unique: false

balancing: true

chunks:

shard1 2

shard2 2

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : NumberLong("-4611686018427387902") } on : shard1 Timestamp(1, 0)

{ "_id" : NumberLong("-4611686018427387902") } -->> { "_id" : NumberLong(0) } on : shard1 Timestamp(1, 1)

{ "_id" : NumberLong(0) } -->> { "_id" : NumberLong("4611686018427387902") } on : shard2 Timestamp(1, 2)

{ "_id" : NumberLong("4611686018427387902") } -->> { "_id" : { "$maxKey" : 1 } } on : shard2 Timestamp(1, 3)

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard1 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

{ "_id" : "user_center", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("badbc29b-1c41-42ca-b06c-7803c960076e"), "lastMod" : 1 } }

user_center.log

shard key: { "id" : "hashed" }

unique: false

balancing: true

chunks:

shard1 2

shard2 2

{ "id" : { "$minKey" : 1 } } -->> { "id" : NumberLong("-6148914691236517204") } on : shard1 Timestamp(1, 0)

{ "id" : NumberLong("-6148914691236517204") } -->> { "id" : NumberLong("-3074457345618258602") } on : shard1 Timestamp(1, 1)

{ "id" : NumberLong("-3074457345618258602") } -->> { "id" : NumberLong(0) } on : shard2 Timestamp(1, 2)

{ "id" : NumberLong(0) } -->> { "id" : NumberLong("3074457345618258602") } -->> { "id" : NumberLong("6148914691236517204") } -->> { "id" : { "$maxKey" : 1 } } on : shard2 Timestamp(1, 5)

As shown, the data is now distributed across the Shard1 and Shard2 shards.

Now add Shard3 to the cluster as well:
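The chunk boundaries printed for hashed collections are not arbitrary: they split the signed 64-bit hash space into equal intervals. The sketch below reproduces the values seen in the output (four chunks for articledb.comment, six ranges for user_center.log). The formula is reconstructed from memory of the MongoDB 4.0 server source for pre-splitting an empty hashed collection and should be treated as an assumption, not a documented API; BigInt is used so the arithmetic stays exact.

```javascript
// Assumed reconstruction of hashed-key initial split points (MongoDB 4.0).
const LLONG_MAX = 9223372036854775807n; // max signed 64-bit value

function hashedInitialSplits(numInitialChunks) {
  const n = BigInt(numInitialChunks);
  const interval = (LLONG_MAX / n) * 2n; // BigInt division truncates, like C++
  const splits = [];
  let current = 0n;
  if (n % 2n === 0n) {
    splits.push(current); // even chunk count: 0 is a split point
    current += interval;
  } else {
    current += interval / 2n; // odd chunk count: straddle 0
  }
  // Add symmetric +/- split points until n - 1 points exist.
  for (let i = 0n; i < (n - 1n) / 2n; i++) {
    splits.push(current, -current);
    current += interval;
  }
  return splits.sort((a, b) => (a < b ? -1 : a > b ? 1 : 0));
}

// split points: -4611686018427387902n, 0n, 4611686018427387902n
console.log(hashedInitialSplits(4));
console.log(hashedInitialSplits(6));
```

For six chunks the function yields ±3074457345618258602 and ±6148914691236517204 plus 0, the same boundaries that appear in the user_center.log chunk list.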

mongos> sh.addShard("shard3/mongo1.example.com:27019,mongo2.example.com:27019,mongo3.example.com:27019")

Check the cluster status again:

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5ef991c0e4405bff37a72c62")

}

shards:

{ "_id" : "shard1", "host" : "shard1/mongo1.wocloud.com:27017,mongo2.wocloud.com:27017", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/mongo2.wocloud.com:27018,mongo3.wocloud.com:27018", "state" : 1 }

{ "_id" : "shard3", "host" : "shard3/mongo1.wocloud.com:27019,mongo3.wocloud.com:27019", "state" : 1 }

active mongoses:

"4.0.10" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

4 : Success

databases:

{ "_id" : "articledb", "primary" : "shard1", "partitioned" : true, "version" : { "uuid" : UUID("c55f15d5-d5f9-4fea-b5d2-a4cf53aac750"), "lastMod" : 1 } }

articledb.comment

shard key: { "_id" : "hashed" }

unique: false

balancing: true

chunks:

shard1 2

shard2 2

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : NumberLong("-4611686018427387902") } on : shard1 Timestamp(1, 0)

{ "_id" : NumberLong("-4611686018427387902") } -->> { "_id" : NumberLong(0) } on : shard1 Timestamp(1, 1)

{ "_id" : NumberLong(0) } -->> { "_id" : NumberLong("4611686018427387902") } on : shard2 Timestamp(1, 2)

{ "_id" : NumberLong("4611686018427387902") } -->> { "_id" : { "$maxKey" : 1 } } on : shard2 Timestamp(1, 3)

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard1 1

{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

{ "_id" : "user_center", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("badbc29b-1c41-42ca-b06c-7803c960076e"), "lastMod" : 1 } }

user_center.log

shard key: { "id" : "hashed" }

unique: false

balancing: true

chunks:

shard1 2

shard2 2

shard3 2

{ "id" : { "$minKey" : 1 } } -->> { "id" : NumberLong("-6148914691236517204") } on : shard1 Timestamp(1, 0)

{ "id" : NumberLong("-6148914691236517204") } -->> { "id" : NumberLong("-3074457345618258602") } on : shard1 Timestamp(1, 1)

{ "id" : NumberLong("-3074457345618258602") } -->> { "id" : NumberLong(0) } on : shard2 Timestamp(1, 2)

{ "id" : NumberLong(0) } -->> { "id" : NumberLong("3074457345618258602") } on : shard2 Timestamp(1, 3)

{ "id" : NumberLong("3074457345618258602") } -->> { "id" : NumberLong("6148914691236517204") } on : shard3 Timestamp(1, 4)

{ "id" : NumberLong("6148914691236517204") } -->> { "id" : { "$maxKey" : 1 } } on : shard3 Timestamp(1, 5)

Once Shard3 has joined, the cluster rebalances its sharded data and part of it is migrated to Shard3.
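The rebalancing after `sh.addShard()` can be pictured with a simplified per-collection model. It assumes the migration thresholds documented for MongoDB 4.0 (fewer than 20 chunks: 2; 20 to 79: 4; 80 or more: 8) and the documented rule that a round continues until no two shards differ by two or more chunks. Zones, draining shards, jumbo chunks and failed migrations are ignored, the starting counts are hypothetical, and the function names are illustrative only.

```javascript
// Migration threshold by total chunk count (as documented for MongoDB 4.0).
function migrationThreshold(totalChunks) {
  if (totalChunks < 20) return 2;
  if (totalChunks < 80) return 4;
  return 8;
}

// Simplified balancing round for one collection; counts maps shard -> chunks.
function balance(counts) {
  const c = Object.assign({}, counts);
  const moves = [];
  const total = Object.values(c).reduce((a, b) => a + b, 0);
  // Returns [fullest shard, emptiest shard] (first one wins on ties).
  const extremes = () => {
    const names = Object.keys(c);
    let max = names[0];
    let min = names[0];
    for (const s of names) {
      if (c[s] > c[max]) max = s;
      if (c[s] < c[min]) min = s;
    }
    return [max, min];
  };
  let [max, min] = extremes();
  // A round only starts once the imbalance reaches the threshold.
  if (c[max] - c[min] < migrationThreshold(total)) return { counts: c, moves };
  // Move one chunk at a time from the fullest to the emptiest shard.
  while (c[max] - c[min] >= 2) {
    c[max]--;
    c[min]++;
    moves.push(max + " -> " + min);
    [max, min] = extremes();
  }
  return { counts: c, moves };
}

// Hypothetical start: six chunks on two shards, then an empty shard3 is added.
console.log(balance({ shard1: 3, shard2: 3, shard3: 0 }));
```

With that hypothetical 3/3/0 start, the model moves one chunk from shard1 and one from shard2 to shard3 and stops at 2/2/2, the same per-shard count the final `sh.status()` shows for user_center.log.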

5 Backup and Restore

References:

1. https://www.cnblogs.com/sz-wenbin/p/11022563.html

2. https://blog.csdn.net/hanlicun/article/details/79185089