【Python行业分析4】BOSS直聘招聘信息获取之爬虫程序数据处理

今天我们要正式使用程序来把爬取到的页面筛选出有效数据并保存到文件中,我会从最基础的一步一步去完善程序,帮助大家来理解爬虫程序,其中还是有许多问题我没能解决,也希望有大佬可以留言帮助一下

由于cookies调试比较麻烦,我是先当了个静态页面来取数据的,通了后有加的爬取过程。

数据提取

from tp.boss.get_cookies import get_cookie_from_chrome

from bs4 import BeautifulSoup as bs

import requests

HOST = "https://www.zhipin.com/"

def test5(query_url, job_list):

"""

获取下一页数据,直到没有

:return:

"""

# User-Agent信息

user_agent = r'Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/27.0.1453.94 Safari/537.36'

# Headers信息

headers = {'User-Agnet': user_agent, 'Connection': 'keep-alive'}

cookie_dict = get_cookie_from_chrome('.zhipin.com')

# 将字典转为CookieJar:

cookies = requests.utils.cookiejar_from_dict(cookie_dict, cookiejar=None, overwrite=True)

s = requests.Session()

s.cookies = cookies

s.headers = headers

req = s.get(HOST + query_url)

content = req.content.decode("utf-8")

content = bs(content, "html.parser")

# 处理职位列表

for item in content.find_all(class_="job-primary"):

job_title = item.find("div", attrs={"class": "job-title"})

job_name = job_title.a.attrs["title"]

job_href = job_title.a.attrs["href"]

data_jid = job_title.a['data-jid']

data_lid = job_title.a["data-lid"]

job_area = job_title.find(class_="job-area").text

job_limit = item.find(class_="job-limit")

salary = job_limit.span.text

exp = job_limit.p.contents[0]

degree = job_limit.p.contents[2]

company = item.find(class_="info-company")

company_name = company.h3.a.text

company_type = company.p.a.text

stage = company.p.contents[2]

scale = company.p.contents[4]

info_desc = item.find(class_="info-desc").text

tags = [t.text for t in item.find_all(class_="tag-item")]

job_list.append([job_area, company_type, company_name, data_jid, data_lid, job_name, stage, scale, job_href,

salary, exp, degree, info_desc, "、".join(tags)])

page = content.find(class_="page")

if page:

next_page = page.find(class_="next")

if next_page:

next_href = next_page.attrs["href"]

test5(next_href, job_list)

HTML元素获取

获取 标签为div class是 job-title的标签

job_title = item.find("div", attrs={"class": "job-title"})

获取 class是 job-area 的标签

job_area = job_title.find(class_="job-area")

获取 class是 job-primary 的标签列表

job-primary = job_title.find_all(class_="job-primary")

获取 a 标签中是 title 属性的数据

job_name = job_title.a.attrs["title"]

contents获取直接子节点,返回的是一个列表

# 示例html 不限本科

# 结果 ["不限", "", "本科"]

exp = job_limit.p.contents[0]

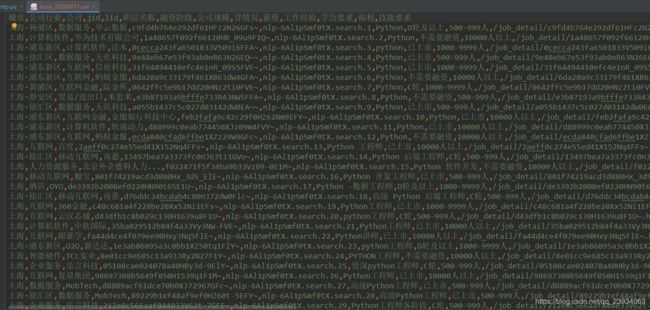

保存csv文件

def test5_main(query_url, file_name):

job_list = []

test5(query_url, job_list)

if len(job_list):

print("爬到%d条数据" % len(job_list))

with open(file_name, "w", newline='', encoding='utf8') as f:

birth_header = ["城市", "公司行业", "公司", "jid", "lid", "职位名称", "融资阶段", "公司规模", "详情页", "薪资",

"工作经验", "学历要求", "福利", "技能要求", ]

writer = csv.writer(f)

writer.writerows([birth_header])

writer.writerows(job_list)

f.close()

else:

print("没有爬取到数据")

if __name__ == "__main__":

query_url = "/job_detail/?query=&city=101020100&industry=&position=100109"

test5_main(query_url, "boss_20200611.csv")