Recording Video with OpenGL

In an earlier article I implemented the camera preview with Camera2 and GLSurfaceView: https://blog.csdn.net/qq_36391075/article/details/81631461

This time, let's build video recording on top of it.

The approach:

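- Use MediaCodec to create a Surface that serves as the encoder's input.

- Create an OpenGL environment that shares the EGL context used by the camera preview, and create an EGLSurface from the Surface obtained above.

- Draw the frame using the texture ID that the camera preview is bound to.

- Swap buffers so the frame is submitted to the Surface. Capture audio with AudioRecord.

- Encode and package the data with MediaCodec and MediaMuxer.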

MediaCodec

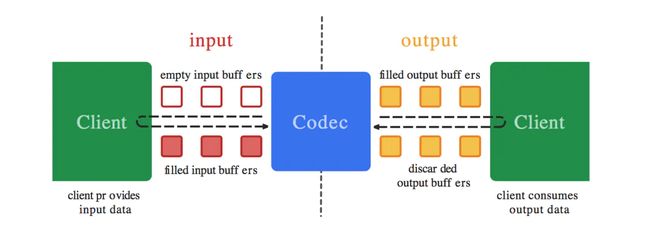

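MediaCodec gives access to Android's low-level media codecs and can be used for both encoding and decoding. It is a key part of Android's low-level multimedia framework and is commonly used together with MediaExtractor, MediaSync, MediaMuxer, MediaCrypto, MediaDrm, Image, Surface, and AudioTrack.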

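As the figure above shows, MediaCodec processes input data to produce output data. The client requests an empty input buffer, fills it with data and submits it to the codec. The codec processes the input asynchronously and fills an output buffer, which it hands to the consumer. Once the consumer has finished with that buffer, it returns it to the codec.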

For a more detailed introduction, see: https://juejin.im/entry/5aa234f751882555712bf210
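The buffer cycle can be made concrete with a small plain-Java model. This is only a sketch and not the Android API:

- `ToyCodec` and its methods are hypothetical names that mirror the roles of `dequeueInputBuffer()`, `queueInputBuffer()`, `dequeueOutputBuffer()` and `releaseOutputBuffer()`.
- -1 plays the part of `INFO_TRY_AGAIN_LATER`.
- The "encoding" is just upper-casing a string.
- For brevity, input and output share one pool of buffers, whereas a real codec keeps separate input and output buffers.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical toy model of MediaCodec's buffer cycle (not the Android API).
// Buffers are identified by an index, as in MediaCodec.
class ToyCodec {
    private final String[] buffers;                                 // one storage slot per index
    private final Deque<Integer> freeInput = new ArrayDeque<>();    // empty buffers the client may fill
    private final Deque<Integer> readyOutput = new ArrayDeque<>();  // filled buffers waiting for the consumer

    ToyCodec(int bufferCount) {
        buffers = new String[bufferCount];
        for (int i = 0; i < bufferCount; i++) freeInput.add(i);
    }

    /** Returns an empty buffer index, or -1 if none is free (like INFO_TRY_AGAIN_LATER). */
    int dequeueInput() {
        Integer idx = freeInput.poll();
        return idx == null ? -1 : idx;
    }

    /** The client hands a filled buffer to the codec; the toy codec "encodes" it at once. */
    void queueInput(int index, String data) {
        buffers[index] = data.toUpperCase();  // stand-in for real encoding
        readyOutput.add(index);
    }

    /** Returns an index that holds output, or -1 if nothing is ready yet. */
    int dequeueOutput() {
        Integer idx = readyOutput.poll();
        return idx == null ? -1 : idx;
    }

    String read(int index) {
        return buffers[index];
    }

    /** The consumer is done with the buffer: it goes back to the codec's free pool. */
    void releaseOutput(int index) {
        buffers[index] = null;
        freeInput.add(index);
    }
}
```

The key point the model captures is ownership. A buffer the client has not released cannot be handed out again, which is why every `dequeueOutputBuffer()` in the recording code below is paired with a `releaseOutputBuffer()`.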

MediaMuxer

MediaMuxer writes encoded audio or video into a container file. It can also mux an audio track and a video track into a single file.

The relevant APIs:

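- MediaMuxer(String path, int format): path is the output file path. format is the container format, e.g. MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4 (MP4), which is what this article uses.

- addTrack(MediaFormat format): adds a track and returns its index. It must be called before start(). The MediaFormat usually comes from MediaCodec.getOutputFormat() or MediaExtractor.getTrackFormat(int index), but you can also build one yourself.

- start(): starts muxing.

- writeSampleData(int trackIndex, ByteBuffer byteBuf, MediaCodec.BufferInfo bufferInfo): writes the encoded sample in byteBuf to the given track of the file set in the constructor.

- stop(): stops muxing.

- release(): releases resources.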

For usage examples, see: https://www.cnblogs.com/renhui/p/7474096.html
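The calls in that list have to happen in a fixed order. Tracks are added before start(), samples are written only between start() and stop(), and release() comes last. The sketch below is a hypothetical plain-Java `MuxerStateCheck` (not an Android class) that records nothing but that ordering. It throws IllegalStateException when a call arrives out of order, which is also the exception type the real MediaMuxer uses for such misuse.

```java
// Hypothetical model of the MediaMuxer call-order contract (not the Android class).
class MuxerStateCheck {
    enum State { INITIALIZED, STARTED, STOPPED, RELEASED }

    private State state = State.INITIALIZED;
    private int trackCount = 0;
    private int samplesWritten = 0;

    /** Tracks may only be added before start(); indices are handed out in order. */
    int addTrack() {
        require(state == State.INITIALIZED, "addTrack() must be called before start()");
        return trackCount++;
    }

    void start() {
        require(state == State.INITIALIZED, "start() may only be called once, after addTrack()");
        state = State.STARTED;
    }

    void writeSampleData(int trackIndex) {
        require(state == State.STARTED, "writeSampleData() is only valid between start() and stop()");
        require(trackIndex >= 0 && trackIndex < trackCount, "unknown track " + trackIndex);
        samplesWritten++;
    }

    void stop() {
        require(state == State.STARTED, "stop() is only valid after start()");
        state = State.STOPPED;
    }

    void release() {
        state = State.RELEASED;
    }

    int samplesWritten() { return samplesWritten; }

    State state() { return state; }

    private static void require(boolean ok, String message) {
        if (!ok) throw new IllegalStateException(message);
    }
}
```

This ordering explains a design choice further down. VideoMediaMuxer holds back start() until both the video encoder and the audio encoder have added their tracks.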

Following this plan, we first create a MediaCodec for the video frames:

```java
// In the VideoRecordEncode class
public void prepare() {
    Log.d(TAG, "prepare: " + Thread.currentThread().getName());
    try {
        mEnOS = false;
        mViedeoEncode = MediaCodec.createEncoderByType(MIME_TYPE);
        MediaFormat format = MediaFormat.createVideoFormat(MIME_TYPE, mWidth, mHeight);
        format.setInteger(MediaFormat.KEY_BIT_RATE, calcBitRate());
        format.setInteger(MediaFormat.KEY_FRAME_RATE, FRAME_RATE);
        format.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 10);
        // Input comes from a Surface rather than from ByteBuffers
        format.setInteger(MediaFormat.KEY_COLOR_FORMAT,
                MediaCodecInfo.CodecCapabilities.COLOR_FormatSurface);
        mViedeoEncode.configure(format, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
        // The Surface the encoder reads from; must be created after configure() and before start()
        mSurface = mViedeoEncode.createInputSurface();
        mViedeoEncode.start();
        mPrepareLisnter.onPrepare(this);
    } catch (IOException e) {
        e.printStackTrace();
    }
}
```

Once the Surface has been created, we bind it to the EGL context. This happens in the `onPrepare(this)` callback, which is passed down to the GLSurfaceView subclass:

```java
// Listener handed to VideoRecordEncode
private onFramPrepareLisnter lisnter = new onFramPrepareLisnter() {
    @Override
    public void onPrepare(VideoRecordEncode encode) {
        mPresenter.setVideoEncode(encode);
    }
};

// In the presenter
public void setVideoEncode(VideoRecordEncode encode) {
    mViewController.setVideoEncode(encode);
}

// In the view controller
@Override
public void setVideoEncode(VideoRecordEncode encode) {
    mRecordView.setVideoEndoer(encode); // mRecordView is a subclass of GLSurfaceView
}

// In the GLSurfaceView subclass
public void setVideoEndoer(final VideoRecordEncode endoer) {
    // queueEvent() runs the Runnable on the GL render thread,
    // where the preview's EGL context is current
    queueEvent(new Runnable() {
        @Override
        public void run() {
            synchronized (mRender) {
                endoer.setEGLContext(EGL14.eglGetCurrentContext(), mTextId);
                mRender.mEncode = endoer;
            }
        }
    });
}

// Back in VideoRecordEncode
public void setEGLContext(EGLContext context, int texId) {
    mShare_Context = context;
    mTexId = texId;
    mHandler.setEGLContext(mShare_Context, mSurface, mTexId); // create the shared EGL context
}
```
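The detour through queueEvent() is needed because EGL14.eglGetCurrentContext() returns the context that is current on the *calling* thread. On any thread other than the GLSurfaceView's render thread, it returns EGL_NO_CONTEXT.

The plain-Java model below mimics that behaviour. A ThreadLocal stands in for EGL's per-thread current context, and a single-threaded executor stands in for the render thread. `FakeGlThread`, `currentContext()` and `callOnGlThread()` are hypothetical names, not Android APIs. Unlike the real queueEvent(), which returns immediately, `callOnGlThread()` blocks until it gets the result, to keep the example short.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical model: a "context" that is current only on the thread that made it current.
class FakeGlThread {
    // Per-thread value, like the context returned by eglGetCurrentContext()
    private static final ThreadLocal<String> CURRENT = new ThreadLocal<>();
    private final ExecutorService glThread = Executors.newSingleThreadExecutor();

    FakeGlThread(String contextName) {
        // The render thread makes its context current once, at start-up
        glThread.execute(() -> CURRENT.set(contextName));
    }

    /** Mimics eglGetCurrentContext(); null plays the role of EGL_NO_CONTEXT. */
    static String currentContext() {
        return CURRENT.get();
    }

    /** Plays the role of queueEvent(), but waits for and returns the task's result. */
    String callOnGlThread(Callable<String> task) {
        Future<String> result = glThread.submit(task);
        try {
            return result.get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }

    void shutdown() {
        glThread.shutdown();
    }
}
```

Querying the context directly from the caller thread yields nothing. The same query submitted to the render thread sees the preview's context, which is exactly what setVideoEndoer() relies on.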

Meanwhile, the VideoRecordEncode constructor starts two threads. One runs the MediaCodec draining loop, and the other (RenderHandler) draws the texture with EGL:

```java
public VideoRecordEncode(VideoMediaMuxer muxer, onFramPrepareLisnter prepareLisnter, int width, int height) {
    mWidth = width;
    mHeight = height;
    mPrepareLisnter = prepareLisnter;
    mMuxer = muxer;
    mBfferInfo = new MediaCodec.BufferInfo();
    mHandler = RenderHandler.createRenderHandler(); // thread that draws with EGL
    synchronized (mSync) {
        // Start the encoder thread and wait until its run() has initialised its state
        new Thread(this).start();
        try {
            mSync.wait();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }
}

// In RenderHandler
public static RenderHandler createRenderHandler() {
    RenderHandler handler = new RenderHandler();
    synchronized (handler.mSyn) {
        // Start the thread while holding the lock: run() cannot reach its
        // notifyAll() before we are waiting, so the signal cannot be lost
        new Thread(handler).start();
        try {
            handler.mSyn.wait();
        } catch (InterruptedException e) {
            return null;
        }
    }
    return handler;
}
```
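Both constructors use a "start a thread, then wait until it is ready" handshake. A common way to make this robust is a guarded wait: wait on a flag in a loop instead of calling a bare wait(). If the worker signals first, the caller does not wait at all, and a spurious wakeup simply re-checks the flag.

Here is a plain-Java sketch of that pattern. `ReadyWorker` and `mReady` are hypothetical names; this is not the author's class.

```java
// Hypothetical sketch of a guarded "start the thread, wait until it is ready" handshake.
class ReadyWorker implements Runnable {
    private final Object mSync = new Object();
    private boolean mReady = false;       // guarded by mSync; set once the worker is ready
    private volatile String mWorkerName;  // recorded by the worker so the caller can check it ran

    static ReadyWorker startAndWait() {
        ReadyWorker worker = new ReadyWorker();
        new Thread(worker, "worker").start();
        synchronized (worker.mSync) {
            // Loop on the flag: safe against a notify that happened before we got here
            // and against spurious wakeups
            while (!worker.mReady) {
                try {
                    worker.mSync.wait();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return null;
                }
            }
        }
        return worker;
    }

    @Override
    public void run() {
        mWorkerName = Thread.currentThread().getName();
        synchronized (mSync) {
            mReady = true;
            mSync.notifyAll();
        }
        // A real worker would now enter its request loop
    }

    String workerName() {
        return mWorkerName;
    }
}
```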

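RenderHandler is the class dedicated to EGL drawing. When its thread starts, it enters run(). At that point no EGL environment exists yet, so the flag mRequestEGLContext records whether the EGLContext should be created: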

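```java
@Override
public void run() {
    synchronized (mSyn) {
        mRequestRelease = mRequestEGLContext = false;
        mRequestDraw = 0;
        mSyn.notifyAll(); // wake up createRenderHandler()
    }
    boolean localRequestDraw;
    for (;;) {
        synchronized (mSyn) {
            if (mRequestRelease) break;
            if (mRequestEGLContext) {
                mRequestEGLContext = false;
                prepare(); // create the EGL environment
            }
            // Check and update the counter under the lock, so a draw() call
            // cannot slip in between this check and the wait() below
            localRequestDraw = mRequestDraw > 0;
            if (localRequestDraw) {
                mRequestDraw--;
            } else {
                try {
                    mSyn.wait(); // nothing to draw: sleep until draw() or a new request
                } catch (InterruptedException e) {
                    break;
                }
            }
        }
        if (localRequestDraw && mTextId >= 0) {
            mEGLHelper.makeCurrent();
            mEGLHelper.render(mTextId, mStMatrix); // draw the texture into the encoder's input Surface
        }
    }
}
```

Once VideoRecordEncode is ready and asks for the context, mRequestEGLContext is set to true, and the loop then prepares the EGL environment: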

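```java
// Called from run() while holding mSyn
private void prepare() {
    mEGLHelper = new EGLHelper(mShareContext, mLinkSurface, mTextId);
    mSyn.notifyAll();
}
```

run() also uses localRequestDraw to track whether there is a frame to draw. It is true when a draw has been requested. Otherwise the thread waits until a frame arrives.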
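The mRequestDraw counter is a producer/consumer pattern. The preview thread increments it and notifies; the render thread decrements it and draws. Because the counter is read, updated and waited on under the same lock, no request can be lost between the check and the wait.

A plain-Java sketch with hypothetical names (`DrawLoop`, `requestDraw()`, `requestRelease()`), where "drawing" only increments a counter:

```java
// Hypothetical sketch of the request-counter loop used by RenderHandler (names are illustrative).
class DrawLoop implements Runnable {
    private final Object mSyn = new Object();
    private int mRequestDraw = 0;            // pending draw requests, guarded by mSyn
    private boolean mRequestRelease = false; // guarded by mSyn
    private int mFramesDrawn = 0;            // touched only by the loop thread

    void requestDraw() {
        synchronized (mSyn) {
            mRequestDraw++;
            mSyn.notifyAll();
        }
    }

    void requestRelease() {
        synchronized (mSyn) {
            mRequestRelease = true;
            mSyn.notifyAll();
        }
    }

    @Override
    public void run() {
        for (;;) {
            synchronized (mSyn) {
                // Check and wait under the same lock, so no request can be missed
                while (mRequestDraw == 0 && !mRequestRelease) {
                    try {
                        mSyn.wait();
                    } catch (InterruptedException e) {
                        return;
                    }
                }
                if (mRequestDraw == 0) break; // release requested and nothing left to draw
                mRequestDraw--;
            }
            mFramesDrawn++;                   // stand-in for makeCurrent() + render()
        }
    }

    int framesDrawn() {
        return mFramesDrawn;
    }
}
```

In this sketch, pending draws are finished before a release takes effect. That is a design choice; RenderHandler itself breaks out as soon as mRequestRelease is set.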

After the RenderHandler thread has started, the VideoRecordEncode thread starts as well and enters its own run():

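```java
@Override
public void run() {
    Log.d(TAG, "run: " + Thread.currentThread().getName());
    synchronized (mSync) {
        mLocalRquestStop = false;
        mRequestDrain = 0;
        mSync.notifyAll(); // wake up the constructor
    }
    boolean localRuqestDrain;
    boolean localRequestStop;
    while (mIsRunning) {
        synchronized (mSync) {
            localRequestStop = mLocalRquestStop;
            localRuqestDrain = (mRequestDrain > 0); // is a frame waiting to be drained?
            if (localRuqestDrain) {
                mRequestDrain--;
            }
        }
        if (localRequestStop) {
            drain();
            mViedeoEncode.signalEndOfInputStream(); // no more frames will reach the Surface
            mEnOS = true;
            drain();                                 // drain until the EOS buffer comes out
            release();
            break;
        }
        if (localRuqestDrain) {
            drain(); // pull encoded data from the codec and write it to the file
        } else {
            synchronized (mSync) {
                try {
                    // Re-check under the lock so a request that arrived since the check above is not missed
                    if (mRequestDrain == 0 && !mLocalRquestStop) {
                        Log.d(TAG, "run: wait");
                        mSync.wait();
                    }
                } catch (InterruptedException e) {
                    break;
                }
            }
        }
    }
    synchronized (mSync) {
        mLocalRquestStop = true;
        mIsCaturing = false;
    }
}
```

This run() follows the same logic as RenderHandler's run(): it drains when there is data and waits when there is none. When recording stops, it performs these steps in order:

- drains what is left;
- signals end of stream to the encoder;
- drains again until the EOS buffer comes out;
- releases the encoder.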
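The stop branch follows the usual end-of-stream handshake:

1. Drain what has already been encoded.
2. Signal end of input: signalEndOfInputStream() for a Surface input, or an empty buffer flagged BUFFER_FLAG_END_OF_STREAM for audio.
3. Keep draining until an output buffer carries the EOS flag.

The plain-Java model below is a sketch. `EosDemo`, `ToyEncoder`, `Packet` and `FLAG_EOS` are hypothetical stand-ins, and the encoder here produces output synchronously.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical model of the end-of-stream handshake (not the Android API).
class EosDemo {
    static final int FLAG_EOS = 4; // arbitrary flag value for this model

    static final class Packet {
        final String data;
        final int flags;
        Packet(String data, int flags) { this.data = data; this.flags = flags; }
    }

    static final class ToyEncoder {
        private final Deque<Packet> output = new ArrayDeque<>();
        void encode(String frame) { output.add(new Packet(frame, 0)); }
        void signalEndOfInputStream() { output.add(new Packet("", FLAG_EOS)); }
        Packet dequeueOutput() { return output.poll(); } // null ~ INFO_TRY_AGAIN_LATER
    }

    /** Moves available output into 'file'; returns true once the EOS packet has been seen. */
    static boolean drain(ToyEncoder enc, List<String> file) {
        Packet p;
        while ((p = enc.dequeueOutput()) != null) {
            if (!p.data.isEmpty()) file.add(p.data); // skip the empty EOS packet
            if ((p.flags & FLAG_EOS) != 0) return true;
        }
        return false;
    }

    /** Stop sequence: drain, signal EOS, then drain until the EOS packet arrives. */
    static List<String> stop(ToyEncoder enc) {
        List<String> file = new ArrayList<>();
        drain(enc, file);
        enc.signalEndOfInputStream();
        if (!drain(enc, file)) throw new IllegalStateException("EOS never reached the output");
        return file;
    }
}
```

A real encoder delivers output asynchronously, so after EOS the drain must keep polling with a timeout instead of stopping at the first empty poll. That is what the `if(!mEnOS)` check in drain() achieves: the five-retry limit only applies before EOS.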

```java
private void drain() {
    int count = 0;
    LOOP: while (mIsCaturing) { // only while frames are being captured
        // Index of a filled output buffer, or a negative status code
        int encodeStatue = mViedeoEncode.dequeueOutputBuffer(mBfferInfo, 10000);
        if (encodeStatue == MediaCodec.INFO_TRY_AGAIN_LATER) { // no output available yet
            if (!mEnOS) {
                if (++count > 5) {
                    break LOOP; // give up for now; after EOS keep polling instead
                }
            }
        } else if (encodeStatue == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
            // Usually reported once, right after the encoder starts; the new
            // format carries the codec-specific data the muxer needs
            Log.d(TAG, "drain: " + encodeStatue);
            MediaFormat format = mViedeoEncode.getOutputFormat();
            mTrackIndex = mMuxer.addTrack(format);
            mMuxerStart = true;
            if (!mMuxer.start()) {
                // The other encoder has not added its track yet: wait for it
                synchronized (mMuxer) {
                    while (!mMuxer.isStarted()) {
                        try {
                            mMuxer.wait(100);
                        } catch (InterruptedException e) {
                            break LOOP;
                        }
                    }
                }
            }
        } else if (encodeStatue < 0) {
            Log.d(TAG, "drain: unexpected result from encoder#dequeueOutputBuffer: " + encodeStatue);
        } else {
            ByteBuffer byteBuffer = mViedeoEncode.getOutputBuffer(encodeStatue); // encoded data
            if ((mBfferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {
                // Config data already reached the muxer via addTrack(format); don't write it as a sample
                mBfferInfo.size = 0;
            }
            if (mBfferInfo.size != 0) {
                mBfferInfo.presentationTimeUs = getPTSUs();
                prevOutputPTSUs = mBfferInfo.presentationTimeUs;
                mMuxer.writeSampleData(mTrackIndex, byteBuffer, mBfferInfo); // write to the file
            }
            mViedeoEncode.releaseOutputBuffer(encodeStatue, false);
            if ((mBfferInfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
                // EOS reached
                Log.d(TAG, "drain: EOS");
                mIsCaturing = false;
                break; // out of while
            }
        }
    }
}
```

We said that drawing only happens when there is data. So how do we know when data is available?

In the preview GLSurfaceView's renderer, every call to onDrawFrame() means a new frame is available:

```java
// In the preview renderer
@Override
public void onDrawFrame(GL10 gl) {
    GLES20.glClear(GLES20.GL_COLOR_BUFFER_BIT);
    if (mRequestUpdateTex) {
        mRequestUpdateTex = false;
        // Latch the newest camera image into the texture
        mSurfaceTexture.updateTexImage();
        // Texture transform matrix for that image
        mSurfaceTexture.getTransformMatrix(mStMatrix);
    }
    // Draw the preview
    mPhoto.draw(mTextId, mStMatrix);
    mFlip = !mFlip;
    if (mFlip) { // forward only every other frame to the encoder (halves the frame rate)
        synchronized (this) {
            if (mEncode != null) {
                mEncode.onFrameAvaliable(mTextId, mStMatrix);
            }
        }
    }
}

// In VideoRecordEncode
public boolean onFrameAvaliable(int textId, float[] stMatrix) {
    synchronized (mSync) {
        if (!mIsCaturing || mLocalRquestStop) {
            return false;
        }
        mRequestDrain++;   // one more frame to drain
        mSync.notifyAll(); // end the encoder thread's wait
    }
    mTexId = textId;
    mHandler.draw(mTexId, stMatrix); // ask RenderHandler to draw the frame
    return true;
}

// In RenderHandler
public void draw(int textId, float[] stMatrix) {
    if (mRequestRelease) return;
    synchronized (mSyn) {
        mTextId = textId;
        // System.arraycopy(stMatrix, 0, mStMatrix, 0, 16);
        mStMatrix = stMatrix;
        mRequestDraw++;
        mSyn.notifyAll();
    }
}
```
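The mFlip toggle in onDrawFrame() forwards only every other preview frame to the encoder. The sketch below generalises it into a hypothetical helper, `FrameDecimator` (not part of the project), that keeps one frame out of every n. With n = 2, it behaves like mFlip: the first frame is kept, the second dropped, and so on.

```java
// Hypothetical helper: forward one frame out of every n (n = 2 reproduces the mFlip toggle).
class FrameDecimator {
    private final int n;
    private long frameCount = 0;

    FrameDecimator(int n) {
        if (n < 1) throw new IllegalArgumentException("n must be >= 1");
        this.n = n;
    }

    /** Called once per preview frame; returns true when this frame should go to the encoder. */
    boolean shouldEncode() {
        boolean keep = frameCount % n == 0;
        frameCount++;
        return keep;
    }
}
```

With n = 2, a 30 fps preview would feed roughly 15 fps to the encoder. That is worth keeping in mind when choosing FRAME_RATE in prepare().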

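With this in place, video frames are written to the file one by one. To recap the flow:

- When a VideoRecordEncode object is created, it also creates a RenderHandler. Both start their own threads and immediately wait for data.

- Once VideoRecordEncode's MediaCodec is ready, an EGL environment sharing the preview's context is created and frame capture begins.

- Each time onDrawFrame() is called, it notifies the VideoRecordEncode object that data is ready, ending its wait. It also tells the RenderHandler to draw the frame.

- The encoded data is written to the file.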

To write the file, I use MediaMuxer:

```java
// In the VideoMediaMuxer class
public VideoMediaMuxer() throws IOException {
    File file = getCaptureFile(Environment.DIRECTORY_MOVIES, EXT);
    if (file == null) {
        throw new IOException("output directory is not writable");
    }
    mOutputPath = file.toString();
    mMediaMuxer = new MediaMuxer(mOutputPath, MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);
    mEncodeCount = 0;
    mStartEncodeCount = 0;
    mPresenter.setModeController(this);
}

public void addEncode(VideoRecordEncode videoRecordEncode, AudioRecordEncode audioRecordEncode) {
    mVideoEncode = videoRecordEncode;
    mAudioEndoe = audioRecordEncode;
    mEncodeCount = 2; // start() waits for this many encoders
}

public int addTrack(MediaFormat format) {
    int track = mMediaMuxer.addTrack(format);
    return track;
}

public void preprare() {
    // Initialise both MediaCodecs
    mVideoEncode.prepare();
    mAudioEndoe.onPerpare();
}

@Override
public void startRecording() {
    // Start recording
    mVideoEncode = new VideoRecordEncode(this, lisnter, 1280, 720);
    mAudioEndoe = new AudioRecordEncode(this);
    // Register the encoders (sets how many tracks the muxer waits for)
    this.addEncode(mVideoEncode, mAudioEndoe);
    this.preprare();
    mVideoEncode.startRecord();
    mAudioEndoe.startRecording();
}

private onFramPrepareLisnter lisnter = new onFramPrepareLisnter() {
    @Override
    public void onPrepare(VideoRecordEncode encode) {
        mPresenter.setVideoEncode(encode);
    }
};

@Override
public void stopRecording() {
    mVideoEncode.onStopRecording();
    mAudioEndoe.onStopRecording();
}

synchronized public boolean start() {
    // The muxer may only start once both encoders have added their tracks
    mStartEncodeCount++;
    Log.d(TAG, "start: " + mStartEncodeCount);
    if (mEncodeCount > 0 && (mStartEncodeCount == mEncodeCount)) {
        mMediaMuxer.start();
        mIsStart = true;
        notifyAll(); // wake encoders waiting in drain()
    }
    return mIsStart;
}

public synchronized boolean isStarted() {
    return mIsStart;
}

synchronized public void stop() {
    // Stop the muxer only when the last encoder has finished
    mStartEncodeCount--;
    if (mEncodeCount > 0 && mStartEncodeCount <= 0) {
        mMediaMuxer.stop();
        mMediaMuxer.release();
        mIsStart = false;
    }
}

public void writeSampleData(int mediaTrack, ByteBuffer byteBuffer, MediaCodec.BufferInfo bufferInfo) {
    // Write to the file
    if (mStartEncodeCount > 0) {
        mMediaMuxer.writeSampleData(mediaTrack, byteBuffer, bufferInfo);
    }
}

public static final File getCaptureFile(final String type, final String ext) {
    final File dir = new File(Environment.getExternalStoragePublicDirectory(type), DIR_NAME);
    dir.mkdirs();
    if (dir.canWrite()) {
        return new File(dir, getDateTimeString() + ext);
    }
    return null; // caller must handle an unwritable directory
}

private static final String getDateTimeString() {
    final GregorianCalendar now = new GregorianCalendar();
    return mDateTimeForamt.format(now.getTime());
}
```
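start() and the wait loop in drain() together form a small barrier:

- Each encoder registers its track.
- MediaMuxer.start() runs only when the last expected encoder arrives.
- Encoders that arrived earlier wait until the muxer has started.

Below is a plain-Java sketch of that gate. `StartGate` is a hypothetical class, and a counter stands in for the real MediaMuxer.

```java
// Hypothetical sketch of VideoMediaMuxer's start gate (a counter stands in for MediaMuxer).
class StartGate {
    private final int expected;   // number of encoders (2: video + audio)
    private int arrived = 0;
    private boolean started = false;
    private int muxerStarts = 0;  // how many times the stand-in for mMediaMuxer.start() ran

    StartGate(int expected) {
        this.expected = expected;
    }

    /** Like VideoMediaMuxer.start(): the last encoder to arrive actually starts the muxer. */
    synchronized boolean arrive() {
        arrived++;
        if (arrived == expected) {
            muxerStarts++;        // stand-in for mMediaMuxer.start()
            started = true;
            notifyAll();
        }
        return started;
    }

    /** Like the wait loop in drain(): block until the muxer has been started. */
    synchronized void awaitStarted() {
        while (!started) {
            try {
                wait();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
        }
    }

    synchronized int muxerStarts() { return muxerStarts; }

    synchronized boolean isStarted() { return started; }
}
```

The original polls isStarted() with wait(100). Since its start() also calls notifyAll(), the 100 ms timeout mostly acts as a safety net; this sketch relies on the notification alone.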

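Audio recording works on the same principle and with much the same code, plus one extra encode step. On the video side, frames reach the encoder through its input Surface. On the audio side, the PCM data captured with AudioRecord has to be fed into MediaCodec by hand: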

```java
public class AudioRecordEncode implements Runnable {

    private static final int BIT_RATE = 64000;
    public static final int SAMPLES_PER_FRAME = 1024; // AAC, bytes/frame/channel
    public static final int FRAME_PER_BUFFER = 25;    // AAC, frame/buffer/sec
    private static final String MIME_TYPE = "audio/mp4a-latm";
    private static final long TIMEOUT_USEC = 10000;   // timeout for dequeueInputBuffer(), in microseconds

    // Sample rate.
    // 44100 Hz is the only rate guaranteed to work on all devices;
    // some devices also support 22050, 16000 and 11025 Hz.
    // Common rates are 22.05 kHz, 44.1 kHz and 48 kHz.
    private final static int AUDIO_SAMPLE_RATE = 44100;
    // Audio channel: mono
    private final static int AUDIO_CHANNEL = AudioFormat.CHANNEL_IN_MONO;
    // Sample format: 16-bit PCM
    private final static int AUDIO_ENCODING = AudioFormat.ENCODING_PCM_16BIT;

    // These flags are read by several threads
    private volatile boolean mIsCapturing = false;
    private volatile boolean mEOS = false;
    private volatile boolean mRuestStop = false;
    private int mRuqestDrain = 0;
    private Object mSyn = new Object();
    private AudioThread mAudioThread;
    private MediaCodec mCodec;
    private VideoMediaMuxer mMuxer;
    private boolean mMuxerStart = false;
    private int mTrackIndex;
    private MediaCodec.BufferInfo mBfferInfo;
    private static final String TAG = "AudioRecordEncode";

    public AudioRecordEncode(VideoMediaMuxer muxer) {
        mBfferInfo = new MediaCodec.BufferInfo();
        mMuxer = muxer;
        synchronized (mSyn) {
            new Thread(this).start();
            try {
                mSyn.wait(); // until run() has initialised its state
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }

    public void onPerpare() {
        // Initialise the MediaCodec
        mEOS = false;
        try {
            // The third argument is the channel count, not a channel mask
            MediaFormat audioFormat = MediaFormat.createAudioFormat(MIME_TYPE, AUDIO_SAMPLE_RATE, 1);
            audioFormat.setInteger(MediaFormat.KEY_AAC_PROFILE, MediaCodecInfo.CodecProfileLevel.AACObjectLC);
            audioFormat.setInteger(MediaFormat.KEY_CHANNEL_MASK, AudioFormat.CHANNEL_IN_MONO);
            audioFormat.setInteger(MediaFormat.KEY_BIT_RATE, BIT_RATE);
            audioFormat.setInteger(MediaFormat.KEY_CHANNEL_COUNT, 1);
            mCodec = MediaCodec.createEncoderByType(MIME_TYPE);
            mCodec.configure(audioFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
            mCodec.start();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public void startRecording() {
        synchronized (mSyn) {
            mIsCapturing = true;
            mRuestStop = false;
            // Start capturing audio
            mAudioThread = new AudioThread();
            mAudioThread.start();
            mSyn.notifyAll();
        }
    }

    @Override
    public void run() {
        synchronized (mSyn) {
            mRuestStop = false;
            mRuqestDrain = 0;
            mSyn.notifyAll();
        }
        boolean localRuqestDrain;
        boolean localRequestStop;
        boolean IsRunning = true;
        while (IsRunning) {
            synchronized (mSyn) {
                localRequestStop = mRuestStop;
                localRuqestDrain = (mRuqestDrain > 0); // is there output to drain?
                if (localRuqestDrain) {
                    mRuqestDrain--;
                }
            }
            if (localRequestStop) {
                drain();
                encode(null, 0, getPTSUs()); // queue an empty buffer flagged END_OF_STREAM
                mEOS = true;
                drain();                     // drain until the EOS buffer comes out
                release();
                break;
            }
            if (localRuqestDrain) {
                drain();
            } else {
                synchronized (mSyn) { // no data: wait for some
                    try {
                        // Re-check under the lock so a request or stop is not missed
                        if (mRuqestDrain == 0 && !mRuestStop) {
                            Log.d(TAG, "run: wait");
                            mSyn.wait();
                        }
                    } catch (InterruptedException e) {
                        break;
                    }
                }
            }
        }
        synchronized (mSyn) {
            mRuestStop = true;
            mIsCapturing = false;
        }
    }

    private void drain() {
        int count = 0;
        LOOP: while (mIsCapturing) {
            int encodeStatue = mCodec.dequeueOutputBuffer(mBfferInfo, 10000);
            if (encodeStatue == MediaCodec.INFO_TRY_AGAIN_LATER) {
                if (!mEOS) {
                    if (++count > 5) {
                        break LOOP;
                    }
                }
            } else if (encodeStatue == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
                Log.d(TAG, "drain: " + encodeStatue);
                MediaFormat format = mCodec.getOutputFormat();
                mTrackIndex = mMuxer.addTrack(format);
                mMuxerStart = true;
                if (!mMuxer.start()) {
                    synchronized (mMuxer) {
                        while (!mMuxer.isStarted()) {
                            try {
                                mMuxer.wait(100);
                            } catch (InterruptedException e) {
                                break LOOP;
                            }
                        }
                    }
                }
            } else if (encodeStatue < 0) {
                Log.d(TAG, "drain: unexpected result from encoder#dequeueOutputBuffer: " + encodeStatue);
            } else {
                ByteBuffer byteBuffer = mCodec.getOutputBuffer(encodeStatue);
                if ((mBfferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {
                    // Config data already reached the muxer via addTrack(format)
                    mBfferInfo.size = 0;
                }
                if (mBfferInfo.size != 0) {
                    mBfferInfo.presentationTimeUs = getPTSUs();
                    prevOutputPTSUs = mBfferInfo.presentationTimeUs;
                    mMuxer.writeSampleData(mTrackIndex, byteBuffer, mBfferInfo);
                }
                mCodec.releaseOutputBuffer(encodeStatue, false);
                if ((mBfferInfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
                    // EOS reached
                    Log.d(TAG, "drain: EOS");
                    mIsCapturing = false;
                    break; // out of while
                }
            }
        }
    }

    private void release() {
        if (mCodec != null) {
            mCodec.stop();
            mCodec.release();
            mCodec = null;
        }
        if (mMuxerStart) {
            if (mMuxer != null) {
                try {
                    mMuxer.stop(); // the muxer really stops once the last encoder calls this
                } catch (final Exception e) {
                    Log.e(TAG, "failed stopping muxer", e);
                }
            }
        }
        mBfferInfo = null;
    }

    public void onStopRecording() {
        synchronized (mSyn) {
            if (!mIsCapturing || mRuestStop) {
                return;
            }
            // Only request the stop: mIsCapturing stays true so run() can still queue
            // and drain the EOS buffer; drain() clears it once EOS comes out
            mRuestStop = true;
            mSyn.notifyAll();
        }
    }

    private void onFrameAvaliable() {
        // Tell run() there is output to drain
        synchronized (mSyn) {
            if (!mIsCapturing || mRuestStop) {
                return;
            }
            mRuqestDrain++;
            mSyn.notifyAll();
        }
    }

    private class AudioThread extends Thread {
        // Audio source
        private static final int AUDIO_INPUT = MediaRecorder.AudioSource.MIC;
        // Recorder
        private AudioRecord mAudioRecord;

        @Override
        public void run() {
            // android.os.Process.setThreadPriority(android.os.Process.THREAD_PRIORITY_URGENT_AUDIO);
            createAudio(); // create the AudioRecord
            if (mAudioRecord.getState() != AudioRecord.STATE_INITIALIZED) {
                mAudioRecord.release(); // free the native recorder before dropping it
                mAudioRecord = null;
            }
            if (mAudioRecord != null) {
                try {
                    if (mIsCapturing) {
                        ByteBuffer buffer = ByteBuffer.allocateDirect(SAMPLES_PER_FRAME);
                        mAudioRecord.startRecording(); // start capturing
                        int readSize;
                        try {
                            while (mIsCapturing && !mRuestStop && !mEOS) {
                                buffer.clear();
                                readSize = mAudioRecord.read(buffer, SAMPLES_PER_FRAME); // PCM bytes read
                                if (readSize > 0) {
                                    buffer.position(readSize);
                                    buffer.flip();
                                    encode(buffer, readSize, getPTSUs()); // feed the PCM data to MediaCodec
                                    onFrameAvaliable();                   // there is output to drain
                                }
                            }
                            onFrameAvaliable();
                        } finally {
                            mAudioRecord.stop();
                        }
                    }
                } finally {
                    mAudioRecord.release();
                    mAudioRecord = null;
                }
            }
        }

        private void createAudio() {
            // Minimum buffer size in bytes
            int buffersize = AudioRecord.getMinBufferSize(AUDIO_SAMPLE_RATE, AUDIO_CHANNEL, AUDIO_ENCODING);
            mAudioRecord = new AudioRecord(AUDIO_INPUT, AUDIO_SAMPLE_RATE,
                    AUDIO_CHANNEL, AUDIO_ENCODING, buffersize);
        }
    }

    private void encode(ByteBuffer byteBuffer, int length, long presentationTimeUs) {
        if (!mIsCapturing) return;
        ByteBuffer inputBuffer;
        int index;
        while (mIsCapturing) {
            // The argument is a timeout in microseconds, not a timestamp
            index = mCodec.dequeueInputBuffer(TIMEOUT_USEC);
            if (index >= 0) { // 0 is a valid buffer index
                inputBuffer = mCodec.getInputBuffer(index);
                inputBuffer.clear();
                if (byteBuffer != null) {
                    inputBuffer.put(byteBuffer);
                }
                if (length <= 0) {
                    // Empty buffer flagged END_OF_STREAM
                    mEOS = true;
                    mCodec.queueInputBuffer(index, 0, 0,
                            presentationTimeUs, MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                } else {
                    mCodec.queueInputBuffer(index, 0, length, presentationTimeUs, 0);
                }
                break;
            }
            // INFO_TRY_AGAIN_LATER: no input buffer is free yet, try again
        }
    }

    private long prevOutputPTSUs = 0;

    /**
     * Returns the presentationTimeUs for the next encoded buffer.
     */
    protected long getPTSUs() {
        long result = System.nanoTime() / 1000L;
        // presentationTimeUs must not go backwards, otherwise the muxer fails to write
        if (result < prevOutputPTSUs) {
            result = prevOutputPTSUs;
        }
        return result;
    }
}
```
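getPTSUs() stamps each buffer with the wall-clock time, clamped so it never goes backwards. A common alternative for audio derives the timestamp from how many PCM samples have already been fed to the encoder, which keeps audio timestamps evenly spaced. This is a different technique from the one used above, shown only as a sketch.

`PcmClock` is a hypothetical helper. It assumes 16-bit PCM, i.e. 2 bytes per sample per channel, matching ENCODING_PCM_16BIT above.

```java
// Hypothetical helper: presentation time derived from the amount of PCM data already queued.
class PcmClock {
    private final int sampleRate;    // e.g. 44100
    private final int channelCount;  // 1 for CHANNEL_IN_MONO
    private long samplesQueued = 0;  // per-channel samples fed to the encoder so far

    PcmClock(int sampleRate, int channelCount) {
        this.sampleRate = sampleRate;
        this.channelCount = channelCount;
    }

    /**
     * Returns the PTS in microseconds for a buffer of byteCount bytes of 16-bit PCM,
     * then advances the clock past it. The first buffer gets PTS 0.
     */
    long nextPtsUs(int byteCount) {
        long ptsUs = samplesQueued * 1_000_000L / sampleRate;
        samplesQueued += byteCount / (2L * channelCount); // 2 bytes per 16-bit sample
        return ptsUs;
    }
}
```

Adopting this clock would need two changes to the code above:

- Both tracks must share one time base. The video timestamps (based on System.nanoTime()) and these audio timestamps would need the same origin, for example by adding the recording start time in microseconds to the audio values.
- drain() would have to stop overwriting presentationTimeUs, since that override would replace these values.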

Project source: https://github.com/vivianluomin/FunCamera