Keras学习笔记---基于深度学习的猫狗分类器练习

为了复习巩固刚学过的知识,在这里做一些简单的笔记。

在这里将使用Kaggle数据平台中2013年的dogs-vs-cats数据集中的很少一部分作为训练集和测试集,在2013年的计算视觉比赛中,当时还未出现主流卷积神经网络算法,但当年结果最优之一者就是使用了卷积神经网络取得了95%的精度,所以用来实验卷积神经网络是个不错的选择。这里为了单纯实验模型,所以降低数据计算量,只采用2000张数据图像,Kaggle数据平台公开有25000张。主要实验两部分:一是只靠原始数据训练模型,得到精度大概为72%,保存为模型1。第二采用数据增强、Dropout、正则化等技术,降低过拟合,可以取得82%的精度,保存为模型2。

具体步骤:

1:数据集下载地址:https://www.kaggle.com/salader/dogs-vs-cats 压缩包大概545M

2:新建文件夹kaggle_original_data存放数据集,将下载下来的数据解压后保存到这个文件夹中。注意,下载的数据是别人分好训练集和测试集的,所以要把子文件中所有图像都复制到kaggle_original_data文件夹中,不要子文件夹。

3:选择2000张图像构建自己的训练集、验证集、测试集,程序中的路径注意修改为自己的就行,文件夹会自动创建。

import keras

keras.__version__

import os, shutil

# The path to the directory where the original

# dataset was uncompressed

original_dataset_dir = r'E:\CJ_Data\deeplearning5_2\kaggle_original_data'

# The directory where we will

# store our smaller dataset

base_dir = r'E:\CJ_Data\deeplearning5_2\cats_and_dogs_small'

os.mkdir(base_dir)

# Directories for our training,

# validation and test splits

train_dir = os.path.join(base_dir, 'train')

os.mkdir(train_dir)

validation_dir = os.path.join(base_dir, 'validation')

os.mkdir(validation_dir)

test_dir = os.path.join(base_dir, 'test')

os.mkdir(test_dir)

# Directory with our training cat pictures

train_cats_dir = os.path.join(train_dir, 'cats')

os.mkdir(train_cats_dir)

# Directory with our training dog pictures

train_dogs_dir = os.path.join(train_dir, 'dogs')

os.mkdir(train_dogs_dir)

# Directory with our validation cat pictures

validation_cats_dir = os.path.join(validation_dir, 'cats')

os.mkdir(validation_cats_dir)

# Directory with our validation dog pictures

validation_dogs_dir = os.path.join(validation_dir, 'dogs')

os.mkdir(validation_dogs_dir)

# Directory with our validation cat pictures

test_cats_dir = os.path.join(test_dir, 'cats')

os.mkdir(test_cats_dir)

# Directory with our validation dog pictures

test_dogs_dir = os.path.join(test_dir, 'dogs')

os.mkdir(test_dogs_dir)

# Copy first 1000 cat images to train_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(train_cats_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 cat images to validation_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1000, 1500)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(validation_cats_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 cat images to test_cats_dir

fnames = ['cat.{}.jpg'.format(i) for i in range(1500, 2000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(test_cats_dir, fname)

shutil.copyfile(src, dst)

# Copy first 1000 dog images to train_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(train_dogs_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 dog images to validation_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1000, 1500)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(validation_dogs_dir, fname)

shutil.copyfile(src, dst)

# Copy next 500 dog images to test_dogs_dir

fnames = ['dog.{}.jpg'.format(i) for i in range(1500, 2000)]

for fname in fnames:

src = os.path.join(original_dataset_dir, fname)

dst = os.path.join(test_dogs_dir, fname)

shutil.copyfile(src, dst)

4:构建网络

from keras import layers

from keras import models

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu',

input_shape=(150, 150, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(128, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Flatten())

model.add(layers.Dense(512, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

5:编译

from keras import optimizers

model.compile(loss='binary_crossentropy',

optimizer=optimizers.RMSprop(lr=1e-4),

metrics=['acc'])

6:使用生成器读取图像

from keras.preprocessing.image import ImageDataGenerator

# All images will be rescaled by 1./255

train_datagen = ImageDataGenerator(rescale=1./255)

test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

# This is the target directory

train_dir,

# All images will be resized to 150x150

target_size=(150, 150),

batch_size=20,

# Since we use binary_crossentropy loss, we need binary labels

class_mode='binary')

validation_generator = test_datagen.flow_from_directory(

validation_dir,

target_size=(150, 150),

batch_size=20,

class_mode='binary')

7:训练模型

%%time

history = model.fit_generator(

train_generator,

steps_per_epoch=100,

epochs=30,

validation_data=validation_generator,

validation_steps=50)

8:保存模型

model.save(r'E:\CJ_Data\deeplearning5_2\cats_and_dogs_small_1.h5')

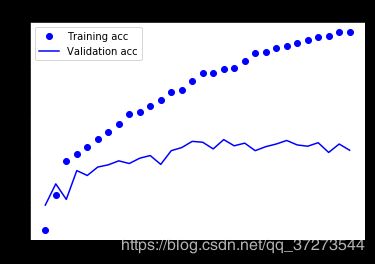

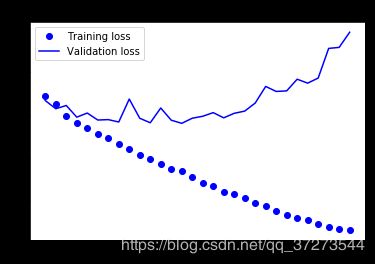

9:绘制训练过程中的损失曲线和精度曲线

import matplotlib.pyplot as plt

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()