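Python gesture recognition: up, down, left, right (SVM + HOG features)

Project environment: opencv==3.4.5, scikit-learn>=0.20.2, numpy==1.17.4

Reference blog: https://blog.csdn.net/qq_41562704/article/details/88975569

I. Collecting the dataset

1. Dataset images are 300x300 pixels.

2. Capture the up, down, left and right gestures, run skin detection on them, and binarize the background.

3. The skin detection follows this article: https://blog.csdn.net/weixin_40893939/article/details/84527037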
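The `picture` module that provides `skinMask` is not included in the article. As a rough, hypothetical stand-in, the sketch below classifies a pixel as skin when its Cr value (YCrCb color space) falls in the fixed range 133 to 173, a commonly used rule of thumb. The referenced skin detection article may use a different rule (for example Otsu thresholding on Cr), so treat the range as an assumption to tune for your lighting. The conversion uses the coefficients OpenCV documents for its BGR to YCrCb conversion, written in NumPy only; `skin_mask_cr` and `apply_mask` are illustrative names.

```python
import numpy as np


def skin_mask_cr(bgr, cr_min=133, cr_max=173):
    """Binary skin mask (uint8, 0 or 255) for a BGR uint8 image.

    Assumed rule: a pixel is skin if its Cr value lies in [cr_min, cr_max].
    Y and Cr use the coefficients OpenCV documents for BGR->YCrCb (8-bit).
    """
    img = bgr.astype(np.float32)
    b, g, r = img[..., 0], img[..., 1], img[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luma
    cr = (r - y) * 0.713 + 128.0  # red-difference chroma, offset by 128
    skin = (cr >= cr_min) & (cr <= cr_max)
    return np.where(skin, 255, 0).astype(np.uint8)


def apply_mask(bgr, mask):
    """Keep skin pixels and set everything else (the background) to black."""
    return np.where(mask[..., None] == 255, bgr, 0).astype(np.uint8)
```

A skin-toned pixel such as BGR (120, 150, 220) has Cr of about 165 and is kept, while a neutral gray pixel has Cr of exactly 128 and is blacked out.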

dataset.py

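```python
# -*- coding: utf-8 -*-
# @auth
# Collect the dataset

import cv2
import numpy as np

from picture import skinMask  # skin detection helper from picture.py (not shown in this article)

# Saved images are 300x300
HEIGHT = 300
WIDTH = 300

# Top-left corner of the gesture capture box
x0 = 100
y0 = 100

# Counter for saved images
count = 0

if __name__ == '__main__':
    cap = cv2.VideoCapture(0)  # open the webcam
    while True:
        ret, frame = cap.read()  # read one frame from the webcam
        if not ret:
            break  # stop if the frame could not be read
        frame = cv2.flip(frame, 1)  # mirror the image horizontally
        # draw the capture box
        cv2.rectangle(frame, (x0 - 1, y0 - 1), (x0 + WIDTH + 1, y0 + HEIGHT + 1), (0, 255, 0), 2)
        roi = frame[y0:y0 + HEIGHT, x0:x0 + WIDTH]  # crop the contents of the capture box
        res = skinMask(roi)  # skin detection
        frame[y0:y0 + HEIGHT, x0:x0 + WIDTH] = res  # write the skin-detection result back into the frame
        # roi is a NumPy view into frame, so from here on it also holds the skin-masked result
        cv2.imshow("frame", frame)  # show the frame
        key = cv2.waitKey(25)  # wait up to 25 ms for a key press that selects the next action
        if key == 27:
            break  # ESC: quit
        elif key == ord("w"):
            y0 -= 5  # w: move the box up
        elif key == ord("a"):
            x0 -= 5  # a: move the box left
        elif key == ord("s"):
            y0 += 5  # s: move the box down
        elif key == ord("d"):
            x0 += 5  # d: move the box right
        elif key == ord("l"):
            # l: save the gesture (the ./dataset/ folder must already exist)
            count += 1
            cv2.imwrite("./dataset/{:d}.png".format(count), roi)
    cap.release()
    cv2.destroyAllWindows()
```

Demo: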

Keys: a (move the box left), w (move the box up), s (move the box down), d (move the box right), l (save the contents of the box), ESC (quit).
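In the capture loop, nothing stops `x0` and `y0` from leaving the frame. A negative `x0` turns `frame[y0:y0+HEIGHT, x0:x0+WIDTH]` into an empty slice (negative indices count from the right edge), and a box pushed past the right or bottom edge yields a crop smaller than 300x300. A minimal sketch of a hypothetical `move_box` helper that clamps the box, assuming the camera frame is at least as large as the box:

```python
def move_box(x0, y0, key, frame_w, frame_h, box_w=300, box_h=300, step=5):
    """Return the new top-left corner of the capture box after a w/a/s/d key.

    The corner is clamped so that frame[y0:y0+box_h, x0:x0+box_w] always
    stays a full box_h x box_w crop inside a frame_w x frame_h frame.
    Any other key leaves the box where it is.
    """
    moves = {"w": (0, -step), "a": (-step, 0), "s": (0, step), "d": (step, 0)}
    dx, dy = moves.get(key, (0, 0))
    x0 = min(max(x0 + dx, 0), frame_w - box_w)
    y0 = min(max(y0 + dy, 0), frame_h - box_h)
    return x0, y0
```

Inside the loop, the four w/a/s/d branches could then become `x0, y0 = move_box(x0, y0, chr(key & 0xFF), frame.shape[1], frame.shape[0])`; when no key is pressed, `waitKey` returns -1, which maps to a character that is not in `moves`, so the box stays put.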

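II. Training on the dataset

1. Extract HOG features from the dataset images using OpenCV's built-in functions.

2. Train an SVM on these features and use it for classification.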
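The article does not show its feature-extraction script. The sketch below is a simplified, NumPy-only stand-in for OpenCV's HOG: it builds one gradient-orientation histogram per cell and applies a single L2 normalization, skipping the block normalization and vote interpolation that `cv2.HOGDescriptor` performs. It also includes a hypothetical `save_feature_txt` that writes one vector per file in the layout `classify.py` reads: a file name of the form `<class>_<index>.txt` containing one line of space-separated values. With the defaults (`cell=100`, `nbins=9`), a 300x300 image yields 3 x 3 x 9 = 81 values. Whatever extractor you use, the `N` passed to `train_SVM`/`test_SVM` (99 in the article) must equal its output length. Because the values are written as decimals, the reader has to parse them with `float()`, not `int()`.

```python
import os

import numpy as np


def simple_hog(gray, cell=100, nbins=9):
    """Simplified HOG for a 2-D grayscale image.

    One histogram of unsigned gradient orientation (0-180 degrees) per
    non-overlapping cell, weighted by gradient magnitude, followed by a
    single L2 normalization of the whole vector.
    """
    g = gray.astype(np.float64)
    gy, gx = np.gradient(g)  # derivatives along rows (y) and columns (x)
    mag = np.hypot(gx, gy)
    ang = np.degrees(np.arctan2(gy, gx)) % 180.0  # unsigned orientation
    bins = np.minimum((ang / (180.0 / nbins)).astype(int), nbins - 1)
    h, w = g.shape
    hists = []
    for y in range(0, h - cell + 1, cell):
        for x in range(0, w - cell + 1, cell):
            b = bins[y:y + cell, x:x + cell].ravel()
            m = mag[y:y + cell, x:x + cell].ravel()
            hists.append(np.bincount(b, weights=m, minlength=nbins))
    vec = np.concatenate(hists)
    norm = np.linalg.norm(vec)
    return vec / norm if norm > 0 else vec


def save_feature_txt(vec, label, index, out_dir="./feature/"):
    """Write one vector to <out_dir>/<label>_<index>.txt as one space-separated line."""
    os.makedirs(out_dir, exist_ok=True)
    path = os.path.join(out_dir, "{:d}_{:d}.txt".format(label, index))
    with open(path, "w") as f:
        f.write(" ".join("{:.6f}".format(v) for v in vec))
    return path
```

The class number in the file name is what `classify.py` recovers with `int(fileNameStr.split('_')[0])`, so images saved by `dataset.py` as `<count>.png` still need to be sorted by gesture before their features are written.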

classify.py

# -*- coding: utf-8 -*-

# @auth

# 使用SVM对手势方向进行训练, 分类

import cv2

import numpy as np

from os import listdir

from sklearn.svm import SVC

from sklearn.externals import joblib

from sklearn.model_selection import GridSearchCV

train_path = "./feature/"

test_path = "./test_feature/"

model_path = "./model/"

# 将txt文件中的特征转化成向量

def txtToVector(filename, N):

returnVec = np.zeros((1,N))

fr = open(filename)

lineStr = fr.readline()

lineStr = lineStr.split(" ")

for i in range(N):

returnVec[0, i] = int(lineStr[i])

return returnVec

# 训练SVM

def train_SVM(N):

svc = SVC()

parameters = {'kernel':('linear', 'rbf'),

'C':[1, 3, 5, 7, 9, 11, 13, 15, 17, 19],

'gamma':[0.00001, 0.0001, 0.001, 0.1, 1, 10, 100, 1000]}#预设置一些参数值

hwLabels = []#存放类别标签

trainingFileList = listdir(train_path)

m = len(trainingFileList)

trainingMat = np.zeros((m,N))

for i in range(m):

fileNameStr = trainingFileList[i]

classNumber = int(fileNameStr.split('_')[0])

hwLabels.append(classNumber)

trainingMat[i,:] = txtToVector(train_path+fileNameStr, N)#将训练集改为矩阵格式

clf = GridSearchCV(svc, parameters, cv=5, n_jobs=8)#网格搜索法,设置5-折交叉验证

clf.fit(trainingMat, hwLabels)

print(clf.return_train_score)

print(clf.best_params_) #打印出最好的结果

best_model = clf.best_estimator_

print("SVM Model saved")

save_path = model_path + "svm_train_model.m"

joblib.dump(best_model,save_path)#保存最好的模型

# 测试SVM

def test_SVM(clf,N):

testFileList = listdir(train_path)

errorCount = 0#记录错误个数

mTest = len(testFileList)

for i in range(mTest):

fileNameStr = testFileList[i]

classNum = int(fileNameStr.split('_')[0])

vectorTest = txtToVector(train_path+fileNameStr,N)

valTest = clf.predict(vectorTest)

#print("分类返回结果为%d\t真实结果为%d" % (valTest, classNum))

print("file:", fileNameStr,"classNum:", classNum, "Test:", clf.predict(vectorTest))

if valTest != classNum:

errorCount += 1

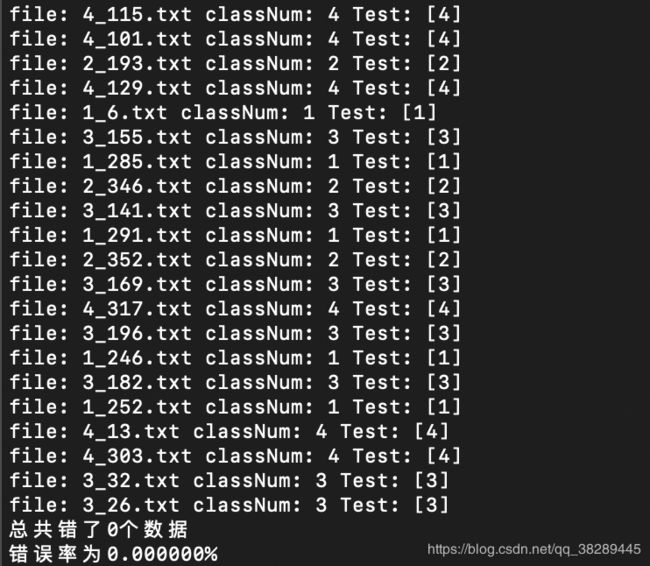

print("总共错了%d个数据\n错误率为%f%%" % (errorCount, errorCount/mTest * 100))

# 载入模型并进行测试

def test_fd(fd_test):

clf = joblib.load(model_path + "svm_train_model.m")

test_svm = clf.predict(fd_test)

return test_svm

if __name__=="__main__":

train_SVM(99)

clf = joblib.load(model_path + "svm_train_model.m")

test_SVM(clf,99)

训练后,结果测试:
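The grid in `train_SVM` crosses every `gamma` value with both kernels, although `gamma` has no effect on the linear kernel, so 70 of the 80 linear candidates repeat the same fit. As a sketch under stated assumptions, `GridSearchCV` also accepts a list of grids, so `gamma` can be searched for the RBF kernel only. The data below is synthetic (four well-separated clusters standing in for the four directions), the grid values are illustrative, and `fit_gesture_svm` is a hypothetical helper. `best_score_` is the mean cross-validated score of the best candidate, and the model is saved and reloaded with `joblib.dump`/`joblib.load` as in the article.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC


def fit_gesture_svm(X, y, cv=5):
    """Grid-search an SVC; gamma is only searched for the RBF kernel."""
    param_grid = [
        {"kernel": ["linear"], "C": [1, 5, 10]},
        {"kernel": ["rbf"], "C": [1, 5, 10], "gamma": [0.001, 0.01, 0.1, 1]},
    ]
    search = GridSearchCV(SVC(), param_grid, cv=cv)
    search.fit(X, y)
    return search


# Synthetic stand-in data: 4 classes, 20 samples each, 8 features
rng = np.random.default_rng(0)
centers = np.eye(4, 8) * 5.0
X = np.vstack([c + rng.normal(scale=0.5, size=(20, 8)) for c in centers])
y = np.repeat(np.arange(4), 20)

search = fit_gesture_svm(X, y)
print(search.best_params_)
print(search.best_score_)  # mean cross-validated accuracy of the best candidate

# Save and reload the best model, as classify.py does
with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "svm_train_model.m")
    joblib.dump(search.best_estimator_, path)
    clf = joblib.load(path)
    pred = clf.predict(X[:1])
```

Splitting the grid keeps the search to 3 + 12 = 15 candidates here instead of the full cross product, which matters with five-fold cross-validation because every candidate is fitted five times.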

III. Training complete

Run myGUI.py.
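The article does not include `myGUI.py`. As a hypothetical sketch of the per-frame classification step: scikit-learn's `predict` expects a 2-D array of shape `(n_samples, n_features)`, so a single 1-D feature vector has to be reshaped to `(1, N)` first, which is also the shape `test_fd` must receive. The `DIRECTIONS` mapping from class numbers to direction names is an assumption; the article does not say which label means which direction.

```python
import numpy as np

# Hypothetical mapping: the article does not state which class number is which direction
DIRECTIONS = {0: "up", 1: "down", 2: "left", 3: "right"}


def predict_direction(clf, feature_vec, directions=DIRECTIONS):
    """Classify one gesture from a 1-D feature vector with a fitted classifier."""
    x = np.asarray(feature_vec, dtype=np.float64).reshape(1, -1)  # (N,) -> (1, N)
    label = int(clf.predict(x)[0])
    return directions.get(label, "unknown")
```

With the model saved by `classify.py`, a call would look like `predict_direction(joblib.load("./model/svm_train_model.m"), features)`, where `features` comes from the same extractor used for training.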

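Demo:

Summary:

1. The training set contains 1417 gesture images in total.

2. Misclassification is more likely when the index finger extends outside the camera view or when the arm appears in the frame.

3. The GUI may lag or freeze; clicking the terminal window with the mouse refreshes the recognition status.

4. Code download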