015 - Computing Website UV with Storm (Deduplication Pattern)

I. Requirements Analysis: UV Counting

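Solution analysis

Would the following work?

1. Put each session_id into a Set, which removes duplicates automatically, and use Set.size() as the UV.

This works in a single thread inside a single JVM. It does not carry over to a high-concurrency, multi-threaded setting: each thread or worker holds its own Set, so the same session_id can end up in several Sets and their sizes cannot simply be added up.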
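The limitation can be reproduced without Storm. The plain-Java sketch below (class and method names are hypothetical) counts UV with one HashSet, then deals the same visits round-robin to two independent Sets, a rough stand-in for parallel bolt tasks, and adds up their sizes. A visitor that lands in both Sets is counted twice.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Sketch: Set-based UV is only correct when a single Set sees every session_id.
final class SetUvDemo {

    // Single thread, single JVM: one Set sees all visits, so size() is the UV.
    static int uv(List<String> sessionIds) {
        Set<String> seen = new HashSet<>(sessionIds);
        return seen.size();
    }

    // Hypothetical split: visits are dealt round-robin to n independent Sets
    // (one per "worker"); adding up their sizes double-counts repeat visitors.
    static int naiveSumOfPartitions(List<String> sessionIds, int n) {
        List<Set<String>> partitions = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            partitions.add(new HashSet<>());
        }
        for (int i = 0; i < sessionIds.size(); i++) {
            partitions.get(i % n).add(sessionIds.get(i));
        }
        int total = 0;
        for (Set<String> p : partitions) {
            total += p.size();
        }
        return total;
    }

    public static void main(String[] args) {
        List<String> visits = Arrays.asList("S00", "S01", "S01", "S03", "S00", "S01");
        System.out.println("UV (single Set): " + uv(visits));                            // 3
        System.out.println("UV (sum of 2 partial Sets): " + naiveSumOfPartitions(visits, 2)); // 4
    }
}
```

With these six sample visits the single Set reports 3, while the two partial Sets add up to 4 because S01 appears in both.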


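2. A workable solution

bolt1 uses fieldsGrouping so that several threads each aggregate part of the data; the next stage, bolt2, runs in a single thread and stores every session_id with its count in a Map; the stage after that, bolt3, iterates over the Map. This yields:

PV, UV, and visit depth (the number of page views per session_id)

(In the code below, bolt1 is UVDeepVisitBolt, preceded by a formatting bolt, UVFmtBolt; bolt2 and bolt3 are combined into UVSumBolt.)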
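The stages can be simulated in one JVM to see why the numbers come out right. In this sketch (class and method names hypothetical, no Storm API used), `partialCounts` routes each `date_sid` key to a task with `Math.floorMod(hashCode, numTasks)`, a simplification of fieldsGrouping rather than Storm's exact hashing, and each task emits its running count for the key. `merge` keeps one value per key; because a single task owns each key, the largest running count is that visitor's total PV, and the number of keys is the UV.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Single-JVM simulation of the pipeline; no Storm classes are used.
final class UvPipelineSketch {

    // Stage 1 (like UVDeepVisitBolt): route each key to one task by hash, a simplified
    // stand-in for fieldsGrouping, and emit that task's running count for the key.
    static List<Map.Entry<String, Integer>> partialCounts(List<String> keys, int numTasks) {
        List<Map<String, Integer>> tasks = new ArrayList<>();
        for (int i = 0; i < numTasks; i++) {
            tasks.add(new HashMap<>());
        }
        List<Map.Entry<String, Integer>> emitted = new ArrayList<>();
        for (String key : keys) {
            Map<String, Integer> task = tasks.get(Math.floorMod(key.hashCode(), numTasks));
            int running = task.merge(key, 1, Integer::sum);
            emitted.add(new AbstractMap.SimpleImmutableEntry<>(key, running));
        }
        return emitted;
    }

    // Stage 2 (single task): keep one value per key; the largest running count
    // is the visitor's total PV because exactly one task owns each key.
    static Map<String, Integer> merge(List<Map.Entry<String, Integer>> emitted) {
        Map<String, Integer> latest = new HashMap<>();
        for (Map.Entry<String, Integer> e : emitted) {
            latest.merge(e.getKey(), e.getValue(), Integer::max);
        }
        return latest;
    }

    // Stage 3: PV is the sum of the per-visitor counts; UV is latest.size().
    static int pv(Map<String, Integer> latest) {
        int pv = 0;
        for (int c : latest.values()) {
            pv += c;
        }
        return pv;
    }

    public static void main(String[] args) {
        List<String> keys = Arrays.asList(
                "2015-10-12_S00", "2015-10-12_S01", "2015-10-12_S01",
                "2015-10-12_S03", "2015-10-12_S00", "2015-10-12_S01");
        Map<String, Integer> latest = merge(partialCounts(keys, 4));
        System.out.println("PV=" + pv(latest) + ", UV=" + latest.size()); // PV=6, UV=3
        System.out.println("visit depth per date_sid: " + latest);
    }
}
```

Keeping the maximum instead of the last value (the original UVSumBolt simply calls `put`) is a choice made here so that the merge does not depend on tuple arrival order; with in-order delivery both give the same result.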

3. The resulting statistics

Date        PV  UV

2015-10-12  6   3

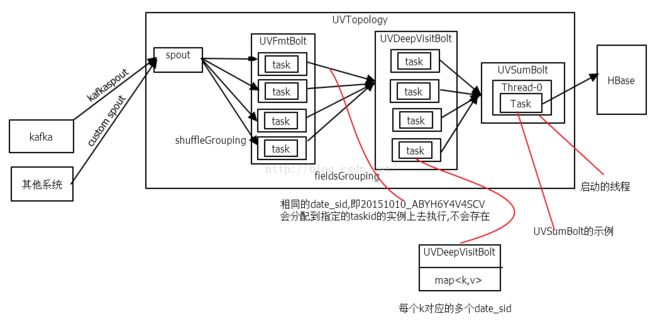

II. Flowchart and Storm Code for Counting UV and PV

(1)

UVTopology

Complete code

package com.yun.storm.uv;

import java.util.HashMap;

import java.util.Map;

import backtype.storm.Config;

import backtype.storm.LocalCluster;

import backtype.storm.topology.TopologyBuilder;

import backtype.storm.tuple.Fields;

public class UVTopology {

public static final String SPOUT_ID = SourceSpout.class.getSimpleName();

public static final String UVFMT_ID = UVFmtBolt.class.getSimpleName();

public static final String UVDEEPVISITBOLT_ID = UVDeepVisitBolt.class.getSimpleName();

public static final String UVSUMBOLT_ID = UVSumBolt.class.getSimpleName();

public static void main(String[] args) {

TopologyBuilder builder = new TopologyBuilder();

builder.setSpout(SPOUT_ID, new SourceSpout(), 1);

// Normalize the raw data to (date, sid), e.g. 2015-10-10 ABYH6Y4V4SCV

builder.setBolt(UVFMT_ID, new UVFmtBolt(), 4).shuffleGrouping(SPOUT_ID);

// Per-task partial counts: page views of each visitor per day, e.g. 2015-10-10_ABYH6Y4V4SCV 4
// fieldsGrouping on (date, sid) sends all tuples of one visitor on one day to the same task

builder.setBolt(UVDEEPVISITBOLT_ID, new UVDeepVisitBolt(), 4).fieldsGrouping(UVFMT_ID,

new Fields("date", "sid"));

// Single-threaded final aggregation

builder.setBolt(UVSUMBOLT_ID, new UVSumBolt(), 1).shuffleGrouping(UVDEEPVISITBOLT_ID);

Map<String, Object> conf = new HashMap<String, Object>();

conf.put(Config.TOPOLOGY_RECEIVER_BUFFER_SIZE, 8);

conf.put(Config.TOPOLOGY_TRANSFER_BUFFER_SIZE, 32);

conf.put(Config.TOPOLOGY_EXECUTOR_RECEIVE_BUFFER_SIZE, 16384);

conf.put(Config.TOPOLOGY_EXECUTOR_SEND_BUFFER_SIZE, 16384);

LocalCluster cluster = new LocalCluster();

cluster.submitTopology(UVTopology.class.getSimpleName(), conf, builder.createTopology());

}

}

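/**

(1) Symptom:

While doing real-time data analysis with Storm (open-sourced by Twitter), the topology failed at initialization with a serialization error:

java.lang.RuntimeException: java.io.NotSerializableException

(2) Cause: this follows from how Storm works. The spout and bolt objects are created in the program that submits the topology, serialized, and shipped to the worker processes; only there, after deserialization, is the bolt's prepare() method called.

So delivering the bolt to a worker involves serialization, and any non-serializable object the bolt already holds at that point triggers the error.

(3) Solution:

Do not create non-serializable objects such as DateTimeFormatter or HBaseUtils instances when the bolt is constructed (in field initializers or the constructor); move their initialization into prepare().

For details see: http://www.xuebuyuan.com/616276.html

*/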
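The failure and its fix can be reproduced with plain Java serialization, which Storm uses to ship spout and bolt objects to the workers. In the sketch below, `FakeHBaseClient` is a hypothetical stand-in for a non-serializable helper such as HBaseUtils, and `EagerBolt`/`LazyBolt` are hypothetical classes, not real Storm bolts: the first creates its helper in a field initializer, the second only in `prepare()`.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.NotSerializableException;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical non-serializable helper (stands in for HBaseUtils, a DB connection, ...).
class FakeHBaseClient {
    String describe() {
        return "connected";
    }
}

// Helper created eagerly: serializing the bolt drags the helper along and fails.
class EagerBolt implements Serializable {
    private static final long serialVersionUID = 1L;
    FakeHBaseClient client = new FakeHBaseClient();
}

// Helper created in prepare(), i.e. after the bolt has been deserialized on the worker.
class LazyBolt implements Serializable {
    private static final long serialVersionUID = 1L;
    transient FakeHBaseClient client;

    void prepare() {
        client = new FakeHBaseClient();
    }
}

final class SerializationDemo {
    // Returns true if Java serialization of obj succeeds.
    static boolean serializes(Object obj) {
        try (ObjectOutputStream out = new ObjectOutputStream(new ByteArrayOutputStream())) {
            out.writeObject(obj);
            return true;
        } catch (NotSerializableException e) {
            return false;
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("EagerBolt serializable: " + serializes(new EagerBolt())); // false
        LazyBolt bolt = new LazyBolt();
        System.out.println("LazyBolt serializable: " + serializes(bolt));             // true
        bolt.prepare(); // on a real worker this runs after deserialization
        System.out.println(bolt.client.describe());                                  // connected
    }
}
```

Marking the field `transient` is not strictly required, since a field that is still null at submission time serializes fine (as with `hbase` in UVSumBolt), but it documents that the object is meant to be created on the worker.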

(2)

SourceSpout: simulated data source

package com.yun.storm.uv;

import java.util.Map;

import java.util.Random;

import java.util.concurrent.ConcurrentLinkedQueue;

import backtype.storm.spout.SpoutOutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichSpout;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Values;

/**

* Simulates the data produced by a message queue

*

* @author shenfl

*

*/

public class SourceSpout extends BaseRichSpout {

ConcurrentLinkedQueue<String> queue = new ConcurrentLinkedQueue<String>();// lock-free, thread-safe queue

private SpoutOutputCollector collector;

/**

*

*/

private static final long serialVersionUID = 1L;

/**

* Called when a task for this component is initialized within a worker on the cluster.

* It provides the spout with the environment in which the spout executes.

*

* This includes the:

*

* @param conf The Storm configuration for this spout. This is the configuration provided to the topology merged in with cluster configuration on this machine.

* @param context This object can be used to get information about this task's place within the topology, including the task id and component id of this task, input and output information, etc.

* @param collector The collector is used to emit tuples from this spout. Tuples can be emitted at any time, including the open and close methods. The collector is thread-safe and should be saved as an instance variable of this spout object.

*/

@Override

public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {

String[] time = { "2015-10-12 08:40:50", "2015-10-12 08:40:50", "2015-10-12 08:40:50","2015-10-12 08:40:50" };

String[] sid = { "ABYH6Y4V4SCV00", "ABYH6Y4V4SCV01", "ABYH6Y4V4SCV01","ABYH6Y4V4SCV03" };

String[] url = { "http://www.jd.com/1.html", "http://www.jd.com/2.html", "http://www.jd.com/3.html" ,"http://www.jd.com/3.html"};

Random r = new Random();// one generator for the whole loop

for (int i = 0; i < 6; i++) {

int k = r.nextInt(4);

queue.add(time[k] + "\t" + sid[k] + "\t" + url[k]);

}

this.collector = collector;

}

/**

* Continuously reads data from the source; the Thread.sleep(1000) below is commented out, so reads are not throttled

*/

@Override

public void nextTuple() {

String line = queue.poll();// returns null once the queue is empty

if (line != null) {

this.collector.emit(new Values(line));

}

try {

//Thread.sleep(1000);// uncomment to throttle emission

} catch (Exception e) {

e.printStackTrace();

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("line"));

}

}
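Because SourceSpout picks its six lines at random, the expected result varies between runs: PV is always 6, while UV can be anywhere from 1 to 3, since `sid[1]` and `sid[2]` are both ABYH6Y4V4SCV01. The sketch below regenerates the same kind of data offline with a fixed seed (the seed, class, and method names are assumptions, not part of the original) so the topology's output can be checked against a known answer; seeding the spout's Random the same way would make both sides agree.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Random;
import java.util.Set;

// Offline check for the simulated spout data: same sample arrays as SourceSpout.open(),
// but with a fixed seed (an assumption) so that expected PV/UV are reproducible.
final class SpoutDataCheck {
    static final String[] TIME = {"2015-10-12 08:40:50", "2015-10-12 08:40:50",
            "2015-10-12 08:40:50", "2015-10-12 08:40:50"};
    static final String[] SID = {"ABYH6Y4V4SCV00", "ABYH6Y4V4SCV01",
            "ABYH6Y4V4SCV01", "ABYH6Y4V4SCV03"};
    static final String[] URL = {"http://www.jd.com/1.html", "http://www.jd.com/2.html",
            "http://www.jd.com/3.html", "http://www.jd.com/3.html"};

    // Generates n tab-separated lines, picking one of the four samples each time.
    static List<String> generate(int n, long seed) {
        Random r = new Random(seed);
        List<String> lines = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            int k = r.nextInt(SID.length);
            lines.add(TIME[k] + "\t" + SID[k] + "\t" + URL[k]);
        }
        return lines;
    }

    // Expected UV: the number of distinct session ids among the lines.
    static int expectedUv(List<String> lines) {
        Set<String> sids = new HashSet<>();
        for (String line : lines) {
            sids.add(line.split("\t")[1]);
        }
        return sids.size();
    }

    public static void main(String[] args) {
        List<String> lines = generate(6, 42L);
        System.out.println("expected PV=" + lines.size() + ", expected UV=" + expectedUv(lines));
    }
}
```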

(3) UVFmtBolt: the first-level bolt, which converts the data format

package com.yun.storm.uv;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.Map;

import backtype.storm.task.OutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichBolt;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Tuple;

import backtype.storm.tuple.Values;

/**

* Parses each raw line into (date, sid)

* @author shenfl

*

*/

public class UVFmtBolt extends BaseRichBolt {

/**

*

*/

private static final long serialVersionUID = 1L;

OutputCollector collector;

@Override

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

@Override

public void execute(Tuple input) {

// Input line, fields separated by \t: 2014-01-07 08:40:50 ABYH6Y4V4SCV http://www.jd.com/1.html

String line = input.getStringByField("line");

String[] fields = line.split("\t");

String date = fields[0];

String sid = fields[1];

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");

try {

// parse() consumes only the leading yyyy-MM-dd part, so the time of day is dropped

date = sdf.format(sdf.parse(date));

this.collector.emit(new Values(date,sid));

this.collector.ack(input);

} catch (Exception e) {

e.printStackTrace();

this.collector.fail(input);

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("date","sid"));

}

}

(4) UVDeepVisitBolt: the second-level bolt, which counts each visitor's PV in parallel across tasks

package com.yun.storm.uv;

import java.util.HashMap;

import java.util.Map;

import backtype.storm.task.OutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichBolt;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Tuple;

import backtype.storm.tuple.Values;

/**

* Multi-threaded partial aggregation: counts each visitor's page views per day

*

* @author shenfl

*

*/

public class UVDeepVisitBolt extends BaseRichBolt {

/**

*

*/

private static final long serialVersionUID = 1L;

OutputCollector collector;

Map<String, Integer> counts = new HashMap<String, Integer>();// per task instance: key = date_sid, value = that visitor's PV so far

@Override

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

/**

* Input: (date, sid) tuples, e.g. (2014-01-07, ABYH6Y4V4SCV), (2014-01-07, ABYH6Y4V4SCV), (2014-01-07, ACYH6Y4V4SCV)

*/

@Override

public void execute(Tuple input) {

String date = input.getStringByField("date");

String sid = input.getStringByField("sid");

String key = date + "_" + sid;

Integer count = 0;

try {

count = counts.get(key);

if (count == null) {

count = 0;

}

count++;

counts.put(key, count);

this.collector.emit(new Values(key, count));// running PV of this visitor (key = date_sid)

this.collector.ack(input);

} catch (Exception e) {

e.printStackTrace();

System.err.println("UVDeepVisitBolt failed. date:" + date + ", sid:" + sid + ", count:" + count);

this.collector.fail(input);

}

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("date_sid", "count"));

}

}
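UVSumBolt overwrites the stored count for each `date_sid`, which is only correct if one task sees every tuple of that visitor. The sketch below (hypothetical names; both routing rules are simplified stand-ins, not Storm's implementation) runs the per-task running counts followed by that overwrite merge, once with key-based routing like fieldsGrouping and once with round-robin routing as a rough model of shuffleGrouping.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Sketch: why UVDeepVisitBolt needs fieldsGrouping on (date, sid).
final class GroupingDemo {

    // Per-task running counts, then UVSumBolt-style "overwrite with newest count";
    // returns the resulting total PV.
    static int pvAfterMerge(List<String> keys, int numTasks, boolean byKey) {
        List<Map<String, Integer>> tasks = new ArrayList<>();
        for (int i = 0; i < numTasks; i++) {
            tasks.add(new HashMap<>());
        }
        Map<String, Integer> latest = new HashMap<>();
        for (int i = 0; i < keys.size(); i++) {
            String key = keys.get(i);
            // byKey: same key -> same task (like fieldsGrouping);
            // otherwise round-robin (a rough model of shuffleGrouping).
            int t = byKey ? Math.floorMod(key.hashCode(), numTasks) : i % numTasks;
            int running = tasks.get(t).merge(key, 1, Integer::sum);
            latest.put(key, running); // overwrite, as UVSumBolt does
        }
        int pv = 0;
        for (int c : latest.values()) {
            pv += c;
        }
        return pv;
    }

    public static void main(String[] args) {
        List<String> keys = Arrays.asList("d_S00", "d_S01", "d_S01", "d_S03", "d_S00", "d_S01");
        System.out.println("key routing PV: " + pvAfterMerge(keys, 2, true));          // 6
        System.out.println("round-robin routing PV: " + pvAfterMerge(keys, 2, false)); // 5
    }
}
```

With key routing the six sample visits give PV 6. With round-robin, visitor S01's three views are split across both tasks, the last running count received is 2, and PV drops to 5. UV (the number of keys) is 3 in both runs, but PV and visit depth are wrong without fieldsGrouping.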

(5)

UVSumBolt: the third-level bolt, which aggregates the PV and UV data in a single thread and saves them to HBase

package com.yun.storm.uv;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.HashMap;

import java.util.Map;

import com.yun.hbase.HBaseUtils;

import backtype.storm.task.OutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.topology.base.BaseRichBolt;

import backtype.storm.tuple.Tuple;

/**

* Single-threaded aggregation of the final result

*

* @author shenfl

*

*/

public class UVSumBolt extends BaseRichBolt {

SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");

/**

*

*/

private static final long serialVersionUID = 1L;

OutputCollector collector;

HBaseUtils hbase;

Map<String, Integer> counts = new HashMap<String, Integer>();

// key: date_sid, value: that visitor's PV

@Override

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

this.hbase = new HBaseUtils();

}

// Initializing HBaseUtils here, as a field initializer, is wrong; it is initialized in prepare() instead. For the reason see http://www.xuebuyuan.com/616276.html

//HBaseUtils hbase = new HBaseUtils();

@Override

public void execute(Tuple input) {

int pv = 0;

int uv = 0;

String dateSid = input.getStringByField("date_sid");

Integer count = input.getIntegerByField("count");

// If the access date is not today and lies after the current date, a new day has begun: clear the counts and start the new day's UV

String currDate = sdf.format(new Date());

try {

Date accessDate = sdf.parse(dateSid.split("_")[0]);

if (!dateSid.startsWith(currDate) && accessDate.after(new Date())) {

counts.clear();

}

} catch (ParseException e1) {

e1.printStackTrace();

}

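counts.put(dateSid, count);// latest running PV of this visitor; the Map (or HBase) acts as the deduplicating store

for (Map.Entry<String, Integer> e : counts.entrySet()) {

if (e.getKey().startsWith(currDate)) {// check each entry's own date, not the current tuple's

uv++;

pv += e.getValue();

}

}

// Save to HBase or another database

System.out.println("PV for " + currDate + ": " + pv + ", UV: " + uv);

put(pv, uv, currDate);

this.collector.ack(input);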

}

/**

* Saves the PV and UV to HBase, updating them in real time

* @param pv

* @param uv

* @param currDate

*/

public void put(int pv, int uv, String currDate) {

hbase.put("pv_uv", currDate, "info", "pv", String.valueOf(pv));

hbase.put("pv_uv", currDate, "info", "uv", String.valueOf(uv));

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

}

}

hbase(main):014:0> scan 'pv_uv'

ROW COLUMN+CELL

2015-10-12 column=info:pv, timestamp=1444633152008, value=6

2015-10-12 column=info:uv, timestamp=1444633152012, value=3

The result shows that for 2015-10-12 the PV is 6 and the UV is 3.
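UVSumBolt reports totals only for the server's current date (`currDate`), so tuples carrying another date never reach the `pv_uv` table. One possible variation, sketched below with hypothetical names, derives a `date pv uv` row for every date present in the `date_sid -> count` map. The per-visitor counts in `main` (2, 3 and 1 page views, plus one visit on 2015-10-13) are made-up inputs chosen to match the 6/3 totals above, not measured data.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Sketch: one "date pv uv" row per date, derived from UVSumBolt's date_sid -> count map.
final class DailyRollup {

    // Returns date -> {pv, uv}; keys are assumed to look like "2015-10-12_SID".
    static Map<String, int[]> rollup(Map<String, Integer> counts) {
        Map<String, int[]> byDate = new TreeMap<>(); // sorted by date string
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            String date = e.getKey().substring(0, e.getKey().indexOf('_'));
            int[] row = byDate.computeIfAbsent(date, d -> new int[2]);
            row[0] += e.getValue(); // PV: sum of per-visitor page views
            row[1] += 1;            // UV: one map entry per distinct visitor
        }
        return byDate;
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = new HashMap<>(); // illustrative input only
        counts.put("2015-10-12_ABYH6Y4V4SCV00", 2);
        counts.put("2015-10-12_ABYH6Y4V4SCV01", 3);
        counts.put("2015-10-12_ABYH6Y4V4SCV03", 1);
        counts.put("2015-10-13_ABYH6Y4V4SCV00", 1);
        for (Map.Entry<String, int[]> row : rollup(counts).entrySet()) {
            System.out.println(row.getKey() + " " + row.getValue()[0] + " " + row.getValue()[1]);
        }
        // prints "2015-10-12 6 3" then "2015-10-13 1 1"
    }
}
```

Each row could then be written with the existing `put(pv, uv, date)` method instead of always using `currDate`.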