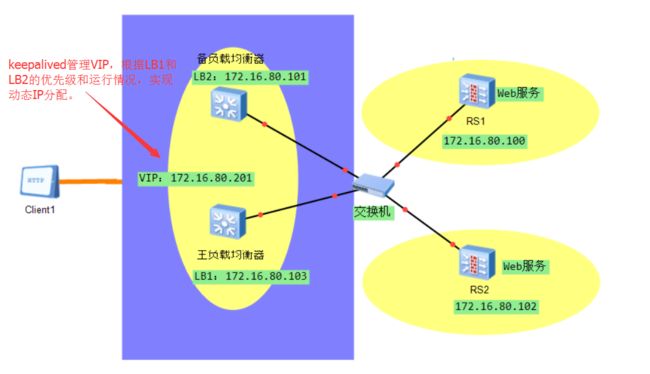

In the topology above, LB1 and LB2 are two Nginx reverse proxies, and RS1 and RS2 behind them are two web servers.

Next, we use keepalived to build a highly available Nginx reverse-proxy cluster.

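How keepalived achieves this:

While LB1 and LB2 are both running normally, the VIP 172.16.80.201 is assigned to LB1, the node with the higher priority, so LB1 acts as the reverse proxy for user requests.

If LB1 fails, keepalived moves ("floats") the VIP to LB2, which takes over the reverse-proxy role and keeps the service running. With the configuration in this article, "fails" means that LB1 stops sending VRRP advertisements, for example because the host is down or keepalived has stopped; a crash of the Nginx process alone does not trigger a failover.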
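The election rule described above can be sketched as a small Python model. It is a simplification under stated assumptions, not keepalived's implementation: the live node with the highest priority holds the VIP, while VRRP timers, preemption settings and tie-breaking are ignored. `Node` and `vip_owner` are hypothetical names; the priorities 150 and 100 come from the keepalived configurations used later in this article.

```python
from dataclasses import dataclass
from typing import List, Optional

VIP = "172.16.80.201"


@dataclass
class Node:
    """One keepalived node in this simplified (hypothetical) model."""
    name: str
    priority: int
    alive: bool = True  # False once the node stops sending VRRP adverts


def vip_owner(nodes: List[Node]) -> Optional[str]:
    """Return the name of the live node with the highest priority, or None.

    Simplification: real VRRP breaks priority ties by the higher primary
    IP address and applies timers; both are ignored here.
    """
    live = [n for n in nodes if n.alive]
    if not live:
        return None
    return max(live, key=lambda n: n.priority).name


if __name__ == "__main__":
    lb1, lb2 = Node("LB1", 150), Node("LB2", 100)
    cluster = [lb1, lb2]
    print(VIP, "->", vip_owner(cluster))  # 172.16.80.201 -> LB1
    lb1.alive = False                      # simulate shutting LB1 down
    print(VIP, "->", vip_owner(cluster))  # 172.16.80.201 -> LB2
```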

RS1 and RS2 configuration

1. Install Nginx

[root@RS1 ~]#yum install nginx -y

[root@RS2 ~]#yum install nginx -y

2. Create the test page, then start Nginx on RS1 and RS2

[root@RS1 ~]#vim /usr/share/nginx/html/index.html

RS1 172.16.80.100

[root@RS2 ~]#vim /usr/share/nginx/html/index.html

RS2 172.16.80.102

[root@RS1 ~]#systemctl start nginx

[root@RS2 ~]#systemctl start nginx

Accessing RS1 and RS2 directly from a client returns each server's own test page:

LB1 and LB2 configuration

1. Nginx load-balancing configuration on LB1 and LB2

[root@LB1 nginx]#vim /etc/nginx/nginx.conf

```nginx
worker_processes 4;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    server_tokens off;
    sendfile on;
    keepalive_timeout 65;

    # Back-end pool: RS1 and RS2 with equal weight
    upstream www.server.pools {
        server 172.16.80.100:80 weight=1;
        server 172.16.80.102:80 weight=1;
    }

    server {
        listen 80;
        server_name www.keepalived.com;

        location / {
            proxy_pass http://www.server.pools;
            # Pass the original Host header and the client IP to the back end
            proxy_set_header Host $host;
            proxy_set_header X-Forwarded-For $remote_addr;
        }
    }
}
```
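With `weight=1` on both servers, Nginx's default round-robin balancer alternates between RS1 and RS2. The sketch below models the smooth weighted round-robin scheme that Nginx's round-robin module is commonly described as using; it is a simplified illustration, not Nginx's code: failures, `max_fails` and per-worker state are not modelled, and `smooth_wrr` is a hypothetical name.

```python
from typing import Dict, List


def smooth_wrr(weights: Dict[str, int], n: int) -> List[str]:
    """Return the first n picks of smooth weighted round-robin.

    weights maps a server name to its configured weight.
    """
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        # Every server gains its weight...
        for name, weight in weights.items():
            current[name] += weight
        # ...the largest current value wins (the first one on a tie)...
        best = max(current, key=current.get)
        # ...and the winner pays back the total weight.
        current[best] -= total
        picks.append(best)
    return picks


if __name__ == "__main__":
    pool = {"172.16.80.100:80": 1, "172.16.80.102:80": 1}
    print(smooth_wrr(pool, 4))
    # ['172.16.80.100:80', '172.16.80.102:80', '172.16.80.100:80', '172.16.80.102:80']
```

With unequal weights such as 2:1, the same scheme spreads picks out (A, B, A) instead of sending consecutive requests to the heavier server.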

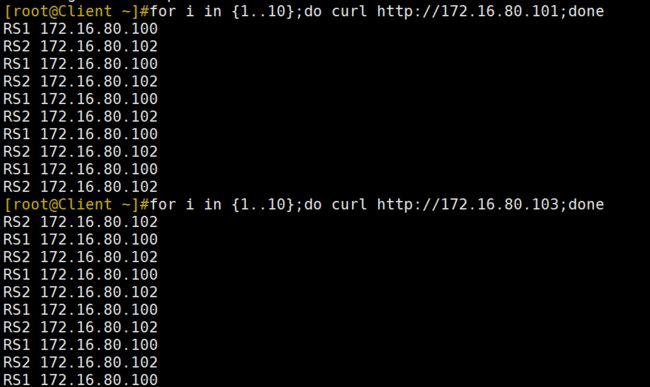

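LB2 uses the same Nginx configuration as LB1. After editing, restart Nginx on both (systemctl restart nginx). When the client accesses the two load balancers' own addresses, 172.16.80.101 and 172.16.80.103, you can see that each LB load-balances requests across the back end as a reverse proxy: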

2. High availability for LB1 and LB2 with keepalived

Install keepalived

[root@LB1 ~]#yum install keepalived ipvsadm -y

[root@LB2 ~]#yum install keepalived ipvsadm -y

Configure the VRRP instance, with LB1 as MASTER and LB2 as BACKUP.

LB1 configuration:

[root@LB1 ~]#vim /etc/keepalived/keepalived.conf

```
! Configuration File for keepalived

global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keadmin@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id CentOS7A.luo.com
    vrrp_mcast_group4 224.0.0.22
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 150
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass haha
    }
    virtual_ipaddress {
        172.16.80.201/16
    }
}
```
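`advert_int` and `priority` also determine how quickly a backup takes over. Assuming keepalived follows the VRRPv2 timers of RFC 3768 (VRRP version 2 is keepalived's default), a backup declares the master dead after Master_Down_Interval = 3 × advert_int + (256 - priority) / 256 seconds without advertisements. The sketch below only evaluates that formula; `master_down_interval` is a hypothetical name, and the real interruption seen by clients also includes the time for the network to learn the VIP's new location.

```python
def master_down_interval(advert_int: float, priority: int) -> float:
    """Seconds a BACKUP waits without hearing the MASTER before taking over.

    VRRPv2 timers (RFC 3768):
        Skew_Time            = (256 - Priority) / 256
        Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time
    """
    if not 1 <= priority <= 254:
        raise ValueError("a backup's priority must be between 1 and 254")
    skew_time = (256 - priority) / 256
    return 3 * advert_int + skew_time


if __name__ == "__main__":
    # The BACKUP node LB2 in this article: advert_int 1, priority 100
    print(master_down_interval(1, 100))  # 3.609375
```

The skew term makes a higher-priority backup time out slightly sooner, so with several backups the best one normally claims the VIP first.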

LB2 configuration:

[root@LB2 ~]#vim /etc/keepalived/keepalived.conf

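```
! Configuration File for keepalived

# Start of the global settings section
global_defs {
    notification_email {
        # Recipient of failure notification emails
        root@localhost
    }
    # Sender address of failure notification emails
    notification_email_from keadmin@localhost
    # SMTP server used to send the notification emails
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    # Identifier of this node (used, e.g., in notification emails)
    router_id CentOS7B.luo.com
    # Multicast group for VRRP advertisements; must match LB1
    vrrp_mcast_group4 224.0.0.22
}

# Instance name VI_1, kept the same as on the master for clarity;
# the two nodes are actually paired by virtual_router_id
vrrp_instance VI_1 {
    # Initial state: backup
    state BACKUP
    # Interface used for VRRP communication
    interface ens33
    # Virtual router ID; must be identical on LB1 and LB2
    virtual_router_id 51
    # Priority; lower than LB1's 150, so LB1 wins the election
    priority 100
    # Advertisement interval, in seconds
    advert_int 1
    authentication {
        # Authentication type
        auth_type PASS
        # Authentication password; must match LB1
        auth_pass haha
    }
    virtual_ipaddress {
        # Virtual IP
        172.16.80.201/16
    }
}
```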
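Because the two files must agree on some values (`virtual_router_id`, `auth_pass`, the VIP) and differ on others (`priority`), a quick pre-flight check can catch copy-paste mistakes. The following is a hypothetical helper, not part of keepalived: it uses simple regular expressions, assumes one `vrrp_instance` per file and ignores `include` directives.

```python
import re
from typing import Dict, List, Optional


def _value(conf: str, key: str) -> Optional[str]:
    """First value of `key` at the start of a line (comment lines never match)."""
    m = re.search(rf"^\s*{key}\s+(\S+)", conf, re.MULTILINE)
    return m.group(1) if m else None


def vrrp_params(conf: str) -> Dict[str, Optional[str]]:
    """Pull a few vrrp_instance settings out of keepalived.conf text."""
    block = re.search(r"virtual_ipaddress\s*\{([^}]*)\}", conf)
    addrs = []
    if block:
        for line in block.group(1).splitlines():
            line = line.strip()
            if line and not line.startswith(("#", "!")):
                addrs.append(line.split()[0])
    return {
        "virtual_router_id": _value(conf, "virtual_router_id"),
        "auth_pass": _value(conf, "auth_pass"),
        "priority": _value(conf, "priority"),
        "vip": " ".join(addrs) or None,
    }


def check_pair(master_conf: str, backup_conf: str) -> List[str]:
    """Return a list of problems; an empty list means the pair looks consistent."""
    m, b = vrrp_params(master_conf), vrrp_params(backup_conf)
    problems = []
    for key in ("virtual_router_id", "auth_pass", "vip"):
        if m[key] != b[key]:
            problems.append(f"{key} differs: {m[key]} vs {b[key]}")
    if m["priority"] is None or b["priority"] is None:
        problems.append("priority missing")
    elif int(m["priority"]) <= int(b["priority"]):
        problems.append("MASTER priority should be higher than BACKUP priority")
    return problems
```

For example, after copying both files to one machine (the file names are hypothetical), `check_pair(open("lb1.conf").read(), open("lb2.conf").read())` should return an empty list for the two configurations above.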

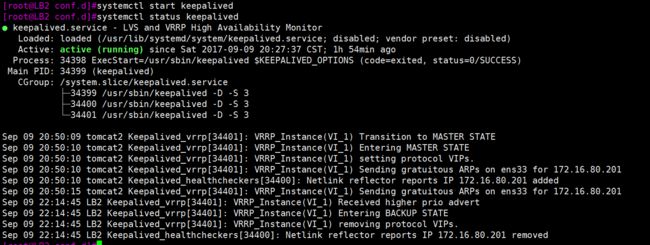

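After configuring, start keepalived on LB1 and LB2:

[root@LB1 ~]#systemctl start keepalived

[root@LB2 ~]#systemctl start keepalived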

As the figure above shows, LB1 has successfully acquired the VIP 172.16.80.201.
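The same check can be scripted instead of read off a screenshot. This is a minimal sketch assuming the iproute2 `ip` command is available and the interface is `ens33` as in the configuration; `has_vip` and `node_holds_vip` are hypothetical names.

```python
import subprocess


def has_vip(ip_addr_output: str, vip: str) -> bool:
    """True if `vip` appears on an "inet" line of `ip addr` output."""
    for line in ip_addr_output.splitlines():
        fields = line.split()
        # IPv4 address lines look like: inet <address>/<prefix> ...
        if len(fields) >= 2 and fields[0] == "inet" and fields[1].split("/")[0] == vip:
            return True
    return False


def node_holds_vip(vip: str = "172.16.80.201", dev: str = "ens33") -> bool:
    """Run `ip -4 addr show dev <dev>` on this host and look for the VIP."""
    out = subprocess.run(
        ["ip", "-4", "addr", "show", "dev", dev],
        capture_output=True, text=True, check=True,
    ).stdout
    return has_vip(out, vip)
```

Run on LB1, `node_holds_vip()` should return True while LB1 is MASTER; on LB2 it should return True only after a failover.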

Verification

When the client accesses http://172.16.80.201, requests are distributed between RS1 and RS2 in round-robin fashion.
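To check the distribution with more than a few browser refreshes, the client can request the VIP repeatedly and count which test page came back. A minimal sketch using only the Python standard library; `fetch` and `tally` are hypothetical names, and the getter is injectable so the counting logic can be exercised without a network.

```python
from collections import Counter
from typing import Callable
from urllib.request import urlopen


def fetch(url: str, timeout: float = 2.0) -> str:
    """Return the body of `url` as stripped text."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace").strip()


def tally(url: str, n: int, get: Callable[[str], str] = fetch) -> Counter:
    """Request `url` n times and count how often each distinct page came back."""
    return Counter(get(url) for _ in range(n))
```

Running `tally("http://172.16.80.201/", 10)` from the client should show both pages, "RS1 172.16.80.100" and "RS2 172.16.80.102", in roughly equal numbers. Because `worker_processes` is 4 and the upstream block defines no shared `zone`, each Nginx worker keeps its own round-robin state, so the split is not guaranteed to be exactly even.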

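After LB1 is shut down, keepalived moves the VIP to LB2. When the client accesses http://172.16.80.201 again, requests are still distributed across the back-end RS servers in round-robin fashion, so apart from a brief interruption of a few seconds while LB2 takes over, users do not notice LB1's failure.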
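The length of that interruption can be estimated from the client by probing the VIP at a fixed interval while LB1 is shut down. A minimal sketch assuming only the Python standard library; `probe` and `longest_outage` are hypothetical names, and the result has only probe-interval resolution (time spent waiting inside a probe is not counted).

```python
import http.client
import time
from typing import Callable
from urllib.request import urlopen


def probe(url: str, timeout: float = 1.0) -> bool:
    """True if `url` answered with a successful HTTP response."""
    try:
        with urlopen(url, timeout=timeout):
            return True
    except (OSError, http.client.HTTPException):
        # URLError/HTTPError are OSError subclasses; timeouts and resets too
        return False


def longest_outage(get: Callable[[], bool], probes: int, interval: float = 0.5,
                   sleep: Callable[[float], None] = time.sleep) -> float:
    """Probe `probes` times, `interval` seconds apart, and return the longest
    run of consecutive failures converted to seconds."""
    longest = run = 0
    for _ in range(probes):
        if get():
            run = 0
        else:
            run += 1
            longest = max(longest, run)
        sleep(interval)
    return longest * interval
```

For example, start `longest_outage(lambda: probe("http://172.16.80.201/"), probes=60)` on the client and shut LB1 down while it runs; a gap of a few seconds is expected, since LB2 only takes over after missing several VRRP advertisements.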