TensorFlow (9): Installing the OpenVINO™ toolkit for Linux on Xubuntu 18.04, installing the driver, Intel CPU and GPU runs looking about the same, then running the demo recognition programs

Contents

- Preface

- 1. About OpenVINO

- 2. Download

- 3. Running the demos

- 4. Summary

Preface

All posts in the TensorFlow category:

https://blog.csdn.net/freewebsys/category_6872378.html

The original link for this article is:

https://blog.csdn.net/freewebsys/article/details/105321790

Do not repost without the author's permission.

Author's blog: http://blog.csdn.net/freewebsys

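1. About OpenVINO

The Intel® Distribution of OpenVINO™ toolkit quickly deploys applications and solutions that emulate human vision. Based on convolutional neural networks (CNNs), the toolkit extends computer vision (CV) workloads across Intel® hardware to maximize performance. The Intel® Distribution of OpenVINO™ toolkit includes the Intel® Deep Learning Deployment Toolkit (Intel® DLDT).

The Intel® Distribution of OpenVINO™ toolkit for Linux*:

- Enables CNN-based deep learning inference at the edge

- Supports heterogeneous execution across Intel® CPUs, Intel® integrated graphics, the Intel® Movidius™ Neural Compute Stick, the Intel® Neural Compute Stick 2, and Intel® Vision Accelerator Design with Intel® Movidius™ VPUs

- Shortens time to market through an easy-to-use library of computer vision functions and pre-optimized kernels

- Includes optimized calls for computer vision standards, including OpenCV* and OpenCL™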

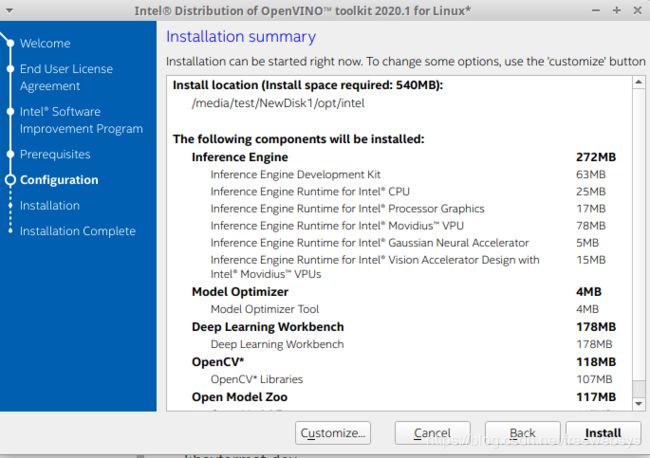

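Components included in the installation package

The following components are installed by default:

| Component | Description |
| --- | --- |
| Model Optimizer | This tool imports, converts, and optimizes models trained in popular frameworks into a format usable by Intel tools, especially the Inference Engine. Popular frameworks include Caffe*, TensorFlow*, MXNet*, and ONNX*. |
| Inference Engine | The engine that runs the deep learning model. It includes a set of libraries for easily integrating inference into your applications. |
| Drivers and runtimes for OpenCL™ version 2.1 | Enables OpenCL on Intel® processors or Intel® Processor Graphics |
| Intel® Media SDK | Provides access to hardware-accelerated video codecs and frame processing |
| OpenCV | OpenCV* community version compiled for Intel® hardware |
| Sample applications | A set of simple console applications demonstrating how to use the Inference Engine in your applications |
| Demos | A set of console applications demonstrating how to use the Inference Engine in your applications for specific use cases |
| Additional tools | A set of tools for working with models |
| Documentation for pre-trained models | Documentation for the pre-trained models is available in the Open Model Zoo repository |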

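Development and target platforms

The development and target platforms have the same requirements, but during installation you can select different components depending on your intended use.

Hardware

- 6th to 10th generation Intel® Core™ processors

- Intel® Xeon® v5 family

- Intel® Xeon® v6 family

- Intel® Pentium® processors N4200/5, N3350/5, N3450/5 with Intel® HD Graphics

- Intel® Movidius™ Neural Compute Stick

- Intel® Neural Compute Stick 2

- Intel® Vision Accelerator Design with Intel® Movidius™ VPUs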

https://docs.openvinotoolkit.org/cn/index.html

2. Download

https://software.intel.com/en-us/openvino-toolkit/choose-download

https://software.intel.com/en-us/openvino-toolkit/choose-download/free-download-linux

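Fill in the required registration information and the download page appears.

The nice part is that the toolkit has finally reached a regular release, 2020.1. Earlier releases were always named R1, R3, and so on.

Extract the archive and start the installer: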

ls -lh

l_openvino_toolkit_p_2020.1.023.tgz

tar -zxvf l_openvino_toolkit_p_2020.1.023.tgz

cd l_openvino_toolkit_p_2020.1.023

sh install_GUI.sh

Installation with the graphical installer:

libswscale-dev

libavcodec-dev

libavformat-dev

Intel® Graphics Compute Runtime for OpenCL™ Driver is missing but you will be prompted to install later

You will be prompted later to install the required Intel® Graphics Compute Runtime for OpenCL™ Driver For applications that offload computation to your Intel® GPU, the Intel® Graphics Compute Runtime for OpenCL™ Driver package for Linux is required.

Documentation for the steps after installation (external dependencies):

https://docs.openvinotoolkit.org/2020.1/_docs_install_guides_installing_openvino_linux.html#install-external-dependencies

The installation directory shows that OpenCV was installed as well:

$ ls

bin data_processing deployment_tools documentation inference_engine install_dependencies licensing opencv openvino_toolkit_uninstaller python
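The listing above can also be checked programmatically. The following is a minimal sketch, not part of the toolkit: the function name `missing_components` and the `EXPECTED` set are assumptions for illustration, with the directory names copied from the `ls` output in this post.

```python
import os
import tempfile

# Directory names copied from the `ls` output above (assumption: a default
# 2020.1 install contains at least these entries).
EXPECTED = {"bin", "deployment_tools", "inference_engine",
            "install_dependencies", "opencv", "python"}


def missing_components(install_dir, expected=EXPECTED):
    """Return the expected entries that are absent from install_dir, sorted."""
    present = set(os.listdir(install_dir))
    return sorted(set(expected) - present)


if __name__ == "__main__":
    # Demonstrate on a throwaway directory that deliberately lacks `opencv`.
    with tempfile.TemporaryDirectory() as root:
        for name in EXPECTED - {"opencv"}:
            os.mkdir(os.path.join(root, name))
        print(missing_components(root))
```

Pointing `missing_components` at the real installation directory should return an empty list if the install matches the listing shown here.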

3. Running the demos

openvino/deployment_tools/demo$ ls

car_1.bmp demo_benchmark_app.sh demo_security_barrier_camera.sh demo_squeezenet_download_convert_run.sh README.txt utils.sh

car.png demo_security_barrier_camera.conf demo_speech_recognition.sh how_are_you_doing.wav squeezenet1.1.labels

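Next, run demo_benchmark_app.

Note that this is a bash script and cannot be run with sh. On Ubuntu, sh is dash, which does not support bash-specific syntax.

bash demo_benchmark_app.sh

Then enter the administrator password when prompted. The long download output is omitted here.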

###################################################

Convert a model with Model Optimizer

Run python3 /media/test/NewDisk1/opt/intel/openvino_2020.1.023/deployment_tools/open_model_zoo/tools/downloader/converter.py --mo /media/test/NewDisk1/opt/intel/openvino_2020.1.023/deployment_tools/model_optimizer/mo.py --name squeezenet1.1 -d /home/test/openvino_models/models -o /home/test/openvino_models/ir --precisions FP16

========= Converting squeezenet1.1 to IR (FP16)

Conversion command: /usr/bin/python3 -- /media/test/NewDisk1/opt/intel/openvino_2020.1.023/deployment_tools/model_optimizer/mo.py --framework=caffe --data_type=FP16 --output_dir=/home/test/openvino_models/ir/public/squeezenet1.1/FP16 --model_name=squeezenet1.1 '--input_shape=[1,3,227,227]' --input=data '--mean_values=data[104.0,117.0,123.0]' --output=prob --input_model=/home/test/openvino_models/models/public/squeezenet1.1/squeezenet1.1.caffemodel --input_proto=/home/test/openvino_models/models/public/squeezenet1.1/squeezenet1.1.prototxt

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: /home/test/openvino_models/models/public/squeezenet1.1/squeezenet1.1.caffemodel

- Path for generated IR: /home/test/openvino_models/ir/public/squeezenet1.1/FP16

- IR output name: squeezenet1.1

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: data

- Output layers: prob

- Input shapes: [1,3,227,227]

- Mean values: data[104.0,117.0,123.0]

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP16

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: False

- Reverse input channels: False

Caffe specific parameters:

- Path to Python Caffe* parser generated from caffe.proto: /media/test/NewDisk1/opt/intel/openvino_2020.1.023/deployment_tools/model_optimizer/mo/front/caffe/proto

- Enable resnet optimization: True

- Path to the Input prototxt: /home/test/openvino_models/models/public/squeezenet1.1/squeezenet1.1.prototxt

- Path to CustomLayersMapping.xml: Default

- Path to a mean file: Not specified

- Offsets for a mean file: Not specified

Model Optimizer version: 2020.1.0-61-gd349c3ba4a

[ SUCCESS ] Generated IR version 10 model.

[ SUCCESS ] XML file: /home/test/openvino_models/ir/public/squeezenet1.1/FP16/squeezenet1.1.xml

[ SUCCESS ] BIN file: /home/test/openvino_models/ir/public/squeezenet1.1/FP16/squeezenet1.1.bin

[ SUCCESS ] Total execution time: 4.52 seconds.

[ SUCCESS ] Memory consumed: 82 MB.

###################################################

Build Inference Engine samples

-- The C compiler identification is GNU 7.5.0

-- The CXX compiler identification is GNU 7.5.0

-- Check for working C compiler: /usr/bin/cc

-- Check for working C compiler: /usr/bin/cc -- works

-- Detecting C compiler ABI info

-- Detecting C compiler ABI info - done

-- Detecting C compile features

-- Detecting C compile features - done

-- Check for working CXX compiler: /usr/bin/c++

-- Check for working CXX compiler: /usr/bin/c++ -- works

-- Detecting CXX compiler ABI info

-- Detecting CXX compiler ABI info - done

-- Detecting CXX compile features

-- Detecting CXX compile features - done

-- Looking for C++ include unistd.h

-- Looking for C++ include unistd.h - found

-- Looking for C++ include stdint.h

-- Looking for C++ include stdint.h - found

-- Looking for C++ include sys/types.h

-- Looking for C++ include sys/types.h - found

-- Looking for C++ include fnmatch.h

-- Looking for C++ include fnmatch.h - found

-- Looking for strtoll

-- Looking for strtoll - found

-- Found InferenceEngine: /media/test/NewDisk1/opt/intel/openvino_2020.1.023/deployment_tools/inference_engine/lib/intel64/libinference_engine.so (Required is at least version "2.1")

-- Configuring done

-- Generating done

-- Build files have been written to: /home/test/inference_engine_samples_build

Scanning dependencies of target gflags_nothreads_static

Scanning dependencies of target format_reader

[ 7%] Building CXX object thirdparty/gflags/CMakeFiles/gflags_nothreads_static.dir/src/gflags_reporting.cc.o

[ 21%] Building CXX object thirdparty/gflags/CMakeFiles/gflags_nothreads_static.dir/src/gflags.cc.o

[ 21%] Building CXX object thirdparty/gflags/CMakeFiles/gflags_nothreads_static.dir/src/gflags_completions.cc.o

[ 28%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/bmp.cpp.o

[ 35%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/MnistUbyte.cpp.o

[ 42%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/format_reader.cpp.o

[ 50%] Building CXX object common/format_reader/CMakeFiles/format_reader.dir/opencv_wraper.cpp.o

[ 57%] Linking CXX shared library ../../intel64/Release/lib/libformat_reader.so

[ 57%] Built target format_reader

[ 64%] Linking CXX static library ../../intel64/Release/lib/libgflags_nothreads.a

[ 64%] Built target gflags_nothreads_static

Scanning dependencies of target benchmark_app

[ 71%] Building CXX object benchmark_app/CMakeFiles/benchmark_app.dir/inputs_filling.cpp.o

[ 78%] Building CXX object benchmark_app/CMakeFiles/benchmark_app.dir/statistics_report.cpp.o

[ 85%] Building CXX object benchmark_app/CMakeFiles/benchmark_app.dir/main.cpp.o

[ 92%] Building CXX object benchmark_app/CMakeFiles/benchmark_app.dir/utils.cpp.o

[100%] Linking CXX executable ../intel64/Release/benchmark_app

[100%] Built target benchmark_app

###################################################

Run Inference Engine benchmark app

Run ./benchmark_app -d CPU -i /home/test/newDisk1/opt/intel/openvino/deployment_tools/demo/car.png -m /home/test/openvino_models/ir/public/squeezenet1.1/FP16/squeezenet1.1.xml -pc -niter 1000

[Step 1/11] Parsing and validating input arguments

[ INFO ] Parsing input parameters

[ INFO ] Files were added: 1

[ INFO ] /home/test/newDisk1/opt/intel/openvino/deployment_tools/demo/car.png

[ WARNING ] -nstreams default value is determined automatically for a device. Although the automatic selection usually provides a reasonable performance,but it still may be non-optimal for some cases, for more information look at README.

[Step 2/11] Loading Inference Engine

[ INFO ] InferenceEngine:

API version ............ 2.1

Build .................. 37988

Description ....... API

[ INFO ] Device info:

CPU

MKLDNNPlugin version ......... 2.1

Build ........... 37988

[Step 3/11] Setting device configuration

[Step 4/11] Reading the Intermediate Representation network

[ INFO ] Loading network files

[ INFO ] Read network took 153.06 ms

[Step 5/11] Resizing network to match image sizes and given batch

[ INFO ] Network batch size: 1, precision: MIXED

[Step 6/11] Configuring input of the model

[Step 7/11] Loading the model to the device

[ INFO ] Load network took 400.16 ms

[Step 8/11] Setting optimal runtime parameters

[Step 9/11] Creating infer requests and filling input blobs with images

[ INFO ] Network input 'data' precision U8, dimensions (NCHW): 1 3 227 227

[ WARNING ] Some image input files will be duplicated: 4 files are required but only 1 are provided

[ INFO ] Infer Request 0 filling

[ INFO ] Prepare image /home/test/newDisk1/opt/intel/openvino/deployment_tools/demo/car.png

[ WARNING ] Image is resized from (787, 259) to (227, 227)

[ INFO ] Infer Request 1 filling

[ INFO ] Prepare image /home/test/newDisk1/opt/intel/openvino/deployment_tools/demo/car.png

[ WARNING ] Image is resized from (787, 259) to (227, 227)

[ INFO ] Infer Request 2 filling

[ INFO ] Prepare image /home/test/newDisk1/opt/intel/openvino/deployment_tools/demo/car.png

[ WARNING ] Image is resized from (787, 259) to (227, 227)

[ INFO ] Infer Request 3 filling

[ INFO ] Prepare image /home/test/newDisk1/opt/intel/openvino/deployment_tools/demo/car.png

[ WARNING ] Image is resized from (787, 259) to (227, 227)

[Step 10/11] Measuring performance (Start inference asyncronously, 4 inference requests using 4 streams for CPU, limits: 1000 iterations)

[Step 11/11] Dumping statistics report

Full device name: Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz

Count: 1000 iterations

Duration: 5812.04 ms

Latency: 21.63 ms

Throughput: 172.06 FPS

Peak Virtual Memory (VmPeak) Size, kBytes: 680688

Peak Resident Memory (VmHWM) Size, kBytes: 100152

###################################################

Inference Engine benchmark app completed successfully.
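The statistics at the end of the report can be cross-checked: the reported throughput appears to be the iteration count divided by the wall-clock duration. The sketch below parses those fields and recomputes the figure. The function names `parse_report` and `derived_throughput` are hypothetical helpers, and the report text is copied from the output above.

```python
import re

# Statistics lines copied from the benchmark report above.
REPORT = """\
Count: 1000 iterations
Duration: 5812.04 ms
Latency: 21.63 ms
Throughput: 172.06 FPS
"""


def parse_report(text):
    """Extract count, duration (ms), latency (ms) and throughput (FPS)."""
    patterns = {
        "count": r"Count:\s+(\d+) iterations",
        "duration_ms": r"Duration:\s+([\d.]+) ms",
        "latency_ms": r"Latency:\s+([\d.]+) ms",
        "throughput_fps": r"Throughput:\s+([\d.]+) FPS",
    }
    stats = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, text)
        if match is None:
            raise ValueError(f"missing field: {key}")
        stats[key] = float(match.group(1))
    return stats


def derived_throughput(stats):
    """Iterations divided by wall-clock seconds."""
    return stats["count"] / (stats["duration_ms"] / 1000.0)


stats = parse_report(REPORT)
print(round(derived_throughput(stats), 2))  # 172.06, matching the report
```

Latency is a separate measurement: with 4 inference requests in flight, a per-request latency of about 21 ms is compatible with a throughput well above 1000 / 21.63 ≈ 46 FPS.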

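The run succeeded. Linux really is convenient for this.

You can pass a parameter to choose between CPU and GPU; the GPU here is of course Intel integrated graphics.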

bash demo_benchmark_app.sh -d CPU

Full device name: Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz

Count: 1000 iterations

Duration: 5403.40 ms

Latency: 21.26 ms

Throughput: 185.07 FPS

Peak Virtual Memory (VmPeak) Size, kBytes: 671476

Peak Resident Memory (VmHWM) Size, kBytes: 99756
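The invocations used so far can be wrapped in a small helper that always calls bash explicitly and validates the device name. This is only a sketch under assumptions: `demo_command`, `run_demo`, and `KNOWN_DEVICES` are hypothetical names, and the device list is limited to the two values discussed in this post.

```python
import subprocess

# Device names for the -d option: CPU as used in this post, GPU for Intel
# integrated graphics. Other values are deliberately not covered here.
KNOWN_DEVICES = ("CPU", "GPU")


def demo_command(script="demo_benchmark_app.sh", device=None):
    """Build the argv list, always invoking bash explicitly rather than sh."""
    cmd = ["bash", script]
    if device is not None:
        if device not in KNOWN_DEVICES:
            raise ValueError(f"unexpected device: {device!r}")
        cmd += ["-d", device]
    return cmd


def run_demo(demo_dir, device=None):
    """Run the demo inside demo_dir and return its captured stdout."""
    result = subprocess.run(demo_command(device=device), cwd=demo_dir,
                            check=True, stdout=subprocess.PIPE,
                            universal_newlines=True)
    return result.stdout


print(demo_command(device="CPU"))
```

Building the command as a list avoids shell quoting issues, and naming `bash` in the list makes the interpreter explicit instead of relying on how the script is launched.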

Running on the GPU requires installing the driver first:

$ sudo intel/openvino/install_dependencies/install_NEO_OCL_driver.sh

Then run the benchmark again:

bash demo_benchmark_app.sh -d CPU

Total time: 21394 microseconds

Full device name: Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz

Count: 1000 iterations

Duration: 5428.91 ms

Latency: 21.28 ms

Throughput: 184.20 FPS

Peak Virtual Memory (VmPeak) Size, kBytes: 680688

Peak Resident Memory (VmHWM) Size, kBytes: 99772

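Awkward: this run came out slightly slower than the previous CPU run, and I am not sure it ran the way I intended. Note that the command above still passes -d CPU and the report still names the i5-7200U CPU as the device, so this result does not actually measure the GPU. Targeting the integrated GPU would require -d GPU. I did run into a few errors earlier.

Once the driver was installed, the demo ran.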
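To put the three throughput figures reported above side by side, the relative change between consecutive runs can be computed directly. This is a small worked calculation rather than a benchmark methodology. The run labels are informal names chosen here, and every report in this post names the CPU as the device.

```python
# Throughput figures (FPS) copied from the three reports in this post.
runs = [
    ("default", 172.06),
    ("-d CPU", 185.07),
    ("after driver install", 184.20),
]


def relative_change(baseline, value):
    """Percentage change of value relative to baseline."""
    return (value - baseline) / baseline * 100.0


for (prev_label, prev_fps), (label, fps) in zip(runs, runs[1:]):
    print(f"{prev_label} -> {label}: {relative_change(prev_fps, fps):+.2f}%")
```

The last two runs differ by less than half a percent, which is small compared with the roughly 7.5% spread between the first two CPU runs, so the "slower" result is within ordinary run-to-run variation.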

Next, run the license plate recognition demo:

./demo_security_barrier_camera.sh

[ INFO ] InferenceEngine: 0x7fbba84a6040

[ INFO ] Files were added: 1

[ INFO ] /home/test/newDisk1/opt/intel/openvino/deployment_tools/demo/car_1.bmp

[ INFO ] Loading device CPU

CPU

MKLDNNPlugin version ......... 2.1

Build ........... 37988

[ INFO ] Loading detection model to the CPU plugin

[ INFO ] Loading Vehicle Attribs model to the CPU plugin

[ INFO ] Loading Licence Plate Recognition (LPR) model to the CPU plugin

[ INFO ] Number of InferRequests: 1 (detection), 3 (classification), 3 (recognition)

[ INFO ] 4 streams for CPU

[ INFO ] Display resolution: 1920x1080

[ INFO ] Number of allocated frames: 3

[ INFO ] Resizable input with support of ROI crop and auto resize is disabled

0.0FPS for (3 / 1) frames

Detection InferRequests usage: 0.0%

[ INFO ] Execution successful

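The license plate was recognized successfully: Hebei, MD711. The vehicle is black.

4. Summary

OpenVINO ships with plenty of samples to learn from. By making good use of the CPU and the bundled libraries, the samples handle object recognition well.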

https://docs.openvinotoolkit.org/cn/index.html

The CPU used here is an Intel® Core™ i5-7200U CPU @ 2.50GHz.

I also bought a book on the subject:

https://item.jd.com/12824906.html

It is very new, published only in March. The bundled OpenCV version is:

4.2.0-82-g4de7015cf (OpenVINO/2019R4)

A book on OpenCV might be worth buying as well.
