ELK: Collecting Microservice Logs

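The previous post collected the nginx logs; this one collects the microservice logs. Here I collect the logs of three microservices on each of two servers.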

1. Install Filebeat

As with the ELK components earlier, Filebeat on the client machines goes under /usr/local/elkstack/ and is started as the elkstack user.

[root@ms1 ~]# useradd elkstack

[root@ms1 ~]# mkdir -p /usr/local/elkstack

Download the Filebeat tarball and extract it:

[root@ms1 ~]# cd /usr/local/elkstack

[root@ms1 elkstack]# wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.4.0-linux-x86_64.tar.gz

[root@ms1 elkstack]# tar -zxf filebeat-7.4.0-linux-x86_64.tar.gz

Edit the configuration file on microservice server 1:

[root@ms1 ~]# cat /usr/local/elkstack/filebeat-7.4.0-linux-x86_64/filebeat.yml | grep -Ev '^$|^#'

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /data/logs/orderform/*.log
  multiline:
    pattern: '^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}'
    negate: true
    match: after
  fields:
    log_topics: ms1-orderform-log
    logtype: ms1-orderform
- type: log
  enabled: true
  paths:
    - /data/logs/trip/*.log
  multiline:
    pattern: '^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}'
    negate: true
    match: after
  fields:
    log_topics: ms1-trip-log
    logtype: ms1-trip
- type: log
  enabled: true
  paths:
    - /data/logs/orderbill/*.log
  multiline:
    pattern: '^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}'
    negate: true
    match: after
  fields:
    log_topics: ms1-orderbill-log
    logtype: ms1-orderbill
output.kafka:
  enabled: true
  hosts: ["192.168.0.141:9092","192.168.0.142:9092","192.168.0.143:9092"]
  topic: '%{[fields][log_topics]}'

Edit the configuration file on microservice server 2:

[root@ms2 ~]# cat /usr/local/elkstack/filebeat-7.4.0-linux-x86_64/filebeat.yml | grep -Ev '^$|^#'

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /data/logs/orderform/*.log
  multiline:
    pattern: '^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}'
    negate: true
    match: after
  fields:
    log_topics: ms2-orderform-log
    logtype: ms2-orderform
- type: log
  enabled: true
  paths:
    - /data/logs/trip/*.log
  multiline:
    pattern: '^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}'
    negate: true
    match: after
  fields:
    log_topics: ms2-trip-log
    logtype: ms2-trip
- type: log
  enabled: true
  paths:
    - /data/logs/orderbill/*.log
  multiline:
    pattern: '^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}'
    negate: true
    match: after
  fields:
    log_topics: ms2-orderbill-log
    logtype: ms2-orderbill
output.kafka:
  enabled: true
  hosts: ["192.168.0.141:9092","192.168.0.142:9092","192.168.0.143:9092"]
  topic: '%{[fields][log_topics]}'

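The multiline settings deserve a closer look. Why add them? Normal DEBUG/INFO entries in our microservice logs are single lines, and collecting those causes no trouble. ERROR entries, however, often span several lines, and we want those lines to show up as a single log event, which makes them much easier to read.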

First, here is one of my log lines: 2019-12-12 15:03:34,020 INFO [http-nio-9013-exec-3] impl.Jdk14Logger (Jdk14Logger.java:91) - Multiple Config Server Urls found listed.

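Whether a line starts a new log entry can be decided by checking that it begins with a date and time; if it does not, it is merged into the line above. That is what multiline does here: pattern matches the leading timestamp, negate: true selects the lines that do not match it, and match: after appends those lines to the preceding line that does.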
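To get a feel for this behaviour without touching Filebeat, the merge can be imitated with a few lines of awk. This is only a sketch of the idea, not how Filebeat is implemented: the function names merge_multiline and sample_log are made up, the ERROR entry with its stack trace is invented sample data, and " | " stands in for the newline that Filebeat keeps inside the merged event.

```shell
#!/usr/bin/env bash
# Imitates Filebeat's multiline merge (negate: true, match: after) with awk.
# A line that starts with "yyyy-MM-dd HH:mm:ss" opens a new event; any other
# line is appended to the current event. " | " replaces the embedded newline
# only so that each merged event prints on one line.
merge_multiline() {
  awk '
    /^[0-9][0-9][0-9][0-9]-[0-9][0-9]?-[0-9][0-9]? [0-9][0-9]?:[0-9][0-9]?:[0-9][0-9]?/ {
      if (have) print event              # flush the previous event
      event = $0; have = 1
      next
    }
    {
      if (have) event = event " | " $0   # continuation line
      else { event = $0; have = 1 }      # stray line before any timestamp
    }
    END { if (have) print event }
  '
}

# Invented sample: one INFO line followed by a three-line ERROR entry.
sample_log() {
  printf '%s\n' \
    '2019-12-12 15:03:34,020 INFO [http-nio-9013-exec-3] impl.Jdk14Logger (Jdk14Logger.java:91) - Multiple Config Server Urls found listed.' \
    '2019-12-12 15:03:35,101 ERROR [http-nio-9013-exec-4] demo.Handler (Handler.java:42) - request failed' \
    'java.lang.IllegalStateException: example' \
    '    at demo.Handler.handle(Handler.java:42)'
}

sample_log | merge_multiline
```

The four input lines come out as two events: the INFO line unchanged, and the ERROR line with both stack trace lines attached to it.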

Create the log directory:

[root@ms1 ~]# mkdir -p /data/filebeat7/logs

[root@ms1 ~]# chown -R elkstack:elkstack /data/filebeat7

Start Filebeat:

[root@ms1 ~]# chown -R elkstack:elkstack /usr/local/elkstack/filebeat-7.4.0-linux-x86_64

[root@ms1 ~]# su - elkstack

[elkstack@ms1 ~]$ cd /usr/local/elkstack/filebeat-7.4.0-linux-x86_64

[elkstack@ms1 filebeat-7.4.0-linux-x86_64]$ nohup ./filebeat -e -c filebeat.yml >> /data/filebeat7/logs/filebeat_nohup.log 2>&1 &
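Before moving on to Logstash, it can help to confirm that events really arrive in Kafka. The sketch below rebuilds the topic names from the fields.log_topics values in filebeat.yml and reads one event from a topic with Kafka's console consumer. The helper names topics_for_host and peek_topic are made up, and KAFKA_HOME is an assumption: this post does not say where Kafka is installed, so point it at wherever the Kafka CLI tools live.

```shell
#!/usr/bin/env bash
# Topic names follow the fields.log_topics values in filebeat.yml:
# <host>-<service>-log for the services orderform, trip and orderbill.
topics_for_host() {
  local host="$1" svc
  for svc in orderform trip orderbill; do
    printf '%s-%s-log\n' "$host" "$svc"
  done
}

# Reads one event from a topic and then exits (or gives up after 10 seconds).
# KAFKA_HOME is an assumption, see the note above.
peek_topic() {
  "${KAFKA_HOME:?set KAFKA_HOME to the Kafka install directory}/bin/kafka-console-consumer.sh" \
    --bootstrap-server 192.168.0.141:9092 \
    --topic "$1" --from-beginning --max-messages 1 --timeout-ms 10000
}

topics_for_host ms1
```

Calling peek_topic ms1-orderform-log should print one JSON document whose message field holds the log line and whose fields.logtype is ms1-orderform; if nothing comes back, the Filebeat log under /data/filebeat7/logs is the first place to look.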

2. Configure and start Logstash

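When collecting the nginx logs earlier, a dedicated directory for configuration files (conf.d) was already created under the Logstash directory.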

Write the configuration for collecting the three microservices' logs. Since there are two microservice servers, it is split into two files.

Note: X-Pack was cracked earlier in this series, so the output to Elasticsearch needs a username and password.

ms1.conf

[elkstack@node1 ~]$ cat /usr/local/elkstack/logstash-7.4.0/conf.d/ms1.conf

input {
  kafka {
    bootstrap_servers => "192.168.0.141:9092,192.168.0.142:9092,192.168.0.143:9092"
    group_id => "logstash-group"
    topics => ["ms1-orderform-log","ms1-trip-log","ms1-orderbill-log"]
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => true
    codec => json
  }
}
filter {
  if [fields][logtype] == "ms1-orderform" {
    # The json codec on the kafka input has already decoded the Filebeat event,
    # so "message" is the raw log line here; this json filter looks redundant
    # and may tag events with _jsonparsefailure.
    json {
      source => "message"
    }
    grok {
      match => { "message" => "%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:level}" }
    }
    date {
      match => ["timestamp", "yyyy-MM-dd HH:mm:ss,SSS"]
      target => "@timestamp"
    }
  }
  if [fields][logtype] == "ms1-trip" {
    json {
      source => "message"
    }
    grok {
      match => { "message" => "%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:level}" }
    }
    date {
      match => ["timestamp", "yyyy-MM-dd HH:mm:ss,SSS"]
      target => "@timestamp"
    }
  }
  if [fields][logtype] == "ms1-orderbill" {
    json {
      source => "message"
    }
    grok {
      match => { "message" => "%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:level}" }
    }
    date {
      match => ["timestamp", "yyyy-MM-dd HH:mm:ss,SSS"]
      target => "@timestamp"
    }
  }
}
output {
  if [fields][logtype] == "ms1-orderform" {
    elasticsearch {
      user => "elastic"
      password => "password"
      hosts => ["192.168.0.141:9200","192.168.0.142:9200","192.168.0.143:9200"]
      index => "ms1-orderform.log-%{+YYYY.MM.dd}"
    }
  }
  if [fields][logtype] == "ms1-trip" {
    elasticsearch {
      user => "elastic"
      password => "password"
      hosts => ["192.168.0.141:9200","192.168.0.142:9200","192.168.0.143:9200"]
      index => "ms1-trip.log-%{+YYYY.MM.dd}"
    }
  }
  if [fields][logtype] == "ms1-orderbill" {
    elasticsearch {
      user => "elastic"
      password => "password"
      hosts => ["192.168.0.141:9200","192.168.0.142:9200","192.168.0.143:9200"]
      index => "ms1-orderbill.log-%{+YYYY.MM.dd}"
    }
  }
}

ms2.conf

[elkstack@node1 ~]$ cat /usr/local/elkstack/logstash-7.4.0/conf.d/ms2.conf

input {
  kafka {
    bootstrap_servers => "192.168.0.141:9092,192.168.0.142:9092,192.168.0.143:9092"
    group_id => "logstash-group"
    topics => ["ms2-orderform-log","ms2-trip-log","ms2-orderbill-log"]
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => true
    codec => json
  }
}
filter {
  if [fields][logtype] == "ms2-orderform" {
    # The json codec on the kafka input has already decoded the Filebeat event,
    # so "message" is the raw log line here; this json filter looks redundant
    # and may tag events with _jsonparsefailure.
    json {
      source => "message"
    }
    grok {
      match => { "message" => "%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:level}" }
    }
    date {
      match => ["timestamp", "yyyy-MM-dd HH:mm:ss,SSS"]
      target => "@timestamp"
    }
  }
  if [fields][logtype] == "ms2-trip" {
    json {
      source => "message"
    }
    grok {
      match => { "message" => "%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:level}" }
    }
    date {
      match => ["timestamp", "yyyy-MM-dd HH:mm:ss,SSS"]
      target => "@timestamp"
    }
  }
  if [fields][logtype] == "ms2-orderbill" {
    json {
      source => "message"
    }
    grok {
      match => { "message" => "%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:level}" }
    }
    date {
      match => ["timestamp", "yyyy-MM-dd HH:mm:ss,SSS"]
      target => "@timestamp"
    }
  }
}
output {
  if [fields][logtype] == "ms2-orderform" {
    elasticsearch {
      user => "elastic"
      password => "password"
      hosts => ["192.168.0.141:9200","192.168.0.142:9200","192.168.0.143:9200"]
      index => "ms2-orderform.log-%{+YYYY.MM.dd}"
    }
  }
  if [fields][logtype] == "ms2-trip" {
    elasticsearch {
      user => "elastic"
      password => "password"
      hosts => ["192.168.0.141:9200","192.168.0.142:9200","192.168.0.143:9200"]
      index => "ms2-trip.log-%{+YYYY.MM.dd}"
    }
  }
  if [fields][logtype] == "ms2-orderbill" {
    elasticsearch {
      user => "elastic"
      password => "password"
      hosts => ["192.168.0.141:9200","192.168.0.142:9200","192.168.0.143:9200"]
      index => "ms2-orderbill.log-%{+YYYY.MM.dd}"
    }
  }
}

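Here the Logstash grok plugin extracts the log level, and the date filter replaces @timestamp with the time the log line was written rather than the time it was processed.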
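What grok pulls out of a line can be approximated with sed. This is a rough stand-in, not grok itself: the real TIMESTAMP_ISO8601 and LOGLEVEL patterns accept more variants, while this sketch only handles the "yyyy-MM-dd HH:mm:ss,SSS LEVEL" layout of the sample line shown earlier. The function name extract_fields is made up for illustration.

```shell
#!/usr/bin/env bash
# Rough stand-in for the grok pattern
#   %{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:level}
# limited to lines that start with "yyyy-MM-dd HH:mm:ss,SSS LEVEL".
# Prints "<timestamp>|<level>"; prints nothing when a line does not match.
extract_fields() {
  sed -n -E 's/^([0-9]{4}-[0-9]{2}-[0-9]{2} [0-9]{2}:[0-9]{2}:[0-9]{2},[0-9]{3}) ([A-Z]+).*$/\1|\2/p'
}

line='2019-12-12 15:03:34,020 INFO [http-nio-9013-exec-3] impl.Jdk14Logger (Jdk14Logger.java:91) - Multiple Config Server Urls found listed.'
printf '%s\n' "$line" | extract_fields
```

For the sample line this prints 2019-12-12 15:03:34,020|INFO. The first part is the string the date filter parses with "yyyy-MM-dd HH:mm:ss,SSS" and writes into @timestamp; the second part ends up in the level field.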

Note: both configuration files must be present on every node of the Logstash cluster.

Restart Logstash:

[elkstack@node1 ~]$ kill -9 `ps -ef|grep "logstash-7.4.0\/logstash-core"|awk '{print $2}'`

[elkstack@node1 ~]$ nohup logstash -f /usr/local/elkstack/logstash-7.4.0/conf.d/ >> /data/logstash7/logs/logstash_nohup.log &
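The restart above uses kill -9, which gives Logstash no chance to finish the events it is still working on. A gentler variant, sketched here as an option rather than as the method used in this post, sends SIGTERM first and falls back to SIGKILL only after a timeout. The helper name stop_gracefully and the 30-second default are made up for illustration.

```shell
#!/usr/bin/env bash
# Sends SIGTERM to a process and waits up to <timeout> seconds for it to
# exit; only then falls back to SIGKILL.
# Returns 0 if the process exited on its own, 1 if it had to be killed.
stop_gracefully() {
  local pid="$1" timeout="${2:-30}" waited=0
  kill -TERM "$pid" 2>/dev/null || return 0      # already gone
  while kill -0 "$pid" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      kill -KILL "$pid" 2>/dev/null              # last resort
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}
```

It would be called with the PID that the ps/grep/awk pipeline above prints, for example stop_gracefully 12345 60, before starting Logstash again with nohup.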

3. Add the indices in Kibana

Under "Management" -> "Elasticsearch" -> "Index Management" in Kibana, the newly created microservice log indices are now visible.
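The same check can be made from the command line. The sketch below prints the six index names that the two Logstash outputs should produce for a given day and wraps a query against the _cat/indices API. The helper names are made up, and the plain-HTTP URL is an assumption; if TLS is enabled on the cluster, the scheme has to change. curl prompts for the password of the elastic user.

```shell
#!/usr/bin/env bash
# Prints the six index names the two Logstash outputs create for one day,
# following index => "<logtype>.log-%{+YYYY.MM.dd}". Logstash takes the date
# part from @timestamp, which is kept in UTC.
expected_indices() {
  local day="$1" host svc
  for host in ms1 ms2; do
    for svc in orderform trip orderbill; do
      printf '%s-%s.log-%s\n' "$host" "$svc" "$day"
    done
  done
}

# Lists the matching indices through the _cat API.
# Assumption: Elasticsearch answers over plain HTTP on 192.168.0.141:9200.
list_ms_indices() {
  curl -s -u elastic 'http://192.168.0.141:9200/_cat/indices/ms*?v'
}

expected_indices 2019.12.12
```

Comparing the output of list_ms_indices with the expected names shows quickly whether one of the six services is not shipping logs.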

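Under "Management" -> "Kibana" -> "Index Patterns", add all six indices to Kibana. Note: when creating an index pattern in Kibana, you need to set the time field ("Time"); otherwise the log view shows only _source and no Time column.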

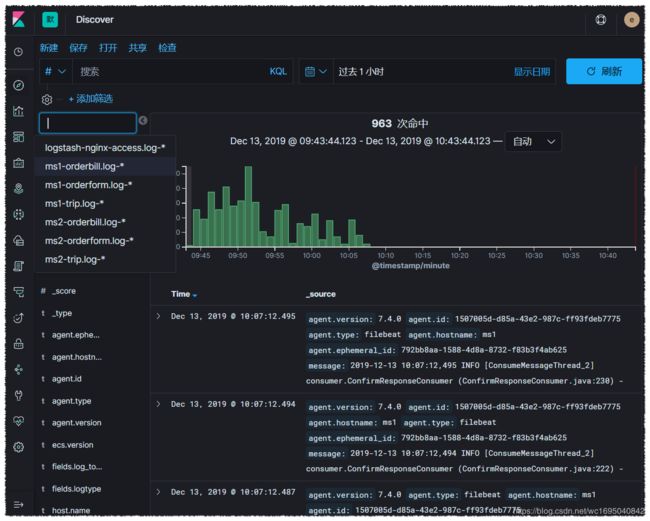

After that, the corresponding index can be selected in "Discover" to view its logs.