Installing Hadoop 3.2.1 (lots of pitfalls)

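Download the Hadoop package hadoop-3.2.1.tar.gz (342.56 MB; at 931 KB/s the download took 8 m 19 s). The URL below is an Apache mirror; older releases such as 3.2.1 are also kept at https://archive.apache.org/dist/hadoop/common/.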

$ wget http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-3.2.1/hadoop-3.2.1.tar.gz

Extract it. The resulting path is /home/wang/hadoop/hadoop-3.2.1:

$ tar -zxvf hadoop-3.2.1.tar.gz

Set the environment variables:

$ vim /etc/profile

Add the following lines:

export HADOOP_HOME=/home/wang/hadoop/hadoop-3.2.1

export PATH=$PATH:$HADOOP_HOME/bin

Apply the changes:

$ source /etc/profile

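If saving the file fails, it is because /etc/profile is owned by root. The author worked around this by setting a root password (123456 here), switching to root, and making the file writable:

$ sudo passwd root

$ su

$ chmod 777 /etc/profile

$ source /etc/profile

Making /etc/profile world-writable is insecure, though; opening it with root privileges ($ sudo vim /etc/profile) avoids the permission error without changing the file's mode.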
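Root access is not needed at all if the variables go into a per-user startup file instead. The function below is a minimal sketch of that idea, assuming bash and ~/.bashrc as the target file; add_export is a hypothetical helper, not part of Hadoop or Ubuntu. It appends an export line only when the identical line is not already there, so re-running the setup does not pile up duplicates.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "export NAME=VALUE" to a shell startup file,
# but only if that exact line is not already present (safe to re-run).
add_export() {
  local file="$1" name="$2" value="$3"
  local line="export ${name}=${value}"
  touch "$file"
  # -x: match the whole line; -F: treat the line as a fixed string, not a regex
  if ! grep -qxF "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

For example: add_export ~/.bashrc HADOOP_HOME /home/wang/hadoop/hadoop-3.2.1, then add_export ~/.bashrc PATH '$PATH:$HADOOP_HOME/bin'. The single quotes keep $PATH unexpanded, so it is resolved each time a new shell starts.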

Check whether the environment variables are set:

$ hadoop version

This fails with: ERROR: JAVA_HOME is not set and could not be found.

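Install JDK 14.0.1. Note that the link below is Oracle's JDK 8 download page, and that Hadoop 3.0.x to 3.2.x officially supports only Java 8 (Java 11 runtime support arrived in Hadoop 3.3), so JDK 8 is the safer choice for this release. Download page: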

http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

Extract it:

$ tar -zxvf jdk-14.0.1_linux-x64_bin.tar.gz

Configure the environment:

$ vim /etc/profile

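Append the following to the end of the file (JDK 9 and later no longer ship a separate jre subdirectory, so for JDK 14 the JRE_HOME entries point to a path that does not exist; they are harmless but unnecessary):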

export JAVA_HOME=/home/wang/jdk-14.0.1

export JRE_HOME=/home/wang/jdk-14.0.1/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

Apply it:

$ source /etc/profile

Then restart the computer.

Verify the installation:

$ java -version

Output like the following means the installation succeeded:

wang@wang2019:~$ java -version

java version "14.0.1" 2020-04-14

Java(TM) SE Runtime Environment (build 14.0.1+7)

Java HotSpot(TM) 64-Bit Server VM (build 14.0.1+7, mixed mode, sharing)
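java -version only tests whatever java is first on PATH. To check the JAVA_HOME directory itself, a small test like the following can help; it is a hedged sketch, and check_jdk is a hypothetical name. It only verifies that $JAVA_HOME/bin/java exists and is executable, and it mentions when there is no jre subdirectory, which is expected for JDK 9 and later.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a candidate JAVA_HOME directory.
# Prints "ok" on stdout if DIR/bin/java is executable; otherwise prints an
# error on stderr and returns 1. A missing DIR/jre is only reported as a note.
check_jdk() {
  local dir="$1"
  if [ ! -x "$dir/bin/java" ]; then
    echo "error: $dir/bin/java not found or not executable" >&2
    return 1
  fi
  if [ ! -d "$dir/jre" ]; then
    echo "note: $dir/jre does not exist (expected for JDK 9+)" >&2
  fi
  echo "ok"
}
```

For example: check_jdk /home/wang/jdk-14.0.1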

Now check Hadoop again:

$ hadoop version

Output like the following means the setup succeeded:

Hadoop 3.2.1

Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r b3cbbb467e22ea829b3808f4b7b01d07e0bf3842

Compiled by rohithsharmaks on 2019-09-10T15:56Z

Compiled with protoc 2.5.0

From source with checksum 776eaf9eee9c0ffc370bcbc1888737

This command was run using /home/wang/hadoop-3.2.1/share/hadoop/common/hadoop-common-3.2.1.jar

Configuration

core-site.xml: cluster-wide, system-level parameters such as the HDFS URL and Hadoop's temporary directory.

Edit $HADOOP_HOME/etc/hadoop/core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost/</value>

</property>

</configuration>
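When editing these files by hand it is easy to leave two <value> lines inside one <property>. One way to avoid that is to generate the file. The function below is a sketch under the assumption that values contain no XML special characters such as < or &; write_site_xml is a hypothetical helper. It overwrites the target file, so keep a copy of the original.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): write a Hadoop *-site.xml file from
# NAME VALUE pairs, emitting exactly one <name> and one <value> per
# <property>. Values are written verbatim (no XML escaping).
write_site_xml() {
  local file="$1"; shift
  if [ $(( $# % 2 )) -ne 0 ]; then
    echo "usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]" >&2
    return 1
  fi
  {
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<configuration>'
    while [ $# -gt 0 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}
```

For example: write_site_xml "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS hdfs://localhost/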

hdfs-site.xml: HDFS settings such as where the NameNode and DataNode store their data, the number of block replicas, and file access permissions.

Edit $HADOOP_HOME/etc/hadoop/hdfs-site.xml:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

Edit $HADOOP_HOME/etc/hadoop/mapred-site.xml:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Edit $HADOOP_HOME/etc/hadoop/yarn-site.xml:

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>localhost</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>
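A leftover second <value> inside a <property> is easy to miss by eye. The awk-based check below is a rough sketch (check_site_xml is a hypothetical name): it is line-based, assumes at most one tag per line as in the files above, and is not a real XML parser. It reports every property that does not have exactly one <name> and one <value>, and returns non-zero if any were found. It does not catch misspelled property names.

```shell
#!/usr/bin/env bash
# Sketch: flag <property> blocks in a *-site.xml file that lack exactly one
# <name> and one <value>. Line-based; assumes one tag per line.
check_site_xml() {
  awk '
    /<property>/        { inprop = 1; names = 0; values = 0; start = NR }
    inprop && /<name>/  { names++ }
    inprop && /<value>/ { values++ }
    /<\/property>/ {
      if (names != 1 || values != 1) {
        printf "%s: property at line %d has %d <name> and %d <value>\n", FILENAME, start, names, values
        bad = 1
      }
      inprop = 0
    }
    END { exit bad }
  ' "$1"
}
```

For example: for f in "$HADOOP_HOME"/etc/hadoop/*-site.xml; do check_site_xml "$f"; done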

Initialize HDFS

Format HDFS with $ hdfs namenode -format. If that command does not work, run it from Hadoop's bin directory instead:

cd $HADOOP_HOME/bin

./hdfs namenode -format
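Formatting should happen only once: re-running it erases the NameNode metadata and assigns a new clusterID, after which a DataNode with an old data directory refuses to register (its log reports incompatible clusterIDs). The guard below is a sketch; namenode_formatted is a hypothetical name, and it assumes the default NameNode directory /tmp/hadoop-$USER/dfs/name, which applies because the hdfs-site.xml above does not set dfs.namenode.name.dir. Because that default lives under /tmp, it may also disappear on systems that clean /tmp at boot, which is worth checking after the reboot earlier in this guide.

```shell
#!/usr/bin/env bash
# Sketch: succeed if a NameNode metadata directory already holds a formatted
# file system (a formatted directory contains current/VERSION).
# ASSUMPTION: default location when dfs.namenode.name.dir is not configured.
namenode_formatted() {
  local name_dir="${1:-/tmp/hadoop-${USER}/dfs/name}"
  [ -f "$name_dir/current/VERSION" ]
}
```

For example: namenode_formatted || hdfs namenode -format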

Start Hadoop

cd $HADOOP_HOME/sbin

./start-all.sh

This reports the following errors:

WARNING: Attempting to start all Apache Hadoop daemons as wang in 10 seconds.

WARNING: This is not a recommended production deployment configuration.

WARNING: Use CTRL-C to abort.

Starting namenodes on [localhost]

localhost: ssh: connect to host localhost port 22: Connection refused

Starting datanodes

localhost: ssh: connect to host localhost port 22: Connection refused

Starting secondary namenodes [wang2019]

wang2019: ssh: connect to host wang2019 port 22: Connection refused

Starting resourcemanager

Starting nodemanagers

localhost: ssh: connect to host localhost port 22: Connection refused

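Fix:

Ubuntu does not install an SSH server by default, so SSH connections to the machine, including the ones start-all.sh makes to localhost to launch each daemon, require installing openssh-server manually. To check whether an SSH server is available, run: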

ssh localhost

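Output: ssh: connect to host localhost port 22: Connection refused

Analysis: the error occurs because openssh-server is not installed. The following command lists the running SSH-related processes; if only ssh-agent shows up, the server is not installed: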

ps -e|grep ssh

Output: 2015 ? 00:00:00 ssh-agent

With the cause found, install openssh-server:

sudo apt-get install openssh-server

After installation, run ps -e|grep ssh again to confirm that openssh-server is running. Output like the following means it is installed:

Output:

2015 ? 00:00:00 ssh-agent

15588 ? 00:00:00 sshd

Finally, connect to the local machine with ssh localhost. If it prompts for a password, the SSH server is working.

ssh localhost
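Note that ps -e|grep ssh also matches ssh-agent, so its output has to be read carefully. The function below is a sketch (has_sshd is a hypothetical name) that reads ps -e output on stdin and succeeds only if a process whose command name is exactly sshd is present, comparing the last column instead of doing a substring match.

```shell
#!/usr/bin/env bash
# Sketch: decide from "ps -e" output (on stdin) whether the SSH server runs.
# Compares the last column (the command name) with "sshd" exactly, so a
# line for ssh-agent alone does not count.
has_sshd() {
  awk '$NF == "sshd" { found = 1 } END { exit !found }'
}
```

For example: ps -e | has_sshd && echo "sshd is running"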

However, running start-all.sh again produced a different problem:

WARNING: Attempting to start all Apache Hadoop daemons as wang in 10 seconds.

WARNING: This is not a recommended production deployment configuration.

WARNING: Use CTRL-C to abort.

Starting namenodes on [localhost]

localhost: wang@localhost: Permission denied (publickey,password).

Starting datanodes

localhost: wang@localhost: Permission denied (publickey,password).

Starting secondary namenodes [wang2019]

wang2019: Warning: Permanently added 'wang2019' (ECDSA) to the list of known hosts.

wang2019: wang@wang2019: Permission denied (publickey,password).

Starting resourcemanager

Starting nodemanagers

localhost: wang@localhost: Permission denied (publickey,password).

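Set up passwordless SSH login (press Enter at each ssh-keygen prompt to accept the default key location and an empty passphrase):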

(base) wang@wang2019:~$ cd ~/.ssh

(base) wang@wang2019:~/.ssh$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/wang/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/wang/.ssh/id_rsa.

Your public key has been saved in /home/wang/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:7VvvtwaEAU/zOk6GG8N02w1G/Ffr2A2ccrLzJHFMzLY wang@wang2019

(base) wang@wang2019:~/.ssh$ ls

id_rsa id_rsa.pub known_hosts

(base) wang@wang2019:~/.ssh$ cat ./id_rsa.pub >> ./authorized_keys
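Hadoop's single-node setup guide generates the key non-interactively with ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa and then runs chmod 0600 ~/.ssh/authorized_keys; the permission step matters because sshd's default StrictModes setting ignores key files that are writable by group or others. The function below is a sketch of the append-plus-permissions step (authorize_local_key is a hypothetical name, assuming an RSA key at the default location). Unlike a bare cat >>, it does not append the key a second time when re-run.

```shell
#!/usr/bin/env bash
# Sketch: authorize the local RSA public key for SSH logins to this host.
# Skips the append if the key is already present, then restricts the
# permissions so that sshd (StrictModes) accepts the file.
authorize_local_key() {
  local ssh_dir="${1:-$HOME/.ssh}"
  local pub="$ssh_dir/id_rsa.pub" auth="$ssh_dir/authorized_keys"
  if [ ! -f "$pub" ]; then
    echo "missing $pub; generate it with: ssh-keygen -t rsa -P '' -f $ssh_dir/id_rsa" >&2
    return 1
  fi
  touch "$auth"
  # -x whole-line, -F fixed-string match of the public key line
  grep -qxF "$(cat "$pub")" "$auth" || cat "$pub" >> "$auth"
  chmod 700 "$ssh_dir"
  chmod 600 "$auth"
}
```

For example: authorize_local_key (with no argument it uses ~/.ssh).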

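Test passwordless SSH login on the local machine (loopback)

That is, log in to this node with ssh and check that it succeeds, then log out. The session looks like this: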

(base) wang@wang2019:~/.ssh$ ssh localhost

Welcome to Ubuntu 18.04.4 LTS (GNU/Linux 5.3.0-62-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

* Canonical Livepatch is available for installation.

- Reduce system reboots and improve kernel security. Activate at:

https://ubuntu.com/livepatch

1 package can be updated.

0 updates are security updates.

Your Hardware Enablement Stack (HWE) is supported until April 2023.

*** System restart required ***

Last login: Wed Jul 8 09:53:25 2020 from 127.0.0.1

(base) wang@wang2019:~$ exit

logout

Connection to localhost closed.

(base) wang@wang2019:~/.ssh$

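Output like the above means the loopback SSH login and logout succeeded without a password, which also prepares the ground for passwordless SSH to other nodes later.

Running start-all.sh again now fails with a different error: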

WARNING: Attempting to start all Apache Hadoop daemons as wang in 10 seconds.

WARNING: This is not a recommended production deployment configuration.

WARNING: Use CTRL-C to abort.

Starting namenodes on [localhost]

localhost: ERROR: JAVA_HOME is not set and could not be found.

Starting datanodes

localhost: ERROR: JAVA_HOME is not set and could not be found.

Starting secondary namenodes [wang2019]

wang2019: ERROR: JAVA_HOME is not set and could not be found.

Starting resourcemanager

Starting nodemanagers

localhost: ERROR: JAVA_HOME is not set and could not be found.

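The real cause is that JAVA_HOME is not set in Hadoop's own hadoop-env.sh. start-all.sh launches each daemon over ssh in a non-login shell, which does not read /etc/profile, so the JAVA_HOME set there is not visible to the daemons. hadoop-env.sh is in the hadoop-3.2.1/etc/hadoop directory; fix it as follows: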

Change the line export JAVA_HOME= (if it starts with #, remove the # as well)

to export JAVA_HOME=/home/wang/jdk-14.0.1
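The same edit can be scripted. The function below is a sketch (set_hadoop_java_home is a hypothetical name): rather than editing the commented line in place, it deletes any existing uncommented export JAVA_HOME= lines and appends one new line, leaving comment lines untouched. Running it twice still leaves exactly one active setting.

```shell
#!/usr/bin/env bash
# Sketch: make hadoop-env.sh export a fixed JAVA_HOME.
# Deletes existing uncommented "export JAVA_HOME=" lines, then appends one.
# Commented lines such as "# export JAVA_HOME=" are left as they are.
set_hadoop_java_home() {
  local env_file="$1" java_home="$2"
  # -i.bak works with both GNU and BSD sed; the backup is removed afterwards
  sed -i.bak '/^[[:space:]]*export JAVA_HOME=/d' "$env_file" || return 1
  rm -f "$env_file.bak"
  printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
}
```

For example: set_hadoop_java_home "$HADOOP_HOME/etc/hadoop/hadoop-env.sh" /home/wang/jdk-14.0.1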

Start Hadoop again:

cd $HADOOP_HOME/sbin

./start-all.sh

Output:

WARNING: Attempting to start all Apache Hadoop daemons as wang in 10 seconds.

WARNING: This is not a recommended production deployment configuration.

WARNING: Use CTRL-C to abort.

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [wang2019]

Starting resourcemanager

Starting nodemanagers

Confirm that the daemons started

After startup, run jps to see which daemons are running:

$ jps

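Result (ResourceManager and NodeManager are missing from this list, so the YARN daemons did not stay running even though start-all.sh reported starting them; their logs under $HADOOP_HOME/logs should show why):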

(base) wang@wang2019:~/hadoop-3.2.1/sbin$ jps

23488 NameNode

26931 Jps

23721 DataNode

24010 SecondaryNameNode

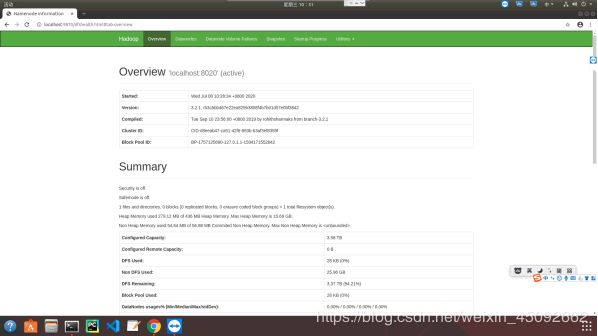
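The jps check can be made mechanical. jps prints one "<pid> <MainClass>" line per JVM, so the sketch below (missing_daemons is a hypothetical name) reads jps output on stdin, prints each of the five pseudo-distributed daemons that is absent, and returns 0 only if all are running. Fed the jps output shown above, it prints ResourceManager and NodeManager.

```shell
#!/usr/bin/env bash
# Sketch: read "jps" output on stdin and print every expected daemon that is
# missing. Returns 0 only if all five are present.
missing_daemons() {
  local out daemon missing=0
  out="$(cat)"
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # compare the main-class column exactly, so "NameNode" does not match
    # "SecondaryNameNode"
    if ! printf '%s\n' "$out" | awk -v d="$daemon" '$2 == d { f = 1 } END { exit !f }'; then
      echo "$daemon"
      missing=1
    fi
  done
  return "$missing"
}
```

For example: jps | missing_daemons || echo "some daemons are not running"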

Default Hadoop (HDFS NameNode) web UI: http://localhost:9870/

Default YARN web UI: http://localhost:8088
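If a page does not load, first check whether anything is listening on the port at all. The sketch below (port_open is a hypothetical name) opens a TCP connection through bash's /dev/tcp pseudo-device with a 2-second limit; it assumes bash and the coreutils timeout command, and it only tests the port, not the HTTP response.

```shell
#!/usr/bin/env bash
# Sketch: succeed if a TCP connection to HOST:PORT can be opened within 2 s.
# Uses bash's /dev/tcp redirection; errors are discarded.
port_open() {
  local host="$1" port="$2"
  timeout 2 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

For example: for p in 9870 8088; do port_open localhost "$p" && echo "$p open" || echo "$p closed"; done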

References:

https://blog.csdn.net/zheng911209/article/details/105389909

https://blog.csdn.net/lgyfhk/article/details/103099047