TensorFlow-2.x-10-Implementing AlexNet

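AlexNet is arguably the network that set off the deep learning boom. It stacks several convolutional layers, enlarges the receptive field, and adds techniques such as LRN (local response normalization), which together give it strong feature-extraction ability. Because of hardware limits at the time, the original model was trained on two GPUs to speed up training. For the structure and the principles behind it, see this article: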

Understanding the AlexNet Network in Depth (深入理解AlexNet网络)

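This chapter implements AlexNet with tf-2.x. Because of hardware limits, the number of neurons in the fully connected layers and the number of output units have been reduced. LRN is left out as well: it contributes little to accuracy and can make training harder to converge.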
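To get a feel for how much the smaller fully connected layers save, the sketch below counts weights and biases for the two variants. It assumes the 6*6*256 = 9216 flattened features that feed the first fully connected layer, the 4096/4096/1000 layer sizes of the original AlexNet, and the 512/256/10 sizes used in the model defined later in this article. The helper `dense_params` is a made-up name for illustration only.

```python
def dense_params(sizes):
    """Count weights plus biases for a chain of fully connected layers."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(sizes, sizes[1:]))

# 6 * 6 * 256 = 9216 flattened features feed the first fully connected layer.
flat = 6 * 6 * 256

original_fc = dense_params([flat, 4096, 4096, 1000])  # original AlexNet sizes
reduced_fc = dense_params([flat, 512, 256, 10])       # sizes used in this article

print(f"original FC parameters: {original_fc:,}")
print(f"reduced FC parameters:  {reduced_fc:,}")
print(f"reduction factor:       {original_fc / reduced_fc:.1f}x")
```

Most of the saving comes from the first fully connected layer, since it is the one connected to all 9216 flattened features.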

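1. Loading the dataset and resizing

The code below randomly draws batch_size training samples and test samples from the dataset (Fashion-MNIST) and resizes the images to 224*224.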

    class Data_load():
        def __init__(self):
            fashion_mnist = tf.keras.datasets.fashion_mnist
            (self.train_images, self.train_labels), (self.test_images, self.test_labels) \
                = fashion_mnist.load_data()
            # Scale pixels to [0, 1] and expand the data to [n, h, w, c] layout
            self.train_images = np.expand_dims(self.train_images.astype(np.float32) / 255.0, axis=-1)
            self.test_images = np.expand_dims(self.test_images.astype(np.float32) / 255.0, axis=-1)
            # Labels
            self.train_labels = self.train_labels.astype(np.int32)
            self.test_labels = self.test_labels.astype(np.int32)
            # Number of training and test samples
            self.num_train, self.num_test = self.train_images.shape[0], self.test_images.shape[0]

        def get_train_batch(self, batch_size):
            # Draw batch_size random indices (sampling with replacement)
            index = np.random.randint(0, np.shape(self.train_images)[0], batch_size)
            # Resize to 224*224; the source images are square, so no padding is added
            resized_images = tf.image.resize_with_pad(self.train_images[index], 224, 224)
            return resized_images.numpy(), self.train_labels[index]

        def get_test_batch(self, batch_size):
            # Draw batch_size random indices (sampling with replacement)
            index = np.random.randint(0, np.shape(self.test_images)[0], batch_size)
            # Resize to 224*224
            resized_images = tf.image.resize_with_pad(self.test_images[index], 224, 224)
            return resized_images.numpy(), self.test_labels[index]
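One consequence of drawing indices with `np.random.randint` is that sampling happens with replacement, so a pass of `num_train // batch_size` batches does not visit every image exactly once. The rough estimate below uses only the standard library and assumes the 60000 training images of Fashion-MNIST together with the batch size of 64 from the full listing. The function `expected_unique_fraction` is a hypothetical helper, not part of the article's code.

```python
def expected_unique_fraction(num_samples, num_draws):
    """Expected share of distinct samples after uniform draws with replacement."""
    return 1.0 - (1.0 - 1.0 / num_samples) ** num_draws

num_train = 60000   # Fashion-MNIST training set size
batch_size = 64     # batch size used in the full source listing
num_iter = num_train // batch_size

frac = expected_unique_fraction(num_train, num_iter * batch_size)
print(f"{num_iter} batches per pass, about {frac:.1%} of the images seen at least once")
```

If full coverage per epoch matters, shuffling the indices once per epoch and slicing them into consecutive batches would be one common alternative.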

2. Defining the model

The model follows the AlexNet network structure:

    def AlexNet():
        net = tf.keras.Sequential()
        net.add(tf.keras.layers.Conv2D(filters=96, kernel_size=11, strides=4, padding='same', activation='relu'))
        net.add(tf.keras.layers.MaxPool2D(pool_size=3, strides=2))
        net.add(tf.keras.layers.Conv2D(filters=256, kernel_size=5, padding='same', activation='relu'))
        net.add(tf.keras.layers.MaxPool2D(pool_size=3, strides=2))
        net.add(tf.keras.layers.Conv2D(filters=384, kernel_size=3, padding='same', activation='relu'))
        net.add(tf.keras.layers.Conv2D(filters=384, kernel_size=3, padding='same', activation='relu'))
        net.add(tf.keras.layers.Conv2D(filters=256, kernel_size=3, padding='same', activation='relu'))
        net.add(tf.keras.layers.MaxPool2D(pool_size=3, strides=2))
        net.add(tf.keras.layers.Flatten())
        # Fewer neurons to make training easier; the original AlexNet uses 4096
        net.add(tf.keras.layers.Dense(512, activation='relu'))
        net.add(tf.keras.layers.Dropout(0.5))
        # Fewer neurons to make training easier; the original AlexNet uses 4096
        net.add(tf.keras.layers.Dense(256, activation='relu'))
        net.add(tf.keras.layers.Dropout(0.5))
        # 10 Fashion-MNIST classes; softmax yields a probability distribution,
        # which is what sparse_categorical_crossentropy expects
        net.add(tf.keras.layers.Dense(10, activation='softmax'))
        return net

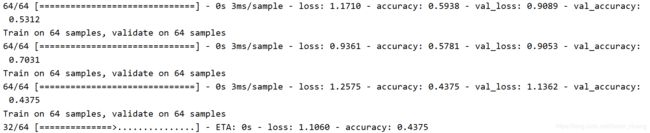
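The spatial sizes in this architecture can be checked by hand. The sketch below traces a 224*224 input through the convolution and pooling layers using the usual output-size rules: `ceil(size / stride)` for `padding='same'`, and `(size - pool) // stride + 1` for pooling with the default `padding='valid'`. The helper names `conv_same` and `pool_valid` are made up for this illustration.

```python
import math

def conv_same(size, stride=1):
    """Output size of a convolution with padding='same'."""
    return math.ceil(size / stride)

def pool_valid(size, pool=3, stride=2):
    """Output size of a pooling layer with the default padding='valid'."""
    return (size - pool) // stride + 1

size = 224
trace = []
for name, fn in [
    ("conv1 11x11, stride 4", lambda s: conv_same(s, 4)),
    ("pool1 3x3, stride 2", pool_valid),
    ("conv2 5x5", conv_same),
    ("pool2 3x3, stride 2", pool_valid),
    ("conv3 3x3", conv_same),
    ("conv4 3x3", conv_same),
    ("conv5 3x3", conv_same),
    ("pool3 3x3, stride 2", pool_valid),
]:
    size = fn(size)
    trace.append(size)
    print(f"{name:<24}-> {size} x {size}")

flattened = size * size * 256  # the last convolution has 256 filters
print("flattened features:", flattened)
```

The final 6*6*256 feature map flattens to 9216 values, which is the input width of the first fully connected layer.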

3. Training

Training is straightforward with the Keras API:

    # Relies on the globals data_load and batch_size defined in the full listing
    def train(num_epoches, net):
        optimizer = tf.keras.optimizers.SGD(learning_rate=0.01, momentum=0.0, nesterov=False)
        net.compile(optimizer=optimizer,
                    loss='sparse_categorical_crossentropy',
                    metrics=['accuracy'])
        num_iter = data_load.num_train // batch_size
        for e in range(num_epoches):
            for n in range(num_iter):
                x_batch, y_batch = data_load.get_train_batch(batch_size)
                test_x_batch, test_y_batch = data_load.get_test_batch(batch_size)
                # batch_size is passed explicitly so each sampled batch is one gradient step
                net.fit(x_batch, y_batch, batch_size=batch_size,
                        validation_data=(test_x_batch, test_y_batch))

    train(5, net)
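The loss `sparse_categorical_crossentropy` treats each output row as a probability distribution over the classes and penalizes the negative log of the probability assigned to the true class. A softmax output layer satisfies that assumption, while independent sigmoid outputs generally do not sum to 1. The small pure-Python illustration below uses made-up scores for three classes; it is only a sketch of the idea, not how Keras computes the loss internally.

```python
import math

def sigmoid(logits):
    """Element-wise sigmoid: each score is squashed independently."""
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]

def softmax(logits):
    """Softmax: scores are normalized jointly into a distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # made-up scores for three classes
sig = sigmoid(logits)
soft = softmax(logits)

print("sigmoid outputs sum to:", round(sum(sig), 4))
print("softmax outputs sum to:", round(sum(soft), 4))

true_class = 0
loss = -math.log(soft[true_class])  # cross-entropy for a single sample
print("cross-entropy with softmax:", round(loss, 4))
```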

Full source code:

    import tensorflow as tf
    import numpy as np

    # Set the GPU memory policy to "allocate memory only when needed".
    for gpu in tf.config.experimental.list_physical_devices('GPU'):
        tf.config.experimental.set_memory_growth(gpu, True)

    # 1. Load the data
    '''ImageNet is a very large dataset, so Fashion-MNIST is used here instead.
    The network expects 224*224 inputs, so we define a class that reads the
    dataset and resizes the images to 224*224.'''
    class Data_load():
        def __init__(self):
            fashion_mnist = tf.keras.datasets.fashion_mnist
            (self.train_images, self.train_labels), (self.test_images, self.test_labels) \
                = fashion_mnist.load_data()
            # Scale pixels to [0, 1] and expand the data to [n, h, w, c] layout
            self.train_images = np.expand_dims(self.train_images.astype(np.float32) / 255.0, axis=-1)
            self.test_images = np.expand_dims(self.test_images.astype(np.float32) / 255.0, axis=-1)
            # Labels
            self.train_labels = self.train_labels.astype(np.int32)
            self.test_labels = self.test_labels.astype(np.int32)
            # Number of training and test samples
            self.num_train, self.num_test = self.train_images.shape[0], self.test_images.shape[0]

        def get_train_batch(self, batch_size):
            # Draw batch_size random indices (sampling with replacement)
            index = np.random.randint(0, np.shape(self.train_images)[0], batch_size)
            # Resize to 224*224; the source images are square, so no padding is added
            resized_images = tf.image.resize_with_pad(self.train_images[index], 224, 224)
            return resized_images.numpy(), self.train_labels[index]

        def get_test_batch(self, batch_size):
            # Draw batch_size random indices (sampling with replacement)
            index = np.random.randint(0, np.shape(self.test_images)[0], batch_size)
            # Resize to 224*224
            resized_images = tf.image.resize_with_pad(self.test_images[index], 224, 224)
            return resized_images.numpy(), self.test_labels[index]

    batch_size = 64
    data_load = Data_load()
    x_train_batch, y_train_batch = data_load.get_train_batch(batch_size)
    print("x_batch shape:", x_train_batch.shape, "y_batch shape:", y_train_batch.shape)

    # 2. Define the model
    def AlexNet():
        net = tf.keras.Sequential()
        net.add(tf.keras.layers.Conv2D(filters=96, kernel_size=11, strides=4, padding='same', activation='relu'))
        net.add(tf.keras.layers.MaxPool2D(pool_size=3, strides=2))
        net.add(tf.keras.layers.Conv2D(filters=256, kernel_size=5, padding='same', activation='relu'))
        net.add(tf.keras.layers.MaxPool2D(pool_size=3, strides=2))
        net.add(tf.keras.layers.Conv2D(filters=384, kernel_size=3, padding='same', activation='relu'))
        net.add(tf.keras.layers.Conv2D(filters=384, kernel_size=3, padding='same', activation='relu'))
        net.add(tf.keras.layers.Conv2D(filters=256, kernel_size=3, padding='same', activation='relu'))
        net.add(tf.keras.layers.MaxPool2D(pool_size=3, strides=2))
        net.add(tf.keras.layers.Flatten())
        # Fewer neurons to make training easier; the original AlexNet uses 4096
        net.add(tf.keras.layers.Dense(512, activation='relu'))
        net.add(tf.keras.layers.Dropout(0.5))
        # Fewer neurons to make training easier; the original AlexNet uses 4096
        net.add(tf.keras.layers.Dense(256, activation='relu'))
        net.add(tf.keras.layers.Dropout(0.5))
        # 10 Fashion-MNIST classes; softmax yields a probability distribution,
        # which is what sparse_categorical_crossentropy expects
        net.add(tf.keras.layers.Dense(10, activation='softmax'))
        return net

    net = AlexNet()

    # Inspect the network structure by passing a dummy input through each layer
    X = tf.random.uniform((1, 224, 224, 1))
    for layer in net.layers:
        X = layer(X)
        print(layer.name, 'output shape\t', X.shape)

    # 3. Train
    def train(num_epoches, net):
        optimizer = tf.keras.optimizers.SGD(learning_rate=0.01, momentum=0.0, nesterov=False)
        net.compile(optimizer=optimizer,
                    loss='sparse_categorical_crossentropy',
                    metrics=['accuracy'])
        num_iter = data_load.num_train // batch_size
        for e in range(num_epoches):
            for n in range(num_iter):
                x_batch, y_batch = data_load.get_train_batch(batch_size)
                test_x_batch, test_y_batch = data_load.get_test_batch(batch_size)
                # batch_size is passed explicitly so each sampled batch is one gradient step
                net.fit(x_batch, y_batch, batch_size=batch_size,
                        validation_data=(test_x_batch, test_y_batch))

    train(5, net)