Reading a Hikvision RTSP video stream with OpenCV in Python for face detection

The code is all open source and uses OpenCV's DNN face detection algorithm.

Module versions used during testing:

python (3.5.1)

opencv-python (4.1.2.30)

opencv-contrib-python (4.1.2.30)

1. Here is the first version of the code:

from __future__ import division

import os
import sys
import time

import cv2

# Minimum confidence for a detection to be kept
conf_threshold = 0.7


def detectFaceOpenCVDnn(net, frame):
    """Run the face detector on one BGR frame; return the annotated copy and the boxes."""
    frameOpencvDnn = frame.copy()
    frameHeight = frameOpencvDnn.shape[0]
    frameWidth = frameOpencvDnn.shape[1]
    # 300x300 network input, mean subtraction, no R/B swap, no crop
    blob = cv2.dnn.blobFromImage(frameOpencvDnn, 1.0, (300, 300), [104, 117, 123], False, False)
    net.setInput(blob)
    detections = net.forward()
    bboxes = []
    for i in range(detections.shape[2]):
        confidence = detections[0, 0, i, 2]
        if confidence > conf_threshold:
            # Box corners are normalized to the frame size; scale them to pixels
            x1 = int(detections[0, 0, i, 3] * frameWidth)
            y1 = int(detections[0, 0, i, 4] * frameHeight)
            x2 = int(detections[0, 0, i, 5] * frameWidth)
            y2 = int(detections[0, 0, i, 6] * frameHeight)
            bboxes.append([x1, y1, x2, y2])
            cv2.rectangle(frameOpencvDnn, (x1, y1), (x2, y2), (0, 255, 0), int(round(frameHeight/150)), 8)
    return frameOpencvDnn, bboxes


if __name__ == "__main__":
    DNN = "TF"
    if DNN == "CAFFE":
        modelFile = "res10_300x300_ssd_iter_140000_fp16.caffemodel"
        configFile = "deploy.prototxt"
        net = cv2.dnn.readNetFromCaffe(configFile, modelFile)
    else:
        modelFile = "opencv_face_detector_uint8.pb"
        configFile = "opencv_face_detector.pbtxt"
        net = cv2.dnn.readNetFromTensorflow(modelFile, configFile)

    source = "rtsp://***:***@192.168.**.**:554/h264/ch1/main/av_stream"
    if len(sys.argv) > 1:
        source = sys.argv[1]

    cap = cv2.VideoCapture(source)
    hasFrame, frame = cap.read()
    if not hasFrame:
        sys.exit("Could not read a frame from the video source")

    # Use only the last path component for the output name: a full RTSP URL
    # contains "://" and "/" and is not a valid file name.
    name = os.path.splitext(os.path.basename(str(source)))[0]
    vid_writer = cv2.VideoWriter('output-dnn-{}.avi'.format(name), cv2.VideoWriter_fourcc('M', 'J', 'P', 'G'), 15, (frame.shape[1], frame.shape[0]))

    frame_count = 0
    tt_opencvDnn = 0
    while True:
        hasFrame, frame = cap.read()
        if not hasFrame:
            break
        frame_count += 1

        t = time.time()
        outOpencvDnn, bboxes = detectFaceOpenCVDnn(net, frame)
        tt_opencvDnn += time.time() - t
        fpsOpencvDnn = frame_count / tt_opencvDnn

        label = "OpenCV DNN ; FPS : {:.2f}".format(fpsOpencvDnn)
        cv2.putText(outOpencvDnn, label, (10, 50), cv2.FONT_HERSHEY_SIMPLEX, 1.4, (0, 0, 255), 3, cv2.LINE_AA)
        cv2.imshow("Face Detection Comparison", outOpencvDnn)
        vid_writer.write(outOpencvDnn)

        # Discard the timing of the first frame (it includes network warm-up)
        if frame_count == 1:
            tt_opencvDnn = 0

        k = cv2.waitKey(10)
        if k == 27:  # Esc
            break

    cap.release()
    vid_writer.release()
    cv2.destroyAllWindows()

Note: change the RTSP stream address above to the address of your own camera!
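If you switch between cameras or between the main and sub stream often, it can help to build the address from its parts. The following is only a sketch that follows the path pattern used in this post (`/h264/ch1/main/av_stream`); the function name `build_rtsp_url`, its parameters, and the example host `192.168.1.64` are hypothetical, and percent-encoding the credentials is my assumption for passwords that contain characters such as `@`. Check your own camera's documentation for the exact path.

```python
from urllib.parse import quote


def build_rtsp_url(user, password, host, port=554, channel=1, stream="main"):
    """Build an RTSP address following the pattern used in this post.

    stream is "main" (full resolution) or "sub" (lower resolution).
    """
    if stream not in ("main", "sub"):
        raise ValueError("stream must be 'main' or 'sub'")
    # Percent-encode credentials so that characters like '@' or ':' in the
    # password do not break the URL (assumption, see the text above).
    return "rtsp://{}:{}@{}:{}/h264/ch{}/{}/av_stream".format(
        quote(user, safe=""), quote(password, safe=""),
        host, port, channel, stream)


# Hypothetical example values, not a real camera
url = build_rtsp_url("admin", "p@ss", "192.168.1.64", stream="sub")
print(url)
```

The result can be passed directly to `cv2.VideoCapture` in place of the hard-coded `source` string.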

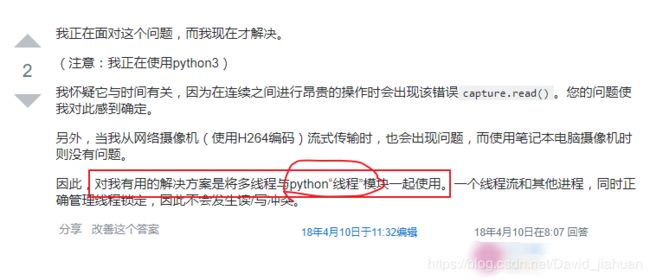
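The boxes drawn on the frame come from the detector output, where each row holds `[image_id, label, confidence, x1, y1, x2, y2]` and the four corner values are fractions of the frame size. For a face at the edge of the picture these values may fall slightly outside the 0 to 1 range, so if you want to crop the face region later, clamping is useful. A minimal sketch in plain Python (the helper name `to_pixel_box` is my own, it is not part of OpenCV):

```python
def to_pixel_box(detection, frame_width, frame_height):
    """Convert one detection row to a clamped pixel box [x1, y1, x2, y2].

    detection: [image_id, label, confidence, x1, y1, x2, y2] with the
    corner values normalized to the frame size.
    """
    def clamp(value, upper):
        # Keep the coordinate inside the valid pixel range [0, upper]
        return max(0, min(int(value), upper))

    x1, y1, x2, y2 = detection[3:7]
    return [
        clamp(x1 * frame_width, frame_width - 1),
        clamp(y1 * frame_height, frame_height - 1),
        clamp(x2 * frame_width, frame_width - 1),
        clamp(y2 * frame_height, frame_height - 1),
    ]


# Made-up detection row for a 640x480 frame; y2 is deliberately out of range
row = [0, 1, 0.9, 0.25, 0.5, 0.75, 1.2]
print(to_pixel_box(row, 640, 480))
```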

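2. When running the example above, you will find that as soon as a face is detected the program disconnects and prints an error:

error while decoding MB 78 12, bytestream -5

The numbers in your message may differ from mine. The message is emitted by the H.264 decoder when it receives damaged or incomplete data. From what I found, the cause here is related to Python threading: frames are read and processed in the same thread, so while detection is running the reader falls behind the stream.

So I went on to search for "python multithreading", and after some modification and debugging this is the latest version: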

from __future__ import division

import os
import queue
import sys
import threading
import time

import cv2

# Minimum confidence for a detection to be kept
conf_threshold = 0.7

q = queue.Queue()
# Set by either thread to tell the other one to stop
stop_event = threading.Event()


def detectFaceOpenCVDnn(net, frame):
    """Run the face detector on one BGR frame; return the annotated copy and the boxes."""
    frameOpencvDnn = frame.copy()
    frameHeight = frameOpencvDnn.shape[0]
    frameWidth = frameOpencvDnn.shape[1]
    blob = cv2.dnn.blobFromImage(frameOpencvDnn, 1.0, (300, 300), [104, 117, 123], False, False)
    net.setInput(blob)
    detections = net.forward()
    bboxes = []
    for i in range(detections.shape[2]):
        confidence = detections[0, 0, i, 2]
        if confidence > conf_threshold:
            x1 = int(detections[0, 0, i, 3] * frameWidth)
            y1 = int(detections[0, 0, i, 4] * frameHeight)
            x2 = int(detections[0, 0, i, 5] * frameWidth)
            y2 = int(detections[0, 0, i, 6] * frameHeight)
            bboxes.append([x1, y1, x2, y2])
            cv2.rectangle(frameOpencvDnn, (x1, y1), (x2, y2), (0, 255, 0), int(round(frameHeight/150)), 8)
    return frameOpencvDnn, bboxes


def receive(cap):
    """Producer thread: read frames from the stream and queue them."""
    print("start Receive")
    while not stop_event.is_set():
        hasFrame, frame = cap.read()
        if not hasFrame:
            break  # stream ended or the connection dropped
        q.put(frame)
    stop_event.set()


def display(net, vid_writer):
    """Consumer thread: detect faces, show and record the result."""
    print("start Display")
    frame_count = 0
    tt_opencvDnn = 0
    while not stop_event.is_set():
        try:
            # Block briefly instead of busy-waiting on q.empty()
            frame = q.get(timeout=1)
        except queue.Empty:
            continue
        frame_count += 1

        t = time.time()
        outOpencvDnn, bboxes = detectFaceOpenCVDnn(net, frame)
        tt_opencvDnn += time.time() - t
        fpsOpencvDnn = frame_count / tt_opencvDnn

        label = "OpenCV DNN ; FPS : {:.2f}".format(fpsOpencvDnn)
        cv2.putText(outOpencvDnn, label, (10, 50), cv2.FONT_HERSHEY_SIMPLEX, 1.4, (0, 0, 255), 3, cv2.LINE_AA)
        cv2.imshow("Face Detection Comparison", outOpencvDnn)
        vid_writer.write(outOpencvDnn)

        if frame_count == 1:
            tt_opencvDnn = 0

        k = cv2.waitKey(10)
        if k == 27:  # Esc
            stop_event.set()


if __name__ == "__main__":
    # OpenCV DNN supports 2 networks.
    # 1. FP16 version of the original caffe implementation ( 5.4 MB )
    # 2. 8 bit Quantized version using Tensorflow ( 2.7 MB )
    DNN = "TF"
    if DNN == "CAFFE":
        modelFile = "res10_300x300_ssd_iter_140000_fp16.caffemodel"
        configFile = "deploy.prototxt"
        net = cv2.dnn.readNetFromCaffe(configFile, modelFile)
    else:
        modelFile = "opencv_face_detector_uint8.pb"
        configFile = "opencv_face_detector.pbtxt"
        net = cv2.dnn.readNetFromTensorflow(modelFile, configFile)

    source = "rtsp://***:***@192.168.**.**:554/h264/ch1/sub/av_stream"
    if len(sys.argv) > 1:
        source = sys.argv[1]

    # Open the stream once and hand the same capture to the receiver thread
    cap = cv2.VideoCapture(source)
    hasFrame, frame = cap.read()
    if not hasFrame:
        sys.exit("Could not read a frame from the video source")

    # Use only the last path component: a full RTSP URL is not a valid file name
    name = os.path.splitext(os.path.basename(str(source)))[0]
    vid_writer = cv2.VideoWriter('output-dnn-{}.avi'.format(name), cv2.VideoWriter_fourcc('M', 'J', 'P', 'G'), 15, (frame.shape[1], frame.shape[0]))

    p1 = threading.Thread(target=receive, args=(cap,))
    p2 = threading.Thread(target=display, args=(net, vid_writer))
    p1.start()
    p2.start()

    # Wait for both threads before releasing anything they still use
    p1.join()
    p2.join()

    cap.release()
    vid_writer.release()
    cv2.destroyAllWindows()

Careful readers may have noticed that the RTSP address in source has changed from the main stream (main) in the first version to the sub stream (sub). The main stream is 1080P, which is quite heavy for my laptop to process, so to lower the video resolution I switched to the real-time sub stream!
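One thing to keep in mind with the threaded version: the queue `q` is unbounded, so if detection is slower than the camera, frames pile up and the displayed picture drifts further and further behind real time. A possible tweak, which is my own assumption and not part of the code tested above, is to use a small bounded queue and discard the oldest frame when it is full. The helper name `put_latest` is hypothetical; the sketch uses only the standard `queue` module:

```python
import queue


def put_latest(q, item):
    """Put item into a bounded queue, dropping the oldest entry if it is full."""
    while True:
        try:
            q.put_nowait(item)
            return
        except queue.Full:
            try:
                q.get_nowait()  # discard the stalest frame to make room
            except queue.Empty:
                pass  # the consumer emptied the queue in the meantime; retry


# Demonstration with integers standing in for frames
frames = queue.Queue(maxsize=2)
for i in range(5):
    put_latest(frames, i)
print(frames.get_nowait(), frames.get_nowait())
```

In `receive()`, this would mean creating the queue with a `maxsize` and calling `put_latest(q, frame)` instead of `q.put(frame)`. The trade-off is that some frames are never processed, which is usually acceptable for a live preview but not if every frame must be recorded.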