Python+Flask实现全国、全球疫情大数据可视化(一):爬取疫情数据并保存至mysql数据库

文章目录

- 相关文章

- 一、实现效果

- 二、数据获取地址

- 注意1:需要获取的字段

- 注意2:json数据格式标准化

- 注意3:外国国家名称转转换为英文

- 三、数据保存

- 四、完整项目获取

相关文章

Python+Flask实现全国、全球疫情大数据可视化(二):网页页面布局+echarts可视化中国地图、世界地图、柱状图和折线图

Python+Flask实现全国、全球疫情大数据可视化(三):ajax读取mysql中的数据并将参数传递至echarts表格中

一、实现效果

最近简单学习了一下flask,决定来做一个疫情大数据的网页出来。

话不多说先上效果图。还是比较喜欢这样的排版的。

![]()

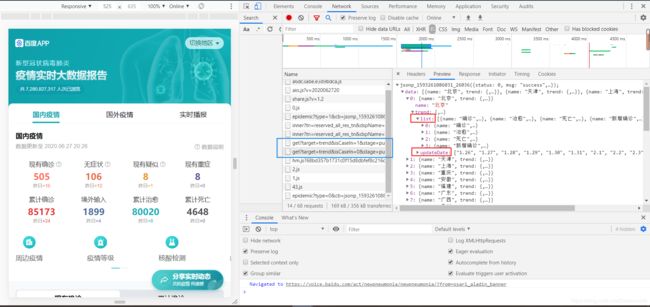

二、数据获取地址

数据来源于百度提供的api接口。直接在百度搜索疫情数据。就能看到国内疫情与国外疫情两个内容了。然后进入网页去找接口。那么接口我已经找到了,如下:

#国内疫情数据接口

url='https://voice.baidu.com/newpneumonia/get?target=trend&isCaseIn=0&stage=publish&callback=jsonp_1593074577909_83653'

#国外疫情数据接口

url2='https://voice.baidu.com/newpneumonia/get?target=trend&isCaseIn=1&stage=publish&callback=jsonp_1593247926359_31143'

注意1:需要获取的字段

我们需要爬取的字段就name(疫情地区)、List(疫情数据)与updateDate(数据更新日期)。这里有一点要注意的是,其中List与updateDate是一个一一对应的关系。

注意2:json数据格式标准化

在这里如果直接将请求到的接口数据转换为json的话会出现报错,我们需要把开头部分jsonp_1593261086031_26036(去除,以及文末的分号和括号。再将数据转换为json就可以了。代码如下

import requests

import pandas as pd

import json

from sqlalchemy import create_engine

#标准化字符串为json格式

#国内疫情数据

url='https://voice.baidu.com/newpneumonia/get?target=trend&isCaseIn=0&stage=publish&callback=jsonp_1593074577909_83653'

#国外疫情数据

url2='https://voice.baidu.com/newpneumonia/get?target=trend&isCaseIn=1&stage=publish&callback=jsonp_1593247926359_31143'

text1='jsonp_1593074577909_83653('

text2='jsonp_1593247926359_31143('

def get_text(extra,url):

r=requests.get(url).text

r=r.replace(extra,"")

r=r[0:-2]

r=json.loads(r)

return r

r=get_text(text2,url2)

names=[]

dates=[]

dignose=[]

heal=[]

dead=[]

add=[]

#遍历每个地点

for place in r['data']:

# print(place['name'])

length=len(place['trend']['updateDate'])

place_name=(place['name'] for i in range(length))

names.extend(place_name)

#获取日期数据

dates.extend(place['trend']['updateDate'])

for trend in place['trend']['list']:

if(trend['name']=='确诊'):

dignose.extend(trend['data'])

if(trend['name']=='治愈'):

heal.extend(trend['data'])

if(trend['name']=='死亡'):

dead.extend(trend['data'])

if(trend['name']=='新增确诊'):

add.extend(trend['data'])

#新增确诊数据如果存在缺失值,则将缺失值填充为0

diff=len(names)-len(add)

if(diff!=0):

add=add+[0 for i in range(diff)]

print(len(names),len(dates),len(dignose),len(heal),len(dead),len(add))

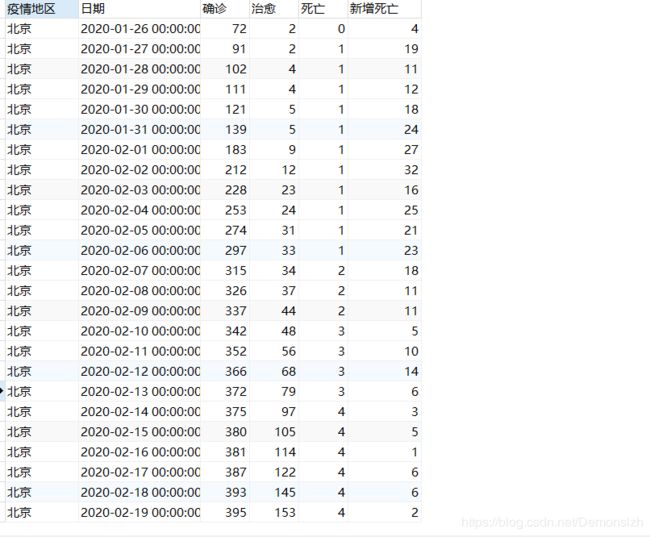

df=pd.DataFrame({

"疫情地区":names,

"日期":dates,

"确诊":dignose,

"治愈":heal,

"死亡":dead,

"新增死亡":add

})

df['日期']=['2020.'+i for i in df['日期']]

df['日期']=df['日期'].str.replace(".","-")

df['日期']=pd.to_datetime(df['日期'])

注意3:外国国家名称转转换为英文

我们需要爬的数据是中国国内数据与全球国家的数据。由于可视化时需要用到echarts绘制世界地图,而爬取到的各国家名称是中文,下面需要将中文转换为英文。

转换函数如下:

def traslate(word):

'''

将世界各国的中文名转化为英文

'''

countries={

"Somalia": "索马里",

"Liechtenstein": "列支敦士登",

"Morocco": "摩洛哥",

"W. Sahara": "西撒哈拉",

"Serbia": "塞尔维亚",

"Afghanistan": "阿富汗",

"Angola": "安哥拉",

"Albania": "阿尔巴尼亚",

"Andorra": "安道尔共和国",

"United Arab Emirates": "阿拉伯联合酋长国",

"Argentina": "阿根廷",

"Armenia": "亚美尼亚",

"Australia": "澳大利亚",

"Austria": "奥地利",

"Azerbaijan": "阿塞拜疆",

"Burundi": "布隆迪",

"Belgium": "比利时",

"Benin": "贝宁",

"Burkina Faso": "布基纳法索",

"Bangladesh": "孟加拉国",

"Bulgaria": "保加利亚",

"Bahrain": "巴林",

"Bahamas": "巴哈马",

"Bosnia and Herz.": "波斯尼亚和黑塞哥维那",

"Belarus": "白俄罗斯",

"Belize": "伯利兹",

"Bermuda": "百慕大",

"Bolivia": "玻利维亚",

"Brazil": "巴西",

"Barbados": "巴巴多斯",

"Brunei": "文莱",

"Bhutan": "不丹",

"Botswana": "博茨瓦纳",

"Central African Rep.": "中非",

"Canada": "加拿大",

"Switzerland": "瑞士",

"Chile": "智利",

"China": "中国",

"Côte d'Ivoire": "科特迪瓦",

"Cameroon": "喀麦隆",

"Dem. Rep. Congo": "刚果民主共和国",

"Congo": "刚果",

"Colombia": "哥伦比亚",

"Cape Verde": "佛得角",

"Costa Rica": "哥斯达黎加",

"Cuba": "古巴",

"N. Cyprus": "北塞浦路斯",

"Cyprus": "塞浦路斯",

"Czech Rep.": "捷克",

"Germany": "德国",

"Djibouti": "吉布提",

"Denmark": "丹麦",

"Dominican Rep.": "多米尼加",

"Algeria": "阿尔及利亚",

"Ecuador": "厄瓜多尔",

"Egypt": "埃及",

"Eritrea": "厄立特里亚",

"Spain": "西班牙",

"Estonia": "爱沙尼亚",

"Ethiopia": "埃塞俄比亚",

"Finland": "芬兰",

"Fiji": "斐济",

"France": "法国",

"Gabon": "加蓬",

"United Kingdom": "英国",

"Georgia": "格鲁吉亚",

"Ghana": "加纳",

"Guinea": "几内亚",

"Gambia": "冈比亚",

"Guinea-Bissau": "几内亚比绍",

"Eq. Guinea": "赤道几内亚",

"Greece": "希腊",

"Grenada": "格林纳达",

"Greenland": "格陵兰",

"Guatemala": "危地马拉",

"Guam": "关岛",

"Guyana": "圭亚那",

"Honduras": "洪都拉斯",

"Croatia": "克罗地亚",

"Haiti": "海地",

"Hungary": "匈牙利",

"Indonesia": "印度尼西亚",

"India": "印度",

"Br. Indian Ocean Ter.": "英属印度洋领土",

"Ireland": "爱尔兰",

"Iran": "伊朗",

"Iraq": "伊拉克",

"Iceland": "冰岛",

"Israel": "以色列",

"Italy": "意大利",

"Jamaica": "牙买加",

"Jordan": "约旦",

"Japan": "日本",

"Siachen Glacier": "锡亚琴冰川",

"Kazakhstan": "哈萨克斯坦",

"Kenya": "肯尼亚",

"Kyrgyzstan": "吉尔吉斯坦",

"Cambodia": "柬埔寨",

"Korea": "韩国",

"Kuwait": "科威特",

"Lao PDR": "老挝",

"Lebanon": "黎巴嫩",

"Liberia": "利比里亚",

"Libya": "利比亚",

"Sri Lanka": "斯里兰卡",

"Lesotho": "莱索托",

"Lithuania": "立陶宛",

"Luxembourg": "卢森堡",

"Latvia": "拉脱维亚",

"Moldova": "摩尔多瓦",

"Madagascar": "马达加斯加",

"Mexico": "墨西哥",

"Macedonia": "马其顿",

"Mali": "马里",

"Malta": "马耳他",

"Myanmar": "缅甸",

"Montenegro": "黑山",

"Mongolia": "蒙古",

"Mozambique": "莫桑比克",

"Mauritania": "毛里塔尼亚",

"Mauritius": "毛里求斯",

"Malawi": "马拉维",

"Malaysia": "马来西亚",

"Namibia": "纳米比亚",

"New Caledonia": "新喀里多尼亚",

"Niger": "尼日尔",

"Nigeria": "尼日利亚",

"Nicaragua": "尼加拉瓜",

"Netherlands": "荷兰",

"Norway": "挪威",

"Nepal": "尼泊尔",

"New Zealand": "新西兰",

"Oman": "阿曼",

"Pakistan": "巴基斯坦",

"Panama": "巴拿马",

"Peru": "秘鲁",

"Philippines": "菲律宾",

"Papua New Guinea": "巴布亚新几内亚",

"Poland": "波兰",

"Puerto Rico": "波多黎各",

"Dem. Rep. Korea": "朝鲜",

"Portugal": "葡萄牙",

"Paraguay": "巴拉圭",

"Palestine": "巴勒斯坦",

"Qatar": "卡塔尔",

"Romania": "罗马尼亚",

"Russia": "俄罗斯",

"Rwanda": "卢旺达",

"Saudi Arabia": "沙特阿拉伯",

"Sudan": "苏丹",

"S. Sudan": "南苏丹",

"Senegal": "塞内加尔",

"Singapore": "新加坡",

"Solomon Is.": "所罗门群岛",

"Sierra Leone": "塞拉利昂",

"El Salvador": "萨尔瓦多",

"Suriname": "苏里南",

"Slovakia": "斯洛伐克",

"Slovenia": "斯洛文尼亚",

"Sweden": "瑞典",

"Swaziland": "斯威士兰",

"Seychelles": "塞舌尔",

"Syria": "叙利亚",

"Chad": "乍得",

"Togo": "多哥",

"Thailand": "泰国",

"Tajikistan": "塔吉克斯坦",

"Turkmenistan": "土库曼斯坦",

"Timor-Leste": "东帝汶",

"Tonga": "汤加",

"Trinidad and Tobago": "特立尼达和多巴哥",

"Tunisia": "突尼斯",

"Turkey": "土耳其",

"Tanzania": "坦桑尼亚",

"Uganda": "乌干达",

"Ukraine": "乌克兰",

"Uruguay": "乌拉圭",

"United States": "美国",

"Uzbekistan": "乌兹别克斯坦",

"Venezuela": "委内瑞拉",

"Vietnam": "越南",

"Vanuatu": "瓦努阿图",

"Yemen": "也门",

"South Africa": "南非",

"Zambia": "赞比亚",

"Zimbabwe": "津巴布韦",

"Aland": "奥兰群岛",

"American Samoa": "美属萨摩亚",

"Fr. S. Antarctic Lands": "南极洲",

"Antigua and Barb.": "安提瓜和巴布达",

"Comoros": "科摩罗",

"Curaçao": "库拉索岛",

"Cayman Is.": "开曼群岛",

"Dominica": "多米尼加",

"Falkland Is.": "马尔维纳斯群岛(福克兰)",

"Faeroe Is.": "法罗群岛",

"Micronesia": "密克罗尼西亚",

"Heard I. and McDonald Is.": "赫德岛和麦克唐纳群岛",

"Isle of Man": "曼岛",

"Jersey": "泽西岛",

"Kiribati": "基里巴斯",

"Saint Lucia": "圣卢西亚",

"N. Mariana Is.": "北马里亚纳群岛",

"Montserrat": "蒙特塞拉特",

"Niue": "纽埃",

"Palau": "帕劳",

"Fr. Polynesia": "法属波利尼西亚",

"S. Geo. and S. Sandw. Is.": "南乔治亚岛和南桑威奇群岛",

"Saint Helena": "圣赫勒拿",

"St. Pierre and Miquelon": "圣皮埃尔和密克隆群岛",

"São Tomé and Principe": "圣多美和普林西比",

"Turks and Caicos Is.": "特克斯和凯科斯群岛",

"St. Vin. and Gren.": "圣文森特和格林纳丁斯",

"U.S. Virgin Is.": "美属维尔京群岛",

"Samoa": "萨摩亚"

}

#中文-英文字典

c2e={}

for k,v in countries.items():

c2e[v]=k

try:

res=c2e[word]

except:

res=word

return res

最后再加上如下语句将英文国家名的中文名翻译为英文,并作为新的一列加入到DataFrame中

df['name']=df.疫情地区.apply(traslate)

三、数据保存

def save_data(df,db_name,table_name,user,password):

conn = create_engine('mysql://{}:{}@localhost:3306/{}?charset=utf8'.format(user,password,db_name))

pd.io.sql.to_sql(df,table_name,con=conn,if_exists = 'replace',index=None)

df.head()

#注意换成你的数据库的库名、表名、账号密码

save_data(df,db_name,table_name,user,password)

四、完整项目获取

关注一下公众号,回复"0007"即可get完整项目源码

![]()