程序设计思路

爬虫程序的设计思路大同小异,下面是我的设计思路

1.模拟浏览器抓取数据

2.清洗数据

3.存入数据库或者Excel

4.数据分析与处理

需要的类库

requests 用于模拟浏览器向网站发送请求

BeautifulSoup 用于将抓取的html数据进行清洗

html5lib 用于BeautifulSoup对html的解析使用

openpyxl 用于将清洗过的数据存入Excel

抓取数据

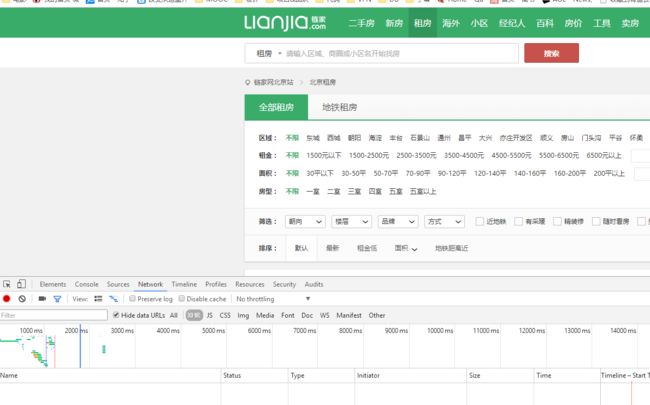

通过对network的分析没有找到链家通过json传递的数据,这时候我们的策略就是读取网页分析网页。

使用python当中的requests模块模拟浏览器访问的过程获取html信息。

这里需要注意的是,当我们需要requests模拟浏览器去访问链家网站的时候在headers里面我们要模拟完整的信息。

模拟完整信息的目的是为了保证防止链家的服务器误以为我们是程序在抓取网站的信息而阻止我们抓取新信息。

代码片段

headers = {

'Accept':'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',

'Accept-Encoding':'gzip, deflate, sdch',

'Accept-Language':'zh-CN,zh;q=0.8',

'Connection':'keep-alive',

'Cookie':'lianjia_uuid=9615f3ee-0865-4a66-b674-b94b64f709dc; logger_session=d205696d584e350975cf1d649f944f4b; select_city=110000; all-lj=144beda729446a2e2a6860f39454058b; _smt_uid=5871c8fd.2beaddb7; CNZZDATA1253477573=329766555-1483847667-http%253A%252F%252Fbj.fang.lianjia.com%252F%7C1483851778; CNZZDATA1254525948=58093639-1483848060-http%253A%252F%252Fbj.fang.lianjia.com%252F%7C1483853460; CNZZDATA1255633284=1668427390-1483847993-http%253A%252F%252Fbj.fang.lianjia.com%252F%7C1483851644; CNZZDATA1255604082=1041799577-1483850582-http%253A%252F%252Fbj.fang.lianjia.com%252F%7C1483850582; _ga=GA1.2.430968090.1483852019; lianjia_ssid=05e8ddcc-b863-4ff6-9f1d-f283e82edd4f',

'Host':'bj.lianjia.com',

'Upgrade-Insecure-Requests':'1',

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.116 Safari/537.36'

}

html = requests.get(realurl,headers=headers)

# 解码

html.encoding="utf-8"

soup = BeautifulSoup(html.text,"html5lib")

info_ul = soup.find(id="house-lst")

显示html的时候我们要使用requests的encoding方法设置好编码格式,防止出现乱码。

清洗数据

我们通过requests模块提供的方法获得的数据是带有html标签的数据,显然这些html标签对我们进行数据分析是无用的,所以我们需要对数据进行清洗,这时候就需要使用BeautifulSoup模块来进行操作了。

# 创建一个list

house_all = []

# 遍历

for i in range(0,30):

house_one = []

info_li = info_ul.find(attrs={"data-index":i})

info_panel = info_li.find(attrs={"class":"info-panel"})

# 清洗数据

title = info_panel.h2.text

region = info_panel.find(attrs={"class":"region"}).text

zone = info_panel.find(attrs={"class":"zone"}).text

meters = info_panel.find(attrs={"class":"meters"}).text

con = info_panel.find(attrs={"class":"con"}).text

# subway = info_panel.find(attrs={"class":"fang-subway-ex"}).text

# visit = info_panel.find(attrs={"class":"haskey-ex"}).text

# warm = info_panel.find(attrs={"class":"heating-ex"}).text

price = info_panel.find(attrs={"class":"price"}).text

update = info_panel.find(attrs={"class":"price-pre"}).text

lookman = info_panel.find(attrs={"class":"square"}).text

house_one.append(title)

house_one.append(region)

house_one.append(zone)

house_one.append(meters)

house_one.append(con)

# house_one.append(subway)

# house_one.append(visit)

# house_one.append(warm)

house_one.append(price)

house_one.append(update)

house_one.append(lookman)

house_all.append(house_one)

return house_all

具体方法可以去看BeautifulSoup的中文官方手册。上面我只是将数据逐一取出来,然后放到一个list里面。

存入Excel

显然链家网并不允许我们去抓取几十万条数据,所以我们使用Excel存储我们抓到的数据就可以了。这需要用到openpyxl模块,这个python模块可以对xlsx文件进行操作。

编写主程序将每一页的数据遍历插入到Excel当中。

def main():

url = "http://bj.lianjia.com/zufang/"

house_result = []

for i in range(1,101):

params = "pg"+str(i)+"l2"

realurl = url + params

result = get_house(realurl)

house_result = house_result + result

wb = Workbook()

ws1 = wb.active

ws1.title = "beijing"

for row in house_result:

ws1.append(row)

wb.save('北京两室一厅租房信息.xlsx')

if __name__ == '__main__':

main()

代码思路

1.引入需要使用库

2.创建一个静态方法get_house()接受一个参数realurl

3.对抓取到的数据进行清洗获得纯文本

4.主函数当中遍历页数,多次调用get_house()获取每一页的数据逐行写入到excel当中

完整代码参考

import requests

from bs4 import BeautifulSoup

from openpyxl import Workbook

def get_house(realurl):

# 请求

headers = {

'Accept':'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',

'Accept-Encoding':'gzip, deflate, sdch',

'Accept-Language':'zh-CN,zh;q=0.8',

'Connection':'keep-alive',

'Cookie':'lianjia_uuid=9615f3ee-0865-4a66-b674-b94b64f709dc; logger_session=d205696d584e350975cf1d649f944f4b; select_city=110000; all-lj=144beda729446a2e2a6860f39454058b; _smt_uid=5871c8fd.2beaddb7; CNZZDATA1253477573=329766555-1483847667-http%253A%252F%252Fbj.fang.lianjia.com%252F%7C1483851778; CNZZDATA1254525948=58093639-1483848060-http%253A%252F%252Fbj.fang.lianjia.com%252F%7C1483853460; CNZZDATA1255633284=1668427390-1483847993-http%253A%252F%252Fbj.fang.lianjia.com%252F%7C1483851644; CNZZDATA1255604082=1041799577-1483850582-http%253A%252F%252Fbj.fang.lianjia.com%252F%7C1483850582; _ga=GA1.2.430968090.1483852019; lianjia_ssid=05e8ddcc-b863-4ff6-9f1d-f283e82edd4f',

'Host':'bj.lianjia.com',

'Upgrade-Insecure-Requests':'1',

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.116 Safari/537.36'

}

html = requests.get(realurl,headers=headers)

# 解码

html.encoding="utf-8"

soup = BeautifulSoup(html.text,"html5lib")

info_ul = soup.find(id="house-lst")

# 创建一个list

house_all = []

# 遍历

for i in range(0,30):

house_one = []

info_li = info_ul.find(attrs={"data-index":i})

info_panel = info_li.find(attrs={"class":"info-panel"})

# 清洗数据

title = info_panel.h2.text

region = info_panel.find(attrs={"class":"region"}).text

zone = info_panel.find(attrs={"class":"zone"}).text

meters = info_panel.find(attrs={"class":"meters"}).text

con = info_panel.find(attrs={"class":"con"}).text

# subway = info_panel.find(attrs={"class":"fang-subway-ex"}).text

# visit = info_panel.find(attrs={"class":"haskey-ex"}).text

# warm = info_panel.find(attrs={"class":"heating-ex"}).text

price = info_panel.find(attrs={"class":"price"}).text

update = info_panel.find(attrs={"class":"price-pre"}).text

lookman = info_panel.find(attrs={"class":"square"}).text

house_one.append(title)

house_one.append(region)

house_one.append(zone)

house_one.append(meters)

house_one.append(con)

# house_one.append(subway)

# house_one.append(visit)

# house_one.append(warm)

house_one.append(price)

house_one.append(update)

house_one.append(lookman)

house_all.append(house_one)

return house_all

def main():

url = "http://bj.lianjia.com/zufang/"

house_result = []

for i in range(1,101):

params = "pg"+str(i)+"l2"

realurl = url + params

result = get_house(realurl)

house_result = house_result + result

wb = Workbook()

ws1 = wb.active

ws1.title = "beijing"

for row in house_result:

ws1.append(row)

wb.save('北京两室一厅租房信息.xlsx')

if __name__ == '__main__':

main()