python-scrapy爬虫框架爬取王者荣耀英雄皮肤图片和技能信息

1.创建工程

将路径切换到想要保存爬虫项目的文件夹内,运行scrapy startproject WZRY新建一个名为WZRY的工程。

2.产生爬虫

将路径切换至新创建的spiders文件夹中,运行scrapy genspider wzry "https://pvp.qq.com/",wzry是产生的爬虫名,"https://pvp.qq.com/"是要爬取的域名。

3.具体实现

3.1 item.py

列出想要爬取的数据信息

import scrapy

class WzryItem(scrapy.Item):

# define the fields for your item here like:

hero=scrapy.Field()

skins=scrapy.Field()

skill1=scrapy.Field()

skill1_detail=scrapy.Field()

skill2=scrapy.Field()

skill2_detail=scrapy.Field()

skill3=scrapy.Field()

skill3_detail=scrapy.Field()

skill4=scrapy.Field()

skill4_detail=scrapy.Field()

skill5=scrapy.Field()

skill5_detail=scrapy.Field()

image_urls=scrapy.Field()3.2 wzry.py

爬取item.py中的相应数据。我们需要获取英雄名、皮肤名、皮肤url、各个技能及其详情等信息,要通过二级子页面爬取。爬取全部英雄的列表页,获取英雄名和英雄详情页url对应的编号,将编号存储下来,再逐个爬取各个英雄的详情页获取技能信息。

# -*- coding: utf-8 -*-

import scrapy

import re

from WZRY.items import WzryItem

class WzrySpider(scrapy.Spider):

name = 'wzry'

allowed_domains = ['pvp.qq.com'] #不能带https,会报错

start_urls = ['https://pvp.qq.com/web201605/herolist.shtml']

def parse(self, response):

#//是相对路径,/是绝对路径

hero_list=response.xpath("//ul[@class='herolist clearfix']//a")

print('一共有'+str(len(hero_list))+'个英雄')

for hero in hero_list:

item=WzryItem()

item['hero']=hero.xpath("./text()").extract()[0]

number=hero.xpath("./img/@src").extract()[0][-7:-4]

item['image_urls']="https://game.gtimg.cn/images/yxzj/img201606/

skin/hero-info/{0}/{0}-bigskin-".format(number)

url="https://pvp.qq.com/web201605/herodetail/"+number+".shtml"

yield scrapy.Request(url=url,callback=self.parse_detail,meta={'item':item})

def parse_detail(self,response):

item=response.meta["item"]

sample=r'[\u4E00-\u9FA5●]+'

skins=response.xpath(".//ul[@class='pic-pf-list pic-pf-list3']/@data-imgname").extract()[0]

item['skins']=re.findall(sample,skins)

image_urls=item['image_urls']

item['image_urls']=[]

for i in range(1,len(item['skins'])+1):

image_url=image_urls+str(i)+'.jpg'

item['image_urls'].append(image_url)

skills=response.xpath(".//p[@class='skill-name']/b/text()").extract()

skills_detail=response.xpath(".//p[@class='skill-desc']/text()").extract()

item['skill1']=skills[0]

item['skill2']=skills[1]

item['skill3']=skills[2]

item['skill4']=skills[3]

item['skill1_detail']=skills_detail[0]

item['skill2_detail']=skills_detail[1]

item['skill3_detail']=skills_detail[2]

item['skill4_detail']=skills_detail[3]

if len(skills)!=4:

item['skill5']=skills[4]

item['skill5_detail']=skills_detail[4]

else:

item['skill5']='null'

item['skill5_detail']='null'

print(item['hero']+"爬取成功!")

yield item

3.3 pipelines.py

处理爬下来的数据,实现以下功能:

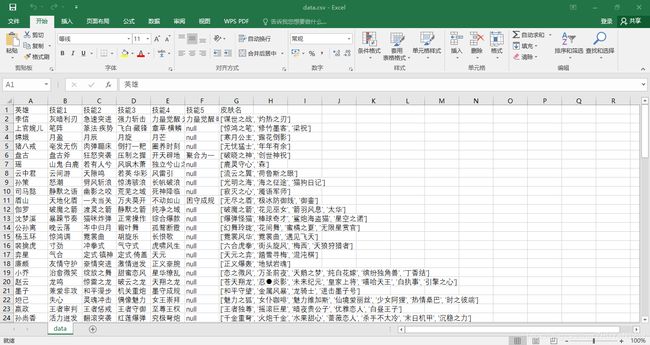

- 将英雄名、技能名、皮肤名存储到csv文件里

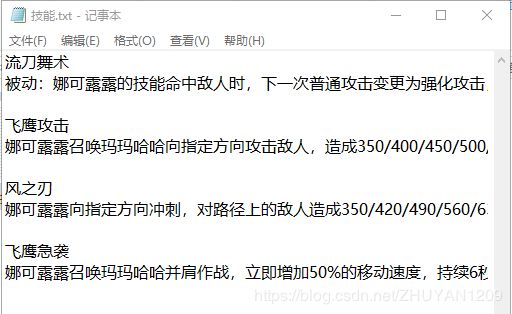

- 将技能详情写入以英雄名命名的txt文件中

- 下载皮肤图片,并重命名为皮肤名

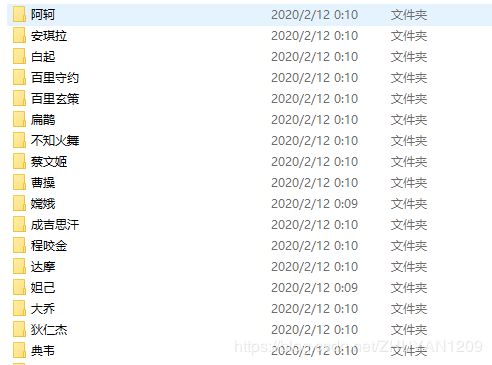

- 每个英雄创建一个文件夹,存储皮肤图片、技能详情txt文本文件

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import os

import csv

import scrapy

from scrapy.pipelines.images import ImagesPipeline

from WZRY.settings import IMAGES_STORE as images_store

class WzryPipeline(object):

def __init__(self):

#csv文件的位置,无需事先创建

store_file=os.path.dirname(__file__)+'/info/data.csv'

print('****************************************************')

#打开(创建)文件

self.file=open(store_file,'w',newline='')

#csv写法

self.writer=csv.writer(self.file,dialect="excel")

#写入第一行

self.writer.writerow(['英雄','技能1','技能2','技能3','技能4','技能5','皮肤名'])

def process_item(self,item,spider):

#判断字段值不为空再写入文件

if item['hero']:

self.writer.writerow([item['hero'],item['skill1'],item['skill2'],item['skill3'],item['skill4'],item['skill5'],item['skins']])

#以英雄名字创建文件夹

isExist=os.path.exists("E:/爬虫/王者荣耀/WZRY/WZRY/info/{}".format(item['hero']))

if not isExist:

os.mkdir("E:/爬虫/王者荣耀/WZRY/WZRY/info/{}".format(item['hero']))

try:

with open('E:/爬虫/王者荣耀/WZRY/WZRY/info/{}/技能.txt'.format(item['hero']),'w',encoding='gbk') as f:

f.write(item['skill1']+'\n'+item['skill1_detail']+'\n\n') #写入技能详情

f.write(item['skill2']+'\n'+item['skill2_detail']+'\n\n')

f.write(item['skill3']+'\n'+item['skill3_detail']+'\n\n')

f.write(item['skill4']+'\n'+item['skill4_detail']+'\n\n')

if item['skill5'] is not'null':

f.write(item['skill5']+'\n'+item['skill5_detail']+'\n\n')

except Exception:

raise

print(item['hero']+"技能存储成功!")

return item

def close_spider(self,spider):

#关闭爬虫时顺便将文件保存退出

self.file.close()

class WzryImgPipeline(ImagesPipeline):

#此方法是在发送下载请求之前调用,其实此方法本身就是去发送下载请求

def get_media_requests(self,item,info):

#调用原父类方法,发送下载请求并返回的结果(request的列表)

request_objs=super().get_media_requests(item,info)

#给每个request对象带上meta属性传入hero、name参数,并返回

for request_obj,num in zip(request_objs,range(0,len(item['skins']))):

request_obj.meta['hero']=item['hero']

request_obj.meta['skin']=item['skins'][num]

return request_objs

#此方法是在图片将要被存储时调用,用来获取这个图片存储的全部路径

def file_path(self,request,response=None,info=None):

#获取request的meta属性的hero作为文件夹名称

hero=request.meta.get('hero')

#获取request的meta属性的skin并拼接作为文件名称

image=request.meta.get('skin')+'.jpg'

#获取IMAGES_STORE图片的默认地址并拼接!!!!不执行那一步

hero_path=os.path.join(images_store,hero)

#判断地址是否存在,不存在则创建

if not os.path.exists(hero_path):

os.makedirs(hero_path)

#拼接文件夹地址与图片名存储的全部路径并返回!!!!原方法

image_path=os.path.join(hero_path,image)

return image_path

3.4 settings.py

设置图片存储位置,管道优先级等。

# -*- coding: utf-8 -*-

# Scrapy settings for WZRY project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'WZRY'

SPIDER_MODULES = ['WZRY.spiders']

NEWSPIDER_MODULE = 'WZRY.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'WZRY (+http://www.yourdomain.com)'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'WZRY.middlewares.WzrySpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'WZRY.middlewares.WzryDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'WZRY.pipelines.WzryPipeline': 300,

'WZRY.pipelines.WzryImgPipeline':200

}

#图片存储路径

IMAGES_STORE='E:\\爬虫\\王者荣耀\\WZRY\\WZRY\\info'

IMAGES_URLS_FILED='image_urls'

#设置图片通道失效时间

IMAGES_EXPIRES=90

#设置允许重定向,否则可能找不到图片

MEDIA_ALLOW_REDIRECTS=True

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'4.结果展示

存储英雄名、技能名、皮肤名的csv文件:

以英雄名命名的文件夹:

打开安琪拉文件夹:

技能.txt

最可爱的娜可露露~嘿~~⭐