TensorFlow (Keras): Step-by-Step Fashion MNIST Clothing and Shoe Image Classification (2), Notes from a Coursera Deep Learning Course

@[TOC](Coursera TensorFlow (Keras): Step-by-Step Fashion MNIST Classification (2), TensorFlow and ML/DL Coursera Course Notes)

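Introduction to the Fashion MNIST Data

Many people are probably tired of the MNIST dataset by now. Fashion MNIST is a more interesting alternative, and it ships with TensorFlow/Keras, so it is very easy to load. The dataset contains grayscale images of clothing and shoes in 10 classes. Each image is 28×28 pixels, the same shape as in MNIST, which makes it easy to work with.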

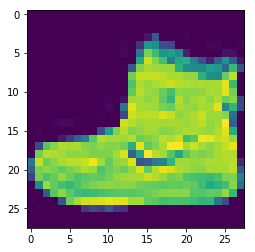

We can display one of the images to take a look; this one is a shoe.
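The labels in Fashion MNIST are integers from 0 to 9. As a small aid for reading the outputs later on, the sketch below maps each integer to the class name listed in the dataset's documentation. `CLASS_NAMES` and `label_name` are names chosen here for illustration; they are not part of Keras.

```python
# Class names for Fashion MNIST labels 0-9, in label order.
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def label_name(label):
    """Return the human-readable class name for an integer label."""
    return CLASS_NAMES[int(label)]


print(label_name(9))  # Ankle boot
```

With this lookup, a label such as `9` can be read as "Ankle boot" instead of a bare number.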

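Building the Model

Following the Coursera tutorial, we jump straight in and write a classification model:

First, download the data

# Note: tensorflow.examples.tutorials is only available in TensorFlow 1.x
from tensorflow.examples.tutorials.mnist import input_data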

data = input_data.read_data_sets('data/fashion', source_url='http://fashion-mnist.s3-website.eu-central-1.amazonaws.com/')

# If the data has already been downloaded, read it directly

data = input_data.read_data_sets('data/fashion')

BATCH_SIZE = 64

# Fetch one batch to check that the data loads correctly

data.train.next_batch(BATCH_SIZE)

Of course, Keras already includes this dataset, so we can simply load it. Now let's write the model:

from tensorflow import keras

fashion_mnist = keras.datasets.fashion_mnist

(X_train, Y_train), (X_test, Y_test) = fashion_mnist.load_data()

# Print the shapes of X and Y

X_train.shape, X_test.shape, Y_train.shape, Y_test.shape

# ((60000, 28, 28), (10000, 28, 28), (60000,), (10000,))

# Simply normalize the data: scale pixel values from [0, 255] to [0, 1]

X_train = X_train / 255.

X_test = X_test / 255.
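To illustrate what the division by `255.` does, here is a minimal sketch on synthetic data. The array `fake_images` is a made-up stand-in for `X_train`, used only so the snippet runs without downloading anything.

```python
import numpy as np

# A stand-in for a batch of images: 2 fake 28x28 images with uint8 pixels.
# (Synthetic data, used here only so the snippet runs without a download.)
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(2, 28, 28), dtype=np.uint8)

# Dividing by the float 255. promotes uint8 to float64 and scales into [0, 1].
scaled = fake_images / 255.

print(fake_images.dtype, scaled.dtype)
print(scaled.min() >= 0.0, scaled.max() <= 1.0)
```

Keeping the inputs in a small, fixed range generally makes gradient-based training better behaved than feeding raw 0 to 255 values.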

import tensorflow as tf

from tensorflow.keras import Sequential

from tensorflow.keras.layers import Dense, Conv2D, MaxPool2D, Flatten

model = Sequential()

model.add(Flatten())

model.add(Dense(128, activation='relu'))

model.add(Dense(10, activation='softmax'))

model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['acc'])

model.fit(X_train, Y_train, epochs=10, batch_size=32, validation_data=(X_test, Y_test))

Train on 60000 samples, validate on 10000 samples

Epoch 1/10

60000/60000 [==============================] - 3s 48us/step - loss: 0.2165 - acc: 0.9197 - val_loss: 0.3426 - val_acc: 0.8861

Epoch 2/10

60000/60000 [==============================] - 3s 49us/step - loss: 0.2097 - acc: 0.9211 - val_loss: 0.3956 - val_acc: 0.8603

Epoch 3/10

60000/60000 [==============================] - 3s 47us/step - loss: 0.2074 - acc: 0.9215 - val_loss: 0.3464 - val_acc: 0.8814
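To make the architecture above more concrete, the following is a rough NumPy sketch of what a Flatten, Dense(128, relu), Dense(10, softmax) stack computes in a forward pass, together with the sparse categorical cross-entropy loss. It is an illustration under stated assumptions, not Keras's actual implementation: the weights are random and untrained, the input batch is synthetic, and the names `forward` and `sparse_categorical_crossentropy` are defined here for the example.

```python
import numpy as np

rng = np.random.default_rng(0)


def forward(x, w1, b1, w2, b2):
    """Flatten -> Dense(128, relu) -> Dense(10, softmax) for a batch x."""
    flat = x.reshape(x.shape[0], -1)                      # (batch, 784)
    hidden = np.maximum(flat @ w1 + b1, 0.0)              # ReLU, (batch, 128)
    logits = hidden @ w2 + b2                             # (batch, 10)
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)           # softmax probabilities


def sparse_categorical_crossentropy(probs, labels):
    """Mean negative log-probability assigned to the true integer labels."""
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.log(picked).mean())


# Random (untrained) weights with the same shapes as the two Dense layers.
w1, b1 = rng.normal(0, 0.05, (784, 128)), np.zeros(128)
w2, b2 = rng.normal(0, 0.05, (128, 10)), np.zeros(10)

x = rng.random((4, 28, 28))     # synthetic batch of 4 "images" in [0, 1)
y = np.array([9, 2, 1, 1])      # integer labels, in the same format as Y_train

probs = forward(x, w1, b1, w2, b2)
n_params = w1.size + b1.size + w2.size + b2.size

print(probs.shape, n_params)
print(sparse_categorical_crossentropy(probs, y))
```

The parameter count works out to 784×128 + 128 + 128×10 + 10 = 101,770. The "sparse" variant of the loss takes integer labels directly, which is why `Y_train` does not need to be one-hot encoded.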

Model Performance

Check the model's predictions:

Predict the first 20 test samples:

preds = model.predict(X_test[:20])

preds.argmax(axis=-1)

Predicted labels:

array([9, 2, 1, 1, 6, 1, 4, 6, 5, 7, 4, 5, 5, 3, 4, 1, 2, 2, 8, 0])

True labels:

Y_test[:20]

array([9, 2, 1, 1, 6, 1, 4, 6, 5, 7, 4, 5, 7, 3, 4, 1, 2, 4, 8, 0])

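Most of the predictions are correct: 18 of the 20 match, and the two misses are at positions 12 (predicted 5, actual 7) and 17 (predicted 2, actual 4).

Evaluate the model on the full test set: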
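The comparison can also be done programmatically rather than by eye. The sketch below copies the two arrays printed above and compares them element-wise; the variable names are chosen here for illustration.

```python
import numpy as np

# Predicted and true labels copied from the outputs above.
predicted = np.array([9, 2, 1, 1, 6, 1, 4, 6, 5, 7, 4, 5, 5, 3, 4, 1, 2, 2, 8, 0])
actual = np.array([9, 2, 1, 1, 6, 1, 4, 6, 5, 7, 4, 5, 7, 3, 4, 1, 2, 4, 8, 0])

correct = predicted == actual          # boolean array, True where they match
accuracy = correct.mean()              # fraction of matching positions
wrong_idx = np.flatnonzero(~correct)   # positions of the mismatches

print(accuracy)            # 0.9
print(wrong_idx.tolist())  # [12, 17]
```

The same pattern, `(preds.argmax(axis=-1) == Y_test).mean()`, gives the accuracy over any number of samples.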

model.evaluate(X_test, Y_test)

The loss and accuracy, respectively, are:

[0.3542726508885622, 0.8834]

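We can also add a Keras callback, which is invoked at the end of every epoch:

class myCallback(tf.keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        # 'acc' matches the metric name passed to model.compile(metrics=['acc'])
        acc = (logs or {}).get('acc')
        if acc is not None and acc > 0.9:
            print("\nReached 90% accuracy so cancelling training!")
            self.model.stop_training = True
        # Alternative: stop on the loss instead
        # if logs.get('loss') < 0.15:
        #     print("\nReached 0.15 loss so cancelling training!")
        #     self.model.stop_training = True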

callbacks = myCallback()

Now when we train again, training stops at the end of the first epoch in which the training accuracy exceeds 90%.

model.fit(X_train, Y_train, epochs=10, batch_size=32, callbacks=[callbacks])

Epoch 1/10

60000/60000 [==============================] - 3s 45us/step - loss: 0.1320 - acc: 0.9498

Reached 90% accuracy so cancelling training!
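In the log above, training stopped after the first epoch because the model had already been trained and its accuracy (0.9498) was above the threshold right away. The control flow can be illustrated without TensorFlow. The following is a simplified, hypothetical simulation, not how Keras is implemented internally: `run_epochs` and the accuracy values passed to it are made up for illustration.

```python
def run_epochs(epoch_accuracies, threshold=0.9):
    """Simulate training that stops once accuracy exceeds `threshold`.

    `epoch_accuracies` holds the (made-up) accuracy reached in each epoch.
    Returns the number of epochs that actually ran.
    """
    epochs_run = 0
    for acc in epoch_accuracies:
        epochs_run += 1
        # Same test as the callback, performed at the end of each epoch.
        if acc > threshold:
            break
    return epochs_run


print(run_epochs([0.82, 0.87, 0.91, 0.93]))  # 3
print(run_epochs([0.95, 0.96]))              # 1
```

Because the check runs only at epoch boundaries, the epoch that crosses the threshold always finishes before training stops.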