Installing and Testing Single-Node Hive on a Mac

Hive documentation: the Hive wiki

References:

http://blog.csdn.net/isoleo/article/details/78401103

https://www.cnblogs.com/kinginme/p/7233315.html

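Prerequisite: single-node Hadoop and MySQL are already installed on this machine.

MySQL installation: see "Installing MySQL on a Mac: fixing garbled Chinese text and JDBC access".

Hadoop installation: see the single-node Hadoop installation guide.

(1) Set environment variables

vim ~/.bash_profile

Set the Hive environment variables: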

# Hive environment

export HIVE_HOME=/Users/hjw/Documents/software/hive

export PATH=$HIVE_HOME/bin:$HIVE_HOME/conf:$PATH
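
After saving the file, run `source ~/.bash_profile` so the current shell picks up the change. The following is a minimal sketch for checking the result. The fallback path is simply the install location used in this post, so adjust it to your own.

```shell
# Fall back to the install path used in this post if HIVE_HOME is not set yet.
export HIVE_HOME="${HIVE_HOME:-/Users/hjw/Documents/software/hive}"

# Prepend $HIVE_HOME/bin only if it is not already on PATH.
case ":$PATH:" in
  *":$HIVE_HOME/bin:"*) ;;
  *) export PATH="$HIVE_HOME/bin:$PATH" ;;
esac

# Report whether the hive launcher can be resolved.
if command -v hive >/dev/null 2>&1; then
  echo "hive found at: $(command -v hive)"
else
  echo "hive not found; check that $HIVE_HOME/bin exists"
fi
```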

(2) Configure Hive

cd $HIVE_HOME/conf

cp hive-default.xml.template hive-site.xml

cp hive-env.sh.template hive-env.sh

hive.metastore.warehouse.dir

This parameter specifies where Hive stores table data. The default is /user/hive/warehouse on HDFS.

hive.exec.scratchdir

This parameter specifies the directory for Hive's temporary files. The default is /tmp/hive on HDFS.

vim hive-env.sh

Edit the following settings:

HADOOP_HOME=/Users/hjw/Documents/software/hadoop/hadoop-2.7.4

export HIVE_CONF_DIR=/Users/hjw/Documents/software/hive/conf

export HIVE_AUX_JARS_PATH=/Users/hjw/Documents/software/hive/lib

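Next, create the two directories on HDFS and open up their permissions (this assumes HDFS is running and HADOOP_HOME is set in your shell):

$HADOOP_HOME/bin/hdfs dfs -mkdir -p /user/hive/warehouse

$HADOOP_HOME/bin/hdfs dfs -mkdir -p /tmp/hive/

hdfs dfs -chmod 777 /user/hive/warehouse

hdfs dfs -chmod 777 /tmp/hive

The hadoop fs form below is equivalent, so only one of the two chmod pairs is needed:

hadoop fs -chmod 777 /user/hive/warehouse

hadoop fs -chmod 777 /tmp/hive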
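
The directory setup above can be wrapped in a small function so it is easy to rerun. This is only a sketch: it assumes HDFS is running and `hdfs` is on PATH, and the function name `prepare_hive_dirs` is made up for illustration. Mode 777 is very permissive, which is acceptable for a single-machine test setup like this one.

```shell
# Create the HDFS directories Hive expects and open up their permissions.
# -mkdir -p does not fail if the directory already exists.
prepare_hive_dirs() {
  for dir in /user/hive/warehouse /tmp/hive; do
    hdfs dfs -mkdir -p "$dir" || return 1
    hdfs dfs -chmod 777 "$dir" || return 1
  done
}

if command -v hdfs >/dev/null 2>&1; then
  # -ls -d lists the directories themselves rather than their contents.
  prepare_hive_dirs && hdfs dfs -ls -d /user/hive/warehouse /tmp/hive
else
  echo "hdfs not found on PATH; skipping"
fi
```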

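Edit hive-site.xml

vim hive-site.xml

Add the following at the very top, inside <configuration> (background: https://stackoverflow.com/questions/27099898/java-net-urisyntaxexception-when-starting-hive):

<property>
  <name>system:java.io.tmpdir</name>
  <value>/tmp/hive/java</value>
</property>
<property>
  <name>system:user.name</name>
  <value>${user.name}</value>
</property>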

Change the value of hive.exec.local.scratchdir from

${system:java.io.tmpdir}/${system:user.name}

to

/Users/hjw/Documents/software/hive/data0/hive/${user.name}

Remember to create this directory first.
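
A small sketch for this step: it creates the local scratch directory and lists any lines of hive-site.xml that still contain a `${system:...}` placeholder, since the template uses that placeholder in several properties. The helper name `list_system_placeholders` is hypothetical, the paths are the ones from this post, and the sketch assumes `${user.name}` resolves to the current OS user.

```shell
# Print every line of a config file that still contains a ${system:...} placeholder.
list_system_placeholders() {
  grep -nE '\$\{system:[^}]+\}' "$1"
}

# Paths assumed from this post; adjust to your own install location.
HIVE_HOME="${HIVE_HOME:-/Users/hjw/Documents/software/hive}"
SCRATCH_DIR="$HIVE_HOME/data0/hive/$(id -un)"

if [ -f "$HIVE_HOME/conf/hive-site.xml" ]; then
  # The scratch directory must exist before Hive starts.
  mkdir -p "$SCRATCH_DIR"
  list_system_placeholders "$HIVE_HOME/conf/hive-site.xml" \
    || echo "no \${system:...} placeholders left"
else
  echo "hive-site.xml not found under $HIVE_HOME/conf; skipping"
fi
```

Remaining placeholders are not necessarily a problem once the two system: properties are defined at the top of the file; the listing just shows where they are used.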

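Change where the metadata is stored (MySQL instead of the default embedded database):

<property>
  <name>javax.jdo.option.ConnectionURL</name>
  <value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionDriverName</name>
  <value>com.mysql.jdbc.Driver</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionUserName</name>
  <value>root</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>root</value>
</property>
<property>
  <name>hive.metastore.schema.verification</name>
  <value>false</value>
  <description>
    Enforce metastore schema version consistency.
    True: Verify that version information stored in metastore matches with one from Hive jars. Also disable automatic
    schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures
    proper metastore schema migration. (Default)
    False: Warn if the version information stored in metastore doesn't match with one from Hive jars.
  </description>
</property>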
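
hive-site.xml is a large file, so it can be worth reading the metastore settings back after editing. The sketch below uses a hypothetical helper, `get_hive_property`, built on awk. It is a simplification: it assumes each value sits on a single line and it prints the first matching property only.

```shell
# Print the <value> of a named property from a Hadoop-style XML config file.
# Usage: get_hive_property <file> <property-name>
get_hive_property() {
  awk -v name="$2" '
    index($0, "<name>" name "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      # Strip the 7-character opening tag and the 8-character closing tag.
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
    # Stop at the end of the property if it had no single-line value.
    found && /<\/property>/ { exit }
  ' "$1"
}

SITE_XML="${HIVE_HOME:-/Users/hjw/Documents/software/hive}/conf/hive-site.xml"
if [ -f "$SITE_XML" ]; then
  for key in javax.jdo.option.ConnectionURL \
             javax.jdo.option.ConnectionDriverName \
             javax.jdo.option.ConnectionUserName \
             hive.metastore.schema.verification; do
    echo "$key = $(get_hive_property "$SITE_XML" "$key")"
  done
else
  echo "$SITE_XML not found; skipping"
fi
```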

Download the MySQL driver

https://dev.mysql.com/get/Downloads/Connector-J/mysql-connector-java-5.1.44.tar.gz

After extracting the archive, copy the jar inside it into the /xxx/hive/lib directory.
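
The extract-and-copy step might look like the sketch below. The function name `install_connector` is hypothetical, and the script assumes the tarball has already been downloaded into the current directory. It searches for the jar instead of hard-coding its name inside the archive.

```shell
# Extract a Connector/J tarball and copy the driver jar(s) into a Hive lib directory.
# Usage: install_connector <tarball> <hive-lib-dir>
install_connector() {
  tarball="$1"
  libdir="$2"
  workdir="$(mktemp -d)" || return 1
  tar -xzf "$tarball" -C "$workdir" || return 1
  # The jar sits in a versioned subdirectory, so search for it by pattern.
  find "$workdir" -name 'mysql-connector-java-*.jar' -exec cp {} "$libdir"/ \;
  rm -rf "$workdir"
  # Fails with a non-zero status if no jar ended up in the lib directory.
  ls "$libdir"/mysql-connector-java-*.jar
}

TARBALL="mysql-connector-java-5.1.44.tar.gz"
if [ -f "$TARBALL" ]; then
  install_connector "$TARBALL" "${HIVE_HOME:-/Users/hjw/Documents/software/hive}/lib"
else
  echo "$TARBALL not found in the current directory; download it first"
fi
```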

(3) Initialize the database (run from the Hive installation directory)

bin/schematool -initSchema -dbType mysql
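
Running -initSchema against a database that already holds the metastore tables typically fails, so a guarded version can be handy when repeating the setup. This is a sketch under one stated assumption: that `schematool -info` exits with a non-zero status when no schema is present. The function name `init_metastore_schema` is made up for illustration.

```shell
# Initialise the metastore schema only if schematool cannot already read one.
# Assumption: `schematool -info` exits non-zero when no schema is present.
init_metastore_schema() {
  if schematool -dbType mysql -info >/dev/null 2>&1; then
    echo "metastore schema already present"
  else
    schematool -dbType mysql -initSchema
  fi
}

if command -v schematool >/dev/null 2>&1; then
  init_metastore_schema
else
  echo "schematool not found; add \$HIVE_HOME/bin to PATH first"
fi
```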

Then start the Hive CLI with bin/hive:

hive> show databases;

OK

default

Time taken: 1.037 seconds, Fetched: 1 row(s)

(4) Test that Hive works correctly

hive> create database hive_test;

OK

Time taken: 0.041 seconds

hive> show databases;

OK

default

hive_test

Time taken: 0.029 seconds, Fetched: 2 row(s)

hive> use hive_test

> ;

OK

Time taken: 0.013 seconds

hive> create table student(id int, name string) row format delimited fields terminated by '\t';

OK

Time taken: 0.273 seconds

hive> show create table student;

OK

CREATE TABLE `student`(

`id` int,

`name` string)

ROW FORMAT SERDE

'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

WITH SERDEPROPERTIES (

'field.delim'='\t',

'serialization.format'='\t')

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

'hdfs://localhost:9000/user/hive/warehouse/hive_test.db/student'

TBLPROPERTIES (

'COLUMN_STATS_ACCURATE'='{\"BASIC_STATS\":\"true\"}',

'numFiles'='0',

'numRows'='0',

'rawDataSize'='0',

'totalSize'='0',

'transient_lastDdlTime'='1513697309')

Time taken: 0.06 seconds, Fetched: 21 row(s)

hive> show tables;

OK

student

Time taken: 0.038 seconds, Fetched: 1 row(s)

Create a student.txt file in the Hive directory, with the two fields on each line separated by a tab:

1001 zhangsan

1002 lisi
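
Because the table was declared with fields terminated by '\t', the separator in student.txt has to be a real tab, which is easy to lose when copying from a web page. A minimal sketch that writes the file with printf; the helper name `write_student_file` is hypothetical, and the file goes to the current directory if HIVE_HOME is not set.

```shell
# Write the two sample rows with a literal tab between id and name,
# matching the table's "fields terminated by '\t'" definition.
write_student_file() {
  printf '1001\tzhangsan\n1002\tlisi\n' > "$1"
}

STUDENT_FILE="${HIVE_HOME:-.}/student.txt"
write_student_file "$STUDENT_FILE"

# Show the file with tabs made visible as ^I.
cat -t "$STUDENT_FILE"
```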

Load the data into the student table:

hive> load data local inpath '/Users/hjw/Documents/software/hive/student.txt' into table hive_test.student;

Loading data to table hive_test.student

OK

Time taken: 0.386 seconds

Query the data:

hive> select * from hive_test.student;

OK

1001 zhangsan

1002 lisi

Time taken: 1.068 seconds, Fetched: 2 row(s)

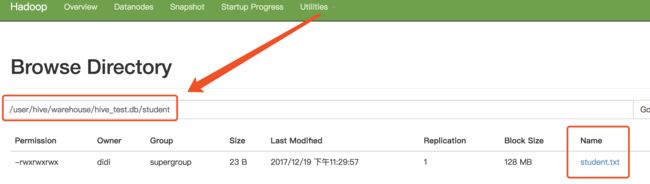

(5) View the data Hive created in HDFS

http://localhost:50070/explorer.html#/user/hive/warehouse/hive_test.db/student

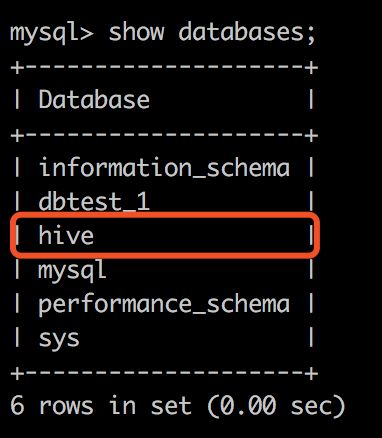
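
The same check can be done from the command line instead of the web UI. A sketch, assuming `hdfs` is on PATH and the warehouse lives at the default /user/hive/warehouse; the function name `show_table_files` is hypothetical, and the `.db` path layout applies to tables outside the default database.

```shell
# List and print the files Hive wrote for a table under the warehouse directory.
# Usage: show_table_files <database> <table>
show_table_files() {
  table_dir="/user/hive/warehouse/$1.db/$2"
  # The glob is quoted so that HDFS, not the local shell, expands it.
  hdfs dfs -ls "$table_dir" && hdfs dfs -cat "$table_dir/*"
}

if command -v hdfs >/dev/null 2>&1; then
  show_table_files hive_test student
else
  echo "hdfs not found on PATH; skipping"
fi
```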

(6) View the metadata in MySQL

mysql> use hive

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+---------------------------+
| Tables_in_hive            |
+---------------------------+
| AUX_TABLE                 |
| BUCKETING_COLS            |
| CDS                       |
| COLUMNS_V2                |
| COMPACTION_QUEUE          |
| COMPLETED_COMPACTIONS     |
| COMPLETED_TXN_COMPONENTS  |
| DATABASE_PARAMS           |
(output truncated)

mysql> select * from TBLS where TBL_NAME ='student';

+--------+-------------+-------+------------------+-------+-----------+-------+----------+---------------+--------------------+--------------------+
| TBL_ID | CREATE_TIME | DB_ID | LAST_ACCESS_TIME | OWNER | RETENTION | SD_ID | TBL_NAME | TBL_TYPE      | VIEW_EXPANDED_TEXT | VIEW_ORIGINAL_TEXT |
+--------+-------------+-------+------------------+-------+-----------+-------+----------+---------------+--------------------+--------------------+
|      2 |  1513697309 |     6 |                0 | hjw   |         0 |     2 | student  | MANAGED_TABLE | NULL               | NULL               |
+--------+-------------+-------+------------------+-------+-----------+-------+----------+---------------+--------------------+--------------------+
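
TBLS only holds numeric DB_ID and SD_ID references. To see the database name and the HDFS location next to each table, TBLS can be joined with the DBS and SDS metastore tables. The sketch below only defines the query and a hypothetical wrapper, `query_metastore`; the user root and the database hive come from the JDBC settings in this post.

```shell
# Join the metastore tables to show each Hive table with its database and storage location.
METASTORE_QUERY='SELECT d.NAME AS db_name, t.TBL_NAME, t.TBL_TYPE, s.LOCATION
FROM TBLS t
JOIN DBS d ON t.DB_ID = d.DB_ID
JOIN SDS s ON t.SD_ID = s.SD_ID
ORDER BY d.NAME, t.TBL_NAME;'

# Run the query against the metastore database; -p makes the client prompt for the password.
query_metastore() {
  mysql -u root -p hive -e "$METASTORE_QUERY"
}

# Print the query so it can also be pasted into an open mysql session.
echo "$METASTORE_QUERY"
```

Call `query_metastore` once the MySQL client is available on PATH.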

(7) When starting Hive again, start the Metastore Server process first

Otherwise hive fails on startup like this:

/software/hive$ hive
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/Users/xxx/Documents/software/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/Users/xxx/Documents/software/hadoop/hadoop-2.7.4/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Logging initialized using configuration in jar:file:/Users/xxx/Documents/software/hive/lib/hive-common-2.1.1.jar!/hive-log4j2.properties Async: true
Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:591)
at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:531)
at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

Cause: the Hive Metastore Server process was not started properly.

Fix: start the Hive Metastore Server process with the following command:

hive --service metastore &

If the error persists, also confirm that MySQL is running, since the metastore depends on it.

Source: "Hive FAQ roundup"; thanks to its author for sharing.

(8) Load a local file into a Hive table

8-1 First, store the data in the local folder /Users/xxx/Documents/workfile/hive, for example as hive_testfile.txt.

Content format (example):

[INFO] XXXXXX

[INFO] XXXXXX

...

8-2 Create the table and load the local data into it

hive> CREATE EXTERNAL TABLE system_log (`log_info` string COMMENT 'log message')

> COMMENT 'XX execution log'

> PARTITIONED BY (`dt` string COMMENT 'day');

OK

Time taken: 1.444 seconds

hive> show tables;

OK

system_log

student

Time taken: 0.148 seconds, Fetched: 2 row(s)

@localhost:~/Documents/workfile/hive$ ls

hive_testfile.txt

@localhost:~/Documents/workfile/hive$ pwd

/Users/xxx/Documents/workfile/hive

hive> use hive_test;

OK

Time taken: 0.922 seconds

hive> show tables;

OK

system_log

student

Time taken: 0.135 seconds, Fetched: 2 row(s)

hive>

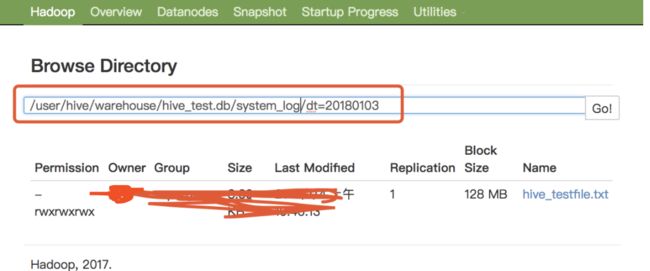

> LOAD DATA LOCAL INPATH '/Users/xxx/Documents/workfile/hive'

> OVERWRITE INTO TABLE system_log

> PARTITION(dt = '20180103');

Loading data to table hive_test.system_log partition (dt=20180103)

OK

Time taken: 2.097 seconds

hive> select * from hive_test.system_log limit 2;

OK

[INFO]xxxxxx 20180103

[INFO]xxxxxx 20180103

Time taken: 0.884 seconds, Fetched: 2 row(s)
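
For daily logs the same LOAD DATA statement is repeated with a different dt each time, so it can be generated from a script. A sketch, with a hypothetical helper `build_load_stmt`; the path and table name are the ones used above. It relies on `hive -e`, which runs a quoted HiveQL string without opening the interactive shell.

```shell
# Build the LOAD DATA statement for one daily partition.
# Usage: build_load_stmt <local-path> <table> <yyyymmdd>
build_load_stmt() {
  printf "LOAD DATA LOCAL INPATH '%s' OVERWRITE INTO TABLE %s PARTITION (dt = '%s');\n" "$1" "$2" "$3"
}

STMT="$(build_load_stmt /Users/xxx/Documents/workfile/hive hive_test.system_log 20180103)"
echo "$STMT"

# Run it non-interactively and list the resulting partitions, if hive is available.
if command -v hive >/dev/null 2>&1; then
  hive -e "$STMT SHOW PARTITIONS hive_test.system_log;"
fi
```

Note that INPATH points at a directory here, so every file in that directory is loaded into the partition, and OVERWRITE replaces whatever the partition held before.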

View the files in HDFS

A further example: create a partitioned external table and insert one row into it.

CREATE EXTERNAL TABLE `hive_test.change_type_test_table`

(`code` string COMMENT 'test code')

COMMENT 'example table for changing a column type'

PARTITIONED BY (

`dt` string COMMENT 'day'

)

row format delimited fields terminated by '\t'

STORED AS textfile;

insert overwrite table hive_test.change_type_test_table partition (dt='20180222') values('1');