Residual Networks (ResNet)

Residual networks

Paper: https://arxiv.org/pdf/1512.03385.pdf

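Motivation: in plain (non-residual) networks, adding layers beyond a certain depth makes the training error go up. Because it is the training error that rises, this is not overfitting. The deeper network is simply not optimized well.

Why residual networks can be made very deep: the shortcut connections give the gradient a direct path to earlier layers. This alleviates the vanishing-gradient problem to some extent.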
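To see why the shortcut helps the gradient, take a unit that adds its input x to a learned residual, y = F(x) + x, and a loss ℒ. The loss symbol and the unit indices l and L are notation introduced here, not taken from the paper. For the stacked case, a short derivation under one simplifying assumption: the ReLU that follows each addition is ignored, so that x_{i+1} = x_i + F(x_i).

$$
\begin{aligned}
\frac{\partial \mathcal{L}}{\partial x} &= \frac{\partial \mathcal{L}}{\partial y}\left(I + \frac{\partial F}{\partial x}\right) \\
x_L = x_l + \sum_{i=l}^{L-1} F(x_i) \quad&\Rightarrow\quad
\frac{\partial \mathcal{L}}{\partial x_l} = \frac{\partial \mathcal{L}}{\partial x_L}\left(I + \frac{\partial}{\partial x_l}\sum_{i=l}^{L-1} F(x_i)\right)
\end{aligned}
$$

The identity term passes ∂ℒ/∂x_L back to any earlier unit l unchanged. The gradient is therefore not only a product of many per-layer Jacobians, which is what makes it vanish in a plain network.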

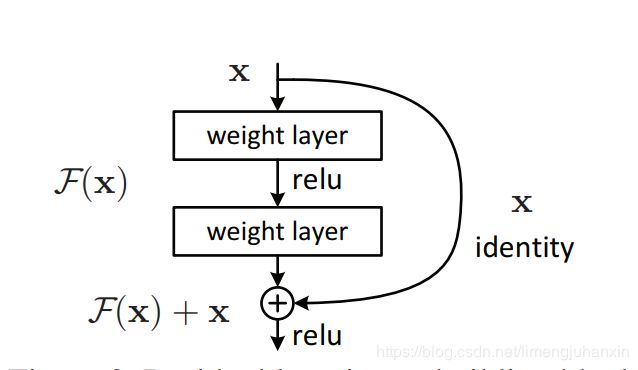

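The residual unit is shown in the figure below. When x and F have the same dimensions, the shortcut is the identity:

$$
\begin{aligned}
y &= F(x,\{W_i\}) + x \\
F &= W_2\,\sigma(W_1 x)
\end{aligned}
$$

When the dimensions of x and F differ, the shortcut applies a linear projection $W_s$ to match them:

$$
\begin{aligned}
y &= F(x,\{W_i\}) + W_s x \\
F &= W_2\,\sigma(W_1 x)
\end{aligned}
$$

Here σ is ReLU, and biases are omitted for simplicity. In the Caffe models, $W_s$ is a 1x1 convolution, and a second ReLU is applied after the addition.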
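The two equations can be checked with a few lines of plain Python. What follows is a minimal sketch, assuming fully connected weights given as small lists of rows in place of convolutions. The function names are hypothetical. Like the equations, it omits the ReLU after the addition.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, a) for a in v]


def residual_unit(x, W1, W2, Ws=None):
    """y = F(x, {W_i}) + x, or F(x, {W_i}) + Ws x when a projection is given.

    F(x) = W2 * relu(W1 * x); no ReLU is applied after the addition here.
    """
    f = matvec(W2, relu(matvec(W1, x)))
    shortcut = x if Ws is None else matvec(Ws, x)
    if len(f) != len(shortcut):
        raise ValueError("F(x) and the shortcut differ in size; pass a projection Ws")
    return [a + b for a, b in zip(f, shortcut)]


# Same dimensions: identity shortcut
print(residual_unit([1.0, -2.0], W1=[[1, 0], [0, 1]], W2=[[2, 0], [0, 3]]))  # [3.0, -2.0]
# 2-d input, 3-d residual: a 3x2 projection Ws is required
print(residual_unit([1.0, 2.0], W1=[[1, 1]], W2=[[1], [0], [-1]],
                    Ws=[[1, 0], [0, 1], [1, 1]]))                          # [4.0, 2.0, 0.0]
```

Calling the second example without `Ws` raises `ValueError`. This is exactly the situation in which the projection form of the equation is needed.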

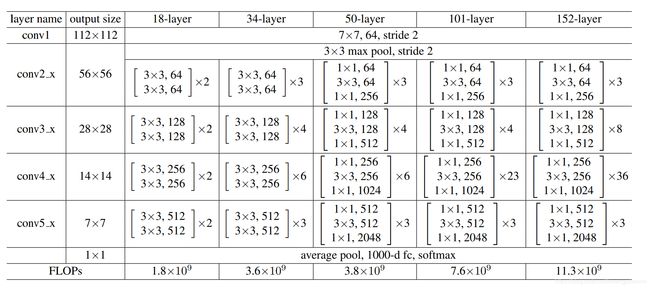

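Main network architecture

ResNet design principles:

1. Mostly 3x3 convolutions.

2. Downsampling is done by convolutions with stride=2. Each time the feature map size is halved, the number of channels is doubled, so the time complexity per layer stays the same.

3. Almost no pooling: a single max pooling after conv1, and global average pooling at the end.

4. No hidden fully connected layers. The only fully connected layer is the final 1000-way classifier.

5. No dropout.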
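Principle 2 can be verified with simple arithmetic. Halving the feature map divides the spatial area by 4, and doubling the channels multiplies a convolution's in × out channel product by 4. The sketch below counts multiply-accumulates for one 3x3 convolution per stage, using the stage sizes and 3x3 widths of ResNet-50. The helper name is hypothetical.

```python
def conv3x3_macs(size, channels):
    """Multiply-accumulates of a bias-free 3x3 conv (stride 1, pad 1) that maps a
    size x size x channels feature map to one of the same shape."""
    return size * size * channels * channels * 3 * 3


# The feature map halves and the channels double from conv2_x to conv5_x
stages = {"conv2_x": (56, 64), "conv3_x": (28, 128), "conv4_x": (14, 256), "conv5_x": (7, 512)}
for name, (size, channels) in stages.items():
    print(name, conv3x3_macs(size, channels))  # 115605504 for every stage
```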

ResNet in Caffe

Model definitions (prototxt): https://github.com/KaimingHe/deep-residual-networks

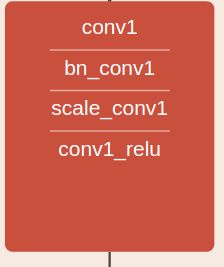

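Every convolution is used in the same pattern: conv -> BatchNorm -> Scale -> ReLU.

- Caffe's BatchNorm layer only normalizes. With `use_global_stats: true`, it uses the stored mean and variance instead of batch statistics, i.e. inference mode.
- The learnable scale γ and shift β come from the following Scale layer with `bias_term: true`.
- The convolution therefore has `bias_term: false`, since any bias would be cancelled by the normalization.

For conv1: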

```protobuf
layer {
  bottom: "data"
  top: "conv1"
  name: "conv1"
  type: "Convolution"
  convolution_param {
    num_output: 64
    kernel_size: 7
    pad: 3
    stride: 2
    bias_term: false
  }
}
layer {
  bottom: "conv1"
  top: "conv1"
  name: "bn_conv1"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "conv1"
  top: "conv1"
  name: "scale_conv1"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
layer {
  top: "conv1"
  bottom: "conv1"
  name: "conv1_relu"
  type: "ReLU"
}
```
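The Scale layer's β is what lets the convolution drop its bias. At inference, conv -> BatchNorm (global stats) -> Scale is an affine function of the conv output, so it can be folded into a single convolution with a bias.

Below is a minimal single-channel sketch. It uses a 1x1 convolution with one input and one output channel, so the convolution is just a multiplication. The numbers are made up, and eps = 1e-5 is assumed to be Caffe's default `batch_norm_param.eps`, since the prototxt above does not set it. `fold_into_conv` is a hypothetical helper, not part of Caffe.

```python
import math

EPS = 1e-5  # assumed Caffe BatchNorm default eps; not set in the prototxt above


def bn_scale(z, mean, var, gamma, beta, eps=EPS):
    """BatchNorm with use_global_stats: true, followed by Scale with bias_term: true."""
    return gamma * (z - mean) / math.sqrt(var + eps) + beta


def fold_into_conv(w, mean, var, gamma, beta, eps=EPS):
    """Fold BN + Scale into the weight of a bias-free 1x1 conv, returning (weight, bias)."""
    k = gamma / math.sqrt(var + eps)
    return w * k, beta - k * mean


w, mean, var, gamma, beta = 0.8, 0.3, 2.0, 1.5, -0.2  # made-up values
x = 1.7
reference = bn_scale(w * x, mean, var, gamma, beta)  # conv -> bn -> scale
w_f, b_f = fold_into_conv(w, mean, var, gamma, beta)
folded = w_f * x + b_f                               # one conv with a bias
print(math.isclose(reference, folded))               # True
```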

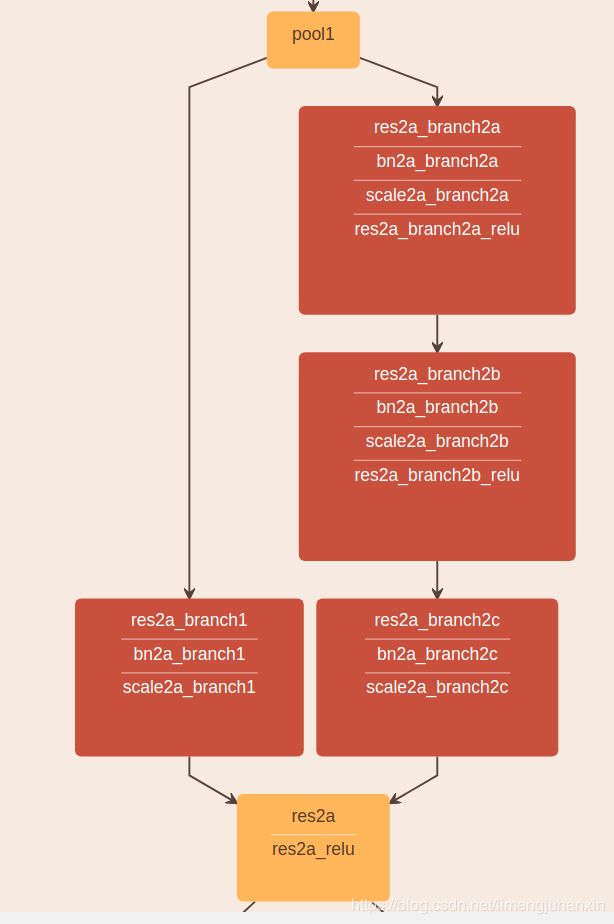

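To reduce computation, the deeper models (ResNet-50/101/152) use a three-layer bottleneck unit instead of two 3x3 convolutions, in every stage from conv2_x to conv5_x:

1. A 1x1 convolution brings the channels to the bottleneck width (64 in conv2_x).
2. A 3x3 convolution operates at that width.
3. A second 1x1 convolution expands the channels again (to 256 in conv2_x).

The residual branch of the first unit, res2a, is: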

```protobuf
# 1x1 conv: bottleneck width 64
layer {
  bottom: "pool1"
  top: "res2a_branch2a"
  name: "res2a_branch2a"
  type: "Convolution"
  convolution_param {
    num_output: 64
    kernel_size: 1
    pad: 0
    stride: 1
    bias_term: false
  }
}
layer {
  bottom: "res2a_branch2a"
  top: "res2a_branch2a"
  name: "bn2a_branch2a"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "res2a_branch2a"
  top: "res2a_branch2a"
  name: "scale2a_branch2a"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
layer {
  top: "res2a_branch2a"
  bottom: "res2a_branch2a"
  name: "res2a_branch2a_relu"
  type: "ReLU"
}
# 3x3 conv at the bottleneck width
layer {
  bottom: "res2a_branch2a"
  top: "res2a_branch2b"
  name: "res2a_branch2b"
  type: "Convolution"
  convolution_param {
    num_output: 64
    kernel_size: 3
    pad: 1
    stride: 1
    bias_term: false
  }
}
layer {
  bottom: "res2a_branch2b"
  top: "res2a_branch2b"
  name: "bn2a_branch2b"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "res2a_branch2b"
  top: "res2a_branch2b"
  name: "scale2a_branch2b"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
layer {
  top: "res2a_branch2b"
  bottom: "res2a_branch2b"
  name: "res2a_branch2b_relu"
  type: "ReLU"
}
# 1x1 conv: expand to 256 channels; no ReLU here, it comes after the addition
layer {
  bottom: "res2a_branch2b"
  top: "res2a_branch2c"
  name: "res2a_branch2c"
  type: "Convolution"
  convolution_param {
    num_output: 256
    kernel_size: 1
    pad: 0
    stride: 1
    bias_term: false
  }
}
layer {
  bottom: "res2a_branch2c"
  top: "res2a_branch2c"
  name: "bn2a_branch2c"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "res2a_branch2c"
  top: "res2a_branch2c"
  name: "scale2a_branch2c"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
```
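The saving from the bottleneck can be seen by counting convolution weights. At a fixed feature-map size, the weight count is proportional to the multiply-accumulates. The sketch assumes a 256-channel input, as in res2b onward (res2a itself receives 64 channels from pool1), and ignores the BatchNorm/Scale parameters. The helper name is hypothetical.

```python
def conv_weights(c_in, c_out, kernel):
    """Weight count of a bias-free convolution (bias_term: false)."""
    return c_in * c_out * kernel * kernel


# Bottleneck on a 256-channel input: 1x1 (256->64), 3x3 (64->64), 1x1 (64->256)
bottleneck = conv_weights(256, 64, 1) + conv_weights(64, 64, 3) + conv_weights(64, 256, 1)
# Two stacked 3x3 convs, at 64 channels and at 256 channels
basic_64 = 2 * conv_weights(64, 64, 3)
basic_256 = 2 * conv_weights(256, 256, 3)
print(bottleneck, basic_64, basic_256)  # 69632 73728 1179648
```

The bottleneck works on 256-channel features at roughly the cost of a two-3x3 unit on 64 channels. Two 3x3 convolutions at 256 channels would cost about 17 times as much.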

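At the transition from conv1 to conv2_x, the channel counts differ: 64 channels after pool1, 256 at the end of the residual branch. The shortcut x therefore has to be projected with a 1x1 convolution (res2a_branch1, the $W_s x$ term) before the Eltwise layer adds the two branches: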

```protobuf
# Shortcut branch: 1x1 projection W_s, 64 -> 256 channels (no ReLU)
layer {
  bottom: "pool1"
  top: "res2a_branch1"
  name: "res2a_branch1"
  type: "Convolution"
  convolution_param {
    num_output: 256
    kernel_size: 1
    pad: 0
    stride: 1
    bias_term: false
  }
}
layer {
  bottom: "res2a_branch1"
  top: "res2a_branch1"
  name: "bn2a_branch1"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "res2a_branch1"
  top: "res2a_branch1"
  name: "scale2a_branch1"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
# Residual branch F: 1x1 (64) -> 3x3 (64) -> 1x1 (256)
layer {
  bottom: "pool1"
  top: "res2a_branch2a"
  name: "res2a_branch2a"
  type: "Convolution"
  convolution_param {
    num_output: 64
    kernel_size: 1
    pad: 0
    stride: 1
    bias_term: false
  }
}
layer {
  bottom: "res2a_branch2a"
  top: "res2a_branch2a"
  name: "bn2a_branch2a"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "res2a_branch2a"
  top: "res2a_branch2a"
  name: "scale2a_branch2a"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
layer {
  top: "res2a_branch2a"
  bottom: "res2a_branch2a"
  name: "res2a_branch2a_relu"
  type: "ReLU"
}
layer {
  bottom: "res2a_branch2a"
  top: "res2a_branch2b"
  name: "res2a_branch2b"
  type: "Convolution"
  convolution_param {
    num_output: 64
    kernel_size: 3
    pad: 1
    stride: 1
    bias_term: false
  }
}
layer {
  bottom: "res2a_branch2b"
  top: "res2a_branch2b"
  name: "bn2a_branch2b"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "res2a_branch2b"
  top: "res2a_branch2b"
  name: "scale2a_branch2b"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
layer {
  top: "res2a_branch2b"
  bottom: "res2a_branch2b"
  name: "res2a_branch2b_relu"
  type: "ReLU"
}
layer {
  bottom: "res2a_branch2b"
  top: "res2a_branch2c"
  name: "res2a_branch2c"
  type: "Convolution"
  convolution_param {
    num_output: 256
    kernel_size: 1
    pad: 0
    stride: 1
    bias_term: false
  }
}
layer {
  bottom: "res2a_branch2c"
  top: "res2a_branch2c"
  name: "bn2a_branch2c"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "res2a_branch2c"
  top: "res2a_branch2c"
  name: "scale2a_branch2c"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
# y = F(x) + W_s x (Eltwise defaults to SUM)
layer {
  bottom: "res2a_branch1"
  bottom: "res2a_branch2c"
  top: "res2a"
  name: "res2a"
  type: "Eltwise"
}
# ReLU after the addition
layer {
  bottom: "res2a"
  top: "res2a"
  name: "res2a_relu"
  type: "ReLU"
}
```
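Eltwise can only add blobs of identical shape, so it helps to trace shapes through the network up to res2a. Below is a minimal sketch that applies Caffe's output-size rules: floor rounding for Convolution and ceil rounding for Pooling.

It assumes a 224x224 RGB input and a pool1 layer with a 3x3 kernel, stride 2 and no padding; pool1 is not listed in this article. BatchNorm, Scale and ReLU keep the shape, so only the convolutions are traced. The helper names are hypothetical.

```python
import math


def conv_shape(shape, num_output, kernel, stride, pad):
    """(C, H, W) after a Caffe Convolution layer (floor rounding)."""
    _, h, w = shape

    def out(size):
        return (size + 2 * pad - kernel) // stride + 1

    return (num_output, out(h), out(w))


def pool_shape(shape, kernel, stride):
    """(C, H, W) after a Caffe Pooling layer without padding (ceil rounding)."""
    c, h, w = shape

    def out(size):
        return math.ceil((size - kernel) / stride) + 1

    return (c, out(h), out(w))


def branch_shape(shape, convs):
    """Apply (num_output, kernel, stride, pad) convolutions in order."""
    for conv in convs:
        shape = conv_shape(shape, *conv)
    return shape


data = (3, 224, 224)                              # assumed input size
conv1 = conv_shape(data, 64, 7, 2, 3)             # conv1 as in the prototxt above
pool1 = pool_shape(conv1, kernel=3, stride=2)     # assumed pool1 parameters
branch1 = branch_shape(pool1, [(256, 1, 1, 0)])   # res2a_branch1 (projection)
branch2 = branch_shape(pool1, [(64, 1, 1, 0), (64, 3, 1, 1), (256, 1, 1, 0)])  # 2a, 2b, 2c
print(conv1, pool1, branch1, branch2)
print("identity shortcut would match:", pool1 == branch2)
```

Both branches end at (256, 56, 56), while pool1 is (64, 56, 56). An identity shortcut therefore could not be summed with branch2c, which is why res2a needs the projection.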

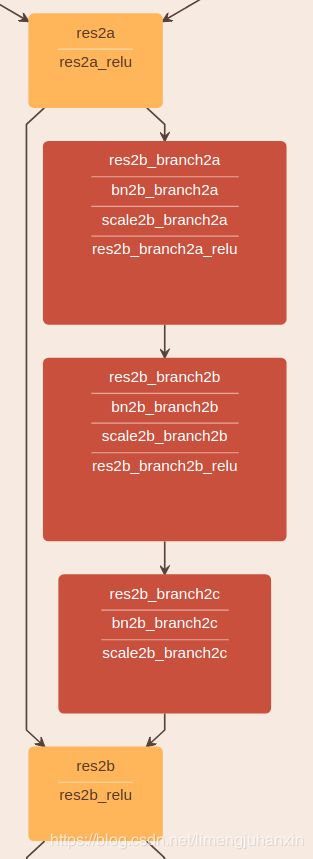

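When x and F have the same dimensions, no projection is needed: the shortcut is the identity, and the unit's input goes straight into the Eltwise layer. In res2b, the output of res2a (256 channels) is added to res2b_branch2c: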


```protobuf
layer {
  bottom: "res2a"
  top: "res2b_branch2a"
  name: "res2b_branch2a"
  type: "Convolution"
  convolution_param {
    num_output: 64
    kernel_size: 1
    pad: 0
    stride: 1
    bias_term: false
  }
}
layer {
  bottom: "res2b_branch2a"
  top: "res2b_branch2a"
  name: "bn2b_branch2a"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "res2b_branch2a"
  top: "res2b_branch2a"
  name: "scale2b_branch2a"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
layer {
  top: "res2b_branch2a"
  bottom: "res2b_branch2a"
  name: "res2b_branch2a_relu"
  type: "ReLU"
}
layer {
  bottom: "res2b_branch2a"
  top: "res2b_branch2b"
  name: "res2b_branch2b"
  type: "Convolution"
  convolution_param {
    num_output: 64
    kernel_size: 3
    pad: 1
    stride: 1
    bias_term: false
  }
}
layer {
  bottom: "res2b_branch2b"
  top: "res2b_branch2b"
  name: "bn2b_branch2b"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "res2b_branch2b"
  top: "res2b_branch2b"
  name: "scale2b_branch2b"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
layer {
  top: "res2b_branch2b"
  bottom: "res2b_branch2b"
  name: "res2b_branch2b_relu"
  type: "ReLU"
}
layer {
  bottom: "res2b_branch2b"
  top: "res2b_branch2c"
  name: "res2b_branch2c"
  type: "Convolution"
  convolution_param {
    num_output: 256
    kernel_size: 1
    pad: 0
    stride: 1
    bias_term: false
  }
}
layer {
  bottom: "res2b_branch2c"
  top: "res2b_branch2c"
  name: "bn2b_branch2c"
  type: "BatchNorm"
  batch_norm_param {
    use_global_stats: true
  }
}
layer {
  bottom: "res2b_branch2c"
  top: "res2b_branch2c"
  name: "scale2b_branch2c"
  type: "Scale"
  scale_param {
    bias_term: true
  }
}
layer {
  bottom: "res2a"
  bottom: "res2b_branch2c"
  top: "res2b"
  name: "res2b"
  type: "Eltwise"
}
layer {
  bottom: "res2b"
  top: "res2b"
  name: "res2b_relu"
  type: "ReLU"
}
```
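Every identity unit in a stage repeats the res2b pattern, with only the names changing. As a result, these prototxt files are mostly boilerplate.

Below is a minimal sketch of generating one such unit as text. The helpers `layer`, `conv_bn_scale` and `identity_bottleneck` are hypothetical and follow the naming scheme of the listings above. Stride is fixed at 1 because an identity unit cannot downsample. For building prototxt programmatically, pycaffe also provides `caffe.NetSpec`.

```python
def layer(name, layer_type, bottoms, top, params=""):
    """Render one Caffe layer in the compact style used above."""
    lines = ["layer {"]
    lines += [f'  bottom: "{b}"' for b in bottoms]
    lines += [f'  top: "{top}"', f'  name: "{name}"', f'  type: "{layer_type}"']
    if params:
        lines.append(params)
    lines.append("}")
    return "\n".join(lines)


def conv_bn_scale(block, branch, bottom, num_output, kernel, pad, relu=True):
    """conv -> BatchNorm -> Scale [-> ReLU], named like the Caffe ResNet files."""
    top = f"res{block}_branch{branch}"
    conv_param = (
        "  convolution_param {\n"
        f"    num_output: {num_output}\n"
        f"    kernel_size: {kernel}\n"
        f"    pad: {pad}\n"
        "    stride: 1\n"
        "    bias_term: false\n"
        "  }"
    )
    layers = [
        layer(top, "Convolution", [bottom], top, conv_param),
        layer(f"bn{block}_branch{branch}", "BatchNorm", [top], top,
              "  batch_norm_param {\n    use_global_stats: true\n  }"),
        layer(f"scale{block}_branch{branch}", "Scale", [top], top,
              "  scale_param {\n    bias_term: true\n  }"),
    ]
    if relu:
        layers.append(layer(f"{top}_relu", "ReLU", [top], top))
    return layers


def identity_bottleneck(block, bottom, width=64, out_channels=256):
    """Bottleneck unit with an identity shortcut, e.g. block="2b", bottom="res2a"."""
    layers = conv_bn_scale(block, "2a", bottom, width, kernel=1, pad=0)
    layers += conv_bn_scale(block, "2b", f"res{block}_branch2a", width, kernel=3, pad=1)
    # No ReLU on branch2c: the ReLU comes after the addition
    layers += conv_bn_scale(block, "2c", f"res{block}_branch2b", out_channels,
                            kernel=1, pad=0, relu=False)
    # Identity shortcut: the unit's input is summed directly with branch2c
    layers.append(layer(f"res{block}", "Eltwise",
                        [bottom, f"res{block}_branch2c"], f"res{block}"))
    layers.append(layer(f"res{block}_relu", "ReLU", [f"res{block}"], f"res{block}"))
    return "\n".join(layers)


print(identity_bottleneck("2b", bottom="res2a"))
```

The call `identity_bottleneck("2b", bottom="res2a")` reproduces the 13 layers of res2b. For the next unit, the same helper would be called as `identity_bottleneck("2c", bottom="res2b")`.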