python 爬取小说网站实战

2017.12.9

中午产生想法,大规模爬取小说网站上的小说。那么,有以下几个问题

- 下载链接有失效的可能,大部分网站的下载链接实质上是通过浏览器的内置检测程序检测到而弹出下载框 解决办法:暂时在test中事先确定链接可用,但即使如此,仍然会出现链接失效的状况

- 隐藏网页的问题 解决办法:通过观察比对,发现第二层网页链接是有规律的,因此编写链接生成函数,用list封存

def seturl(ur,i):

y=0

urllist=[x for x in range(2, i+ 1)]

for x in range(2, i+ 1):

url=ur+str(x)+'.html'

urllist[y]=url

y=y+1

return urllist- 链接失效导致程序自动停止的问题 解决办法:抛异常

try: print('正在下载: 小说 '+name+'...') urllib.request.urlretrieve(urla,filepath) except: print(name+' '+'下载失败') else: print(name+' '+'下载成功') - 按照原定需求,程序应从首页开始下载程序,不断的向下获取分类链接,但经过测试,有大量分类链接不可用 解决办法:取消从首页开始分析的原定计划,暂将分类页(第二层)作为首页

以上是在程序编写时遇到的主要问题

整个程序的思路,即不断的处理网页链接

程序待优化的地方

- 上面提到的链接失效问题

- 下载数据跟踪和统计问题,包括文件下载的大小,进度,耗时等

- 分类下载时文件夹自动生成问题

- 下载速度问题(不知是网站本身的链接问题还是程序能进一步优化)

- 试试多线程?

- 能否生成exe文件去执行,图形化界面?

参考资料链接如下

python笔记

正则表达式

BeautifulSoup 解析html方法(爬虫)

http://blog.csdn.net/u013372487/article/details/51734047

正则表达式在线测试

https://c.runoob.com/front-end/854

python正则 re部分

http://www.cnblogs.com/tina-python/p/5508402.html

异常处理

http://www.runoob.com/python/python-exceptions.html

http://www.cnblogs.com/zhangyingai/p/7097920.html

python下载文件

http://www.360doc.com/content/13/0929/20/11729272_318036381.shtml

python计算运行时间

http://www.cnblogs.com/rookie-c/p/5827694.html

程序源码如下 配置环境

python3

//用到的包

import urllib

import requests

from urllib import request

from bs4 import BeautifulSoup

import re

import timeseturl.py

import urllib

# http://www.txt53.com/html/qita/list_45_3.html

def seturl(ur,i):

y=0

urllist=[x for x in range(2, i+ 1)]

for x in range(2, i+ 1):

url=ur+str(x)+'.html'

urllist[y]=url

y=y+1

return urllist

end.py

import urllib

from urllib import request

import download

from bs4 import BeautifulSoup

import requests

import re

def end(url):

# url='http://www.txt53.com/down/42598.html'

header={'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.102 UBrowser/6.1.2107.204 Safari/537.36'}

html=requests.get(url,headers=header)

soup=BeautifulSoup(html.text)

getContent=soup.find('div',attrs={"class":"xiazai"})

pattern=re.compile('http://www.txt53.com/home/down/txt/id/[0-9]*')

link=pattern.findall(str(getContent))

getContent=soup.find('a',attrs={"class":"shang"})

res = r'(.*?)'

name =re.findall(res,str(getContent), re.S|re.M)

pattern=re.compile( r'(.*?)')

# name=pattern.findall(str(getContent))

# print(name)

strurl=link[0]

download.download(strurl,name) download.py

import requests

import urllib

from urllib import request

def download(urla,na):

name=str(na[0])

filepath='D:/小说下载/'+name+'.txt'

try:

print('正在下载: 小说 '+name+'...')

urllib.request.urlretrieve(urla,filepath)

except:

print(name+' '+'下载失败')

else:

print(name+' '+'下载成功')mian.py

import urllib

import requests

from urllib import request

from bs4 import BeautifulSoup

import re

import end

import seturl

# import timelist

import time

import download

# 1.多层嵌套,在主网页中打开获取链接,层层分析取得目标链接

# timelist.viewBar(200)

startime=time.clock()

ur='http://www.txt53.com/html/qita/list_45_'

i=91

numa=0

urllist=seturl.seturl(ur,i)

for url in urllist[10:]:

print('正在自动打开新的页面 '+url)

header = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.102 UBrowser/6.1.2107.204 Safari/537.36'}

# get html内容

html = requests.get(url, headers=header)

soup = BeautifulSoup(html.text)

getContent = soup.findAll('li', attrs={"class": "qq_g"})

pattern = re.compile(r"(?<=href=\").+?(?=\")|(?<=href=\').+?(?=\')")

link = pattern.findall(str(getContent))

# 拿到排行榜上的链接

for list in link:

# 进入单页的详细介绍界面

urlb = list

html = requests.get(urlb, headers=header)

soup = BeautifulSoup(html.text)

getContent = soup.findAll('div', attrs={"class": "downbox"})

pattern = re.compile(r"(?<=href=\").+?(?=\")|(?<=href=\').+?(?=\')")

link = pattern.findall(str(getContent))

print('正在打开链接:' + link[0])

end.end(link[0])

numa=numa+1

print('共下载小说'+str(numa)+'部,下载完成!')

endtime=time.clock()

usetime=endtime-startime

print('本次爬取程序共耗时%f秒'%usetime)

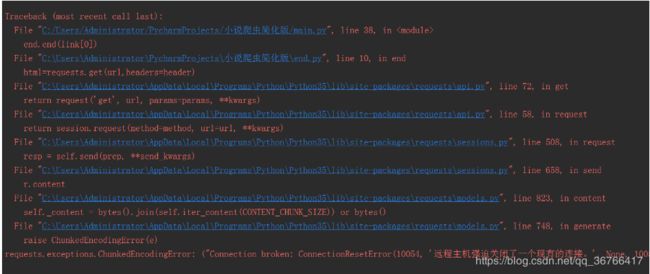

后记 在程序长期运行以后 远程主机检测到频繁请求 ,断开链接

处理方式如下

【Python爬虫错误】ConnectionResetError: [WinError 10054] 远程主机强迫关闭了一个现有的连接

http://blog.csdn.net/illegalname/article/details/77164521

Python爬虫突破封禁的6种常见方法

http://blog.csdn.net/offbye/article/details/52235139