Andrew Ng Deep Learning: Course 4, Week 3

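- Preface

- Object detection

- Object localization

- Landmark detection

- Sliding windows

- YOLO (You Only Look Once)

- Bounding box prediction

- Intersection over union (IoU)

- Non-max suppression

- Anchor boxes

- The YOLO algorithm

- Programming assignment

- Assignment text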

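Preface

NetEase Cloud Classroom (bilingual subtitles, no buffering): https://mooc.study.163.com/smartSpec/detail/1001319001.htmcourseId=1004570029

Coursera (expensive): https://www.coursera.org/specializations/deep-learning

I am a beginner. I watch the lectures on NetEase Cloud Classroom first and then do the assignments on Coursera, and I keep this blog as a record. All images in this post are screenshots from the course.

Assignment text reposted from: http://www.cnblogs.com/hezhiyao/p/7810725.html

Libraries needed for the programming assignment: link: https://pan.baidu.com/s/1aS1Oia2fskemBHHEMnSepw password: 66gd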

Object detection

Object localization

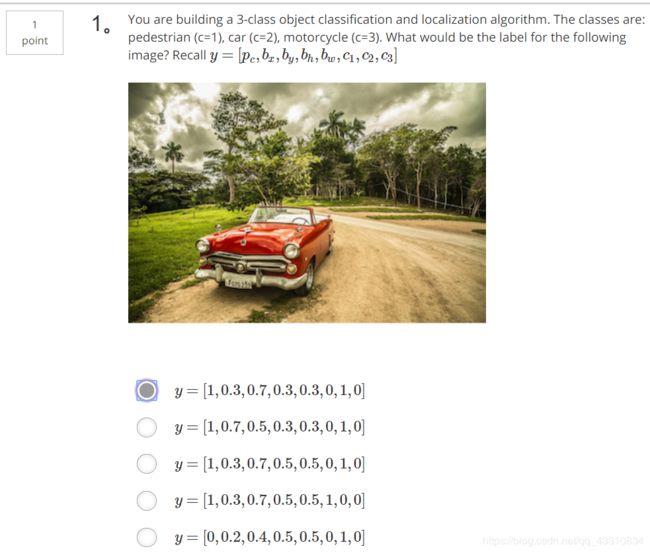

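Tips: this setup applies only when a single object is to be detected. Pc = 1 means the image contains an object of interest, and Pc = 0 means it does not. bx and by are the horizontal and vertical coordinates of the object's center, bh is the height of the box enclosing the object, and bw is its width. c1 = 1 means a pedestrian was detected, and the other class entries work the same way, so the label y is a 1×8 vector: (Pc, bx, by, bh, bw, c1, c2, c3).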
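To make the layout of y concrete, here is a minimal sketch of how such a label could be built. The class order (pedestrian, car, motorcycle) and the helper name `make_target` are my own assumptions for illustration; the notes above only fix c1 as the pedestrian class.

```python
import numpy as np

# Assumed class order for illustration; only c1 = pedestrian comes from the notes.
CLASSES = ["pedestrian", "car", "motorcycle"]

def make_target(box=None, class_name=None):
    """Return y = [Pc, bx, by, bh, bw, c1, c2, c3] for a single-object image."""
    y = np.zeros(5 + len(CLASSES), dtype=np.float32)
    if box is None:
        # No object: Pc = 0 and the remaining entries are ignored by the loss.
        return y
    bx, by, bh, bw = box
    y[0] = 1.0                               # Pc: an object is present
    y[1:5] = [bx, by, bh, bw]                # box center, height, width
    y[5 + CLASSES.index(class_name)] = 1.0   # one-hot class entry
    return y

y_car = make_target(box=(0.5, 0.7, 0.3, 0.4), class_name="car")
y_background = make_target()
print(y_car)
print(y_background)
```

For a background image only Pc = 0 matters, which is why the remaining entries are simply left at zero here.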

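Landmark detection

Tips: just as the position of a bounding box is described by coordinates, selected facial or body landmarks can be output in coordinate form too.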
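As a rough sketch of what "coordinate form" could look like, the label can be a flag followed by the flattened (x, y) pairs. The helper name and the three example landmarks are made up for illustration.

```python
import numpy as np

def landmarks_to_target(has_face, points):
    """Flatten [(x1, y1), ..., (xn, yn)] into [Pc, x1, y1, ..., xn, yn]."""
    coords = np.asarray(points, dtype=np.float32).reshape(-1)
    flag = np.array([1.0 if has_face else 0.0], dtype=np.float32)
    return np.concatenate([flag, coords])

# Three made-up landmarks in normalized image coordinates (0..1).
y = landmarks_to_target(True, [(0.30, 0.40), (0.70, 0.40), (0.50, 0.65)])
print(y.shape)
```

With n landmarks the network therefore needs 2n + 1 output units.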

Sliding windows

An explanation of the sliding windows algorithm is at

https://blog.csdn.net/qq_43310834/article/details/85548237

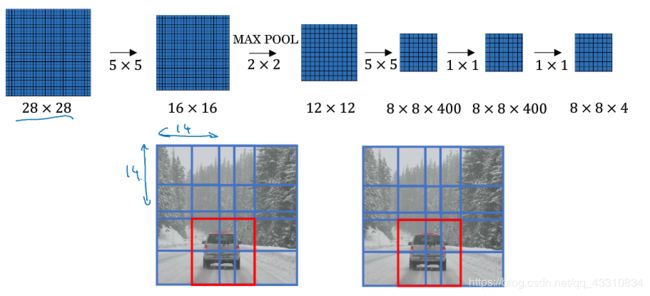

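Tips: assume a 14×14×3 window. Implementing the sliding windows convolutionally, instead of cropping and classifying each window separately, lets overlapping windows share their computation and reduces the cost.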
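The effect is easiest to see by tracking the spatial size through the network. The sketch below assumes the layer stack I remember from the lecture (5×5 conv, 2×2 max pool with stride 2, then a 5×5 conv standing in for the first fully connected layer, followed by 1×1 convs); treat these layer sizes as an assumption rather than part of these notes.

```python
def conv_out(n, f, stride=1):
    """Output side length of a 'valid' convolution or pooling layer."""
    return (n - f) // stride + 1

def sliding_window_grid(n):
    """Side length of the output grid for an n x n input image."""
    n = conv_out(n, 5)             # 5x5 conv
    n = conv_out(n, 2, stride=2)   # 2x2 max pool, stride 2
    n = conv_out(n, 5)             # 5x5 conv replacing the first FC layer
    return n                       # the following 1x1 convs keep the size

for side in (14, 16, 28):
    g = sliding_window_grid(side)
    print(f"{side}x{side} input -> {g}x{g} grid of window predictions")
```

A 14×14 input gives a single prediction, while a larger image gives one prediction per window position in a single forward pass.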

YOLO (You Only Look Once)

Bounding box prediction

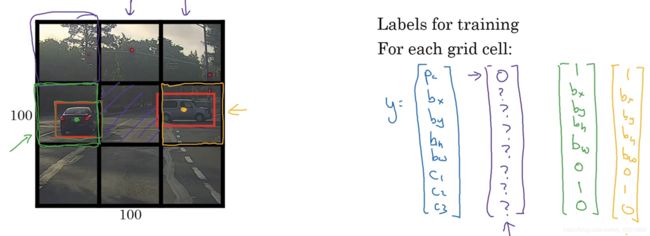

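Tips: divide the image into a grid of cells and perform object detection and localization in each cell separately. Here Pc = 1 means the center of an object falls inside that cell.

Tips: within each cell, take the top-left corner as (0,0) and the bottom-right corner as (1,1); all other values are expressed in proportion to the cell.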
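A small sketch of this encoding, assuming the box is first given in image-normalized coordinates (0..1) and a 3×3 grid. The function name `encode_box` is hypothetical.

```python
def encode_box(cx, cy, w, h, grid=3):
    """Map a box in image-normalized coordinates to grid-cell coordinates.

    Returns (row, col, bx, by, bh, bw): the cell that contains the center,
    the center measured from that cell's top-left corner, and the height and
    width in units of one cell (these can exceed 1).
    """
    col = min(int(cx * grid), grid - 1)   # cell column holding the center
    row = min(int(cy * grid), grid - 1)   # cell row holding the center
    bx = cx * grid - col                  # 0..1 inside the cell
    by = cy * grid - row
    bw = w * grid
    bh = h * grid
    return row, col, bx, by, bh, bw

row, col, bx, by, bh, bw = encode_box(cx=0.8, cy=0.55, w=0.5, h=0.2)
print(row, col, round(bx, 2), round(by, 2), round(bh, 2), round(bw, 2))
```

Note that bx and by always stay between 0 and 1, while bh and bw can be larger than 1 when the object spans several cells.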

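Intersection over union (IoU)

Non-max suppression

Tips: first look at the Pc of every bounding box and take the box with the highest value (call it A). Compute the IoU between A and each of the other boxes and delete every box whose IoU is >= 0.5. If any boxes are left undeleted, pick the one with the highest Pc among them (call it B), compute its IoU with the remaining boxes (excluding A), and delete in the same way. Repeat until no unmarked box remains, that is, until every box has either been selected as a maximum in some round or been deleted.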

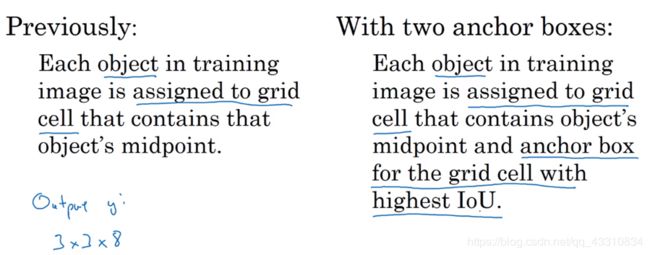
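The procedure described above can be sketched in plain NumPy as follows. This is only an illustration with made-up boxes, assuming corner format (x1, y1, x2, y2); the assignment itself relies on TensorFlow's built-in op instead.

```python
import numpy as np

def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(min(a[2], b[2]) - max(a[0], b[0]), 0.0)   # overlap width, clamped at 0
    ih = max(min(a[3], b[3]) - max(a[1], b[1]), 0.0)   # overlap height, clamped at 0
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Return the indices of the boxes that survive, best score first."""
    order = [int(i) for i in np.argsort(scores)[::-1]]   # highest score first
    keep = []
    while order:
        best = order.pop(0)          # the current maximum (A, then B, ...)
        keep.append(best)
        # Delete every remaining box that overlaps the maximum too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep

boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
kept = non_max_suppression(boxes, scores)
print(kept)
```

The second box overlaps the first with an IoU of about 0.68, so it is deleted, while the third box does not overlap at all and survives.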

Anchor boxes

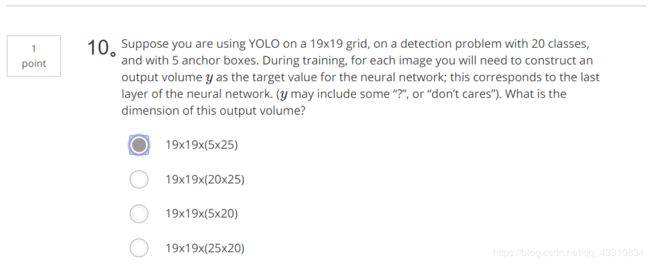

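Tips: put simply, on top of the previous steps, if a grid cell contains an object, compute the IoU between the object's box and each of the predefined anchor boxes and take the anchor with the highest IoU. In the target vector y, the Pc belonging to that anchor box is set to 1. In other words, each cell now records not only whether an object is present but also which anchor box it matches.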

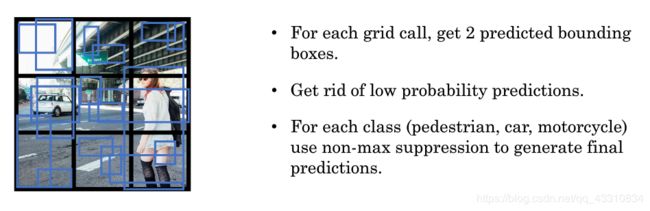
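Here is a minimal sketch of the anchor matching step. It compares shapes only, by assuming the object box and the anchors share the same center; the two anchor shapes and the function names are made up for illustration.

```python
def shape_iou(wh_a, wh_b):
    """IoU of two boxes sharing the same center, each given as (width, height)."""
    inter = min(wh_a[0], wh_b[0]) * min(wh_a[1], wh_b[1])
    union = wh_a[0] * wh_a[1] + wh_b[0] * wh_b[1] - inter
    return inter / union

def best_anchor(box_wh, anchors):
    """Index of the anchor whose shape has the highest IoU with the box."""
    ious = [shape_iou(box_wh, a) for a in anchors]
    return max(range(len(anchors)), key=lambda i: ious[i])

# Made-up anchors: index 0 is tall and narrow, index 1 is wide and flat.
ANCHORS = [(0.4, 1.2), (1.2, 0.5)]

pedestrian = best_anchor((0.3, 1.0), ANCHORS)   # tall box
car = best_anchor((1.0, 0.4), ANCHORS)          # wide box
print(pedestrian, car)
```

With two anchors and three classes, each cell's target then has 2 × 8 = 16 entries, and only the slot of the matched anchor gets Pc = 1.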

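The YOLO algorithm

Tips: Step 1: go through every grid cell, build its target vector y, and train the network on these targets.

Tips: Step 2: use the trained parameters to make predictions.

Tips: Step 3: run non-max suppression to remove redundant predictions.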
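To connect these steps with the tensor shapes used in the assignment (19×19 cells, 5 anchors, 80 classes), the sketch below splits one prediction volume into its parts. The random array is a placeholder for a real network output, and the field order (Pc, box, classes) follows the lecture's y vector; the actual YAD2K head may order and activate the fields differently.

```python
import numpy as np

S, A, C = 19, 5, 80   # grid size, anchors per cell, number of classes

# Placeholder for a network output of shape (19, 19, 425).
raw = np.random.default_rng(0).random((S, S, A * (5 + C)))

pred = raw.reshape(S, S, A, 5 + C)   # one (5 + C)-vector per anchor per cell
box_confidence = pred[..., 0:1]      # Pc
boxes = pred[..., 1:5]               # bx, by, bh, bw
box_class_probs = pred[..., 5:]      # c1 ... c80

print(raw.shape, box_confidence.shape, boxes.shape, box_class_probs.shape)
```

These three pieces have the same shapes as the inputs of the score filtering function in the programming assignment.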

Programming assignment

import argparse
import os
import imageio  # used below to read the saved images and to build the GIF
import matplotlib.pyplot as plt
from matplotlib.pyplot import imshow
import scipy.io
import scipy.misc
import numpy as np
import pandas as pd
import PIL
import tensorflow as tf
from keras import backend as K
from keras.layers import Input, Lambda, Conv2D
from keras.models import load_model, Model
from yad2k.models.keras_yolo import yolo_head, yolo_boxes_to_corners, preprocess_true_boxes, yolo_loss, yolo_body
import yolo_utils

%matplotlib inline

def yolo_filter_boxes(box_confidence, boxes, box_class_probs, threshold=0.6):
    """
    Filter YOLO boxes by thresholding on object and class confidence.

    Arguments:
    box_confidence - tensor of shape (19, 19, 5, 1), the pc (confidence that some object is present) of each of the 5 anchor boxes predicted in each of the 19x19 cells.
    boxes - tensor of shape (19, 19, 5, 4), the (b_x, b_y, b_h, b_w) of every anchor box.
    box_class_probs - tensor of shape (19, 19, 5, 80), the class probabilities (c1, c2, c3, ..., c80) of every anchor box in every cell.
    threshold - real number; a box is kept only if its highest class score is >= threshold.

    Returns:
    scores - tensor of shape (None,), the class score of each kept box.
    boxes - tensor of shape (None, 4), the (b_x, b_y, b_h, b_w) of each kept box.
    classes - tensor of shape (None,), the class index of each kept box.

    Note: "None" appears because the exact number of kept boxes is not known in advance; it depends on the threshold.
    For example, if 10 boxes are kept, the actual shape of scores is (10,).
    """
    # Step 1: Compute box scores
    ### START CODE HERE ### (≈ 1 line)
    box_scores = box_confidence * box_class_probs  # (19, 19, 5, 80)
    ### END CODE HERE ###

    # Step 2: Find the box_classes thanks to the max box_scores, keep track of the corresponding score
    ### START CODE HERE ### (≈ 2 lines)
    box_classes = K.argmax(box_scores, axis=-1)  # index of the highest-scoring class, shape (19, 19, 5)
    box_class_scores = K.max(box_scores, axis=-1, keepdims=False)  # score of that class, shape (19, 19, 5)
    ### END CODE HERE ###

    # Step 3: Create a filtering mask based on "box_class_scores" by using "threshold". The mask should have the
    # same dimension as box_class_scores, and be True for the boxes you want to keep (with probability >= threshold)
    ### START CODE HERE ### (≈ 1 line)
    filtering_mask = box_class_scores >= threshold  # boolean, shape (19, 19, 5)
    ### END CODE HERE ###

    # Step 4: Apply the mask to scores, boxes and classes
    ### START CODE HERE ### (≈ 3 lines)
    scores = tf.boolean_mask(box_class_scores, filtering_mask)  # (None,): entries where the mask is False are dropped
    boxes = tf.boolean_mask(boxes, filtering_mask)  # (None, 4)
    classes = tf.boolean_mask(box_classes, filtering_mask)  # (None,)
    ### END CODE HERE ###

    return scores, boxes, classes
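To check the shapes without building a TensorFlow graph, the same four steps can be mirrored in NumPy. This is only a sketch on random inputs; `filter_boxes_np` is a hypothetical name and not part of the assignment.

```python
import numpy as np

def filter_boxes_np(box_confidence, boxes, box_class_probs, threshold=0.6):
    """NumPy mirror of the score-filtering steps, for shape checking only."""
    box_scores = box_confidence * box_class_probs   # (19, 19, 5, 80)
    box_classes = box_scores.argmax(axis=-1)        # (19, 19, 5)
    box_class_scores = box_scores.max(axis=-1)      # (19, 19, 5)
    mask = box_class_scores >= threshold            # (19, 19, 5), boolean
    # Boolean indexing flattens the three leading axes, like tf.boolean_mask.
    return box_class_scores[mask], boxes[mask], box_classes[mask]

rng = np.random.default_rng(0)
conf = rng.random((19, 19, 5, 1))
xywh = rng.random((19, 19, 5, 4))
probs = rng.random((19, 19, 5, 80))

scores, kept_boxes, classes = filter_boxes_np(conf, xywh, probs)
print(scores.shape, kept_boxes.shape, classes.shape)
```

The leading dimension of all three outputs is the same unknown count of kept boxes, which is what "None" stands for in the docstring.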

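def iou(box1, box2):
    """
    Compute the intersection over union (IoU) of two boxes.

    Arguments:
    box1 - first box, tuple (x1, y1, x2, y2)
    box2 - second box, tuple (x1, y1, x2, y2)

    Returns:
    iou - real number, the intersection over union.
    """
    # Calculate the (x1, y1, x2, y2) coordinates of the intersection of box1 and box2. Calculate its Area.
    ### START CODE HERE ### (≈ 5 lines)
    xmax1 = max(box1[0], box2[0])  # left edge of the intersection
    xmin2 = min(box1[2], box2[2])  # right edge of the intersection
    ymax1 = max(box1[1], box2[1])  # top edge of the intersection
    ymin2 = min(box1[3], box2[3])  # bottom edge of the intersection
    # Clamp at 0 so that boxes that do not overlap get an intersection area of 0
    jiao = max(xmin2 - xmax1, 0) * max(ymin2 - ymax1, 0)  # jiao: intersection area
    ### END CODE HERE ###

    # Calculate the Union area by using Formula: Union(A,B) = A + B - Inter(A,B)
    ### START CODE HERE ### (≈ 3 lines)
    s1 = (box1[2] - box1[0]) * (box1[3] - box1[1])  # area of box1
    s2 = (box2[2] - box2[0]) * (box2[3] - box2[1])  # area of box2
    bing = s1 + s2 - jiao  # bing: union area
    ### END CODE HERE ###

    # compute the IoU
    ### START CODE HERE ### (≈ 1 line)
    iou = jiao / bing
    ### END CODE HERE ###

    return iou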
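One detail worth checking in any IoU implementation is the case of boxes that do not overlap. The sketch below (function names are my own) compares a version that clamps the overlap at 0 with one that does not, on one overlapping pair and one disjoint pair.

```python
def iou_clamped(b1, b2):
    """IoU with the overlap width and height clamped at 0."""
    w = max(min(b1[2], b2[2]) - max(b1[0], b2[0]), 0)
    h = max(min(b1[3], b2[3]) - max(b1[1], b2[1]), 0)
    inter = w * h
    union = (b1[2] - b1[0]) * (b1[3] - b1[1]) + (b2[2] - b2[0]) * (b2[3] - b2[1]) - inter
    return inter / union

def iou_unclamped(b1, b2):
    """Same formula without clamping: two negative sides multiply to a positive area."""
    w = min(b1[2], b2[2]) - max(b1[0], b2[0])
    h = min(b1[3], b2[3]) - max(b1[1], b2[1])
    inter = w * h
    union = (b1[2] - b1[0]) * (b1[3] - b1[1]) + (b2[2] - b2[0]) * (b2[3] - b2[1]) - inter
    return inter / union

overlapping = ((2, 1, 4, 3), (1, 2, 3, 4))   # intersection 1, union 7
disjoint = ((0, 0, 1, 1), (2, 2, 3, 3))      # no overlap at all

print(iou_clamped(*overlapping), iou_unclamped(*overlapping))
print(iou_clamped(*disjoint), iou_unclamped(*disjoint))
```

For the disjoint pair the unclamped version reports an IoU of 1.0, because the negative width and height multiply to a positive "area", so clamping is what keeps such boxes from being treated as duplicates.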

def yolo_non_max_suppression(scores, boxes, classes, max_boxes=10, iou_threshold=0.5):
    """
    Apply non-max suppression (NMS) to a set of boxes.

    Arguments:
    scores - tensor of shape (None,), output of yolo_filter_boxes()
    boxes - tensor of shape (None, 4), output of yolo_filter_boxes(), already scaled to the image size (see below)
    classes - tensor of shape (None,), output of yolo_filter_boxes()
    max_boxes - integer, maximum number of predicted boxes to keep
    iou_threshold - real number, IoU threshold.

    Returns:
    scores - tensor of shape (None,), predicted score of each kept box
    boxes - tensor of shape (None, 4), coordinates of each kept box
    classes - tensor of shape (None,), predicted class of each kept box

    Note: the "None" dimension of the outputs is at most max_boxes. The function only selects a subset of the
    rows of scores, boxes and classes, which is convenient for the next step.
    """
    max_boxes_tensor = K.variable(max_boxes, dtype='int32')  # tensor to be used in tf.image.non_max_suppression()
    K.get_session().run(tf.variables_initializer([max_boxes_tensor]))  # initialize variable max_boxes_tensor

    # Use tf.image.non_max_suppression() to get the list of indices corresponding to boxes you keep
    ### START CODE HERE ### (≈ 1 line)
    nms_indices = tf.image.non_max_suppression(boxes, scores, max_boxes_tensor, iou_threshold)
    ### END CODE HERE ###

    # Use K.gather() to select only nms_indices from scores, boxes and classes
    ### START CODE HERE ### (≈ 3 lines)
    scores = K.gather(scores, nms_indices)
    boxes = K.gather(boxes, nms_indices)
    classes = K.gather(classes, nms_indices)
    ### END CODE HERE ###

    return scores, boxes, classes

def yolo_eval(yolo_outputs, image_shape=(720., 1280.),
              max_boxes=10, score_threshold=0.6, iou_threshold=0.5):
    """
    Convert the encoded YOLO output (many boxes) into the predicted boxes with their scores, coordinates and classes.

    Arguments:
    yolo_outputs - output of the encoding model (for images of shape (608, 608, 3)), containing 4 tensors:
                    box_confidence: tensor of shape (None, 19, 19, 5, 1)
                    box_xy: tensor of shape (None, 19, 19, 5, 2)
                    box_wh: tensor of shape (None, 19, 19, 5, 2)
                    box_class_probs: tensor of shape (None, 19, 19, 5, 80)
    image_shape - shape (2,), the (height, width) of the original image as floats, by default (720., 1280.)
    max_boxes - integer, maximum number of predicted boxes to keep
    score_threshold - real number, score threshold.
    iou_threshold - real number, IoU threshold.

    Returns:
    scores - tensor of shape (None,), predicted score of each kept box
    boxes - tensor of shape (None, 4), coordinates of each kept box
    classes - tensor of shape (None,), predicted class of each kept box
    """
    ### START CODE HERE ###
    # Retrieve outputs of the YOLO model (≈1 line)
    box_confidence, box_xy, box_wh, box_class_probs = yolo_outputs

    # Convert boxes to be ready for filtering functions
    boxes = yolo_boxes_to_corners(box_xy, box_wh)

    # Use one of the functions you've implemented to perform Score-filtering with a threshold of score_threshold (≈1 line)
    scores, boxes, classes = yolo_filter_boxes(box_confidence, boxes, box_class_probs, score_threshold)

    # Scale boxes back to original image shape.
    boxes = yolo_utils.scale_boxes(boxes, image_shape)

    # Use one of the functions you've implemented to perform Non-max suppression with a threshold of iou_threshold (≈1 line)
    scores, boxes, classes = yolo_non_max_suppression(scores, boxes, classes, max_boxes, iou_threshold)
    ### END CODE HERE ###

    return scores, boxes, classes
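The two helper steps, converting (center, size) boxes to corners and scaling them to the image, can be sketched in NumPy as below. The corner order (y_min, x_min, y_max, x_max) and the scaling by (height, width, height, width) are my understanding of the YAD2K and yolo_utils helpers, so treat both as assumptions; the function names here are my own.

```python
import numpy as np

def boxes_to_corners(box_xy, box_wh):
    """Convert (x, y) centers and (w, h) sizes to (y_min, x_min, y_max, x_max)."""
    mins = box_xy - box_wh / 2.0
    maxes = box_xy + box_wh / 2.0
    return np.concatenate(
        [mins[..., 1:2], mins[..., 0:1], maxes[..., 1:2], maxes[..., 0:1]],
        axis=-1,
    )

def scale_to_image(corners, image_shape):
    """Scale normalized corners to pixel units for an image of (height, width)."""
    height, width = image_shape
    return corners * np.array([height, width, height, width])

xy = np.array([[0.5, 0.5]])   # one box centered in the image
wh = np.array([[0.2, 0.4]])   # width 0.2, height 0.4 (normalized)

corners = boxes_to_corners(xy, wh)
pixels = scale_to_image(corners, (720.0, 1280.0))
print(corners)
print(pixels)
```

Because IoU does not change when x and y are swapped consistently for both boxes, the (y, x) corner order does not affect the suppression result.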

sess = K.get_session()

# Class names, anchor shapes and the (height, width) of the test images
class_names = yolo_utils.read_classes("model_data/coco_classes.txt")
anchors = yolo_utils.read_anchors("model_data/yolo_anchors.txt")
image_shape = (720., 1280.)

# Load the pretrained YOLOv2 model
yolo_model = load_model("model_data/yolov2.h5")
#yolo_model.summary()

# Convert the raw model output into box tensors, then filter them
yolo_outputs = yolo_head(yolo_model.output, anchors, len(class_names))
scores, boxes, classes = yolo_eval(yolo_outputs, image_shape)

#image, image_data = yolo_utils.preprocess_image("images/" + image_file, model_image_size = (608, 608))

def predict(sess, image_file, is_show_info=True, is_plot=True):
    """
    Run the graph stored in sess to predict the bounding boxes for image_file, then print and plot the predictions.

    Arguments:
    sess - the TensorFlow/Keras session containing the YOLO graph.
    image_file - name of an image stored in the "images" folder.
    is_show_info - whether to print the number of boxes found.
    is_plot - whether to display the annotated image in the notebook.

    Returns:
    out_scores - array of shape (None,), predicted score of each box.
    out_boxes - array of shape (None, 4), position of each box.
    out_classes - array of shape (None,), predicted class index of each box.
    """
    # Preprocess your image
    image, image_data = yolo_utils.preprocess_image("images/" + image_file, model_image_size=(608, 608))

    # Run the session with the correct tensors and choose the correct placeholders in the feed_dict.
    # You'll need to use feed_dict={yolo_model.input: ... , K.learning_phase(): 0})
    ### START CODE HERE ### (≈ 1 line)
    out_scores, out_boxes, out_classes = sess.run([scores, boxes, classes],
                                                  feed_dict={yolo_model.input: image_data, K.learning_phase(): 0})
    ### END CODE HERE ###

    # Print predictions info
    if is_show_info:
        print('Found {} boxes for {}'.format(len(out_boxes), image_file))

    # Generate colors for drawing bounding boxes.
    colors = yolo_utils.generate_colors(class_names)

    # Draw bounding boxes on the image file
    yolo_utils.draw_boxes(image, out_scores, out_boxes, out_classes, class_names, colors)

    # Save the predicted bounding box on the image
    image.save(os.path.join("out", image_file), quality=90)

    # Display the results in the notebook
    if is_plot:
        output_image = imageio.imread(os.path.join("out", image_file))
        imshow(output_image)

    return out_scores, out_boxes, out_classes

for i in range(1, 121):
    # File names are the index zero-padded to 4 digits, e.g. 0001.jpg
    filename = str(i).zfill(4) + ".jpg"
    print("Current file: " + str(filename))

    # Draw the boxes without printing info or showing the plot
    out_scores, out_boxes, out_classes = predict(sess, filename, is_show_info=False, is_plot=False)

print("Drawing finished!")

# Read the annotated images back in order and combine them into a GIF
frames = []
for i in range(1, 121):
    # File names are the index zero-padded to 4 digits, e.g. 0001.jpg
    filename = os.path.join("out", str(i).zfill(4) + ".jpg")
    frames.append(imageio.imread(filename))

imageio.mimsave('gif.gif', frames, duration=0.5)