- html+css网页设计 旅游网站首页1个页面

html+css+js网页设计

htmlcss旅游

html+css网页设计旅游网站首页1个页面网页作品代码简单,可使用任意HTML辑软件(如:Dreamweaver、HBuilder、Vscode、Sublime、Webstorm、Text、Notepad++等任意html编辑软件进行运行及修改编辑等操作)。获取源码1,访问该网站https://download.csdn.net/download/qq_42431718/897527112,点击

- webstorm报错TypeError: this.cliEngine is not a constructor

Blue_Color

点击Details在控制台会显示报错的位置TypeError:this.cliEngineisnotaconstructoratESLintPlugin.invokeESLint(/Applications/RubyMine.app/Contents/plugins/JavaScriptLanguage/languageService/eslint/bin/eslint-plugin.js:97:

- [附源码]SSM计算机毕业设计游戏账号交易平台JAVA

计算机程序源码

java游戏mysql

项目运行环境配置:Jdk1.8+Tomcat7.0+Mysql+HBuilderX(Webstorm也行)+Eclispe(IntelliJIDEA,Eclispe,MyEclispe,Sts都支持)。项目技术:SSM+mybatis+Maven+Vue等等组成,B/S模式+Maven管理等等。环境需要1.运行环境:最好是javajdk1.8,我们在这个平台上运行的。其他版本理论上也可以。2.ID

- Java中的大数据处理框架对比分析

省赚客app开发者

java开发语言

Java中的大数据处理框架对比分析大家好,我是微赚淘客系统3.0的小编,是个冬天不穿秋裤,天冷也要风度的程序猿!今天,我们将深入探讨Java中常用的大数据处理框架,并对它们进行对比分析。大数据处理框架是现代数据驱动应用的核心,它们帮助企业处理和分析海量数据,以提取有价值的信息。本文将重点介绍ApacheHadoop、ApacheSpark、ApacheFlink和ApacheStorm这四种流行的

- Python+Django毕业设计校园易购二手交易平台(程序+LW+部署)

Python、JAVA毕设程序源码

课程设计javamysql

项目运行环境配置:Jdk1.8+Tomcat7.0+Mysql+HBuilderX(Webstorm也行)+Eclispe(IntelliJIDEA,Eclispe,MyEclispe,Sts都支持)。项目技术:SSM+mybatis+Maven+Vue等等组成,B/S模式+Maven管理等等。环境需要1.运行环境:最好是javajdk1.8,我们在这个平台上运行的。其他版本理论上也可以。2.ID

- Hbase - kerberos认证异常

kikiki2

之前怎么认证都认证不上,问题找了好了,发现它的异常跟实际操作根本就对不上,死马当活马医,当时也是瞎改才好的,给大家伙记录记录。KrbException:ServernotfoundinKerberosdatabase(7)-LOOKING_UP_SERVER>>>KdcAccessibility:removestorm1.starsriver.cnatsun.security.krb5.KrbTg

- WebStorm 配置 PlantUML

weijia_kmys

其他webstormideuml

1.安装PlantUML插件在WebStorm插件市场搜索PlantUMLIntegration并安装,重启WebStorm使插件生效。2.安装GraphvizPlantUML需要Graphviz来生成图形。使用Homebrew安装Graphviz:打开终端(Terminal)。确保你已经安装了Homebrew。如果没有,请先安装Homebrew,可以在Homebrew官网获取安装命令。在终端中输

- ReactNative 常用开源组件

2401_84875852

程序员reactnative开源react.js

WebStormReactNative的代码模板插件,包括:1.组件名称2.Api名称3.所有StyleSheets属性4.组件属性https://github.com/virtoolswebplayer/ReactNative-LiveTemplateReact-native调用cordova插件https://github.com/axemclion/react-native-cordova-

- phpstorm 2018激活教程,phpstorm破解版下载

八重樱勿忘

前言:本次phpstorm2018激活教程非常详细,所有细节指导软件是phpstorm10.0是因新版本破解还不稳定,大家放心这个版本不影响开发和使用。文件以及教程都打包在群文件里了。第一步:解压并打开文件,运行“PhpStorm-10.0.3.exe”点击Next进入下一步第二步:选择软件安装目录自定义选择安装根目录-->点击Next进入下一步注意!后面还需要找安装目录里的文件,所以记住安装到一

- Java Kafka生产者实现

stormsha

Javawebjavakafkalinq

欢迎莅临我的博客,很高兴能够在这里和您见面!希望您在这里可以感受到一份轻松愉快的氛围,不仅可以获得有趣的内容和知识,也可以畅所欲言、分享您的想法和见解。推荐:「stormsha的主页」,「stormsha的知识库」持续学习,不断总结,共同进步,为了踏实,做好当下事儿~专栏导航Python系列:Python面试题合集,剑指大厂Git系列:Git操作技巧GO系列:记录博主学习GO语言的笔记,该笔记专栏

- Python 开心消消乐

stormsha

Python基础python开发语言游戏

文章目录效果图项目结构程序代码完整代码:https://gitcode.com/stormsha1/games/overview效果图项目结构程序代码run.pyimportsysimportpygamefrompygame.localsimportKEYDOWN,QUIT,K_q,K_ESCAPE,MOUSEBUTTONDOWNfromdissipate.levelimportManagerfr

- 玩手机的弹指之间,记忆受到了损坏,这是如何发生的?

跨鹿

一:从拍照损伤效应说起为了验证拍照损伤效应(photo-taking-impairmenteffect)——即拍照不利于记忆形成,加州大学圣克鲁斯分校的学者JuliaS.Soares和BenjaminC.Storm进行了两组实验(篇幅有限,不详细介绍该实验):实验一:42名被试与三个独立变量:观察,相机(手机相机)和Snapchat(色拉布,美国的一款“阅后即焚”照片分享应用)——在观察条件下,参

- 【附源码】计算机毕业设计java智慧后勤系统设计与实现

计算机毕设程序设计

javamybatismysql

项目运行环境配置:Jdk1.8+Tomcat7.0+Mysql+HBuilderX(Webstorm也行)+Eclispe(IntelliJIDEA,Eclispe,MyEclispe,Sts都支持)。项目技术:SSM+mybatis+Maven+Vue等等组成,B/S模式+Maven管理等等。环境需要1.运行环境:最好是javajdk1.8,我们在这个平台上运行的。其他版本理论上也可以。2.ID

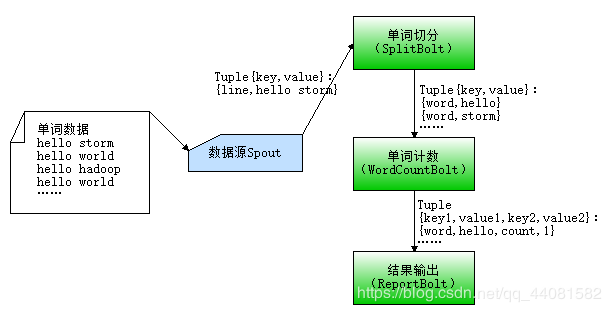

- Apache Storm:入门了解

布说在见

apachestorm大数据

前言Storm是一个开源的分布式实时计算系统,它能够处理无边界的数据流,类似于Hadoop对于批量数据处理的作用,但是Storm更侧重于实时数据流的处理。以下是关于Storm的一些关键特性及其应用场景的详细介绍:特性实时处理:Storm能够实时处理数据流,而不是像Hadoop那样需要先收集一批数据再进行处理。它可以持续不断地处理数据,这意味着一旦数据到达,就会立即被处理。简单易用:开发者可以用多种

- 大数据秋招面经之spark系列

wq17629260466

大数据spark

文章目录前言spark高频面试题汇总1.spark介绍2.spark分组取TopN方案总结:方案2是最佳方案。3.repartition与coalesce4.spark的oom问题怎么产生的以及解决方案5.storm与flink,sparkstreaming之间的区别6.spark的几种部署方式:7.复习spark的yarn-cluster模式执行流程:8.spark的job提交流程:9.spar

- PhpStorm中配置调试功能

hong161688

phpstormandroidide

在PhpStorm中配置调试功能是一个相对直接且强大的过程,它允许开发者在开发过程中高效地定位和解决代码问题。以下是一个详细的步骤指南,涵盖了从安装PhpStorm到配置调试环境的整个过程,以及如何在PhpStorm中使用调试功能。一、安装PhpStorm首先,确保你已经从JetBrains的官方网站下载了最新版本的PhpStorm。安装过程相对简单,只需按照安装向导的指示逐步进行即可。在安装过程

- 新闻编辑室第1季第4集台词

kuailexuewaiyu

英文中文♪TrytokeepmyheadaboveitthebestIcan♪♪我要努力挺过这一关♪♪That'swhyhereIam♪♪所以我才在这里♪♪Justwaitingforthisstormtopassmeby♪♪等着暴风雨经过♪♪Andthat'sthesoundofsunshine♪♪聆听阳光的声音♪♪Comingdown♪♪从空中洒下♪♪Andthat'sthesoundofsu

- web期末作业网页设计——我的家乡黑龙江(网页源码)

软件技术NINI

html+css+js旅游旅游htmlcss

一、网站题目旅游,当地特色,历史文化,特色小吃等网站的设计与制作。二、✍️网站描述静态网站的编写主要是用HTMLDIV+CSS等来完成页面的排版设计,常用的网页设计软件有Dreamweaver、EditPlus、HBuilderX、VScode、Webstorm、Animate等等,用的最多的还是DW,当然不同软件写出的前端Html5代码都是一致的,本网页适合修改成为各种类型的产品展示网页,

- 使用命令行创建任意大小文件的方法

拽拽夏天~

测试技巧linux功能测试

使用命令行创建任意大小文件的方法作为一个测试工程师,在工作中可能需要创建任意大小的文件。下面介绍下如何使用命令行创建任意大小的文件:1、Linux-dd命令:Linux下的dd命令很是强大,可以这样使用dd命令来创建指定大小的文件:生成固定大小文件ddif=/dev/zeroof=/home/bluestorm/100M.imgbs=1Mcount=1024(生成一个100M的文件,文件名为100

- phpstorm 插件等功能

胡萝卜的兔

laravelphpstormphpphpstormide

插件MaterialThemeUIUI主题插件ChinesePHPDocumentphp基本函数的中文文档PHPcomposer.jsonsupport在做php组件开发时,编辑composer.json文件时有对应的属性和值的自动完成功能BackgroundImagePlus背景图设置,安装之后,在打开View选项,就可以看到SetBackgroundImage选项了。.envfilessupp

- phpstrom连接远程服务器

努力学习的笨小孩

PHP开发工具phpstorm通过sftp和FTP远程连接服务器创建编辑远程项目PhpStorm是一个编辑PHP代码的PHP开发工具神器,应该说是目前世界上编辑PHP代码的最好用的PHP开发工具IDE了吧,本地项目的创建相信一般人都会,不过有时候我们可能项目运行在远程服务器上,比如有一种情况:在windows下使用PhpStorm编码,代码放在linux服务器上运行。还有一种情况,代码使用版本控制

- JAVA计算机毕业设计校园二手书交易系统(附源码、数据库)

卓杰计算机设计

java数据库开发语言

JAVA计算机毕业设计校园二手书交易系统(附源码、数据库)目运行环境项配置:Jdk1.8+Tomcat8.5+Mysql+HBuilderX(Webstorm也行)+Eclispe(IntelliJIDEA,Eclispe,MyEclispe,Sts都支持)。项目技术:JAVA+mybatis+Maven+Vue等等组成,B/S模式+Maven管理等等。环境需要1.运行环境:最好是javajdk1

- JAVA毕设项目校园二手商品交易系统(java+VUE+Mybatis+Maven+Mysql)

贤杰程序源码

mybatisjavavue.js

JAVA毕设项目校园二手商品交易系统(java+VUE+Mybatis+Maven+Mysql)项目运行环境配置:Jdk1.8+Tomcat8.5+Mysql+HBuilderX(Webstorm也行)+Eclispe(IntelliJIDEA,Eclispe,MyEclispe,Sts都支持)。项目技术:JAVA+mybatis+Maven+Vue等等组成,B/S模式+Maven管理等等。环境需

- html5 css3 JavaScript响应式中文静态网页模板js源代码

Yucool01

html5javascriptcss3

该批次模板具备如下功能:首页,二级页面,三级页面登录页面均有,页面齐全,功能齐全,js+css+html,前端HTML纯静态页面,无后台,可用dreamweaver,sublime,webstorm等工具修改;部分网页模板效果图:有需要的同学可以下载学习一下:https://download.csdn.net/download/Yucool01/22408278https://download.c

- 【python】python指南(十四):**操作符解包字典传参

LDG_AGI

Pythonpython开发语言人工智能机器学习图像处理深度学习计算机视觉

目录一、引言二、**操作符应用2.1**操作符介绍2.2**操作符案例三、总结一、引言对于算法工程师来说,语言从来都不是关键,关键是快速学习以及解决问题的能力。大学的时候参加ACM/ICPC一直使用的是C语言,实习的时候做一个算法策略后台用的是php,毕业后做策略算法开发,因为要用spark,所以写了scala,后来用基于storm开发实时策略,用的java。至于python,从日常用hive做数

- 2018-10-31

马修斯

WebStormmac下如何安装WebStorm+破解1、下载软件最好的地址就是官网了下载地址选择好系统版本以后点击DOWNLOAD下载Webstorm2、安装双击下载好的安装包、将WebStromt拖入application文件夹,然后在Launchpad中找到并打开安装.png3、等待一段时间后,WebStorm将进行第一次运行,当出现如下页面的时候第一行选择Activate,第二行选择Act

- [附源码]JAVA毕业设计基于web旅游网站的设计与实现(系统+LW)

晶涛计算机毕设

java前端旅游

[附源码]JAVA毕业设计基于web旅游网站的设计与实现(系统+LW)目运行环境项配置:Jdk1.8+Tomcat8.5+Mysql+HBuilderX(Webstorm也行)+Eclispe(IntelliJIDEA,Eclispe,MyEclispe,Sts都支持)。项目技术:JAVA+mybatis+Maven+Vue等等组成,B/S模式+Maven管理等等。环境需要1.运行环境:最好是ja

- [附源码]java毕业设计基于web旅游网站的设计与实现

李会计算机程序设计

java前端旅游

项目运行环境配置:Jdk1.8+Tomcat7.0+Mysql+HBuilderX(Webstorm也行)+Eclispe(IntelliJIDEA,Eclispe,MyEclispe,Sts都支持)。项目技术:SSM+mybatis+Maven+Vue等等组成,B/S模式+Maven管理等等。环境需要1.运行环境:最好是javajdk1.8,我们在这个平台上运行的。其他版本理论上也可以。2.ID

- Module not found: Error: Can‘t resolve ‘sass-loader‘ in...

Coisini_甜柚か

web前端sassvue.jscss

"sass-loader"报错!!!Failedtocompile../src/main.jsModulenotfound:Error:Can'tresolve'sass-loader'in'E:\study_software\JetBrains\WebStorm2021\WebstormProjects\vue-element-admin-master'npminstallsass-loader

- pycharm 打开大文件代码洞察功能不可用的解决方法

weixin_44482092

intellij-ideajavaintellijidea

2019-11-13阅读1.3K0今天在使用WebStorm打开一个6.58MB的文件时,编辑器提示文件超过最大限制,代码洞察功能不可用。编辑器很多功能不可用,包括标签折叠、自动补齐、标签自动匹配等。Thefilesize(6.58MB)exceedsconfiquredlimit(2.56MB).Codeinsightfeaturesarenotavailable.其实JetBrains软件有一

- JAVA基础

灵静志远

位运算加载Date字符串池覆盖

一、类的初始化顺序

1 (静态变量,静态代码块)-->(变量,初始化块)--> 构造器

同一括号里的,根据它们在程序中的顺序来决定。上面所述是同一类中。如果是继承的情况,那就在父类到子类交替初始化。

二、String

1 String a = "abc";

JAVA虚拟机首先在字符串池中查找是否已经存在了值为"abc"的对象,根

- keepalived实现redis主从高可用

bylijinnan

redis

方案说明

两台机器(称为A和B),以统一的VIP对外提供服务

1.正常情况下,A和B都启动,B会把A的数据同步过来(B is slave of A)

2.当A挂了后,VIP漂移到B;B的keepalived 通知redis 执行:slaveof no one,由B提供服务

3.当A起来后,VIP不切换,仍在B上面;而A的keepalived 通知redis 执行slaveof B,开始

- java文件操作大全

0624chenhong

java

最近在博客园看到一篇比较全面的文件操作文章,转过来留着。

http://www.cnblogs.com/zhuocheng/archive/2011/12/12/2285290.html

转自http://blog.sina.com.cn/s/blog_4a9f789a0100ik3p.html

一.获得控制台用户输入的信息

&nbs

- android学习任务

不懂事的小屁孩

工作

任务

完成情况 搞清楚带箭头的pupupwindows和不带的使用 已完成 熟练使用pupupwindows和alertdialog,并搞清楚两者的区别 已完成 熟练使用android的线程handler,并敲示例代码 进行中 了解游戏2048的流程,并完成其代码工作 进行中-差几个actionbar 研究一下android的动画效果,写一个实例 已完成 复习fragem

- zoom.js

换个号韩国红果果

oom

它的基于bootstrap 的

https://raw.github.com/twbs/bootstrap/master/js/transition.js transition.js模块引用顺序

<link rel="stylesheet" href="style/zoom.css">

<script src=&q

- 详解Oracle云操作系统Solaris 11.2

蓝儿唯美

Solaris

当Oracle发布Solaris 11时,它将自己的操作系统称为第一个面向云的操作系统。Oracle在发布Solaris 11.2时继续它以云为中心的基调。但是,这些说法没有告诉我们为什么Solaris是配得上云的。幸好,我们不需要等太久。Solaris11.2有4个重要的技术可以在一个有效的云实现中发挥重要作用:OpenStack、内核域、统一存档(UA)和弹性虚拟交换(EVS)。

- spring学习——springmvc(一)

a-john

springMVC

Spring MVC基于模型-视图-控制器(Model-View-Controller,MVC)实现,能够帮助我们构建像Spring框架那样灵活和松耦合的Web应用程序。

1,跟踪Spring MVC的请求

请求的第一站是Spring的DispatcherServlet。与大多数基于Java的Web框架一样,Spring MVC所有的请求都会通过一个前端控制器Servlet。前

- hdu4342 History repeat itself-------多校联合五

aijuans

数论

水题就不多说什么了。

#include<iostream>#include<cstdlib>#include<stdio.h>#define ll __int64using namespace std;int main(){ int t; ll n; scanf("%d",&t); while(t--)

- EJB和javabean的区别

asia007

beanejb

EJB不是一般的JavaBean,EJB是企业级JavaBean,EJB一共分为3种,实体Bean,消息Bean,会话Bean,书写EJB是需要遵循一定的规范的,具体规范你可以参考相关的资料.另外,要运行EJB,你需要相应的EJB容器,比如Weblogic,Jboss等,而JavaBean不需要,只需要安装Tomcat就可以了

1.EJB用于服务端应用开发, 而JavaBeans

- Struts的action和Result总结

百合不是茶

strutsAction配置Result配置

一:Action的配置详解:

下面是一个Struts中一个空的Struts.xml的配置文件

<?xml version="1.0" encoding="UTF-8" ?>

<!DOCTYPE struts PUBLIC

&quo

- 如何带好自已的团队

bijian1013

项目管理团队管理团队

在网上看到博客"

怎么才能让团队成员好好干活"的评论,觉得写的比较好。 原文如下: 我做团队管理有几年了吧,我和你分享一下我认为带好团队的几点:

1.诚信

对团队内成员,无论是技术研究、交流、问题探讨,要尽可能的保持一种诚信的态度,用心去做好,你的团队会感觉得到。 2.努力提

- Java代码混淆工具

sunjing

ProGuard

Open Source Obfuscators

ProGuard

http://java-source.net/open-source/obfuscators/proguardProGuard is a free Java class file shrinker and obfuscator. It can detect and remove unused classes, fields, m

- 【Redis三】基于Redis sentinel的自动failover主从复制

bit1129

redis

在第二篇中使用2.8.17搭建了主从复制,但是它存在Master单点问题,为了解决这个问题,Redis从2.6开始引入sentinel,用于监控和管理Redis的主从复制环境,进行自动failover,即Master挂了后,sentinel自动从从服务器选出一个Master使主从复制集群仍然可以工作,如果Master醒来再次加入集群,只能以从服务器的形式工作。

什么是Sentine

- 使用代理实现Hibernate Dao层自动事务

白糖_

DAOspringAOP框架Hibernate

都说spring利用AOP实现自动事务处理机制非常好,但在只有hibernate这个框架情况下,我们开启session、管理事务就往往很麻烦。

public void save(Object obj){

Session session = this.getSession();

Transaction tran = session.beginTransaction();

try

- maven3实战读书笔记

braveCS

maven3

Maven简介

是什么?

Is a software project management and comprehension tool.项目管理工具

是基于POM概念(工程对象模型)

[设计重复、编码重复、文档重复、构建重复,maven最大化消除了构建的重复]

[与XP:简单、交流与反馈;测试驱动开发、十分钟构建、持续集成、富有信息的工作区]

功能:

- 编程之美-子数组的最大乘积

bylijinnan

编程之美

public class MaxProduct {

/**

* 编程之美 子数组的最大乘积

* 题目: 给定一个长度为N的整数数组,只允许使用乘法,不能用除法,计算任意N-1个数的组合中乘积中最大的一组,并写出算法的时间复杂度。

* 以下程序对应书上两种方法,求得“乘积中最大的一组”的乘积——都是有溢出的可能的。

* 但按题目的意思,是要求得这个子数组,而不

- 读书笔记-2

chengxuyuancsdn

读书笔记

1、反射

2、oracle年-月-日 时-分-秒

3、oracle创建有参、无参函数

4、oracle行转列

5、Struts2拦截器

6、Filter过滤器(web.xml)

1、反射

(1)检查类的结构

在java.lang.reflect包里有3个类Field,Method,Constructor分别用于描述类的域、方法和构造器。

2、oracle年月日时分秒

s

- [求学与房地产]慎重选择IT培训学校

comsci

it

关于培训学校的教学和教师的问题,我们就不讨论了,我主要关心的是这个问题

培训学校的教学楼和宿舍的环境和稳定性问题

我们大家都知道,房子是一个比较昂贵的东西,特别是那种能够当教室的房子...

&nb

- RMAN配置中通道(CHANNEL)相关参数 PARALLELISM 、FILESPERSET的关系

daizj

oraclermanfilespersetPARALLELISM

RMAN配置中通道(CHANNEL)相关参数 PARALLELISM 、FILESPERSET的关系 转

PARALLELISM ---

我们还可以通过parallelism参数来指定同时"自动"创建多少个通道:

RMAN > configure device type disk parallelism 3 ;

表示启动三个通道,可以加快备份恢复的速度。

- 简单排序:冒泡排序

dieslrae

冒泡排序

public void bubbleSort(int[] array){

for(int i=1;i<array.length;i++){

for(int k=0;k<array.length-i;k++){

if(array[k] > array[k+1]){

- 初二上学期难记单词三

dcj3sjt126com

sciet

concert 音乐会

tonight 今晚

famous 有名的;著名的

song 歌曲

thousand 千

accident 事故;灾难

careless 粗心的,大意的

break 折断;断裂;破碎

heart 心(脏)

happen 偶尔发生,碰巧

tourist 旅游者;观光者

science (自然)科学

marry 结婚

subject 题目;

- I.安装Memcahce 1. 安装依赖包libevent Memcache需要安装libevent,所以安装前可能需要执行 Shell代码 收藏代码

dcj3sjt126com

redis

wget http://download.redis.io/redis-stable.tar.gz

tar xvzf redis-stable.tar.gz

cd redis-stable

make

前面3步应该没有问题,主要的问题是执行make的时候,出现了异常。

异常一:

make[2]: cc: Command not found

异常原因:没有安装g

- 并发容器

shuizhaosi888

并发容器

通过并发容器来改善同步容器的性能,同步容器将所有对容器状态的访问都串行化,来实现线程安全,这种方式严重降低并发性,当多个线程访问时,吞吐量严重降低。

并发容器ConcurrentHashMap

替代同步基于散列的Map,通过Lock控制。

&nb

- Spring Security(12)——Remember-Me功能

234390216

Spring SecurityRemember Me记住我

Remember-Me功能

目录

1.1 概述

1.2 基于简单加密token的方法

1.3 基于持久化token的方法

1.4 Remember-Me相关接口和实现

- 位运算

焦志广

位运算

一、位运算符C语言提供了六种位运算符:

& 按位与

| 按位或

^ 按位异或

~ 取反

<< 左移

>> 右移

1. 按位与运算 按位与运算符"&"是双目运算符。其功能是参与运算的两数各对应的二进位相与。只有对应的两个二进位均为1时,结果位才为1 ,否则为0。参与运算的数以补码方式出现。

例如:9&am

- nodejs 数据库连接 mongodb mysql

liguangsong

mongodbmysqlnode数据库连接

1.mysql 连接

package.json中dependencies加入

"mysql":"~2.7.0"

执行 npm install

在config 下创建文件 database.js

- java动态编译

olive6615

javaHotSpotjvm动态编译

在HotSpot虚拟机中,有两个技术是至关重要的,即动态编译(Dynamic compilation)和Profiling。

HotSpot是如何动态编译Javad的bytecode呢?Java bytecode是以解释方式被load到虚拟机的。HotSpot里有一个运行监视器,即Profile Monitor,专门监视

- Storm0.9.5的集群部署配置优化

roadrunners

优化storm.yaml

nimbus结点配置(storm.yaml)信息:

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional inf

- 101个MySQL 的调节和优化的提示

tomcat_oracle

mysql

1. 拥有足够的物理内存来把整个InnoDB文件加载到内存中——在内存中访问文件时的速度要比在硬盘中访问时快的多。 2. 不惜一切代价避免使用Swap交换分区 – 交换时是从硬盘读取的,它的速度很慢。 3. 使用电池供电的RAM(注:RAM即随机存储器)。 4. 使用高级的RAID(注:Redundant Arrays of Inexpensive Disks,即磁盘阵列

- zoj 3829 Known Notation(贪心)

阿尔萨斯

ZOJ

题目链接:zoj 3829 Known Notation

题目大意:给定一个不完整的后缀表达式,要求有2种不同操作,用尽量少的操作使得表达式完整。

解题思路:贪心,数字的个数要要保证比∗的个数多1,不够的话优先补在开头是最优的。然后遍历一遍字符串,碰到数字+1,碰到∗-1,保证数字的个数大于等1,如果不够减的话,可以和最后面的一个数字交换位置(用栈维护十分方便),因为添加和交换代价都是1