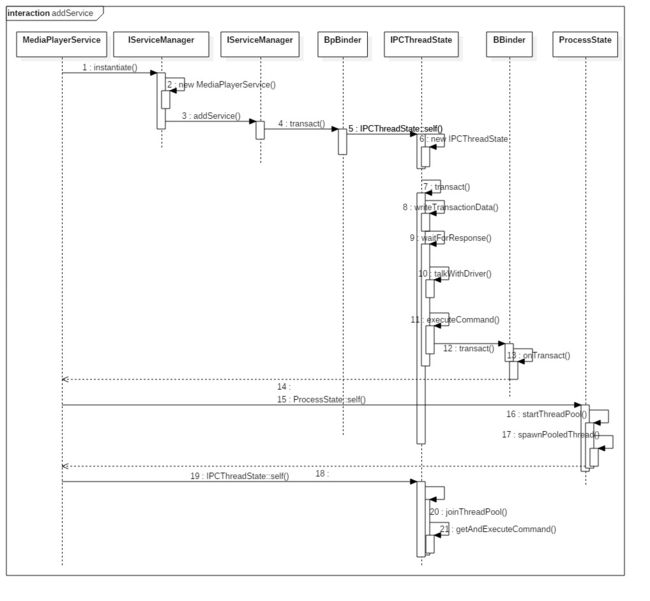

Part 4: Binder (IV), Service Registration

Entry point

main_mediaserver.cpp

sp<ProcessState> proc(ProcessState::self()); // 1

sp<IServiceManager> sm = defaultServiceManager(); // 2

...

MediaPlayerService::instantiate();

...

ProcessState::self()->startThreadPool();

IPCThreadState::self()->joinThreadPool();

Lines 1 and 2 obtain the ServiceManager.

1. MediaPlayerService::instantiate()

void MediaPlayerService::instantiate() {

defaultServiceManager()->addService(

String16("media.player"), new MediaPlayerService());

}

1. defaultServiceManager returns a BpServiceManager.

2. BpServiceManager has a member mRemote that points to a BpBinder, and it also implements the business functions of IServiceManager.

3. IServiceManager declares everything ServiceManager can do, such as addService and getService.

2. IServiceManager::addService

virtual status_t addService(const String16& name, const sp<IBinder>& service,

bool allowIsolated)

{

Parcel data, reply;

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

data.writeStrongBinder(service);

data.writeInt32(allowIsolated ? 1 : 0);

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

return err == NO_ERROR ? reply.readExceptionCode() : err;

}

1: Create the data and reply objects. data carries the request and reply receives the response.

2: Fill in the request data.

3: Call remote()->transact. remote() is a BpBinder object held by the BpServiceManager that defaultServiceManager returned.

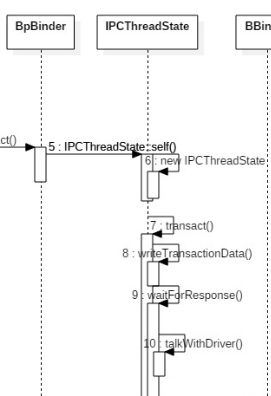

3. BpBinder::transact

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

if (mAlive) {

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

BpBinder, the Binder proxy, does no work of its own in transact; it hands the call to IPCThreadState::transact.

4. IPCThreadState::self

IPCThreadState* IPCThreadState::self()

{

if (gHaveTLS) {

restart:

const pthread_key_t k = gTLS;

IPCThreadState* st = (IPCThreadState*)pthread_getspecific(k);

if (st) return st;

return new IPCThreadState;

}

if (gShutdown) return NULL;

pthread_mutex_lock(&gTLSMutex);

if (!gHaveTLS) {

if (pthread_key_create(&gTLS, threadDestructor) != 0) {

pthread_mutex_unlock(&gTLSMutex);

return NULL;

}

gHaveTLS = true;

}

pthread_mutex_unlock(&gTLSMutex);

goto restart;

}

5. new IPCThreadState

IPCThreadState::IPCThreadState()

: mProcess(ProcessState::self()),

mMyThreadId(androidGetTid()),

mStrictModePolicy(0),

mLastTransactionBinderFlags(0)

{

pthread_setspecific(gTLS, this);

clearCaller();

mIn.setDataCapacity(256);

mOut.setDataCapacity(256);

}

Every thread has its own IPCThreadState, and every IPCThreadState has mIn and mOut: mIn receives data from the Binder driver and mOut holds data to be sent to it.
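The per-thread lookup in steps 4 and 5 can be sketched in a few lines. This is a simplified stand-in rather than the libbinder code: it uses C++11 `thread_local` in place of `pthread_key_create`/`pthread_getspecific`, and `ThreadState`, `in`, `out` and `checkSameThreadIdentity` are hypothetical names. The 256-byte capacities only mirror the constructor shown above.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for IPCThreadState: one instance per thread,
// each with its own input and output buffers.
class ThreadState {
public:
    // Returns the calling thread's instance, creating it on first use.
    static ThreadState* self() {
        // thread_local gives each thread its own object, which plays the
        // role of the pthread key (gTLS) in the original code.
        thread_local ThreadState state;
        return &state;
    }

    std::vector<unsigned char> in;   // data received from the driver (mIn)
    std::vector<unsigned char> out;  // data queued for the driver (mOut)

private:
    ThreadState() {
        in.reserve(256);
        out.reserve(256);
    }
};

// Usage: repeated calls on the same thread return the same object.
inline void checkSameThreadIdentity() {
    assert(ThreadState::self() == ThreadState::self());
}
```

A second thread calling `ThreadState::self()` would get a different object, which is why each thread can fill its own `out` buffer without locking.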

6. IPCThreadState::transact

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);

err = waitForResponse(reply);

// for a ONEWAY call the reply is not awaited

return err;

}

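IPCThreadState::transact does two things:

1. Send the data.

2. Receive the reply.

BC_TRANSACTION is a command code sent to the Binder driver. Command codes that the Binder driver sends back to the application start with BR_.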

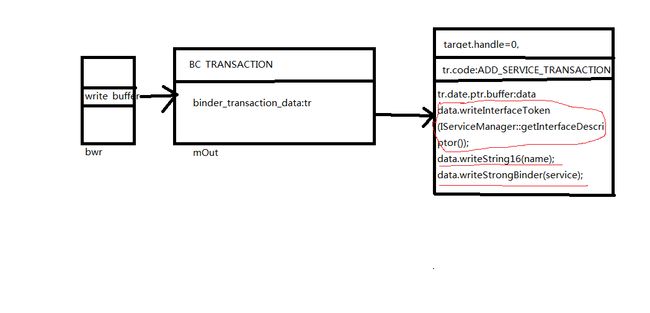

7. IPCThreadState::writeTransactionData

writeTransactionData(BC_TRANSACTION, 0, 0, ADD_SERVICE_TRANSACTION, data, NULL)

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

// store the arguments in the binder_transaction_data object tr

binder_transaction_data tr;

tr.target.handle = handle; // tr.target.handle keeps the handle that was passed in

tr.code = code;

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(size_t);

tr.data.ptr.offsets = data.ipcObjects();

} else if (statusBuffer) {

} else {

return (mLastError = err);

}

// write cmd and tr to mOut

mOut.writeInt32(cmd);

mOut.write(&tr, sizeof(tr));

return NO_ERROR;

}

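The handle, code, data and other arguments are stored in the binder_transaction_data object tr, and then BC_TRANSACTION and tr are written to mOut.

handle: identifies the target. For service registration the target is SM, whose handle is 0. Handle 0 maps to binder_context_mgr_node, the Binder node that represents SM.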
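To make the layout of mOut concrete, here is a minimal sketch of "command word followed by the raw struct". It is not the real Parcel API: `TxData`, `writeTransaction` and `peekCommand` are hypothetical, and the two constants are placeholders standing in for the values defined in the binder headers.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, trimmed-down version of binder_transaction_data.
struct TxData {
    uint32_t handle;     // target: 0 means the context manager (ServiceManager)
    uint32_t code;       // e.g. ADD_SERVICE_TRANSACTION
    uint32_t flags;
    uint32_t data_size;  // size of the payload the real struct points to
};

// Placeholder values; the real constants come from the binder headers.
constexpr uint32_t kCmdTransaction = 1;  // stands in for BC_TRANSACTION
constexpr uint32_t kCodeAddService = 3;  // stands in for ADD_SERVICE_TRANSACTION

// Appends the command word and then the raw struct, in the same order as
// mOut.writeInt32(cmd) and mOut.write(&tr, sizeof(tr)).
void writeTransaction(std::vector<uint8_t>& out, uint32_t cmd, const TxData& tr) {
    const uint8_t* c = reinterpret_cast<const uint8_t*>(&cmd);
    out.insert(out.end(), c, c + sizeof(cmd));
    const uint8_t* p = reinterpret_cast<const uint8_t*>(&tr);
    out.insert(out.end(), p, p + sizeof(tr));
}

// Reads the leading command word back out of the buffer.
uint32_t peekCommand(const std::vector<uint8_t>& out) {
    assert(out.size() >= sizeof(uint32_t));
    uint32_t cmd;
    std::memcpy(&cmd, out.data(), sizeof(cmd));
    return cmd;
}
```

Calling `writeTransaction(out, kCmdTransaction, TxData{0, kCodeAddService, 0, 64})` leaves `out` holding four bytes of command followed by `sizeof(TxData)` bytes of transaction record, which is the shape the driver later parses.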

8. IPCThreadState::waitForResponse

waitForResponse(&reply, NULL);

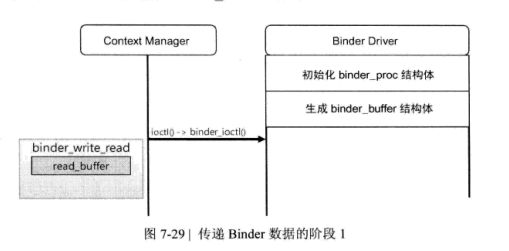

9. IPCThreadState::talkWithDriver

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

if (mProcess->mDriverFD <= 0) {

return -EBADF;

}

binder_write_read bwr;

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

// point the write side of bwr at the data queued in mOut

bwr.write_size = outAvail;

bwr.write_buffer = (long unsigned int)mOut.data();

//

if (doReceive && needRead) { // any data received will be placed in mIn

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (long unsigned int)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

// nothing to write and nothing to read: return immediately

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

// reset both consumed counters to 0

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

#if defined(HAVE_ANDROID_OS)

// talk to the Binder driver through ioctl

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

} while (err == -EINTR);

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) { // the driver consumed some of the written data

if (bwr.write_consumed < (ssize_t)mOut.dataSize())

mOut.remove(0, bwr.write_consumed); // drop the part that was consumed

else

mOut.setDataSize(0); // everything in the write buffer was consumed

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

return NO_ERROR;

}

return err;

}
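The bookkeeping after the ioctl returns is easy to get wrong, so here is a small sketch of the write-side trimming on its own. It assumes a plain byte vector in place of the Parcel mOut, and `dropConsumed` is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Drops the first `consumed` bytes of `out`, mirroring how talkWithDriver
// trims mOut after the driver reports bwr.write_consumed.
void dropConsumed(std::vector<unsigned char>& out, std::size_t consumed) {
    assert(consumed <= out.size());
    if (consumed < out.size()) {
        // Partial write: keep the unsent tail at the front of the buffer.
        out.erase(out.begin(), out.begin() + static_cast<std::ptrdiff_t>(consumed));
    } else {
        out.clear();  // everything was consumed
    }
}
```

With a four-byte buffer and `consumed == 3`, one byte remains queued for the next call, which matches the `mOut.remove(0, bwr.write_consumed)` branch.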

10. IPCThreadState::executeCommand

executeCommand(BR_TRANSACTION)

status_t IPCThreadState::executeCommand(int32_t cmd)

{

BBinder* obj;

RefBase::weakref_type* refs;

status_t result = NO_ERROR;

switch (cmd) {

case BR_TRANSACTION:

{

binder_transaction_data tr;

// read the incoming transaction from mIn

result = mIn.read(&tr, sizeof(tr));

Parcel buffer;

if (tr.target.ptr) {

// tr.cookie holds a BBinder. BnService derives from BBinder, so b is really the BnService

sp<BBinder> b((BBinder*)tr.cookie);

const status_t error = b->transact(tr.code, buffer, &reply, tr.flags);

if (error < NO_ERROR) reply.setError(error);

} else {

}

}

break;

default:

printf("*** BAD COMMAND %d received from Binder driver\n", cmd);

result = UNKNOWN_ERROR;

break;

}

if (result != NO_ERROR) {

mLastError = result;

}

return result;

}

11. BBinder::transact

status_t BBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

data.setDataPosition(0);

status_t err = NO_ERROR;

switch (code) {

case PING_TRANSACTION:

reply->writeInt32(pingBinder());

break;

default:

err = onTransact(code, data, reply, flags);

break;

}

if (reply != NULL) {

reply->setDataPosition(0);

}

return err;

}

12. BBinder::onTransact

status_t BBinder::onTransact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t /*flags*/)

{

switch (code) {

case INTERFACE_TRANSACTION:

reply->writeString16(getInterfaceDescriptor());

return NO_ERROR;

case DUMP_TRANSACTION: {

int fd = data.readFileDescriptor();

int argc = data.readInt32();

Vector<String16> args;

for (int i = 0; i < argc && data.dataAvail() > 0; i++) {

args.add(data.readString16());

}

return dump(fd, args);

}

case SYSPROPS_TRANSACTION: {

report_sysprop_change();

return NO_ERROR;

}

default:

return UNKNOWN_TRANSACTION;

}

}

In the MediaPlayerService case, BnMediaPlayerService inherits from BBinder and overrides onTransact, so the method that actually runs is BnMediaPlayerService::onTransact.
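The dispatch from transact to the overriding onTransact is plain virtual-function dispatch. The sketch below shows the pattern with made-up types: `LocalBinder` plays the role of BBinder, `PlayerService` plays the role of BnMediaPlayerService, and the codes and status values are placeholders, not the real constants.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

constexpr int kOk = 0;
constexpr int kUnknownTransaction = -1;  // placeholder for UNKNOWN_TRANSACTION
constexpr uint32_t kPing = 100;          // placeholder for PING_TRANSACTION
constexpr uint32_t kPlay = 1;            // hypothetical service-specific code

// Plays the role of BBinder: handles generic codes, forwards the rest.
class LocalBinder {
public:
    virtual ~LocalBinder() = default;

    int transact(uint32_t code, const std::string& data, std::string* reply) {
        assert(reply != nullptr);
        if (code == kPing) {
            *reply = "pong";
            return kOk;
        }
        return onTransact(code, data, reply);  // virtual: the subclass runs first
    }

protected:
    virtual int onTransact(uint32_t, const std::string&, std::string*) {
        return kUnknownTransaction;
    }
};

// Plays the role of BnMediaPlayerService: overrides onTransact.
class PlayerService : public LocalBinder {
protected:
    int onTransact(uint32_t code, const std::string& data, std::string* reply) override {
        if (code == kPlay) {
            *reply = "playing " + data;
            return kOk;
        }
        // Anything this service does not recognize falls back to the base class.
        return LocalBinder::onTransact(code, data, reply);
    }
};
```

The caller only ever holds a `LocalBinder*`, just as executeCommand only holds a `BBinder*`, yet service-specific codes still reach the derived class.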

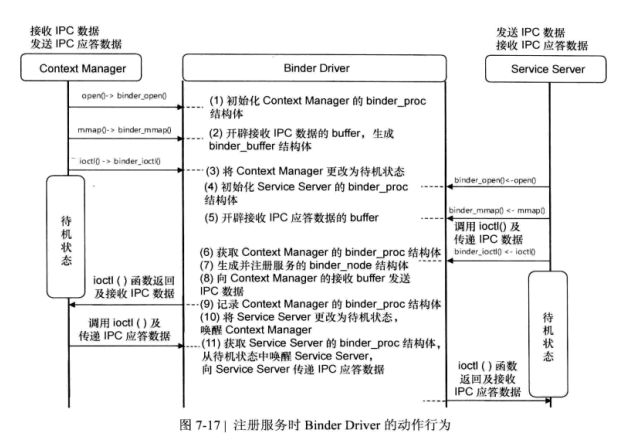

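Summary

Steps 1 to 12 cover service registration in the native layer. Inside talkWithDriver, the call ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) is where the process talks to the Binder driver. The rest of this article analyzes the same flow from the driver side.

1. Phase one

service_manager.c opens the Binder driver with open, creates the buffer that receives IPC data with mmap, and enters the waiting state through ioctl.

1.1 The main function of service_manager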

int main(int argc, char **argv)

{

struct binder_state *bs;

void *svcmgr = BINDER_SERVICE_MANAGER;

bs = binder_open(128*1024);

if (binder_become_context_manager(bs)) {

}

svcmgr_handle = svcmgr;

binder_loop(bs, svcmgr_handler);

return 0;

}

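1. binder_open initializes bs (bs->mapped comes from mmap).

2. binder_become_context_manager registers this process as the context manager.

3. The process enters a loop; messages are handled by svcmgr_handler.

1.2 binder_open (this is the binder_open in service_manager's own binder.c)

Opens the Binder driver and maps a region of memory.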

struct binder_state *binder_open(unsigned mapsize)

{

struct binder_state *bs;

bs = malloc(sizeof(*bs));

if (!bs) {

}

bs->fd = open("/dev/binder", O_RDWR);

if (bs->fd < 0) {

goto fail_open;

}

bs->mapsize = mapsize;

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);

if (bs->mapped == MAP_FAILED) {

goto fail_map;

}

return bs;

}

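1. Allocate the binder_state object bs.

2. bs->fd is the file descriptor returned by opening /dev/binder.

3. bs->mapsize is the requested size of the mapped region.

4. bs->mapped is the address of the mapped region.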

struct binder_state

{

int fd;

void *mapped;

unsigned mapsize;

};

1.3 binder_become_context_manager

int binder_become_context_manager(struct binder_state *bs)

{

return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);

}

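This call reaches the BINDER_SET_CONTEXT_MGR branch of binder_ioctl in the Binder driver. The key statement there is binder_context_mgr_node = binder_new_node(proc, NULL, NULL);

In other words, the driver creates a binder_node for the service_manager process (proc) and stores it in binder_context_mgr_node.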

1.4 binder_loop

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

unsigned readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(unsigned));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (unsigned) readbuf;

// read_size > 0, write_size = 0

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

}

res = binder_parse(bs, 0, readbuf, bwr.read_consumed, func);

}

}

The first lines of binder_loop initialize bwr so that the read side has a buffer and the write side is empty, and then call ioctl.

1.5 binder_ioctl

switch (cmd) {

case BINDER_WRITE_READ: {

struct binder_write_read bwr; // the kernel-space copy of binder_write_read

if (size != sizeof(struct binder_write_read)) { // validate the size

}

// copy the data from user space into kernel space

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

}

// bwr.write_size is 0 here, so this branch is skipped for now

if (bwr.write_size > 0) {

}

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, (void __user *)bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);

}

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

// copy the data from kernel space back to user space

}

break;

}

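Because bwr.read_size > 0, execution reaches binder_thread_read.

1.6 The binder_thread_read function

ret = wait_event_interruptible_exclusive(proc->wait, binder_has_proc_work(proc, thread));

Inside binder_thread_read, wait_event_interruptible_exclusive puts the service_manager process into the waiting state.

2. Phase two

The server side, meaning the service that wants to register with SM, goes through binder_open, binder_mmap and so on to initialize its binder_proc and to create the binder_buffer that receives IPC reply data. All of this is done through ProcessState.

The walkthrough below uses mediaserver as the example.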

sp<ProcessState> proc(ProcessState::self()); // 1

sp<IServiceManager> sm = defaultServiceManager(); // 2

MediaPlayerService::instantiate();

2.1 ProcessState::self()

sp<ProcessState> ProcessState::self()

{

Mutex::Autolock _l(gProcessMutex);

if (gProcess != NULL) {

return gProcess;

}

gProcess = new ProcessState;

return gProcess;

}

ProcessState::ProcessState()

: mDriverFD(open_driver())

, mVMStart(MAP_FAILED)

, mManagesContexts(false)

, mBinderContextCheckFunc(NULL)

, mBinderContextUserData(NULL)

, mThreadPoolStarted(false)

, mThreadPoolSeq(1)

{

if (mDriverFD >= 0) {

#if !defined(HAVE_WIN32_IPC)

// mmap the binder, providing a chunk of virtual address space to receive transactions.

mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);

if (mVMStart == MAP_FAILED) {

}

#else

mDriverFD = -1;

#endif

}

}

static int open_driver()

{

int fd = open("/dev/binder", O_RDWR);

if (fd >= 0) {

fcntl(fd, F_SETFD, FD_CLOEXEC);

int vers;

status_t result = ioctl(fd, BINDER_VERSION, &vers);

if (result == -1) {

}

if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {

}

size_t maxThreads = 15;

result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);

} else {

}

return fd;

}

1. Build a ProcessState as a singleton.

2. Open the Binder driver.

3. Map a region with mmap.

4. Set the maximum number of threads.

2.2 defaultServiceManager

sp<IServiceManager> defaultServiceManager()

{

if (gDefaultServiceManager != NULL) return gDefaultServiceManager;

{

AutoMutex _l(gDefaultServiceManagerLock);

while (gDefaultServiceManager == NULL) {

gDefaultServiceManager = interface_cast<IServiceManager>(

ProcessState::self()->getContextObject(NULL));

if (gDefaultServiceManager == NULL)

sleep(1);

}

}

return gDefaultServiceManager;

}

In short, defaultServiceManager returns a BpServiceManager whose mRemote is a BpBinder(0).

2.3 MediaPlayerService::instantiate(): fill in the data and send it

virtual status_t addService(const String16& name, const sp<IBinder>& service,

bool allowIsolated)

{

Parcel data, reply;

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

data.writeStrongBinder(service);

data.writeInt32(allowIsolated ? 1 : 0);

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

return err == NO_ERROR ? reply.readExceptionCode() : err;

}

1: Create the data and reply objects. data carries the request and reply receives the response.

2: Fill in the request data.

3: Call remote()->transact. remote() is a BpBinder object held by the BpServiceManager that defaultServiceManager returned.

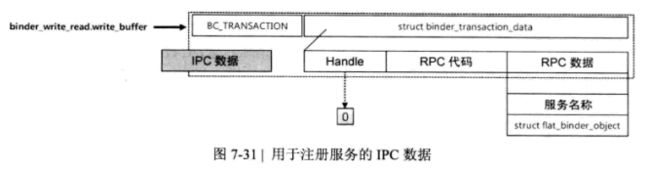

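The ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) call in talkWithDriver traps into the kernel, which is where the Binder driver analysis begins. At that point handle, code, data and the other fields sit in the binder_transaction_data object tr, and tr together with BC_TRANSACTION sits in mOut. The handle identifies the target: for service registration the target is SM, so handle is 0, which maps to binder_context_mgr_node, the Binder node that represents SM. In talkWithDriver, bwr.write_buffer = (long unsigned int)mOut.data(); points the binder_write_read structure at that data.

Sections 2.1 to 2.3 cover the native-layer calls; the Binder driver side is analyzed below.

The Service Server also builds a binder_write_read structure containing write_buffer and read_buffer. read_buffer receives the IPC reply data and write_buffer carries the outgoing IPC data.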

2.4 ioctl

ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr)

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

thread = binder_get_thread(proc);

if (thread == NULL) {

}

switch (cmd) {

case BINDER_WRITE_READ: {

struct binder_write_read bwr; // the driver-side copy of binder_write_read

if (size != sizeof(struct binder_write_read)) {

}

// copy the data from user space into the driver

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

}

if (bwr.write_size > 0) {

ret = binder_thread_write(proc, thread, (void __user *)bwr.write_buffer, bwr.write_size, &bwr.write_consumed);

}

break;

}

}

ret = 0;

return ret;

}

Because the write side is populated, binder_thread_write runs.

2.5 binder_thread_write

int binder_thread_write(struct binder_proc *proc, struct binder_thread *thread,

void __user *buffer, int size, signed long *consumed)

{

uint32_t cmd;

// ptr and end point to the start and end of the data

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

// get_user copies the command word into the kernel; here it is BC_TRANSACTION

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t); // the command word has been consumed; advance past it

switch (cmd) {

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

// copy the binder_transaction_data tr from user space into kernel space

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr, cmd == BC_REPLY);

break;

}

default:

return -EINVAL;

}

*consumed = ptr - buffer;

}

return 0;

}

The function reads the command from the data, takes the BC_TRANSACTION branch, copies tr from user space into kernel space, and calls binder_transaction.
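The ptr/end/consumed loop can be tried out in user space. The sketch below walks a buffer of [command][record] pairs the same way, using `memcpy` where the driver uses get_user and copy_from_user. `TxData`, `parseCommands` and the command value are hypothetical stand-ins, and the bounds checks are additions of this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

constexpr uint32_t kCmdTransaction = 1;  // placeholder for BC_TRANSACTION

// Hypothetical, trimmed-down transaction record.
struct TxData {
    uint32_t handle;
    uint32_t code;
};

// Reads the command word, advances, copies the record, advances, and updates
// *consumed after each complete record.
// Returns 0 on success, -1 on an unknown command or a truncated record.
int parseCommands(const uint8_t* buffer, std::size_t size, std::size_t* consumed,
                  std::vector<TxData>* seen) {
    assert(buffer && consumed && seen);
    const uint8_t* ptr = buffer + *consumed;
    const uint8_t* end = buffer + size;
    while (ptr < end) {
        if (static_cast<std::size_t>(end - ptr) < sizeof(uint32_t)) return -1;
        uint32_t cmd;
        std::memcpy(&cmd, ptr, sizeof(cmd));
        ptr += sizeof(cmd);  // skip past the 4-byte command word
        if (cmd != kCmdTransaction) return -1;
        if (static_cast<std::size_t>(end - ptr) < sizeof(TxData)) return -1;
        TxData tr;
        std::memcpy(&tr, ptr, sizeof(tr));
        ptr += sizeof(tr);
        seen->push_back(tr);  // the driver would call binder_transaction here
        *consumed = static_cast<std::size_t>(ptr - buffer);
    }
    return 0;
}
```

Because `*consumed` is updated after every record, a caller that stops early knows exactly how many bytes were handled, which is what lets talkWithDriver trim mOut afterwards.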

2.6 binder_transaction函数

binder_transaction(proc, thread, &tr, cmd == BC_REPLY),其中cmd是BC_TRANSACTION

该函数的功能:Binder寻址,复制IPC数据,生成以及检索Binder节点等

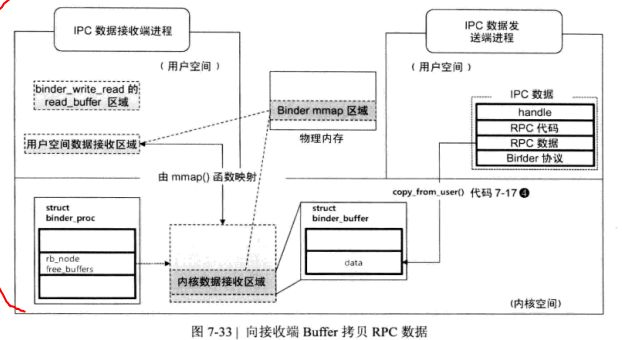

第一步:复制IPC数据

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply)

{

struct binder_transaction *t;

struct binder_proc *target_proc;

struct binder_node *target_node = NULL;

struct list_head *target_list;

wait_queue_head_t *target_wait;

struct binder_transaction *in_reply_to = NULL;

if (reply) {

// this is not a reply, so this branch is skipped for now

} else {

if (tr->target.handle) {

// target.handle is non-zero

struct binder_ref *ref;

// addressing: find the binder_ref that matches the handle

ref = binder_get_ref(proc, tr->target.handle);

// the node of that binder_ref is the target Binder node

target_node = ref->node;

} else {

// handle 0 means binder_context_mgr_node,

// which was assigned when the context manager called binder_become_context_manager (BINDER_SET_CONTEXT_MGR)

target_node = binder_context_mgr_node;

}

target_proc = target_node->proc;//目标进程

}

if (target_thread) { // target_thread was never assigned on this path

...

} else {

// use the todo and wait queues of the target process

target_list = &target_proc->todo;

target_wait = &target_proc->wait;

}

// allocate a binder_transaction object t

t = kzalloc(sizeof(*t), GFP_KERNEL);

if (t == NULL) {

}

binder_stats_created(BINDER_STAT_TRANSACTION);

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

binder_stats_created(BINDER_STAT_TRANSACTION_COMPLETE);

t->debug_id = ++binder_last_id;

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread; // record the Service Server's thread as the sender

else

t->from = NULL;

// copy the transaction code from tr (here ADD_SERVICE_TRANSACTION) into t

t->code = tr->code;

// allocate a buffer in the target process for the data

t->buffer = binder_alloc_buf(target_proc, tr->data_size,

tr->offsets_size, !reply && (t->flags & TF_ONE_WAY));

if (t->buffer == NULL) {

}

t->buffer->allow_user_free = 0;

t->buffer->transaction = t;

t->buffer->target_node = target_node;

if (target_node)

binder_inc_node(target_node, 1, 0, NULL);

offp = (size_t *)(t->buffer->data + ALIGN(tr->data_size, sizeof(void *)));

// copy the payload referenced by tr into t->buffer

if (copy_from_user(t->buffer->data, tr->data.ptr.buffer, tr->data_size)) {

}

if (copy_from_user(offp, tr->data.ptr.offsets, tr->offsets_size)) {

}

This step locates the target process and copies the IPC data into its mapped region.

Step 2: create the Binder node

fp = (struct flat_binder_object *)(t->buffer->data + *offp);

switch (fp->type) {

case BINDER_TYPE_BINDER:

case BINDER_TYPE_WEAK_BINDER: {

struct binder_ref *ref;

struct binder_node *node = binder_get_node(proc, fp->binder);

if (node == NULL) {

node = binder_new_node(proc, fp->binder, fp->cookie);

}

ref = binder_get_ref_for_node(target_proc, node);

fp->handle = ref->desc;

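During service registration the type of the flat_binder_object is BINDER_TYPE_BINDER.

1. binder_get_node looks up whether the node already exists in proc; if it does not, a new node is created.

2. binder_new_node creates the new node and registers the new binder_node in binder_proc.

3. binder_get_ref_for_node checks whether the given binder_proc already holds a reference to the node. If so, it returns that binder_ref; otherwise it creates a binder_ref and attaches the binder_node to it.

The binder_ref structure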

binder_ref{

struct binder_proc *proc;

struct binder_node *node;

uint32_t desc;

}

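proc: the binder_proc that owns this binder_ref

node: the binder_node that this binder_ref refers to

desc: a number that identifies Binder nodes within the current process; it is incremented whenever a reference to a new Binder node is created

In ref = binder_get_ref_for_node(target_proc, node); the first argument is the context manager's proc and the second is the Binder node. The effect is that the Service Server's binder_node is registered in the context manager's binder_proc.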
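The "find or create a reference, then hand out its number" behavior can be modeled with two maps. This is only an illustration of the idea, not the driver's data structures: `Node` and `RefTable` are hypothetical, and starting ordinary handles at 1 (keeping 0 for the context manager) is an assumption of the sketch.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

struct Node { int id; };  // hypothetical stand-in for binder_node

// Hypothetical stand-in for the per-process reference table in binder_proc.
class RefTable {
public:
    // Returns the handle (desc) this process uses for `node`, creating a new
    // reference with the next free number if none exists yet.
    uint32_t refForNode(const Node* node) {
        assert(node != nullptr);
        auto it = byNode_.find(node);
        if (it != byNode_.end()) return it->second;
        uint32_t desc = nextDesc_++;
        byNode_[node] = desc;
        byDesc_[desc] = node;
        return desc;
    }

    // Reverse lookup, in the spirit of binder_get_ref when a handle arrives.
    const Node* nodeForHandle(uint32_t desc) const {
        auto it = byDesc_.find(desc);
        return it == byDesc_.end() ? nullptr : it->second;
    }

private:
    // Assumption of this sketch: 0 is kept for the context manager,
    // so ordinary references start at 1.
    uint32_t nextDesc_ = 1;
    std::map<const Node*, uint32_t> byNode_;
    std::map<uint32_t, const Node*> byDesc_;
};
```

In the registration flow the table belongs to the context manager, so the number returned for the media service's node is the handle that ServiceManager stores next to the name "media.player".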

Step 3: wake up the target process, ServiceManager

t->work.type = BINDER_WORK_TRANSACTION;

// target_list is target_proc->todo, the todo queue of the target process

// so this adds a binder_work item to that todo queue

list_add_tail(&t->work.entry, target_list);

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

list_add_tail(&tcomplete->entry, &thread->todo);

if (target_wait)

wake_up_interruptible(target_wait);

Related structures

struct binder_transaction {

int debug_id;

struct binder_work work;

struct binder_thread *from;

struct binder_transaction *from_parent;

struct binder_proc *to_proc;

struct binder_thread *to_thread;

struct binder_transaction *to_parent;

unsigned need_reply:1;

struct binder_buffer *buffer;

unsigned int code;

unsigned int flags;

long priority;

long saved_priority;

uid_t sender_euid;

};

struct binder_work {

struct list_head entry;

enum {

BINDER_WORK_TRANSACTION = 1,

BINDER_WORK_TRANSACTION_COMPLETE,

BINDER_WORK_NODE,

BINDER_WORK_DEAD_BINDER,

BINDER_WORK_DEAD_BINDER_AND_CLEAR,

BINDER_WORK_CLEAR_DEATH_NOTIFICATION,

} type;

};

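Phase three: the Service Server enters the waiting state

The Service Server likewise runs binder_thread_read inside ioctl and then enters the waiting state.

Phase four

ServiceManager wakes from the waiting state and first examines the transaction data, which originated in the Service Server.

In binder_thread_read, the code after wait_event_interruptible_exclusive is what runs once the process wakes up.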

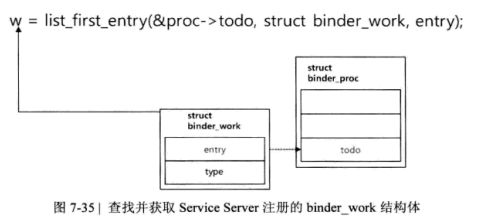

4.1 Obtain the binder_transaction t

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

if (!list_empty(&proc->todo) && wait_for_proc_work)

w = list_first_entry(&proc->todo, struct binder_work, entry);

else {

}

if (end - ptr < sizeof(tr) + 4)

break;

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

t = container_of(w, struct binder_transaction, work);

} break;

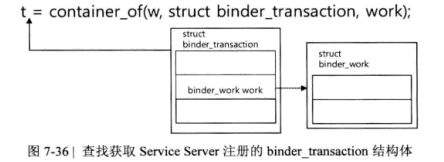

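1. Take a binder_work structure from the todo list.

2. Recover the enclosing binder_transaction from the binder_work.

container_of takes a pointer to a member (its first argument) and returns the start address of the structure that contains it.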
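The pointer arithmetic behind container_of is short enough to show directly. The sketch uses the standard `offsetof` macro rather than the kernel macro itself, and `Work`, `Transaction` and `transactionFromWork` are hypothetical stand-ins for binder_work, binder_transaction and the `container_of(w, struct binder_transaction, work)` call.

```cpp
#include <cassert>
#include <cstddef>

struct Work { int type; };  // stand-in for binder_work

struct Transaction {        // stand-in for binder_transaction
    int debug_id;
    Work work;              // embedded member, as in the driver
    unsigned code;
};

// Recovers the enclosing Transaction from a pointer to its `work` member by
// subtracting the member's offset, which is what container_of does.
Transaction* transactionFromWork(Work* w) {
    assert(w != nullptr);
    char* base = reinterpret_cast<char*>(w) - offsetof(Transaction, work);
    return reinterpret_cast<Transaction*>(base);
}
```

This is why the todo list can link bare binder_work items: the list code never needs to know which larger structure each item is embedded in.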

4.2 Copy the data from t into tr

tr.code = t->code;

tr.flags = t->flags;

tr.sender_euid = t->sender_euid;

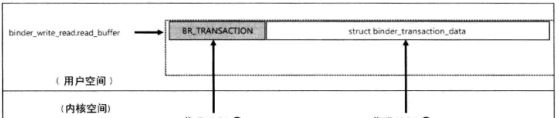

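4.3 Pass the data to user space, that is, into the address space of the SM process

cmd = BR_TRANSACTION; // set the Binder protocol command to BR_TRANSACTION

if (put_user(cmd, (uint32_t __user *)ptr)) // ptr points into the read_buffer of bwr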

return -EFAULT;

ptr += sizeof(uint32_t);

if (copy_to_user(ptr, &tr, sizeof(tr))) // place the binder_transaction_data in the read buffer

return -EFAULT;

ptr += sizeof(tr);

binder_stat_br(proc, thread, cmd);

list_del(&t->work.entry);

t->buffer->allow_user_free = 1;

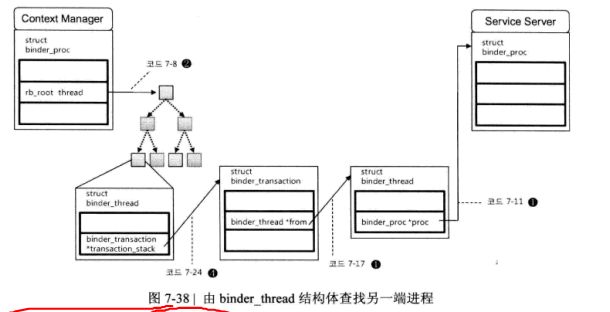

if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {

t->to_parent = thread->transaction_stack;

t->to_thread = thread;

thread->transaction_stack = t;

}

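Phase five

The context manager processes the IPC data it received and then sends reply data to the Service Server to report completion. The protocol commands involved are BC_REPLY (context manager -> Binder driver) and BR_REPLY (Binder driver -> Service Server).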

if (reply) {

in_reply_to = thread->transaction_stack;

thread->transaction_stack = in_reply_to->to_parent;

target_thread = in_reply_to->from;

target_proc = target_thread->proc;

}

This branch finds the Service Server side and passes the data to it.
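How the reply finds its way back is worth seeing in isolation: the receiver pushes the request onto its transaction stack when the request is delivered, and the reply path pops it to learn who sent it. The sketch below is a simplified model with hypothetical `Txn`, `Thread`, `deliver` and `replyTarget` names; it omits the locking and error handling of the driver.

```cpp
#include <cassert>

struct Thread;

// Hypothetical, trimmed-down binder_transaction.
struct Txn {
    Thread* from = nullptr;    // thread that sent the request
    Txn* to_parent = nullptr;  // previous top of the receiver's stack
};

struct Thread {
    Txn* transaction_stack = nullptr;
};

// Receiver side: on delivery of a two-way request, push it on the stack
// (the BR_TRANSACTION branch of binder_thread_read).
void deliver(Thread& receiver, Txn& t) {
    t.to_parent = receiver.transaction_stack;
    receiver.transaction_stack = &t;
}

// Reply side: pop the request being answered and return the thread to wake
// (the `if (reply)` branch of binder_transaction).
Thread* replyTarget(Thread& replier) {
    Txn* in_reply_to = replier.transaction_stack;
    assert(in_reply_to != nullptr);
    replier.transaction_stack = in_reply_to->to_parent;
    return in_reply_to->from;
}
```

Here `deliver(manager, t)` followed by `replyTarget(manager)` yields the Service Server thread stored in `t.from`, so the reply needs no handle lookup at all.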

Phase six

The Service Server wakes up, receives the IPC data, and carries out the corresponding work.