Baidu PY-Day4 Theory Class Notes

Notice: This article was first published on the soarli blog (https://blog.soarli.top/archives/383.html). Please credit the source when reposting.

Course outline:

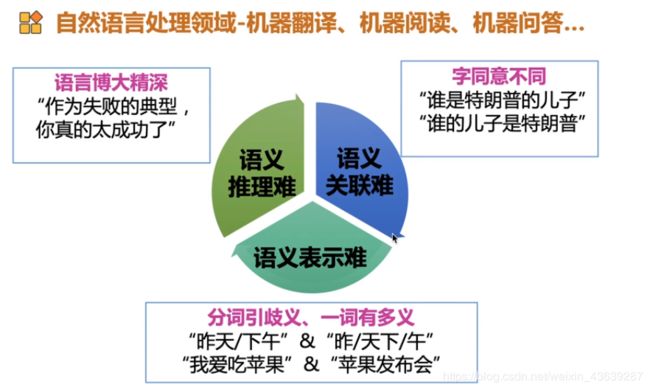

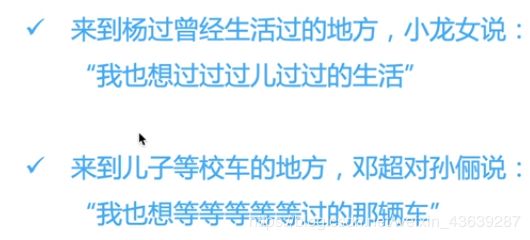

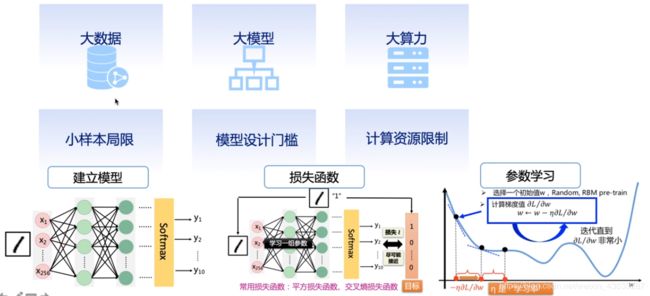

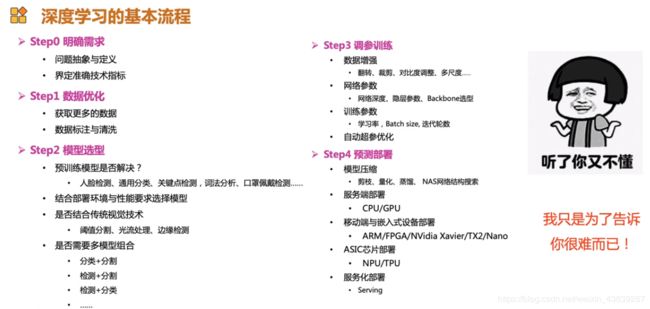

Challenges in deep learning

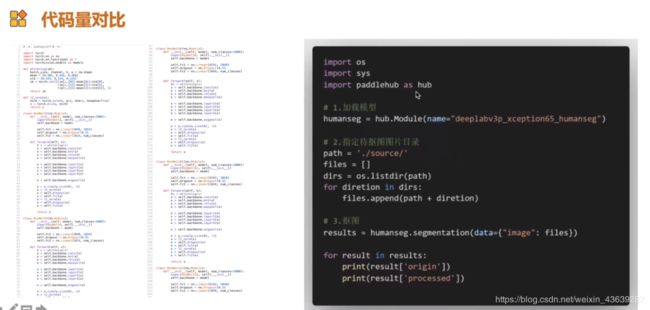

What is PaddleHub

PaddleHub results

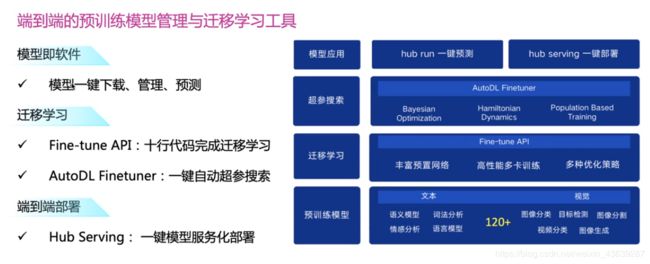

Advantages of PaddleHub

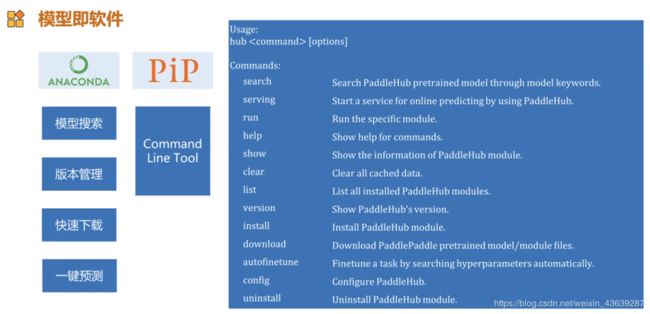

The PaddleHub philosophy

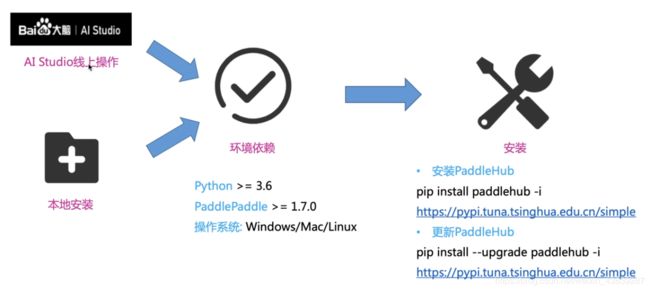

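Installing PaddleHub

Trying out PaddleHub

Assignment:

PaddleHub assignment for 《青春有你2》 (Youth With You 2): recognizing five contestants

1. Task overview

Image classification is an important area of computer vision; its goal is to assign an image to one of a set of predefined labels. Recently, researchers have proposed many kinds of neural networks that have greatly improved the performance of classification algorithms. Using a self-built dataset of contestants from 《青春有你2》 as the example, this article shows how to use PaddleHub for an image classification task.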

# Be sure to run this command when starting in a CPU environment

%set_env CPU_NUM=1

env: CPU_NUM=1

# Install paddlehub

!pip install paddlehub==1.6.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple

Requirement already satisfied: paddlehub==1.6.0 in /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages (1.6.0)

2. Hands-on practice

Step 1: Basic setup

Load the data file

Import the Python packages

!unzip -o dataset/file.zip -d ./dataset/

import paddlehub as hub
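
The `!unzip` line above is a notebook shell command. Outside a notebook, roughly the same extraction can be done with Python's standard `zipfile` module. This is a minimal sketch, not part of the original course material: the helper name `extract_dataset` is hypothetical, and the demo builds a throwaway archive so that it runs anywhere.

```python
import os
import tempfile
import zipfile


def extract_dataset(zip_path, target_dir):
    """Extract every member of zip_path into target_dir, overwriting existing files."""
    os.makedirs(target_dir, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target_dir)
        return archive.namelist()


# Self-contained demo: build a tiny archive in a temp directory, then extract it.
# In the notebook the call would be extract_dataset("dataset/file.zip", "dataset").
work_dir = tempfile.mkdtemp()
demo_zip = os.path.join(work_dir, "file.zip")
with zipfile.ZipFile(demo_zip, "w") as archive:
    archive.writestr("label_list.txt", "placeholder\n")

extracted = extract_dataset(demo_zip, os.path.join(work_dir, "dataset"))
print(extracted)
```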

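Step 2: Load the pre-trained model

Next we pick a suitable pre-trained model in PaddleHub to fine-tune. Since this is an image classification task, we use the classic ResNet-50 as the pre-trained model. PaddleHub offers a wide range of pre-trained image classification models, including PNASNet, which comes from recent neural architecture search work; we recommend trying different pre-trained models to get better performance.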

module = hub.Module(name="resnet_v2_50_imagenet")

[2020-04-26 14:31:19,068] [ INFO] - Installing resnet_v2_50_imagenet module

[2020-04-26 14:31:19,086] [ INFO] - Module resnet_v2_50_imagenet already installed in /home/aistudio/.paddlehub/modules/resnet_v2_50_imagenet

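Step 3: Prepare the data

Next we load the image dataset. We use our own custom data here; for details, see the PaddleHub documentation on adapting custom data.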

from paddlehub.dataset.base_cv_dataset import BaseCVDataset


class DemoDataset(BaseCVDataset):
    def __init__(self):
        # Directory where the dataset is stored
        self.dataset_dir = "dataset"
        super(DemoDataset, self).__init__(
            base_path=self.dataset_dir,
            train_list_file="train_list.txt",
            validate_list_file="validate_list.txt",
            test_list_file="test_list.txt",
            label_list_file="label_list.txt",
        )


dataset = DemoDataset()
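
`DemoDataset` only points PaddleHub at four text files, so those files have to exist before the dataset is built. The prediction code later in these notes splits each line of `test_list.txt` on a space and takes the first field as the image path, so a plausible layout is one `path label` pair per line. The sketch below writes and reads files in that shape. The helper names, the sample paths, and the use of integer label indices are assumptions for illustration; check them against the list files that come with the course dataset.

```python
import os
import tempfile


def write_list_file(path, samples):
    """Write one "image_path label" pair per line, separated by a single space."""
    with open(path, "w", encoding="utf-8") as f:
        for image_path, label in samples:
            f.write("%s %s\n" % (image_path, label))


def read_list_file(path):
    """Read the file back into (image_path, label) tuples, skipping blank lines."""
    pairs = []
    with open(path, "r", encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:
                image_path, label = line.split(" ")
                pairs.append((image_path, label))
    return pairs


# Hypothetical sample entries; the real file names are not given in these notes.
samples = [("train/yushuxin/1.jpg", 0), ("train/xujiaqi/1.jpg", 1)]
list_path = os.path.join(tempfile.mkdtemp(), "train_list.txt")
write_list_file(list_path, samples)
print(read_list_file(list_path))
```

Because the separator is a single space, image paths that themselves contain spaces would break this simple format.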

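Step 4: Create the data reader

Next we create a reader for image classification. The reader preprocesses the data in dataset and then feeds it to the model for training in the format the model expects.

When creating an image classification reader, we need to specify the input image size.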

data_reader = hub.reader.ImageClassificationReader(
    image_width=module.get_expected_image_width(),
    image_height=module.get_expected_image_height(),
    images_mean=module.get_pretrained_images_mean(),
    images_std=module.get_pretrained_images_std(),
    dataset=dataset)

[2020-04-26 14:31:19,424] [ INFO] - Dataset label map = {'虞书欣': 0, '许佳琪': 1, '赵小棠': 2, '安崎': 3, '王承渲': 4}
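
The reader's preprocessing boils down to resizing each image to the size the module expects and normalizing pixel values with the mean and standard deviation used during pre-training. The sketch below shows only the normalization step in plain Python, as an illustration of the idea rather than PaddleHub's actual implementation. The mean and std constants are the commonly used ImageNet statistics and are an assumption here; in the real code they come from `module.get_pretrained_images_mean()` and `module.get_pretrained_images_std()`.

```python
# Assumed ImageNet channel statistics (R, G, B), for illustration only.
IMAGES_MEAN = [0.485, 0.456, 0.406]
IMAGES_STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb, mean=IMAGES_MEAN, std=IMAGES_STD):
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel."""
    return [(value / 255.0 - m) / s for value, m, s in zip(rgb, mean, std)]


def normalize_image(image):
    """Apply normalize_pixel to every pixel of an image given as rows of RGB triples."""
    return [[normalize_pixel(pixel) for pixel in row] for row in image]


# A tiny 1x2 "image": one black pixel and one white pixel.
tiny_image = [[(0, 0, 0), (255, 255, 255)]]
for pixel in normalize_image(tiny_image)[0]:
    print([round(channel, 3) for channel in pixel])
```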

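Step 5: Configure the strategy

Before fine-tuning, we can set a number of run-time options. The options relevant to this tutorial are:

- use_cuda: set to False to train on the CPU. If your machine has a GPU and you installed the GPU build of PaddlePaddle, setting it to True is recommended;

- num_epoch: the number of passes over the training set (3 in the code below);

- batch_size: the number of samples fed to the model per training step (3 in the code below). The model processes a batch in parallel, so a larger batch_size generally trains more efficiently, but it also uses more memory, and an overly large batch_size can make training fail with an out-of-memory error, so picking a suitable value matters;

- log_interval: how often, in steps, a training log line is printed (not set in the code below; the training log in these notes shows one line every 10 steps);

- eval_interval: how often, in steps, the model is evaluated on the validation set (10 in the code below);

- checkpoint_dir: the directory where the trained parameters and data are saved, here cv_finetune_turtorial_demo;

- strategy: the fine-tuning strategy, here DefaultFinetuneStrategy.

For more run-time options, see RunConfig.

PaddleHub also provides a number of optimization strategies, such as AdamWeightDecayStrategy, ULMFiTStrategy, and DefaultFinetuneStrategy; see the Strategy documentation for details.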

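config = hub.RunConfig(
    use_cuda=False,  # whether to train on GPU; False means CPU
    num_epoch=3,  # number of fine-tuning epochs
    checkpoint_dir="cv_finetune_turtorial_demo",  # checkpoint directory; generated automatically if not specified
    batch_size=3,  # training batch size; when using a GPU, adjust it to fit the available memory
    eval_interval=10,  # evaluation interval in steps; by default the validation set is evaluated every 100 steps
    strategy=hub.finetune.strategy.DefaultFinetuneStrategy())  # fine-tuning optimization strategy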

[2020-04-26 14:41:58,841] [ INFO] - Checkpoint dir: cv_finetune_turtorial_demo
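
The training log later in these notes counts progress as `step N / 407`. A rough way to see how `num_epoch` and `batch_size` drive that total is the arithmetic below. It assumes the number of steps is `num_epoch * num_examples // batch_size` on a single device, which is a simplification and is not taken from PaddleHub's source. The training-set size of 407 images is likewise an assumption, chosen because it reproduces the 407 steps in the log; the notes do not state the real size.

```python
def total_train_steps(num_examples, num_epoch, batch_size):
    """Approximate number of training steps for a fine-tuning run on one device."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return num_epoch * num_examples // batch_size


def num_evaluations(total_steps, eval_interval):
    """How many times the validation set is evaluated during the run."""
    return total_steps // eval_interval


steps = total_train_steps(num_examples=407, num_epoch=3, batch_size=3)
print(steps)                       # 407
print(num_evaluations(steps, 10))  # 40
```

Under the same assumptions, raising batch_size to 32 would cut the run to 38 steps, which is the efficiency versus memory trade-off described above.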

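Step 6: Build the fine-tune task

With a suitable pre-trained model and the dataset to transfer to in hand, we can now build a Task.

This dataset defines a five-class task, whereas the classification module we downloaded is a 1000-class model trained on ImageNet. We therefore need to adapt the model slightly and turn it into a five-class model:

- Get the module's context, including its input and output variables and the Paddle Program;

- Find the feature-extraction output, feature_map, among the output variables;

- Attach a fully connected layer after feature_map to create the Task.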

input_dict, output_dict, program = module.context(trainable=True)
img = input_dict["image"]
feature_map = output_dict["feature_map"]
feed_list = [img.name]

task = hub.ImageClassifierTask(
    data_reader=data_reader,
    feed_list=feed_list,
    feature=feature_map,
    num_classes=dataset.num_labels,
    config=config)

[2020-04-26 14:42:05,578] [ INFO] - 267 pretrained paramaters loaded by PaddleHub
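
Conceptually, the head added on top of feature_map is a fully connected layer followed by a softmax over the five classes. The plain-Python sketch below shows that computation on a toy feature vector. It is not how PaddleHub builds the layer internally: the function names, the 3-dimensional feature, and the weight values are all made up for illustration, whereas the real feature vector is much larger and the weights are learned during fine-tuning.

```python
import math


def fully_connected(feature, weights, bias):
    """Compute one logit per class: sum_i feature[i] * weights[i][c] + bias[c]."""
    num_classes = len(bias)
    return [
        sum(feature[i] * weights[i][c] for i in range(len(feature))) + bias[c]
        for c in range(num_classes)
    ]


def softmax(logits):
    """Turn logits into probabilities that sum to 1 (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy values: a 3-dimensional feature and a 3x5 weight matrix (5 classes).
feature = [0.5, -1.0, 2.0]
weights = [
    [0.1, 0.0, 0.3, -0.2, 0.0],
    [0.0, 0.2, -0.1, 0.1, 0.0],
    [0.2, -0.1, 0.4, 0.0, 0.1],
]
bias = [0.0, 0.0, 0.0, 0.0, 0.0]

probs = softmax(fully_connected(feature, weights, bias))
print([round(p, 3) for p in probs])
print(probs.index(max(probs)))  # index of the most likely class
```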

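Step 7: Start fine-tuning

We use the finetune_and_eval interface to train the model. During fine-tuning it periodically evaluates the model, so we can follow how performance changes over the course of training.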

run_states = task.finetune_and_eval()

[2020-04-26 14:52:14,227] [ INFO] - PaddleHub finetune start

[2020-04-26 14:52:27,151] [ TRAIN] - step 20 / 407: loss=1.40396 acc=0.50000 [step/sec: 0.78]

[2020-04-26 14:52:27,152] [ INFO] - Evaluation on dev dataset start

share_vars_from is set, scope is ignored.

[2020-04-26 14:52:27,995] [ EVAL] - [dev dataset evaluation result] loss=1.58546 acc=0.25000 [step/sec: 3.36]

[2020-04-26 14:52:27,996] [ EVAL] - best model saved to cv_finetune_turtorial_demo/best_model [best acc=0.25000]

[2020-04-26 14:52:41,352] [ TRAIN] - step 30 / 407: loss=1.09900 acc=0.50000 [step/sec: 0.80]

[2020-04-26 14:52:41,354] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:52:42,152] [ EVAL] - [dev dataset evaluation result] loss=1.49427 acc=0.25000 [step/sec: 3.58]

[2020-04-26 14:52:54,764] [ TRAIN] - step 40 / 407: loss=1.20739 acc=0.53333 [step/sec: 0.79]

[2020-04-26 14:52:54,765] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:52:55,571] [ EVAL] - [dev dataset evaluation result] loss=1.46154 acc=0.50000 [step/sec: 3.53]

[2020-04-26 14:52:55,572] [ EVAL] - best model saved to cv_finetune_turtorial_demo/best_model [best acc=0.50000]

[2020-04-26 14:53:09,099] [ TRAIN] - step 50 / 407: loss=1.17680 acc=0.46667 [step/sec: 0.79]

[2020-04-26 14:53:09,100] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:53:09,905] [ EVAL] - [dev dataset evaluation result] loss=1.60205 acc=0.25000 [step/sec: 3.55]

[2020-04-26 14:53:22,546] [ TRAIN] - step 60 / 407: loss=1.14852 acc=0.50000 [step/sec: 0.79]

[2020-04-26 14:53:22,547] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:53:23,339] [ EVAL] - [dev dataset evaluation result] loss=1.74368 acc=0.25000 [step/sec: 3.62]

[2020-04-26 14:53:36,011] [ TRAIN] - step 70 / 407: loss=1.02741 acc=0.53333 [step/sec: 0.79]

[2020-04-26 14:53:36,012] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:53:36,815] [ EVAL] - [dev dataset evaluation result] loss=1.87898 acc=0.25000 [step/sec: 3.56]

[2020-04-26 14:53:49,368] [ TRAIN] - step 80 / 407: loss=1.18922 acc=0.56667 [step/sec: 0.80]

[2020-04-26 14:53:49,369] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:53:50,182] [ EVAL] - [dev dataset evaluation result] loss=1.82476 acc=0.25000 [step/sec: 3.55]

[2020-04-26 14:54:02,730] [ TRAIN] - step 90 / 407: loss=1.47436 acc=0.40000 [step/sec: 0.80]

[2020-04-26 14:54:02,732] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:54:03,541] [ EVAL] - [dev dataset evaluation result] loss=1.71757 acc=0.25000 [step/sec: 3.52]

[2020-04-26 14:54:16,199] [ TRAIN] - step 100 / 407: loss=1.41214 acc=0.50000 [step/sec: 0.79]

[2020-04-26 14:54:16,200] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:54:17,012] [ EVAL] - [dev dataset evaluation result] loss=2.49438 acc=0.25000 [step/sec: 3.52]

[2020-04-26 14:54:29,679] [ TRAIN] - step 110 / 407: loss=1.09387 acc=0.50000 [step/sec: 0.79]

[2020-04-26 14:54:29,680] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:54:30,484] [ EVAL] - [dev dataset evaluation result] loss=2.29288 acc=0.50000 [step/sec: 3.56]

[2020-04-26 14:54:43,248] [ TRAIN] - step 120 / 407: loss=1.05538 acc=0.60000 [step/sec: 0.78]

[2020-04-26 14:54:43,249] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:54:44,050] [ EVAL] - [dev dataset evaluation result] loss=1.73628 acc=0.50000 [step/sec: 3.59]

[2020-04-26 14:54:56,782] [ TRAIN] - step 130 / 407: loss=1.27089 acc=0.46667 [step/sec: 0.79]

[2020-04-26 14:54:56,783] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:54:57,582] [ EVAL] - [dev dataset evaluation result] loss=1.30656 acc=0.66667 [step/sec: 3.58]

[2020-04-26 14:54:57,583] [ EVAL] - best model saved to cv_finetune_turtorial_demo/best_model [best acc=0.66667]

[2020-04-26 14:55:10,939] [ TRAIN] - step 140 / 407: loss=1.05220 acc=0.56667 [step/sec: 0.79]

[2020-04-26 14:55:10,941] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:55:11,742] [ EVAL] - [dev dataset evaluation result] loss=1.30367 acc=0.50000 [step/sec: 3.57]

[2020-04-26 14:55:24,392] [ TRAIN] - step 150 / 407: loss=0.92734 acc=0.41667 [step/sec: 0.76]

[2020-04-26 14:55:24,393] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:55:25,174] [ EVAL] - [dev dataset evaluation result] loss=1.86053 acc=0.50000 [step/sec: 3.70]

[2020-04-26 14:55:37,651] [ TRAIN] - step 160 / 407: loss=0.62062 acc=0.80000 [step/sec: 0.80]

[2020-04-26 14:55:37,652] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:55:38,433] [ EVAL] - [dev dataset evaluation result] loss=2.13082 acc=0.41667 [step/sec: 3.70]

[2020-04-26 14:55:50,865] [ TRAIN] - step 170 / 407: loss=0.87543 acc=0.63333 [step/sec: 0.80]

[2020-04-26 14:55:50,866] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:55:51,652] [ EVAL] - [dev dataset evaluation result] loss=0.89465 acc=0.66667 [step/sec: 3.68]

[2020-04-26 14:56:04,174] [ TRAIN] - step 180 / 407: loss=1.10530 acc=0.50000 [step/sec: 0.80]

[2020-04-26 14:56:04,176] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:56:04,970] [ EVAL] - [dev dataset evaluation result] loss=1.55989 acc=0.66667 [step/sec: 3.60]

[2020-04-26 14:56:17,498] [ TRAIN] - step 190 / 407: loss=0.80936 acc=0.66667 [step/sec: 0.80]

[2020-04-26 14:56:17,500] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:56:18,304] [ EVAL] - [dev dataset evaluation result] loss=2.20594 acc=0.41667 [step/sec: 3.54]

[2020-04-26 14:56:31,020] [ TRAIN] - step 200 / 407: loss=0.79162 acc=0.66667 [step/sec: 0.79]

[2020-04-26 14:56:31,021] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:56:31,812] [ EVAL] - [dev dataset evaluation result] loss=2.33450 acc=0.41667 [step/sec: 3.64]

[2020-04-26 14:56:44,558] [ TRAIN] - step 210 / 407: loss=1.08516 acc=0.50000 [step/sec: 0.78]

[2020-04-26 14:56:44,560] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:56:45,349] [ EVAL] - [dev dataset evaluation result] loss=0.92071 acc=0.66667 [step/sec: 3.66]

[2020-04-26 14:56:58,028] [ TRAIN] - step 220 / 407: loss=0.60862 acc=0.80000 [step/sec: 0.79]

[2020-04-26 14:56:58,029] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:56:58,818] [ EVAL] - [dev dataset evaluation result] loss=1.81622 acc=0.41667 [step/sec: 3.66]

[2020-04-26 14:57:11,493] [ TRAIN] - step 230 / 407: loss=0.71227 acc=0.76667 [step/sec: 0.79]

[2020-04-26 14:57:11,495] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:57:12,301] [ EVAL] - [dev dataset evaluation result] loss=2.00925 acc=0.25000 [step/sec: 3.54]

[2020-04-26 14:57:24,971] [ TRAIN] - step 240 / 407: loss=1.14968 acc=0.56667 [step/sec: 0.79]

[2020-04-26 14:57:24,972] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:57:25,772] [ EVAL] - [dev dataset evaluation result] loss=1.51558 acc=0.50000 [step/sec: 3.57]

[2020-04-26 14:57:38,404] [ TRAIN] - step 250 / 407: loss=1.03684 acc=0.56667 [step/sec: 0.79]

[2020-04-26 14:57:38,405] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:57:39,209] [ EVAL] - [dev dataset evaluation result] loss=1.59875 acc=0.25000 [step/sec: 3.55]

[2020-04-26 14:57:51,739] [ TRAIN] - step 260 / 407: loss=0.87253 acc=0.66667 [step/sec: 0.80]

[2020-04-26 14:57:51,740] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:57:52,531] [ EVAL] - [dev dataset evaluation result] loss=2.15564 acc=0.50000 [step/sec: 3.65]

[2020-04-26 14:58:05,287] [ TRAIN] - step 270 / 407: loss=0.74611 acc=0.76667 [step/sec: 0.78]

[2020-04-26 14:58:05,288] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:58:06,103] [ EVAL] - [dev dataset evaluation result] loss=1.80917 acc=0.66667 [step/sec: 3.49]

[2020-04-26 14:58:18,807] [ TRAIN] - step 280 / 407: loss=0.61917 acc=0.80000 [step/sec: 0.79]

[2020-04-26 14:58:18,808] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:58:45,892] [ TRAIN] - step 300 / 407: loss=0.54226 acc=0.80000 [step/sec: 0.78]

[2020-04-26 14:58:45,894] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:58:46,678] [ EVAL] - [dev dataset evaluation result] loss=0.99110 acc=0.58333 [step/sec: 3.67]

[2020-04-26 14:58:59,598] [ TRAIN] - step 310 / 407: loss=0.95645 acc=0.66667 [step/sec: 0.77]

[2020-04-26 14:58:59,599] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:59:00,394] [ EVAL] - [dev dataset evaluation result] loss=1.16195 acc=0.41667 [step/sec: 3.66]

[2020-04-26 14:59:13,256] [ TRAIN] - step 320 / 407: loss=0.66790 acc=0.73333 [step/sec: 0.78]

[2020-04-26 14:59:13,257] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:59:14,036] [ EVAL] - [dev dataset evaluation result] loss=1.87141 acc=0.25000 [step/sec: 3.72]

[2020-04-26 14:59:26,719] [ TRAIN] - step 330 / 407: loss=0.89824 acc=0.70000 [step/sec: 0.79]

[2020-04-26 14:59:26,720] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:59:27,508] [ EVAL] - [dev dataset evaluation result] loss=2.16238 acc=0.50000 [step/sec: 3.67]

[2020-04-26 14:59:53,776] [ TRAIN] - step 350 / 407: loss=0.66608 acc=0.80000 [step/sec: 0.79]

[2020-04-26 14:59:53,777] [ INFO] - Evaluation on dev dataset start

[2020-04-26 14:59:54,587] [ EVAL] - [dev dataset evaluation result] loss=2.02997 acc=0.25000 [step/sec: 3.51]

[2020-04-26 15:00:20,672] [ TRAIN] - step 370 / 407: loss=0.90537 acc=0.60000 [step/sec: 0.79]

[2020-04-26 15:00:20,674] [ INFO] - Evaluation on dev dataset start

[2020-04-26 15:00:21,479] [ EVAL] - [dev dataset evaluation result] loss=2.12513 acc=0.50000 [step/sec: 3.55]

[2020-04-26 15:00:47,782] [ TRAIN] - step 390 / 407: loss=0.72874 acc=0.76667 [step/sec: 0.78]

[2020-04-26 15:00:47,783] [ INFO] - Evaluation on dev dataset start

[2020-04-26 15:00:48,586] [ EVAL] - [dev dataset evaluation result] loss=2.07444 acc=0.50000 [step/sec: 3.57]

[2020-04-26 15:01:01,435] [ TRAIN] - step 400 / 407: loss=0.62824 acc=0.80000 [step/sec: 0.78]

[2020-04-26 15:01:01,437] [ INFO] - Evaluation on dev dataset start

[2020-04-26 15:01:02,242] [ EVAL] - [dev dataset evaluation result] loss=1.72133 acc=0.25000 [step/sec: 3.55]
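
The log above is easier to read once the evaluation lines are pulled out. The sketch below parses log lines of this shape with a regular expression and reports the best validation accuracy. The regex is written against the line format shown above and is an assumption beyond that; the three sample lines are copied from the log.

```python
import re

# Matches lines such as:
# [...] [ EVAL] - [dev dataset evaluation result] loss=1.58546 acc=0.25000 [step/sec: 3.36]
EVAL_PATTERN = re.compile(
    r"\[\s*EVAL\]\s*-\s*\[dev dataset evaluation result\]\s*"
    r"loss=(?P<loss>[0-9.]+)\s+acc=(?P<acc>[0-9.]+)"
)


def parse_eval_lines(lines):
    """Return a list of (loss, acc) tuples, one per evaluation-result line."""
    results = []
    for line in lines:
        match = EVAL_PATTERN.search(line)
        if match:
            results.append((float(match.group("loss")), float(match.group("acc"))))
    return results


sample_log = [
    "[2020-04-26 14:52:27,995] [ EVAL] - [dev dataset evaluation result] loss=1.58546 acc=0.25000 [step/sec: 3.36]",
    "[2020-04-26 14:52:27,996] [ EVAL] - best model saved to cv_finetune_turtorial_demo/best_model [best acc=0.25000]",
    "[2020-04-26 14:54:57,582] [ EVAL] - [dev dataset evaluation result] loss=1.30656 acc=0.66667 [step/sec: 3.58]",
]

evals = parse_eval_lines(sample_log)
best_loss, best_acc = max(evals, key=lambda pair: pair[1])
print(len(evals), best_acc)
```

In the full log the best validation accuracy is 0.66667, first reached at step 130, which is also the last time a new best model is saved.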

Step 8: Prediction

Once fine-tuning is complete, we use the model to make predictions. The following code first collects the test images:

import numpy as np
import matplotlib.pyplot as plt
import matplotlib.image as mpimg

# Take the image path (first space-separated field) of the first five test entries.
with open("dataset/test_list.txt", "r") as f:
    filepath = f.readlines()

data = [line.split(" ")[0] for line in filepath[:5]]

label_map = dataset.label_dict()
index = 0
run_states = task.predict(data=data)
results = [run_state.run_results for run_state in run_states]

for batch_result in results:
    print(batch_result)
    # Pick the most probable class index for each image in the batch.
    batch_result = np.argmax(batch_result, axis=2)[0]
    print(batch_result)
    for result in batch_result:
        index += 1
        result = label_map[result]
        print("input %i is %s, and the predict result is %s" %
              (index, data[index - 1], result))

[2020-04-26 15:02:07,700] [ INFO] - PaddleHub predict start

[2020-04-26 15:02:07,701] [ INFO] - The best model has been loaded

share_vars_from is set, scope is ignored.

[2020-04-26 15:02:08,568] [ INFO] - PaddleHub predict finished.

[array([[0.10850388, 0.03892484, 0.3766357 , 0.3425531 , 0.13338248],

[0.22734572, 0.02854738, 0.04086622, 0.4217964 , 0.28144428],

[0.05866686, 0.04220689, 0.6305476 , 0.13286346, 0.13571516]],

dtype=float32)]

[2 3 2]

input 1 is dataset/test/yushuxin.jpg, and the predict result is 赵小棠

input 2 is dataset/test/xujiaqi.jpg, and the predict result is 安崎

input 3 is dataset/test/zhaoxiaotang.jpg, and the predict result is 赵小棠

[array([[0.08772717, 0.02577625, 0.05073174, 0.4185046 , 0.41726026],

[0.00707476, 0.00106204, 0.0092694 , 0.16083124, 0.82176256]],

dtype=float32)]

[3 4]

input 4 is dataset/test/anqi.jpg, and the predict result is 安崎

input 5 is dataset/test/wangchengxuan.jpg, and the predict result is 王承渲
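
The prediction loop above reduces each row of class probabilities to a label with argmax. The same step can be reproduced without PaddleHub on the numbers printed above. In this sketch the probabilities and the label map are copied from the output in these notes, the expected labels are inferred from the test file names (an assumption), and the helper name `argmax` is hypothetical.

```python
# Label map taken from the reader log earlier in these notes, inverted to index -> name.
LABEL_MAP = {0: "虞书欣", 1: "许佳琪", 2: "赵小棠", 3: "安崎", 4: "王承渲"}

# Class probabilities copied from the prediction output above (one row per test image).
PROBABILITIES = [
    [0.10850388, 0.03892484, 0.3766357, 0.3425531, 0.13338248],
    [0.22734572, 0.02854738, 0.04086622, 0.4217964, 0.28144428],
    [0.05866686, 0.04220689, 0.6305476, 0.13286346, 0.13571516],
    [0.08772717, 0.02577625, 0.05073174, 0.4185046, 0.41726026],
    [0.00707476, 0.00106204, 0.0092694, 0.16083124, 0.82176256],
]

# Expected labels inferred from the file names (yushuxin.jpg, xujiaqi.jpg, ...).
EXPECTED = ["虞书欣", "许佳琪", "赵小棠", "安崎", "王承渲"]


def argmax(values):
    """Return the index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])


predicted = [LABEL_MAP[argmax(row)] for row in PROBABILITIES]
correct = sum(p == e for p, e in zip(predicted, EXPECTED))
print(predicted)
print("accuracy: %d/%d" % (correct, len(EXPECTED)))  # accuracy: 3/5
```

Note how close the fourth row is: 安崎 wins with 0.4185 against 0.4173 for 王承渲, so that correct prediction is far from confident.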

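Note:

The images for the first two contestants were downloaded entirely by hand and there are too few of them, so their predictions came out wrong. For the other three, I crawled more than a hundred images each, and their predictions are correct. I'll leave it at that for now; the aim was to get familiar with the platform and with the basic approach to face recognition. See here for how the training images were crawled and organized!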