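Cat vs. Dog Classification with TensorFlow

I have some free time this summer break and wanted to learn something new. My computer has an AMD graphics card, so I can't use GPU acceleration and couldn't use the PyTorch framework either, which is why I chose TensorFlow.

I have only just started with TensorFlow, so the projects I build are fairly basic. This post is about classifying cats and dogs. There was once an online competition on cat vs. dog classification, so a dataset is readily available: it can be downloaded straight to your machine from the cat-and-dog dataset link.

After downloading it, I picked 500 cat images and 500 dog images. When I ran the program, though, one of the images turned out to be broken and could not be resized with OpenCV. It took me a long time to track that down, which was painful...

Enough preamble, let's get to the point.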

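Method: classify the images with a CNN

Approach: after obtaining the full cat and dog dataset, pick 500 cat photos and 500 dog photos and put them in a separate folder as the input data. The data to record are the images (images), their labels (labels), their file names (img_names), and their class names (cls).

Once the data loads successfully, it has to be preprocessed. The first convolutional layer expects every input to have the same shape, so each image is resized to shape [64, 64, 3], and then the pixel values of every image are normalized to the range 0-1.

The data are then shuffled; 20% become the validation set and the remaining 80% are used for training.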
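To make the split concrete, here is a minimal sketch of the shuffle and the 80/20 split using only the standard library. The function name `split_indices` and the seed are made up for illustration; the project itself uses `sklearn.utils.shuffle` and array slicing.

```python
import random

def split_indices(num_examples, validation_fraction, seed=0):
    """Shuffle the indices 0..num_examples-1 and split off a validation part."""
    indices = list(range(num_examples))
    random.Random(seed).shuffle(indices)  # shuffle in place, reproducibly
    num_valid = int(validation_fraction * num_examples)
    # the first num_valid shuffled indices form the validation set, the rest the training set
    return indices[num_valid:], indices[:num_valid]

train_idx, valid_idx = split_indices(1000, 0.2)
print(len(train_idx), len(valid_idx))  # 800 200
```

Shuffling before slicing matters here because the images are loaded class by class; without it the validation slice would contain only one class.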

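Next, the hyperparameters are assigned...

With all of that done, the network can be built. This CNN has three convolutional layers, each followed by a ReLU activation and pooling, and then two fully connected layers. Don't forget to activate the fully connected layer as well. Most importantly, a freshly trained network is quite likely to overfit (it does very well on the training data but its accuracy on real test data is very low), at which point the model is useless. To reduce the risk of overfitting, dropout is introduced (usually in the fully connected layers): each time the fully connected operation runs, some neurons are dropped before the activation function.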
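As a rough illustration of what dropout does, here is a pure-Python sketch of "inverted" dropout, the scheme `tf.nn.dropout` follows: each value is kept with probability `keep_prob`, and the survivors are scaled by `1 / keep_prob`. The helper name `dropout` and the sample values are assumptions for the example, not part of the project code.

```python
import random

def dropout(values, keep_prob, rng):
    """Zero each value with probability 1 - keep_prob and scale the
    survivors by 1 / keep_prob so the expected value is unchanged."""
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

rng = random.Random(0)
activations = [1.0] * 10
dropped = dropout(activations, keep_prob=0.7, rng=rng)    # training-time behavior
unchanged = dropout(activations, keep_prob=1.0, rng=rng)  # keep_prob=1.0 disables dropout
print(dropped)
print(unchanged)
```

Dropout is normally wanted only during training; at prediction time one would typically use a keep probability of 1.0 so that no neurons are dropped.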

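Placeholders are needed for the image data (x_data) and the image labels (y_data); look up tf.placeholder if you are not familiar with it. The output of the second fully connected layer is passed through softmax, which maps the values into the range 0-1, i.e. the probability that the image is a cat and the probability that it is a dog. The index of the largest probability is the prediction.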
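The softmax-then-argmax step can be sketched in a few lines of plain Python. The logits below are made-up numbers standing in for the output of the last fully connected layer.

```python
import math

def softmax(logits):
    """Map raw scores to probabilities that sum to 1."""
    largest = max(logits)
    exps = [math.exp(x - largest) for x in logits]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

classes = ['cats', 'dogs']
logits = [2.0, 0.5]  # made-up scores for one image
probs = softmax(logits)
predicted = classes[probs.index(max(probs))]  # index of the largest probability
print(probs, predicted)
```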

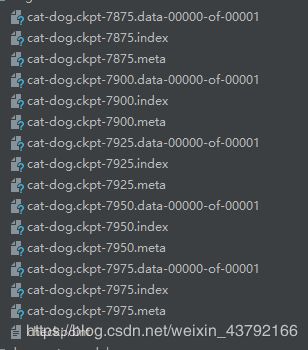

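Once the network is built, the data can be used for training. Training runs for 8000 iterations, which takes about half an hour on a CPU. The model is saved during training, and the saved model can then be used to predict on new data.

How is prediction done?

First apply the same preprocessing to the images, then load the network from the saved files, and finally run the prediction.

The project is split into three .py files: one for training, one for reading and preprocessing the data, and one for testing.

The code is walked through below. It uses the TensorFlow 1.x API (tf.placeholder, tf.Session).

Training file code:

Import the packages

import data_set_dog_cat
import tensorflow as tf
import numpy as np
from numpy.random import seed

Fix the random seeds so that every run draws the same random values, which makes debugging easier because the results don't change between runs

seed(10)
from tensorflow import set_random_seed
set_random_seed(20)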

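Hyperparameters

batch_size = 32  # 32 images per iteration; 1000 images in total
# class labels (these are also the sub-folder names under train_path)
classes = ['cats', 'dogs']
num_classes = len(classes)
# fraction of the data held out as the validation set
validation_size = 0.2
# every image is resized to img_size x img_size
img_size = 64
# number of color channels
num_channels = 3
# absolute path to the images (raw string, so the backslash is not treated as an escape)
train_path = r'F:\img1'

Read the image data

# data_set_dog_cat is the name of the data-reading .py file
# read_train_sets is a function defined in that file
data = data_set_dog_cat.read_train_sets(train_path, img_size, classes, validation_size=validation_size)

Kernel sizes are usually 3, 5 and so on. The number of kernels is up to you and is usually a power of two. For pooling, the window is typically ksize=[1, 2, 2, 1] with strides [1, 2, 2, 1].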

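# kernel size and number of kernels for the first convolutional layer
filter_size_conv1 = 3
num_filter_conv1 = 32
# kernel size and number of kernels for the second convolutional layer
filter_size_conv2 = 3
num_filter_conv2 = 32
# kernel size and number of kernels for the third convolutional layer
filter_size_conv3 = 3
num_filter_conv3 = 64
# number of units in the first fully connected layer
fc_layer_size = 1024

Define the functions that create the weights and the biases

def create_weights(shape):
    # sample from a normal distribution with standard deviation 0.05, with the given shape
    return tf.Variable(tf.random_normal(shape, stddev=0.05))
    # return tf.Variable(tf.truncated_normal(shape, stddev=0.05))

# create a 1-D tensor of length size filled with the constant 0.05
def create_biases(size):
    return tf.Variable(tf.constant(0.05, shape=[size]))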

Define the function for the convolution and pooling operations

# input: input images, num_input_channels: number of input channels,
# conv_filter_size: kernel size, num_filters: number of kernels
def create_convolutional_layer(input, num_input_channels, conv_filter_size, num_filters):
    # randomly initialized weights
    Weight = create_weights([conv_filter_size, conv_filter_size, num_input_channels, num_filters])
    # biases
    biasese = create_biases(num_filters)
    # with padding='SAME' and stride 1 the spatial size is unchanged; for the first layer the
    # shape is [-1, 64, 64, 32], where -1 stands for the number of images in the batch
    layer = tf.nn.conv2d(input, Weight, strides=[1, 1, 1, 1], padding='SAME')
    layer = tf.add(layer, biasese)
    # ReLU is the usual activation; it makes the layer non-linear
    layer = tf.nn.relu(layer)
    # pooling with stride 2 halves the width and height and leaves the depth unchanged;
    # for the first layer the shape becomes [-1, 32, 32, 32]
    pooling = tf.nn.max_pool(layer, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    return pooling

Change the shape. A convolutional layer works on a 4-D shape, but a fully connected layer takes a 2-D one, so the tensor has to be flattened to [num_images, width * height * channels]

def create_flatten_layer(layer):
    # the current shape is [num_images, width, height, channels]
    layer_shape = layer.get_shape()
    # slice out the last three dimensions and multiply them together
    num_features = layer_shape[1:4].num_elements()
    # reshape the layer to the required shape (-1 lets TensorFlow work out num_images)
    layer = tf.reshape(layer, [-1, num_features])
    return layer
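To see what the flatten layer receives, the shapes can be traced by hand. The sketch below assumes the values used in this post (64x64 inputs, three conv layers with 32, 32 and 64 kernels, each followed by a stride-2 'SAME' pooling); the helper name `same_pool_output` is made up for the example.

```python
import math

def same_pool_output(size, stride=2):
    """Spatial size after a pooling with the given stride and padding='SAME'."""
    return math.ceil(size / stride)

size = 64               # input images are 64x64x3
filters = [32, 32, 64]  # number of kernels in the three conv layers
shapes = []
for num_filters in filters:
    # the stride-1 'SAME' convolution keeps the spatial size; the pooling halves it
    size = same_pool_output(size)
    shapes.append((size, size, num_filters))

num_features = shapes[-1][0] * shapes[-1][1] * shapes[-1][2]
print(shapes)        # [(32, 32, 32), (16, 16, 32), (8, 8, 64)]
print(num_features)  # 4096
```

So under these assumptions the first fully connected layer maps 4096 inputs to 1024 outputs.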

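With the shape converted, the fully connected operation can be applied

# note activation_function: there are two fully connected layers; the first is activated,
# but the last one is not, because its raw (unactivated) output is needed
def create_fully_connection(inputs, num_inputs, num_outputs, activation_function=True):
    weight = create_weights([num_inputs, num_outputs])
    biases = create_biases(num_outputs)
    fully_connection = tf.add(tf.matmul(inputs, weight), biases)
    # drop some neurons to reduce the risk of overfitting
    # (caveat: keep_prob is hard-coded, so dropout is applied to both fully connected layers
    # and stays active when the saved model is later used for prediction)
    fully_connection = tf.nn.dropout(fully_connection, keep_prob=0.7)
    if activation_function:
        fully_connection = tf.nn.relu(fully_connection)
    return fully_connection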

Placeholders

# the convolution expects a 4-D input, hence this shape
x_data = tf.placeholder(tf.float32, shape=[None, img_size, img_size, num_channels], name='x_data')
# this is a two-class problem, so each label has num_classes entries
y_data = tf.placeholder(tf.float32, shape=[None, num_classes], name='y_data')
# index of the largest value; axis 0 takes the maximum down each column, axis 1 along each row
y_data_class = tf.argmax(y_data, 1)

Convolution and pooling

layer_conv1 = create_convolutional_layer(input=x_data, num_input_channels=num_channels, conv_filter_size=filter_size_conv1, num_filters=num_filter_conv1)
layer_conv2 = create_convolutional_layer(input=layer_conv1, num_input_channels=num_filter_conv1, conv_filter_size=filter_size_conv2, num_filters=num_filter_conv2)
layer_conv3 = create_convolutional_layer(input=layer_conv2, num_input_channels=num_filter_conv2, conv_filter_size=filter_size_conv3, num_filters=num_filter_conv3)

Flatten

layer_flat = create_flatten_layer(layer_conv3)

The two fully connected layers

fc_1 = create_fully_connection(inputs=layer_flat, num_inputs=layer_flat.get_shape()[1:4].num_elements(), num_outputs=fc_layer_size, activation_function=True)
fc_2 = create_fully_connection(inputs=fc_1, num_inputs=fc_layer_size, num_outputs=num_classes, activation_function=False)

softmax maps the fully connected output into the range 0-1 as probabilities, and argmax then returns the index of the largest probability, which is the predicted class

prediction = tf.nn.softmax(fc_2, name='prediction')
prediction_class = tf.argmax(prediction, 1)

Compute the error with the cross-entropy function

cross_entrory = tf.nn.softmax_cross_entropy_with_logits(labels=y_data, logits=fc_2)
loss = tf.reduce_mean(cross_entrory)
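For intuition, here is a small pure-Python sketch of the cross-entropy between a one-hot label and a probability vector. The probabilities are made up. Note that `tf.nn.softmax_cross_entropy_with_logits` applies softmax internally, which is why the code above passes it the raw `fc_2` output rather than `prediction`.

```python
import math

def cross_entropy(one_hot_label, probs):
    """Cross-entropy between a one-hot label and predicted probabilities."""
    return -sum(y * math.log(p) for y, p in zip(one_hot_label, probs) if y > 0)

label = [1.0, 0.0]  # one-hot label for 'cats'
confident = cross_entropy(label, [0.9, 0.1])  # correct and confident: small loss
unsure = cross_entropy(label, [0.5, 0.5])     # undecided: loss equals log(2)
wrong = cross_entropy(label, [0.1, 0.9])      # confidently wrong: large loss
batch_loss = (confident + unsure + wrong) / 3  # the batch mean, as tf.reduce_mean computes
print(confident, unsure, wrong, batch_loss)
```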

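Define the optimizer. Don't set the learning rate too high, otherwise training may fail to converge to the minimum

optimizer = tf.train.AdamOptimizer(1e-4).minimize(loss)

Create the session and initialize all Variables. In TensorFlow, variables are declared with tf.Variable and only take effect after being initialized with tf.global_variables_initializer

sess = tf.Session()
sess.run(tf.global_variables_initializer())
# compare the predictions with the true classes; gives True or False per image
correct_prediction = tf.equal(y_data_class, prediction_class)

Cast True/False to float32 (True becomes 1, False becomes 0); the mean is the accuracy

accurary = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

Output format: the epoch number, the iteration number, the training accuracy, the validation accuracy, and the training loss. A tensor has to be run in the session each time its value is needed, and data must be fed to the placeholders defined earlier (x_data, y_data)

def show_progress(epoch, feed_dict_train, feed_dict_valid, train_loss, i):
    acc = sess.run(accurary, feed_dict=feed_dict_train)
    val_acc = sess.run(accurary, feed_dict=feed_dict_valid)
    print("epoch:", str(epoch + 1) + ",i:", str(i) +
          ",acc:", str(acc) + ",val_acc:", str(val_acc) + ",train_loss:", str(train_loss))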

Define the iteration counter and the saver used to store the network

total_iteration = 0
saver = tf.train.Saver()

Everything is in place, so training can begin

def train(num_iteration):
    # total number of iterations run so far
    global total_iteration
    # number of iterations that make up one epoch
    iters_per_epoch = int(data.train._num_examples / batch_size)
    for i in range(total_iteration, num_iteration + total_iteration):  # (0, 8000)
        # next_batch is defined in the data file; fetch batch_size training examples
        x_batch, y_true_batch, _, cls_batch = data.train.next_batch(batch_size)
        # fetch batch_size validation examples (from data.valid, not data.train)
        x_valid_batch, y_valid_true_batch, _, cls_valid_batch = data.valid.next_batch(batch_size)
        # training data to feed
        feed_dict_train = {x_data: x_batch, y_data: y_true_batch}
        # validation data to feed
        feed_dict_valid = {x_data: x_valid_batch, y_data: y_valid_true_batch}
        # each run of the optimizer takes one step that reduces the loss
        sess.run(optimizer, feed_dict=feed_dict_train)
        # once per epoch: report progress and save
        if i % iters_per_epoch == 0:
            train_loss = sess.run(loss, feed_dict=feed_dict_train)
            epoch = int(i / iters_per_epoch)
            # print
            show_progress(epoch, feed_dict_train, feed_dict_valid, train_loss, i)
            # save the network
            saver.save(sess, './dog-cat-model/cat-dog.ckpt', global_step=i)
    # remember where to continue if train() is called again
    total_iteration += num_iteration
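The numbers in the training loop can be checked with a little arithmetic. The sketch below assumes all 1000 images load successfully and uses the values from this post; it shows why the last checkpoint ends in 7975.

```python
num_images = 1000
validation_fraction = 0.2
batch_size = 32
num_iterations = 8000

num_train = num_images - int(validation_fraction * num_images)  # 800 training images
iters_per_epoch = int(num_train / batch_size)                    # 25 iterations per epoch
# progress is printed and a checkpoint is saved whenever i % iters_per_epoch == 0
checkpoint_steps = [i for i in range(num_iterations) if i % iters_per_epoch == 0]
print(iters_per_epoch, len(checkpoint_steps), checkpoint_steps[-1])  # 25 320 7975
```

If fewer images load (for example because a broken file is skipped), these numbers change, and so does the name of the last checkpoint file.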

That is the training .py file

Next comes reading the data

Import the packages

import cv2
import os
import glob
from sklearn.utils import shuffle
import numpy as np

Define a class to make the data easier to handle

class DataSet(object):
    # constructor
    def __init__(self, images, labels, img_names, cls):
        # total number of images
        self._num_examples = images.shape[0]
        self._images = images
        self._labels = labels
        self._img_names = img_names
        self._cls = cls
        # number of epochs completed so far
        self._epochs_done = 0
        # position of the next image within the current epoch
        self._index_in_epoch = 0

    # accessors so the training file can read the data
    def images(self):
        return self._images

    def labels(self):
        return self._labels

    def img_names(self):
        return self._img_names

    def cls(self):
        return self._cls

    def num_example(self):
        return self._num_examples

    def epochs_done(self):
        return self._epochs_done

    # return the next batch_size images
    def next_batch(self, batch_size):
        start = self._index_in_epoch
        self._index_in_epoch += batch_size
        # if this runs past the end of the data, start over from the beginning
        if self._index_in_epoch > self._num_examples:
            # After each epoch we update this
            self._epochs_done += 1
            start = 0
            self._index_in_epoch = batch_size
            assert batch_size <= self._num_examples
        end = self._index_in_epoch
        # return the data
        return self._images[start:end], self._labels[start:end], self._img_names[start:end], self._cls[start:end]
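The wrap-around logic of next_batch is easier to follow in isolation. The sketch below is a hypothetical stand-in (`BatchCursor` is not part of the project) that tracks only the start and end indices, using a made-up dataset of 100 examples.

```python
class BatchCursor:
    """Hypothetical stand-in for DataSet that only tracks batch boundaries."""

    def __init__(self, num_examples):
        self.num_examples = num_examples
        self.index_in_epoch = 0
        self.epochs_done = 0

    def next_range(self, batch_size):
        assert batch_size <= self.num_examples
        start = self.index_in_epoch
        self.index_in_epoch += batch_size
        if self.index_in_epoch > self.num_examples:
            # not enough examples left: count the epoch and restart from 0
            self.epochs_done += 1
            start = 0
            self.index_in_epoch = batch_size
        return start, self.index_in_epoch

cursor = BatchCursor(num_examples=100)
ranges = [cursor.next_range(32) for _ in range(4)]
print(ranges)              # [(0, 32), (32, 64), (64, 96), (0, 32)]
print(cursor.epochs_done)  # 1
```

One consequence of this design is that examples left over at the end of an epoch (indices 96 to 99 here) are skipped. With 800 training images and a batch size of 32 the division is exact, so nothing is lost.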

Load the data, one image at a time, into lists

def load_train(train_path, img_size, classes):
    images = []
    labels = []
    img_names = []
    cls = []
    print("going to read training data")
    for fields in classes:  # ['cats', 'dogs']
        index = classes.index(fields)  # index of the current class (cat or dog)
        path = os.path.join(train_path, fields, '*g')  # build the glob pattern for this class folder
        files = glob.glob(path)  # all files under the path that match the pattern
        for f1 in files:  # loop over every image
            # cv2.imread returns None instead of raising when a file cannot be decoded
            image = cv2.imread(f1)
            if image is None:
                print("failed to read:", f1)
                continue
            print("read OK")
            try:
                # resize to img_size x img_size (64 x 64)
                image = cv2.resize(image, (img_size, img_size), interpolation=cv2.INTER_LINEAR)
            except cv2.error:
                # skip images that cannot be resized
                print("resize failed:", f1)
                continue
            # convert to float32
            image = image.astype(np.float32)
            # normalize to the range 0-1
            image = np.multiply(image, 1.0 / 255.0)
            images.append(image)
            # one-hot label
            label = np.zeros(len(classes))
            label[index] = 1.0
            labels.append(label)
            # file name without the directory
            fibase = os.path.basename(f1)
            img_names.append(fibase)
            cls.append(fields)
    # convert the lists to ndarrays, which are easier to work with
    images = np.array(images)
    labels = np.array(labels)
    cls = np.array(cls)
    return images, labels, img_names, cls
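The labels built in load_train are one-hot vectors. A minimal plain-Python sketch of the same idea (the helper name `one_hot` is made up; the project uses `np.zeros` and an index assignment):

```python
def one_hot(class_name, classes):
    """Return a list with 1.0 at the index of class_name and 0.0 elsewhere."""
    label = [0.0] * len(classes)
    label[classes.index(class_name)] = 1.0
    return label

classes = ['cats', 'dogs']
print(one_hot('cats', classes))  # [1.0, 0.0]
print(one_hot('dogs', classes))  # [0.0, 1.0]
```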

Read the data and split it (training set, validation set)

def read_train_sets(train_path, imag_size, classes, validation_size):
    class DataSets(object):
        pass
    # create the container object
    data_sets = DataSets()
    # load the data
    images, labels, img_names, cls = load_train(train_path, imag_size, classes)
    images, labels, img_names, cls = shuffle(images, labels, img_names, cls)
    # check the type of validation_size
    if isinstance(validation_size, float):  # validation_size is 0.2
        # 0.2 times the number of images
        validation_size = int(validation_size * images.shape[0])
    # split the data by slicing
    validation_images = images[:validation_size]
    validation_labels = labels[:validation_size]
    validation_img_name = img_names[:validation_size]
    validation_cls = cls[:validation_size]
    train_images = images[validation_size:]
    train_labels = labels[validation_size:]
    train_img_names = img_names[validation_size:]
    train_cls = cls[validation_size:]
    # pass the slices to the DataSet constructor
    data_sets.train = DataSet(train_images, train_labels, train_img_names, train_cls)
    data_sets.valid = DataSet(validation_images, validation_labels, validation_img_name, validation_cls)
    # return the split data
    return data_sets

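That covers reading the data

At this point the model can already be trained

The accuracy is already above 0.9, which suggests it works reasonably well

Next, the trained network can actually be loaded to make predictions

Import the packages in the test .py file

import tensorflow as tf
import numpy as np
import os, cv2

Define the hyperparameters

image_size = 64
num_channels = 3
images = []
# why \\test? a lone \t would be interpreted as a tab character; \\img1 is escaped the same way
path = "F:\\img1\\test"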
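The backslash issue is easy to verify directly. The short sketch below compares an unescaped `\t`, doubled backslashes, and a raw string; the variable names are only for illustration.

```python
broken = "F:\\img1\test"    # the single "\t" is read as a tab character
escaped = "F:\\img1\\test"  # every backslash doubled
raw = r"F:\img1\test"       # raw string: backslashes are kept as written

print("\t" in broken)         # True
print(escaped == raw)         # True
print(len(broken), len(raw))  # 11 12
```

Either the doubled backslashes or the raw string gives the intended Windows path.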

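Data processing. Note that the preprocessing must be exactly the same as during training; the operations are the same, so I won't explain them again

# list all files under the path
direct = os.listdir(path)
for file in direct:
    image = cv2.imread(path + '/' + file)
    print("address:", path + '/' + file)
    if image is None:
        # cv2.imread returns None for files it cannot decode; skip them
        continue
    image = cv2.resize(image, (image_size, image_size), interpolation=cv2.INTER_LINEAR)
    images.append(image)
images = np.array(images, dtype=np.uint8)
images = images.astype(np.float32)
images = np.multiply(images, 1.0 / 255.0)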

Now the images can be predicted one at a time

# load the model once, before the loop, rather than once per image
sess = tf.Session()
# step 1: load the network structure (graph)
saver = tf.train.import_meta_graph('./dog-cat-model/cat-dog.ckpt-7975.meta')
# step 2: load the weights
saver.restore(sess, './dog-cat-model/cat-dog.ckpt-7975')
# get the default graph
graph = tf.get_default_graph()
# look up the tensors by the names given during training
y_pred = graph.get_tensor_by_name("prediction:0")
x = graph.get_tensor_by_name("x_data:0")
y_true = graph.get_tensor_by_name("y_data:0")
res_label = ['cat', 'dog']
for img in images:
    # don't forget to reshape: the convolution expects a 4-D input
    x_batch = img.reshape(1, image_size, image_size, num_channels)
    y_test_images = np.zeros((1, 2))  # dummy label [[0, 0]]; y_pred does not depend on it
    # feed the data
    feed_dict_testing = {x: x_batch, y_true: y_test_images}
    # get the result
    result = sess.run(y_pred, feed_dict_testing)
    # print the predicted label
    print(res_label[result.argmax()])
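The loop above prints one label per image. To turn those labels into a test accuracy, one option is to compare each prediction with the label encoded in the file name. The sketch below assumes, hypothetically, that the test files are named like `cat.12.jpg` or `dog.7.jpg`; the function name, file names, and predictions are all made up for the example.

```python
def accuracy(predictions, file_names):
    """Fraction of files whose predicted label matches the label in the file name.

    Assumes names of the form '<label>.<number>.jpg' (an assumption, not a
    property of the project code).
    """
    correct = 0
    for predicted, name in zip(predictions, file_names):
        true_label = name.split('.')[0]  # text before the first dot
        correct += int(predicted == true_label)
    return correct / len(file_names)

file_names = ['cat.1.jpg', 'cat.2.jpg', 'dog.1.jpg', 'dog.2.jpg']  # made-up names
predictions = ['cat', 'dog', 'dog', 'dog']                          # made-up predictions
print(accuracy(predictions, file_names))  # 0.75
```

In the test script this would mean collecting the printed labels in a list, in the same order as the files were read.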

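That's everything. I didn't compute the accuracy on the test set; you can try that yourself. The complete code follows.

Training .py

import data_set_dog_cat
import tensorflow as tf
import numpy as np
from numpy.random import seed
# fix the random seeds so every run draws the same random values, which makes debugging easier
seed(10)
from tensorflow import set_random_seed
set_random_seed(20)
batch_size = 32  # 32 images per iteration; 1000 images in total
# class labels
classes = ['cats', 'dogs']
num_classes = len(classes)
# fraction of the data held out as the validation set
validation_size = 0.2
# every image is resized to img_size x img_size
img_size = 64
# number of color channels
num_channels = 3
# absolute path to the images (raw string, so the backslash is not treated as an escape)
train_path = r'F:\img1'
# read the data
data = data_set_dog_cat.read_train_sets(train_path, img_size, classes, validation_size=validation_size)
# kernel size and number of kernels for the first convolutional layer
# kernel sizes are usually 3, 5 and so on; the number of kernels is up to you, usually a power of two
filter_size_conv1 = 3
num_filter_conv1 = 32
# kernel size and number of kernels for the second convolutional layer
filter_size_conv2 = 3
num_filter_conv2 = 32
# kernel size and number of kernels for the third convolutional layer
filter_size_conv3 = 3
num_filter_conv3 = 64
fc_layer_size = 1024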

def create_weights(shape):
    return tf.Variable(tf.random_normal(shape, stddev=0.05))
    # return tf.Variable(tf.truncated_normal(shape, stddev=0.05))

def create_biases(size):
    return tf.Variable(tf.constant(0.05, shape=[size]))

def create_convolutional_layer(input, num_input_channels, conv_filter_size, num_filters):
    Weight = create_weights([conv_filter_size, conv_filter_size, num_input_channels, num_filters])
    biasese = create_biases(num_filters)
    layer = tf.nn.conv2d(input, Weight, strides=[1, 1, 1, 1], padding='SAME')
    layer = tf.add(layer, biasese)
    layer = tf.nn.relu(layer)
    pooling = tf.nn.max_pool(layer, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
    return pooling

def create_flatten_layer(layer):
    layer_shape = layer.get_shape()
    num_features = layer_shape[1:4].num_elements()
    layer = tf.reshape(layer, [-1, num_features])
    return layer

def create_fully_connection(inputs, num_inputs, num_outputs, activation_function=True):
    weight = create_weights([num_inputs, num_outputs])
    biases = create_biases(num_outputs)
    fully_connection = tf.add(tf.matmul(inputs, weight), biases)
    fully_connection = tf.nn.dropout(fully_connection, keep_prob=0.7)
    if activation_function:
        fully_connection = tf.nn.relu(fully_connection)
    return fully_connection

x_data = tf.placeholder(tf.float32, shape=[None, img_size, img_size, num_channels], name='x_data')
y_data = tf.placeholder(tf.float32, shape=[None, num_classes], name='y_data')
y_data_class = tf.argmax(y_data, 1)
layer_conv1 = create_convolutional_layer(input=x_data, num_input_channels=num_channels, conv_filter_size=filter_size_conv1, num_filters=num_filter_conv1)
layer_conv2 = create_convolutional_layer(input=layer_conv1, num_input_channels=num_filter_conv1, conv_filter_size=filter_size_conv2, num_filters=num_filter_conv2)
layer_conv3 = create_convolutional_layer(input=layer_conv2, num_input_channels=num_filter_conv2, conv_filter_size=filter_size_conv3, num_filters=num_filter_conv3)
layer_flat = create_flatten_layer(layer_conv3)
fc_1 = create_fully_connection(inputs=layer_flat, num_inputs=layer_flat.get_shape()[1:4].num_elements(), num_outputs=fc_layer_size, activation_function=True)
fc_2 = create_fully_connection(inputs=fc_1, num_inputs=fc_layer_size, num_outputs=num_classes, activation_function=False)
prediction = tf.nn.softmax(fc_2, name='prediction')
prediction_class = tf.argmax(prediction, 1)
cross_entrory = tf.nn.softmax_cross_entropy_with_logits(labels=y_data, logits=fc_2)
loss = tf.reduce_mean(cross_entrory)
optimizer = tf.train.AdamOptimizer(1e-4).minimize(loss)
sess = tf.Session()
sess.run(tf.global_variables_initializer())
correct_prediction = tf.equal(y_data_class, prediction_class)
accurary = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

def show_progress(epoch, feed_dict_train, feed_dict_valid, train_loss, i):
    acc = sess.run(accurary, feed_dict=feed_dict_train)
    val_acc = sess.run(accurary, feed_dict=feed_dict_valid)
    print("epoch:", str(epoch + 1) + ",i:", str(i) +
          ",acc:", str(acc) + ",val_acc:", str(val_acc) + ",train_loss:", str(train_loss))

total_iteration = 0
saver = tf.train.Saver()

def train(num_iteration):
    global total_iteration
    # number of iterations that make up one epoch
    iters_per_epoch = int(data.train._num_examples / batch_size)
    for i in range(total_iteration, num_iteration + total_iteration):
        x_batch, y_true_batch, _, cls_batch = data.train.next_batch(batch_size)
        # validation batches come from data.valid, not data.train
        x_valid_batch, y_valid_true_batch, _, cls_valid_batch = data.valid.next_batch(batch_size)
        feed_dict_train = {x_data: x_batch, y_data: y_true_batch}
        feed_dict_valid = {x_data: x_valid_batch, y_data: y_valid_true_batch}
        sess.run(optimizer, feed_dict=feed_dict_train)
        if i % iters_per_epoch == 0:
            train_loss = sess.run(loss, feed_dict=feed_dict_train)
            epoch = int(i / iters_per_epoch)
            show_progress(epoch, feed_dict_train, feed_dict_valid, train_loss, i)
            saver.save(sess, './dog-cat-model/cat-dog.ckpt', global_step=i)
    # remember where to continue if train() is called again
    total_iteration += num_iteration

train(num_iteration=8000)

Data-reading .py

import cv2
import os
import glob
from sklearn.utils import shuffle
import numpy as np

class DataSet(object):
    def __init__(self, images, labels, img_names, cls):
        self._num_examples = images.shape[0]
        self._images = images
        self._labels = labels
        self._img_names = img_names
        self._cls = cls
        self._epochs_done = 0
        self._index_in_epoch = 0

    def images(self):
        return self._images

    def labels(self):
        return self._labels

    def img_names(self):
        return self._img_names

    def cls(self):
        return self._cls

    def num_example(self):
        return self._num_examples

    def epochs_done(self):
        return self._epochs_done

    def next_batch(self, batch_size):
        """Return the next `batch_size` examples from this data set."""
        start = self._index_in_epoch
        self._index_in_epoch += batch_size
        if self._index_in_epoch > self._num_examples:
            # After each epoch we update this
            self._epochs_done += 1
            start = 0
            self._index_in_epoch = batch_size
            assert batch_size <= self._num_examples
        end = self._index_in_epoch
        return self._images[start:end], self._labels[start:end], self._img_names[start:end], self._cls[start:end]

def load_train(train_path, img_size, classes):
    images = []
    labels = []
    img_names = []
    cls = []
    print("going to read training data")
    for fields in classes:
        index = classes.index(fields)
        path = os.path.join(train_path, fields, '*g')
        files = glob.glob(path)
        for f1 in files:
            # cv2.imread returns None instead of raising when a file cannot be decoded
            image = cv2.imread(f1)
            if image is None:
                print("failed to read:", f1)
                continue
            print("read OK")
            try:
                image = cv2.resize(image, (img_size, img_size), interpolation=cv2.INTER_LINEAR)
            except cv2.error:
                # skip images that cannot be resized
                print("resize failed:", f1)
                continue
            image = image.astype(np.float32)
            image = np.multiply(image, 1.0 / 255.0)
            images.append(image)
            label = np.zeros(len(classes))
            label[index] = 1.0
            labels.append(label)
            fibase = os.path.basename(f1)
            img_names.append(fibase)
            cls.append(fields)
    images = np.array(images)
    labels = np.array(labels)
    cls = np.array(cls)
    return images, labels, img_names, cls

def read_train_sets(train_path, imag_size, classes, validation_size):
    class DataSets(object):
        pass
    data_sets = DataSets()
    images, labels, img_names, cls = load_train(train_path, imag_size, classes)
    images, labels, img_names, cls = shuffle(images, labels, img_names, cls)
    if isinstance(validation_size, float):
        validation_size = int(validation_size * images.shape[0])
    validation_images = images[:validation_size]
    validation_labels = labels[:validation_size]
    validation_img_name = img_names[:validation_size]
    validation_cls = cls[:validation_size]
    train_images = images[validation_size:]
    train_labels = labels[validation_size:]
    train_img_names = img_names[validation_size:]
    train_cls = cls[validation_size:]
    data_sets.train = DataSet(train_images, train_labels, train_img_names, train_cls)
    data_sets.valid = DataSet(validation_images, validation_labels, validation_img_name, validation_cls)
    return data_sets

Test .py

import tensorflow as tf
import numpy as np
import os, cv2

image_size = 64
num_channels = 3
images = []
path = "F:\\img1\\test"
direct = os.listdir(path)
for file in direct:
    image = cv2.imread(path + '/' + file)
    print("address:", path + '/' + file)
    if image is None:
        # cv2.imread returns None for files it cannot decode; skip them
        continue
    image = cv2.resize(image, (image_size, image_size), interpolation=cv2.INTER_LINEAR)
    images.append(image)
images = np.array(images, dtype=np.uint8)
images = images.astype(np.float32)
images = np.multiply(images, 1.0 / 255.0)

# load the model once, before the loop, rather than once per image
sess = tf.Session()
# step 1: load the network structure (graph)
saver = tf.train.import_meta_graph('./dog-cat-model/cat-dog.ckpt-7975.meta')
# step 2: load the weights
saver.restore(sess, './dog-cat-model/cat-dog.ckpt-7975')
# get the default graph
graph = tf.get_default_graph()
y_pred = graph.get_tensor_by_name("prediction:0")
x = graph.get_tensor_by_name("x_data:0")
y_true = graph.get_tensor_by_name("y_data:0")
res_label = ['cat', 'dog']
for img in images:
    x_batch = img.reshape(1, image_size, image_size, num_channels)
    y_test_images = np.zeros((1, 2))
    feed_dict_testing = {x: x_batch, y_true: y_test_images}
    result = sess.run(y_pred, feed_dict_testing)
    print(res_label[result.argmax()])

All done.