Studying the Faster R-CNN Code: rpn (Part 6)

The RPN (Region Proposal Network) is one of the key components of Faster R-CNN.

My earlier blog post "Faster R-CNN"

( https://blog.csdn.net/weixin_43872578/article/details/85869629 )

touched on how the RPN is implemented.

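Let's look at how the code files are organized:

- bbox_transform.py: bounding-box transformations.

- generate_anchors.py: generates the anchors from a set of scales and aspect ratios.

- proposal_layer.py: outputs object detection proposals by applying the estimated bounding-box transformations to a set of regular boxes (called "anchors"), then selects suitable RoIs.

- anchor_target_layer.py: matches anchors to the ground truth. It produces the classification labels and bounding-box regression targets for the anchors, i.e. the ground-truth class and coordinate-transform information that training needs for each anchor.

- proposal_target_layer_cascade.py: assigns object detection proposals to ground-truth targets. It produces the classification labels and bounding-box regression targets for the proposals, i.e. the ground-truth class and coordinate-transform information that training needs for the selected RoIs.

- rpn.py: the RPN network definition.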

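The annotations on the RPN code below draw on two posts: "A Detailed Walkthrough of the Faster R-CNN Source Code: The RPN" https://blog.csdn.net/jiongnima/article/details/79781792 and "Getting Started with Faster R-CNN: Reading the Source Code" https://www.jianshu.com/p/a223853f8402?tdsourcetag=s_pcqq_aiomsg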

1 rpn.py

This file defines the _RPN class. The annotated code follows:

import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.autograd import Variable
# cfg, _ProposalLayer, _AnchorTargetLayer and _smooth_l1_loss are defined in
# other modules of the repository; their imports are omitted in this listing.

class _RPN(nn.Module):
    """ region proposal network """
    def __init__(self, din):
        super(_RPN, self).__init__()

        # Depth (number of channels) of the input feature map, e.g. 512
        self.din = din
        # Anchor scales: __C.ANCHOR_SCALES = [8,16,32]
        self.anchor_scales = cfg.ANCHOR_SCALES
        # Anchor aspect ratios: __C.ANCHOR_RATIOS = [0.5,1,2]
        self.anchor_ratios = cfg.ANCHOR_RATIOS
        # Feature stride: __C.FEAT_STRIDE = [16, ]
        self.feat_stride = cfg.FEAT_STRIDE[0]

        # define the convrelu layers processing input feature map
        # nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True)
        self.RPN_Conv = nn.Conv2d(self.din, 512, 3, 1, 1, bias=True)

        # define bg/fg classification score layer
        # Every anchor gets a background score and a foreground score, so the
        # output depth is (number of scales) * (number of ratios) * 2.
        self.nc_score_out = len(self.anchor_scales) * len(self.anchor_ratios) * 2 # 2(bg/fg) * 9 (anchors)
        # 1x1 conv on top of RPN_Conv: 512 input channels, nc_score_out output channels
        self.RPN_cls_score = nn.Conv2d(512, self.nc_score_out, 1, 1, 0)

        # define anchor box offset prediction layer
        # Four offsets per anchor, so the output depth is (number of anchors) * 4.
        self.nc_bbox_out = len(self.anchor_scales) * len(self.anchor_ratios) * 4 # 4(coords) * 9 (anchors)
        # 1x1 conv: 512 input channels, nc_bbox_out output channels
        self.RPN_bbox_pred = nn.Conv2d(512, self.nc_bbox_out, 1, 1, 0)

        # define proposal layer
        # _ProposalLayer takes the feature stride, the scales and the ratios
        self.RPN_proposal = _ProposalLayer(self.feat_stride, self.anchor_scales, self.anchor_ratios)

        # define anchor target layer
        # _AnchorTargetLayer takes the feature stride, the scales and the ratios
        self.RPN_anchor_target = _AnchorTargetLayer(self.feat_stride, self.anchor_scales, self.anchor_ratios)

        self.rpn_loss_cls = 0  # classification loss
        self.rpn_loss_box = 0  # regression loss

    # Reshape x from (N, C, H, W) to (N, d, C*H/d, W)
    @staticmethod
    def reshape(x, d):
        input_shape = x.size()
        x = x.view(
            input_shape[0],
            int(d),
            int(float(input_shape[1] * input_shape[2]) / float(d)),
            input_shape[3]
        )
        return x

    def forward(self, base_feat, im_info, gt_boxes, num_boxes):
        # base_feat has shape (batch_size, channels, height, width),
        # so the first dimension is the batch size.
        batch_size = base_feat.size(0)

        # return feature map after convrelu layer
        rpn_conv1 = F.relu(self.RPN_Conv(base_feat), inplace=True)

        # get rpn classification score
        rpn_cls_score = self.RPN_cls_score(rpn_conv1)

        # Reshape to 2 channels (bg/fg) so that softmax can run over dimension 1
        rpn_cls_score_reshape = self.reshape(rpn_cls_score, 2)
        # Softmax turns the two scores of each anchor into probabilities
        rpn_cls_prob_reshape = F.softmax(rpn_cls_score_reshape, 1)
        # Reshape back to nc_score_out channels (bg probs first, then fg probs)
        rpn_cls_prob = self.reshape(rpn_cls_prob_reshape, self.nc_score_out)

        # get rpn offsets to the anchor boxes (4 values per anchor)
        rpn_bbox_pred = self.RPN_bbox_pred(rpn_conv1)

        # proposal layer
        cfg_key = 'TRAIN' if self.training else 'TEST'

        # Turn anchors into proposals. Inputs: class probabilities,
        # box offsets, image info and the config key.
        rois = self.RPN_proposal((rpn_cls_prob.data, rpn_bbox_pred.data,
                                 im_info, cfg_key))

        self.rpn_loss_cls = 0  # classification loss
        self.rpn_loss_box = 0  # regression loss

        # generating training labels and build the rpn loss
        if self.training:
            assert gt_boxes is not None

            # Labels and regression targets for every anchor
            rpn_data = self.RPN_anchor_target((rpn_cls_score.data, gt_boxes, im_info, num_boxes))

            # compute classification loss
            # permute reorders the dimensions of a tensor. view only works on
            # contiguous tensors, so after transpose/permute we call
            # contiguous() to get a contiguous copy before calling view.
            # Predicted bg/fg scores, one row of 2 per anchor
            rpn_cls_score = rpn_cls_score_reshape.permute(0, 2, 3, 1).contiguous().view(batch_size, -1, 2)
            # Ground-truth label of each anchor: 1 = foreground, 0 = background, -1 = ignore
            rpn_label = rpn_data[0].view(batch_size, -1)

            # Keep only the anchors whose label is not -1
            rpn_keep = Variable(rpn_label.view(-1).ne(-1).nonzero().view(-1))
            rpn_cls_score = torch.index_select(rpn_cls_score.view(-1,2), 0, rpn_keep)
            rpn_label = torch.index_select(rpn_label.view(-1), 0, rpn_keep.data)
            rpn_label = Variable(rpn_label.long())
            self.rpn_loss_cls = F.cross_entropy(rpn_cls_score, rpn_label)
            fg_cnt = torch.sum(rpn_label.data.ne(0))

            rpn_bbox_targets, rpn_bbox_inside_weights, rpn_bbox_outside_weights = rpn_data[1:]

            # compute bbox regression loss
            # Inside weights select which anchors contribute to the box loss;
            # they are produced by anchor_target_layer.py (not shown here).
            rpn_bbox_inside_weights = Variable(rpn_bbox_inside_weights)
            # Outside weights scale each anchor's term in the box loss;
            # they also come from anchor_target_layer.py.
            rpn_bbox_outside_weights = Variable(rpn_bbox_outside_weights)
            # The four ground-truth offsets for each anchor
            rpn_bbox_targets = Variable(rpn_bbox_targets)

            # RPN box loss; note that both inside and outside weights are used here
            self.rpn_loss_box = _smooth_l1_loss(rpn_bbox_pred, rpn_bbox_targets, rpn_bbox_inside_weights,
                                                rpn_bbox_outside_weights, sigma=3, dim=[1,2,3])

        return rois, self.rpn_loss_cls, self.rpn_loss_box
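The reshape, softmax, reshape sequence in forward is easy to misread, so here is a small sketch of it. It uses NumPy instead of PyTorch (an assumption made only to keep the example light; reshape on a contiguous array follows the same index arithmetic as view), and the sizes B, A, H, W are made-up values. It suggests why the first A channels of the result hold background probabilities and the last A channels hold foreground probabilities.

```python
import numpy as np

def softmax(x, axis):
    """Numerically stable softmax along one axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

B, A, H, W = 1, 9, 4, 5          # batch, anchors per cell, feature map height and width
rng = np.random.default_rng(0)
cls_score = rng.standard_normal((B, 2 * A, H, W))   # stand-in for rpn_cls_score

# Same arithmetic as _RPN.reshape(x, 2): (B, 2A, H, W) -> (B, 2, A*H, W).
# Channel a and channel a + A of the input end up at the same position
# along dimension 1, so softmax over that dimension pairs them.
score_reshape = cls_score.reshape(B, 2, A * H, W)
prob_reshape = softmax(score_reshape, axis=1)
cls_prob = prob_reshape.reshape(B, 2 * A, H, W)     # back to (B, 2A, H, W)

bg_prob = cls_prob[:, :A]   # channels 0 .. A-1
fg_prob = cls_prob[:, A:]   # channels A .. 2A-1, the slice the proposal layer reads
print(cls_prob.shape, np.allclose(bg_prob + fg_prob, 1.0))
```

For every anchor at every location, the two probabilities sum to one, which is the property the proposal layer relies on when it slices off the second half of the channels.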

2 generate_anchors.py

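This part is fairly simple: it enumerates the anchors for every combination of scale and aspect ratio (three of each here, so nine anchors) and stores them all in one array named anchors.

The annotated code follows: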

import numpy as np

# Verify that we compute the same anchors as Shaoqing's matlab implementation:
#
#    >> load output/rpn_cachedir/faster_rcnn_VOC2007_ZF_stage1_rpn/anchors.mat
#    >> anchors
#
#    anchors =    % the 9 anchors
#
#       -83   -39   100    56
#      -175   -87   192   104
#      -359  -183   376   200
#       -55   -55    72    72
#      -119  -119   136   136
#      -247  -247   264   264
#       -35   -79    52    96
#       -79  -167    96   184
#      -167  -343   184   360

#array([[ -83.,  -39.,  100.,   56.],
#       [-175.,  -87.,  192.,  104.],
#       [-359., -183.,  376.,  200.],
#       [ -55.,  -55.,   72.,   72.],
#       [-119., -119.,  136.,  136.],
#       [-247., -247.,  264.,  264.],
#       [ -35.,  -79.,   52.,   96.],
#       [ -79., -167.,   96.,  184.],
#       [-167., -343.,  184.,  360.]])
# Note: the values above are MATLAB (1-based) coordinates. generate_anchors()
# below works with 0-based coordinates and returns each value minus 1,
# e.g. [-84, -40, 99, 55] for the first row.

try:
    xrange          # Python 2
except NameError:
    xrange = range  # Python 3


# np.arange(3, 6) is [3, 4, 5], so the default scales are [8, 16, 32]
def generate_anchors(base_size=16, ratios=[0.5, 1, 2],
                     scales=2**np.arange(3, 6)):
    """
    Generate anchor (reference) windows by enumerating aspect ratios X
    scales wrt a reference (0, 0, 15, 15) window.
    """

    base_anchor = np.array([1, 1, base_size, base_size]) - 1
    ratio_anchors = _ratio_enum(base_anchor, ratios)
    # np.vstack(tup) accepts a tuple, list or NumPy array and stacks the
    # pieces vertically (row by row) into one array.
    anchors = np.vstack([_scale_enum(ratio_anchors[i, :], scales)
                         for i in xrange(ratio_anchors.shape[0])])
    return anchors

# Width, height and center coordinates of an anchor
def _whctrs(anchor):
    """
    Return width, height, x center, and y center for an anchor (window).
    """

    w = anchor[2] - anchor[0] + 1
    h = anchor[3] - anchor[1] + 1
    x_ctr = anchor[0] + 0.5 * (w - 1)
    y_ctr = anchor[1] + 0.5 * (h - 1)
    return w, h, x_ctr, y_ctr

# Build the anchors from widths/heights, stacking x1, y1, x2, y2 as columns
def _mkanchors(ws, hs, x_ctr, y_ctr):
    """
    Given a vector of widths (ws) and heights (hs) around a center
    (x_ctr, y_ctr), output a set of anchors (windows).
    """

    ws = ws[:, np.newaxis]  # np.newaxis is an alias for None; it adds an axis
    hs = hs[:, np.newaxis]
    anchors = np.hstack((x_ctr - 0.5 * (ws - 1),
                         y_ctr - 0.5 * (hs - 1),
                         x_ctr + 0.5 * (ws - 1),
                         y_ctr + 0.5 * (hs - 1)))
    return anchors

# One anchor per aspect ratio
def _ratio_enum(anchor, ratios):
    """
    Enumerate a set of anchors for each aspect ratio wrt an anchor.
    """

    w, h, x_ctr, y_ctr = _whctrs(anchor)  # width, height and center of the base anchor
    size = w * h
    size_ratios = size / ratios           # area divided by each ratio (h / w)
    ws = np.round(np.sqrt(size_ratios))
    hs = np.round(ws * ratios)
    anchors = _mkanchors(ws, hs, x_ctr, y_ctr)  # the anchors for these ratios
    return anchors

# One anchor per scale
def _scale_enum(anchor, scales):
    """
    Enumerate a set of anchors for each scale wrt an anchor.
    """

    w, h, x_ctr, y_ctr = _whctrs(anchor)
    ws = w * scales
    hs = h * scales
    anchors = _mkanchors(ws, hs, x_ctr, y_ctr)  # the anchors for these scales
    return anchors

if __name__ == '__main__':
    import time
    t = time.time()
    a = generate_anchors()  # the resulting anchors
    print(time.time() - t)
    print(a)
    from IPython import embed; embed()
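To check the arithmetic by hand, the sketch below recomputes the nine anchors in plain Python without NumPy. The helper names (whctrs, mkanchor, simple_anchors) are made up for this example and are not part of the repository. It takes a small shortcut: because every ratio anchor keeps the center (7.5, 7.5) of the base window, the scale step is applied directly to the rounded width and height.

```python
import math

def whctrs(box):
    """Width, height and center of an (x1, y1, x2, y2) box with inclusive pixel coordinates."""
    w = box[2] - box[0] + 1
    h = box[3] - box[1] + 1
    return w, h, box[0] + 0.5 * (w - 1), box[1] + 0.5 * (h - 1)

def mkanchor(w, h, x_ctr, y_ctr):
    """Box of size (w, h) centered at (x_ctr, y_ctr)."""
    return (x_ctr - 0.5 * (w - 1), y_ctr - 0.5 * (h - 1),
            x_ctr + 0.5 * (w - 1), y_ctr + 0.5 * (h - 1))

def simple_anchors(base_size=16, ratios=(0.5, 1, 2), scales=(8, 16, 32)):
    """Enumerate ratio x scale anchors around the (0, 0, 15, 15) base window."""
    w, h, x_ctr, y_ctr = whctrs((0, 0, base_size - 1, base_size - 1))
    anchors = []
    for r in ratios:
        # Keep the area roughly constant while setting h / w to r.
        rw = round(math.sqrt(w * h / r))
        rh = round(rw * r)
        for s in scales:
            anchors.append(mkanchor(rw * s, rh * s, x_ctr, y_ctr))
    return anchors

anchors = simple_anchors()
for a in anchors:
    print(a)
```

The first anchor comes out as (-84.0, -40.0, 99.0, 55.0), which is the first MATLAB row quoted in the file header with every coordinate shifted by one, as expected when moving from 1-based to 0-based pixel indices.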

3 proposal_layer.py

This layer turns the anchors into proposals. It uses NMS, which will be covered later. The annotated code follows:

import numpy as np
import torch
import torch.nn as nn
# cfg, generate_anchors, bbox_transform_inv, clip_boxes and nms are defined in
# other modules of the repository; their imports are omitted in this listing.

class _ProposalLayer(nn.Module):
    """
    Outputs object detection proposals by applying estimated bounding-box
    transformations to a set of regular boxes (called "anchors").
    """

    # Arguments: feature stride, scales, ratios
    def __init__(self, feat_stride, scales, ratios):
        super(_ProposalLayer, self).__init__()

        # Feature stride
        self._feat_stride = feat_stride
        # All base anchors
        self._anchors = torch.from_numpy(generate_anchors(scales=np.array(scales),
            ratios=np.array(ratios))).float()
        # The number of rows is the number of base anchors
        self._num_anchors = self._anchors.size(0)

        # rois blob: holds R regions of interest, each is a 5-tuple
        # (n, x1, y1, x2, y2) specifying an image batch index n and a
        # rectangle (x1, y1, x2, y2)
        # top[0].reshape(1, 5)
        #
        # # scores blob: holds scores for R regions of interest
        # if len(top) > 1:
        #     top[1].reshape(1, 1, 1, 1)

    def forward(self, input):

        # Algorithm:
        #
        # for each (H, W) location i
        #   generate A anchor boxes centered on cell i
        #   apply predicted bbox deltas at cell i to each of the A anchors
        # clip predicted boxes to image
        # remove predicted boxes with either height or width < threshold
        # sort all (proposal, score) pairs by score from highest to lowest
        # take top pre_nms_topN proposals before NMS
        # apply NMS with threshold 0.7 to remaining proposals
        # take after_nms_topN proposals after NMS
        # return the top proposals (-> RoIs top, scores top)

        # the first set of _num_anchors channels are bg probs
        # the second set are the fg probs
        scores = input[0][:, self._num_anchors:, :, :]  # foreground probabilities
        bbox_deltas = input[1]                          # predicted box offsets
        im_info = input[2]                              # image info
        cfg_key = input[3]                              # 'TRAIN' or 'TEST'

        # Read the settings for this mode
        pre_nms_topN  = cfg[cfg_key].RPN_PRE_NMS_TOP_N
        post_nms_topN = cfg[cfg_key].RPN_POST_NMS_TOP_N
        nms_thresh    = cfg[cfg_key].RPN_NMS_THRESH
        min_size      = cfg[cfg_key].RPN_MIN_SIZE

        # Batch size
        batch_size = bbox_deltas.size(0)

        # Place the anchors on the input image: each feature-map cell maps
        # to an offset of feat_stride pixels in the image.
        feat_height, feat_width = scores.size(2), scores.size(3)
        shift_x = np.arange(0, feat_width) * self._feat_stride   # shape: [width,]
        shift_y = np.arange(0, feat_height) * self._feat_stride  # shape: [height,]
        # Build the grid; shift_x and shift_y both get shape [height, width]
        shift_x, shift_y = np.meshgrid(shift_x, shift_y)
        shifts = torch.from_numpy(np.vstack((shift_x.ravel(), shift_y.ravel(),
                                  shift_x.ravel(), shift_y.ravel())).transpose())  # shape: [height*width, 4]
        shifts = shifts.contiguous().type_as(scores).float()

        A = self._num_anchors
        K = shifts.size(0)

        self._anchors = self._anchors.type_as(scores)
        # anchors = self._anchors.view(1, A, 4) + shifts.view(1, K, 4).permute(1, 0, 2).contiguous()
        anchors = self._anchors.view(1, A, 4) + shifts.view(K, 1, 4)
        anchors = anchors.view(1, K * A, 4).expand(batch_size, K * A, 4)

        # Transpose and reshape predicted bbox transformations to get them
        # into the same order as the anchors:
        bbox_deltas = bbox_deltas.permute(0, 2, 3, 1).contiguous()
        bbox_deltas = bbox_deltas.view(batch_size, -1, 4)

        # Same story for the scores:
        scores = scores.permute(0, 2, 3, 1).contiguous()
        scores = scores.view(batch_size, -1)

        # Convert anchors into proposals via bbox transformations
        proposals = bbox_transform_inv(anchors, bbox_deltas, batch_size)

        # 2. clip predicted boxes to image
        # (forces all four corners of every proposal inside the image)
        proposals = clip_boxes(proposals, im_info, batch_size)
        # proposals = clip_boxes_batch(proposals, im_info, batch_size)

        # assign the score to 0 if it's non keep.
        # keep = self._filter_boxes(proposals, min_size * im_info[:, 2])

        # trim keep index to make it equal over batch
        # keep_idx = torch.cat(tuple(keep_idx), 0)

        # scores_keep = scores.view(-1)[keep_idx].view(batch_size, trim_size)
        # proposals_keep = proposals.view(-1, 4)[keep_idx, :].contiguous().view(batch_size, trim_size, 4)

        # _, order = torch.sort(scores_keep, 1, True)

        # NOTE: the min_size filtering above is commented out in this version,
        # so every clipped proposal is kept at this point.
        scores_keep = scores
        proposals_keep = proposals
        _, order = torch.sort(scores_keep, 1, True)

        output = scores.new(batch_size, post_nms_topN, 5).zero_()
        for i in range(batch_size):
            # # 3. remove predicted boxes with either height or width < threshold
            # # (NOTE: convert min_size to input image scale stored in im_info[2])
            proposals_single = proposals_keep[i]
            scores_single = scores_keep[i]

            # # 4. sort all (proposal, score) pairs by score from highest to lowest
            # # 5. take top pre_nms_topN (e.g. 6000)
            order_single = order[i]

            if pre_nms_topN > 0 and pre_nms_topN < scores_keep.numel():
                order_single = order_single[:pre_nms_topN]

            proposals_single = proposals_single[order_single, :]
            scores_single = scores_single[order_single].view(-1,1)

            # 6. apply nms (e.g. threshold = 0.7)
            # 7. take after_nms_topN (e.g. 300)
            # 8. return the top proposals (-> RoIs top)

            keep_idx_i = nms(torch.cat((proposals_single, scores_single), 1), nms_thresh, force_cpu=not cfg.USE_GPU_NMS)
            keep_idx_i = keep_idx_i.long().view(-1)

            if post_nms_topN > 0:
                keep_idx_i = keep_idx_i[:post_nms_topN]
            proposals_single = proposals_single[keep_idx_i, :]
            scores_single = scores_single[keep_idx_i, :]

            # padding 0 at the end.
            num_proposal = proposals_single.size(0)
            output[i,:,0] = i
            output[i,:num_proposal,1:] = proposals_single

        return output

    def backward(self, top, propagate_down, bottom):
        """This layer does not propagate gradients."""
        pass

    def reshape(self, bottom, top):
        """Reshaping happens during the call to forward."""
        pass

    # Mark which boxes have both sides at least min_size
    def _filter_boxes(self, boxes, min_size):
        """Remove all boxes with any side smaller than min_size."""
        ws = boxes[:, :, 2] - boxes[:, :, 0] + 1
        hs = boxes[:, :, 3] - boxes[:, :, 1] + 1
        # expand_as(ws) expands a tensor to the size of ws
        keep = ((ws >= min_size.view(-1,1).expand_as(ws)) & (hs >= min_size.view(-1,1).expand_as(hs)))
        return keep
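The step in forward that places the base anchors at every feature-map cell relies on broadcasting, which is compact but hard to picture. The following NumPy sketch reproduces just that step on a tiny grid. The 2 x 3 feature map and the choice of only two base anchors are assumptions made for readability; the real layer uses all nine anchors and the actual feature map size.

```python
import numpy as np

feat_stride = 16
feat_height, feat_width = 2, 3
# Two of the nine base anchors (x1, y1, x2, y2), enough to show the pattern.
base_anchors = np.array([[-56., -56., 71., 71.],
                         [-120., -120., 135., 135.]])

shift_x = np.arange(feat_width) * feat_stride      # [0, 16, 32]
shift_y = np.arange(feat_height) * feat_stride     # [0, 16]
shift_x, shift_y = np.meshgrid(shift_x, shift_y)   # both (feat_height, feat_width)
shifts = np.stack([shift_x.ravel(), shift_y.ravel(),
                   shift_x.ravel(), shift_y.ravel()], axis=1)   # (K, 4)

A, K = base_anchors.shape[0], shifts.shape[0]
# Broadcast (1, A, 4) + (K, 1, 4) -> (K, A, 4), then flatten to (K * A, 4).
# Row k * A + a is base anchor a moved to grid cell k (cells in row-major order).
all_anchors = (base_anchors.reshape(1, A, 4) + shifts.reshape(K, 1, 4)).reshape(K * A, 4)
print(all_anchors.shape)
print(all_anchors[2])    # first base anchor shifted 16 pixels to the right
```

The resulting order (row, column, anchor) is the same order that permute(0, 2, 3, 1) followed by view gives to bbox_deltas and scores, which is why the layer can pair them index by index.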

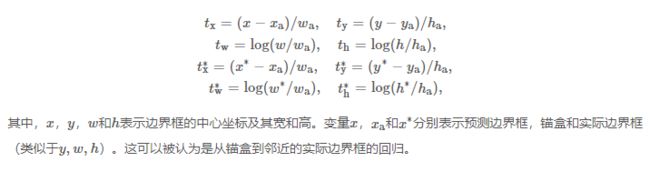
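The proposal layer calls nms without showing what it does. As a rough illustration only, here is a minimal greedy NMS in plain Python. It is a sketch under the assumption of the same inclusive-pixel (+1) box convention used elsewhere in this code, and the function names iou and greedy_nms are made up here; the repository's own nms is a compiled CPU/GPU implementation with a different interface.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes using the inclusive-pixel (+1) convention."""
    iw = min(a[2], b[2]) - max(a[0], b[0]) + 1
    ih = min(a[3], b[3]) - max(a[1], b[1]) + 1
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter)

def greedy_nms(boxes, scores, thresh):
    """Return the indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)          # highest-scoring box still in play
        keep.append(best)
        # Drop every remaining box that overlaps the kept one too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= thresh]
    return keep

boxes = [(0, 0, 99, 99), (5, 5, 104, 104), (200, 200, 299, 299)]
scores = [0.9, 0.8, 0.7]
keep = greedy_nms(boxes, scores, thresh=0.7)
print(keep)
```

In this toy input the second box overlaps the first with an IoU of about 0.82, above the 0.7 threshold, so it is suppressed and only the first and third boxes survive.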

4 bbox_transform.py

This file is just a collection of box transformations. The annotated code follows:

import torch

# bbox_transform computes the coordinate-transform targets for the anchors.
# Note that the boxes are first converted to center coordinates plus
# width and height.
def bbox_transform(ex_rois, gt_rois):
    # Width, height and center of each anchor
    ex_widths = ex_rois[:, 2] - ex_rois[:, 0] + 1.0
    ex_heights = ex_rois[:, 3] - ex_rois[:, 1] + 1.0
    ex_ctr_x = ex_rois[:, 0] + 0.5 * ex_widths
    ex_ctr_y = ex_rois[:, 1] + 0.5 * ex_heights

    # Width, height and center of the ground-truth box matched to each anchor
    gt_widths = gt_rois[:, 2] - gt_rois[:, 0] + 1.0
    gt_heights = gt_rois[:, 3] - gt_rois[:, 1] + 1.0
    gt_ctr_x = gt_rois[:, 0] + 0.5 * gt_widths
    gt_ctr_y = gt_rois[:, 1] + 0.5 * gt_heights

    # The four transform values
    targets_dx = (gt_ctr_x - ex_ctr_x) / ex_widths
    targets_dy = (gt_ctr_y - ex_ctr_y) / ex_heights
    targets_dw = torch.log(gt_widths / ex_widths)
    targets_dh = torch.log(gt_heights / ex_heights)

    # Four values per anchor; stacking along dim 1 gives shape [num_anchor, 4]
    targets = torch.stack(
        (targets_dx, targets_dy, targets_dw, targets_dh),1)

    return targets

def bbox_transform_batch(ex_rois, gt_rois):

    if ex_rois.dim() == 2:
        ex_widths = ex_rois[:, 2] - ex_rois[:, 0] + 1.0
        ex_heights = ex_rois[:, 3] - ex_rois[:, 1] + 1.0
        ex_ctr_x = ex_rois[:, 0] + 0.5 * ex_widths
        ex_ctr_y = ex_rois[:, 1] + 0.5 * ex_heights

        gt_widths = gt_rois[:, :, 2] - gt_rois[:, :, 0] + 1.0
        gt_heights = gt_rois[:, :, 3] - gt_rois[:, :, 1] + 1.0
        gt_ctr_x = gt_rois[:, :, 0] + 0.5 * gt_widths
        gt_ctr_y = gt_rois[:, :, 1] + 0.5 * gt_heights

        targets_dx = (gt_ctr_x - ex_ctr_x.view(1,-1).expand_as(gt_ctr_x)) / ex_widths
        targets_dy = (gt_ctr_y - ex_ctr_y.view(1,-1).expand_as(gt_ctr_y)) / ex_heights
        targets_dw = torch.log(gt_widths / ex_widths.view(1,-1).expand_as(gt_widths))
        targets_dh = torch.log(gt_heights / ex_heights.view(1,-1).expand_as(gt_heights))

    elif ex_rois.dim() == 3:
        ex_widths = ex_rois[:, :, 2] - ex_rois[:, :, 0] + 1.0
        ex_heights = ex_rois[:,:, 3] - ex_rois[:,:, 1] + 1.0
        ex_ctr_x = ex_rois[:, :, 0] + 0.5 * ex_widths
        ex_ctr_y = ex_rois[:, :, 1] + 0.5 * ex_heights

        gt_widths = gt_rois[:, :, 2] - gt_rois[:, :, 0] + 1.0
        gt_heights = gt_rois[:, :, 3] - gt_rois[:, :, 1] + 1.0
        gt_ctr_x = gt_rois[:, :, 0] + 0.5 * gt_widths
        gt_ctr_y = gt_rois[:, :, 1] + 0.5 * gt_heights

        targets_dx = (gt_ctr_x - ex_ctr_x) / ex_widths
        targets_dy = (gt_ctr_y - ex_ctr_y) / ex_heights
        targets_dw = torch.log(gt_widths / ex_widths)
        targets_dh = torch.log(gt_heights / ex_heights)
    else:
        raise ValueError('ex_roi input dimension is not correct.')

    targets = torch.stack(
        (targets_dx, targets_dy, targets_dw, targets_dh),2)

    return targets

# bbox_transform_inv applies the offsets predicted by the RPN to all of the
# initial boxes (the anchors).
def bbox_transform_inv(boxes, deltas, batch_size):
    # Center, width and height of the initial boxes
    widths = boxes[:, :, 2] - boxes[:, :, 0] + 1.0
    heights = boxes[:, :, 3] - boxes[:, :, 1] + 1.0
    ctr_x = boxes[:, :, 0] + 0.5 * widths
    ctr_y = boxes[:, :, 1] + 0.5 * heights

    # The predicted transform values
    dx = deltas[:, :, 0::4]
    dy = deltas[:, :, 1::4]
    dw = deltas[:, :, 2::4]
    dh = deltas[:, :, 3::4]

    # Center, width and height of the transformed boxes
    pred_ctr_x = dx * widths.unsqueeze(2) + ctr_x.unsqueeze(2)
    pred_ctr_y = dy * heights.unsqueeze(2) + ctr_y.unsqueeze(2)
    pred_w = torch.exp(dw) * widths.unsqueeze(2)
    pred_h = torch.exp(dh) * heights.unsqueeze(2)

    # Convert center/size back to top-left and bottom-right corners
    pred_boxes = deltas.clone()
    # x1
    pred_boxes[:, :, 0::4] = pred_ctr_x - 0.5 * pred_w
    # y1
    pred_boxes[:, :, 1::4] = pred_ctr_y - 0.5 * pred_h
    # x2
    pred_boxes[:, :, 2::4] = pred_ctr_x + 0.5 * pred_w
    # y2
    pred_boxes[:, :, 3::4] = pred_ctr_y + 0.5 * pred_h

    return pred_boxes

def clip_boxes_batch(boxes, im_shape, batch_size):
    """
    Clip boxes to image boundaries.
    """
    num_rois = boxes.size(1)

    boxes[boxes < 0] = 0
    # batch_x = (im_shape[:,0]-1).view(batch_size, 1).expand(batch_size, num_rois)
    # batch_y = (im_shape[:,1]-1).view(batch_size, 1).expand(batch_size, num_rois)

    batch_x = im_shape[:, 1] - 1
    batch_y = im_shape[:, 0] - 1

    boxes[:,:,0][boxes[:,:,0] > batch_x] = batch_x
    boxes[:,:,1][boxes[:,:,1] > batch_y] = batch_y
    boxes[:,:,2][boxes[:,:,2] > batch_x] = batch_x
    boxes[:,:,3][boxes[:,:,3] > batch_y] = batch_y

    return boxes

# Force all four corners of every proposal inside the image.
# im_shape[i] holds (height, width, ...) of image i.
def clip_boxes(boxes, im_shape, batch_size):

    for i in range(batch_size):
        boxes[i,:,0::4].clamp_(0, im_shape[i, 1]-1)
        boxes[i,:,1::4].clamp_(0, im_shape[i, 0]-1)
        boxes[i,:,2::4].clamp_(0, im_shape[i, 1]-1)
        boxes[i,:,3::4].clamp_(0, im_shape[i, 0]-1)

    return boxes

# Overlap (IoU) between boxes: the intersection area divided by the union
# area, where the union is the sum of both areas minus the intersection.
def bbox_overlaps(anchors, gt_boxes):
    """
    anchors: (N, 4) tensor of float
    gt_boxes: (K, 4) tensor of float

    overlaps: (N, K) tensor of overlap between boxes and query_boxes
    """
    N = anchors.size(0)
    K = gt_boxes.size(0)

    gt_boxes_area = ((gt_boxes[:,2] - gt_boxes[:,0] + 1) *
                (gt_boxes[:,3] - gt_boxes[:,1] + 1)).view(1, K)

    anchors_area = ((anchors[:,2] - anchors[:,0] + 1) *
                (anchors[:,3] - anchors[:,1] + 1)).view(N, 1)

    boxes = anchors.view(N, 1, 4).expand(N, K, 4)
    query_boxes = gt_boxes.view(1, K, 4).expand(N, K, 4)

    iw = (torch.min(boxes[:,:,2], query_boxes[:,:,2]) -
        torch.max(boxes[:,:,0], query_boxes[:,:,0]) + 1)
    iw[iw < 0] = 0

    ih = (torch.min(boxes[:,:,3], query_boxes[:,:,3]) -
        torch.max(boxes[:,:,1], query_boxes[:,:,1]) + 1)
    ih[ih < 0] = 0

    ua = anchors_area + gt_boxes_area - (iw * ih)
    overlaps = iw * ih / ua

    return overlaps

def bbox_overlaps_batch(anchors, gt_boxes):
    """
    anchors: (N, 4) tensor of float, or a batched (b, N, 4) / (b, N, 5) tensor
    gt_boxes: (b, K, 5) tensor of float

    overlaps: (b, N, K) tensor of overlap between boxes and query_boxes
    """
    batch_size = gt_boxes.size(0)

    if anchors.dim() == 2:

        N = anchors.size(0)
        K = gt_boxes.size(1)

        anchors = anchors.view(1, N, 4).expand(batch_size, N, 4).contiguous()
        gt_boxes = gt_boxes[:,:,:4].contiguous()

        gt_boxes_x = (gt_boxes[:,:,2] - gt_boxes[:,:,0] + 1)
        gt_boxes_y = (gt_boxes[:,:,3] - gt_boxes[:,:,1] + 1)
        gt_boxes_area = (gt_boxes_x * gt_boxes_y).view(batch_size, 1, K)

        anchors_boxes_x = (anchors[:,:,2] - anchors[:,:,0] + 1)
        anchors_boxes_y = (anchors[:,:,3] - anchors[:,:,1] + 1)
        anchors_area = (anchors_boxes_x * anchors_boxes_y).view(batch_size, N, 1)

        # Boxes whose width and height are both 1 are treated as empty (padding)
        gt_area_zero = (gt_boxes_x == 1) & (gt_boxes_y == 1)
        anchors_area_zero = (anchors_boxes_x == 1) & (anchors_boxes_y == 1)

        boxes = anchors.view(batch_size, N, 1, 4).expand(batch_size, N, K, 4)
        query_boxes = gt_boxes.view(batch_size, 1, K, 4).expand(batch_size, N, K, 4)

        iw = (torch.min(boxes[:,:,:,2], query_boxes[:,:,:,2]) -
            torch.max(boxes[:,:,:,0], query_boxes[:,:,:,0]) + 1)
        iw[iw < 0] = 0

        ih = (torch.min(boxes[:,:,:,3], query_boxes[:,:,:,3]) -
            torch.max(boxes[:,:,:,1], query_boxes[:,:,:,1]) + 1)
        ih[ih < 0] = 0
        ua = anchors_area + gt_boxes_area - (iw * ih)
        overlaps = iw * ih / ua

        # mask the overlap here.
        overlaps.masked_fill_(gt_area_zero.view(batch_size, 1, K).expand(batch_size, N, K), 0)
        overlaps.masked_fill_(anchors_area_zero.view(batch_size, N, 1).expand(batch_size, N, K), -1)

    elif anchors.dim() == 3:
        N = anchors.size(1)
        K = gt_boxes.size(1)

        # A 5-column input carries the batch index in column 0; drop it
        if anchors.size(2) == 4:
            anchors = anchors[:,:,:4].contiguous()
        else:
            anchors = anchors[:,:,1:5].contiguous()

        gt_boxes = gt_boxes[:,:,:4].contiguous()

        gt_boxes_x = (gt_boxes[:,:,2] - gt_boxes[:,:,0] + 1)
        gt_boxes_y = (gt_boxes[:,:,3] - gt_boxes[:,:,1] + 1)
        gt_boxes_area = (gt_boxes_x * gt_boxes_y).view(batch_size, 1, K)

        anchors_boxes_x = (anchors[:,:,2] - anchors[:,:,0] + 1)
        anchors_boxes_y = (anchors[:,:,3] - anchors[:,:,1] + 1)
        anchors_area = (anchors_boxes_x * anchors_boxes_y).view(batch_size, N, 1)

        gt_area_zero = (gt_boxes_x == 1) & (gt_boxes_y == 1)
        anchors_area_zero = (anchors_boxes_x == 1) & (anchors_boxes_y == 1)

        boxes = anchors.view(batch_size, N, 1, 4).expand(batch_size, N, K, 4)
        query_boxes = gt_boxes.view(batch_size, 1, K, 4).expand(batch_size, N, K, 4)

        iw = (torch.min(boxes[:,:,:,2], query_boxes[:,:,:,2]) -
            torch.max(boxes[:,:,:,0], query_boxes[:,:,:,0]) + 1)
        iw[iw < 0] = 0

        ih = (torch.min(boxes[:,:,:,3], query_boxes[:,:,:,3]) -
            torch.max(boxes[:,:,:,1], query_boxes[:,:,:,1]) + 1)
        ih[ih < 0] = 0
        ua = anchors_area + gt_boxes_area - (iw * ih)

        overlaps = iw * ih / ua

        # mask the overlap here.
        overlaps.masked_fill_(gt_area_zero.view(batch_size, 1, K).expand(batch_size, N, K), 0)
        overlaps.masked_fill_(anchors_area_zero.view(batch_size, N, 1).expand(batch_size, N, K), -1)
    else:
        raise ValueError('anchors input dimension is not correct.')

    return overlaps
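To make the relationship between bbox_transform and bbox_transform_inv concrete, here is a single-box version of both in plain Python. The names encode and decode and the two sample boxes are made up for this sketch; the arithmetic follows the tensor code above line by line.

```python
import math

def encode(anchor, gt):
    """(dx, dy, dw, dh) that maps `anchor` onto `gt`; boxes are (x1, y1, x2, y2)."""
    aw, ah = anchor[2] - anchor[0] + 1.0, anchor[3] - anchor[1] + 1.0
    ax, ay = anchor[0] + 0.5 * aw, anchor[1] + 0.5 * ah
    gw, gh = gt[2] - gt[0] + 1.0, gt[3] - gt[1] + 1.0
    gx, gy = gt[0] + 0.5 * gw, gt[1] + 0.5 * gh
    return ((gx - ax) / aw, (gy - ay) / ah, math.log(gw / aw), math.log(gh / ah))

def decode(anchor, deltas):
    """Apply (dx, dy, dw, dh) to `anchor`, as bbox_transform_inv does for one box."""
    aw, ah = anchor[2] - anchor[0] + 1.0, anchor[3] - anchor[1] + 1.0
    ax, ay = anchor[0] + 0.5 * aw, anchor[1] + 0.5 * ah
    dx, dy, dw, dh = deltas
    cx, cy = dx * aw + ax, dy * ah + ay
    w, h = math.exp(dw) * aw, math.exp(dh) * ah
    return (cx - 0.5 * w, cy - 0.5 * h, cx + 0.5 * w, cy + 0.5 * h)

anchor = (0.0, 0.0, 15.0, 15.0)
gt = (4.0, 2.0, 27.0, 25.0)
deltas = encode(anchor, gt)
restored = decode(anchor, deltas)
print(deltas)
print(restored)
```

Here the deltas are (0.5, 0.375, log 1.5, log 1.5). Decoding recovers x1 and y1 exactly, while x2 and y2 come back as 28 and 26 rather than 27 and 25: widths are measured with a +1, and the inverse transform as written does not subtract it again.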

5 anchor_target_layer.py

Finds, for each anchor, the ground-truth class and coordinate-transform information needed for training.
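Since the code for this file is not listed yet, the following is only a sketch of the labeling rule described in the Faster R-CNN paper, not the repository's implementation. The thresholds 0.7 and 0.3 are the paper's values and are assumed defaults here, and label_anchors is a hypothetical helper name. The label -1 marks anchors that are ignored, matching the ne(-1) filter in rpn.py. The real layer also subsamples the anchors and computes regression targets, which this sketch leaves out.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes using the inclusive-pixel (+1) convention."""
    iw = min(a[2], b[2]) - max(a[0], b[0]) + 1
    ih = min(a[3], b[3]) - max(a[1], b[1]) + 1
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    union = ((a[2] - a[0] + 1) * (a[3] - a[1] + 1)
             + (b[2] - b[0] + 1) * (b[3] - b[1] + 1) - inter)
    return inter / union

def label_anchors(anchors, gt_boxes, pos_thresh=0.7, neg_thresh=0.3):
    """One label per anchor: 1 = foreground, 0 = background, -1 = ignored."""
    overlaps = [[iou(a, g) for g in gt_boxes] for a in anchors]
    labels = [-1] * len(anchors)
    for i, row in enumerate(overlaps):
        best = max(row)
        if best < neg_thresh:
            labels[i] = 0
        elif best >= pos_thresh:
            labels[i] = 1
    # Every ground-truth box also claims the anchor(s) that overlap it most,
    # so that no object is left without a positive anchor.
    for j in range(len(gt_boxes)):
        column = [row[j] for row in overlaps]
        best = max(column)
        if best > 0:
            for i, value in enumerate(column):
                if value == best:
                    labels[i] = 1
    return labels

anchors = [(0, 0, 15, 15), (4, 0, 19, 15), (100, 100, 115, 115), (44, 44, 59, 59)]
gt_boxes = [(0, 0, 15, 15), (40, 40, 55, 55)]
labels = label_anchors(anchors, gt_boxes)
print(labels)
```

In this toy input the second anchor has an IoU of 0.6 with the first object, which falls between the two thresholds, so it is ignored. The last anchor only reaches an IoU of about 0.39 with the second object but is still labeled foreground because it is that object's best match.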

6 proposal_target_layer_cascade.py

Finds, for each selected RoI, the ground-truth class and coordinate-transform information needed for training.

[Placeholder, to be continued…]