VggNet10模型的cifar10深度学习训练

目录

一:数据准备:

二:VGG模型

三:代码部分

1.input_data.py

2.VGG.py

3.tools.py

4.train_and_val.py

一:数据准备:

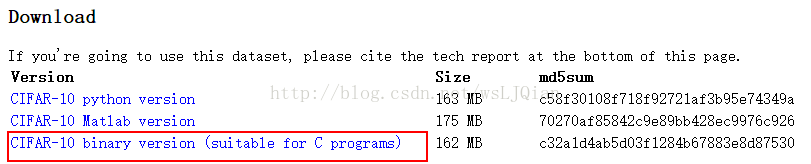

先放些链接,cifar10的数据集的下载地址:http://www.cs.toronto.edu/~kriz/cifar.html

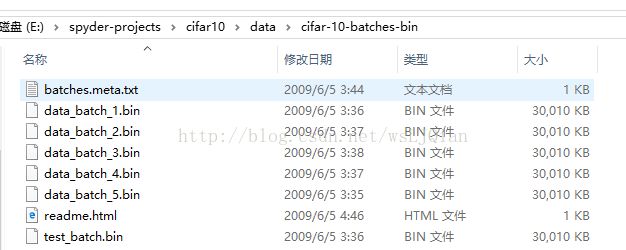

用二进制tfcords的数据集训练,下载第三个,下载的数据文件集是这样的

上面下载的文件中,data_batch_(num).bin是训练集,一共有5个训练集;test_batch.bin为测试集

在数据集输读入的时候,也将会根据文件名来获取这些数据,后面代码中将会体现到

二:VGG模型

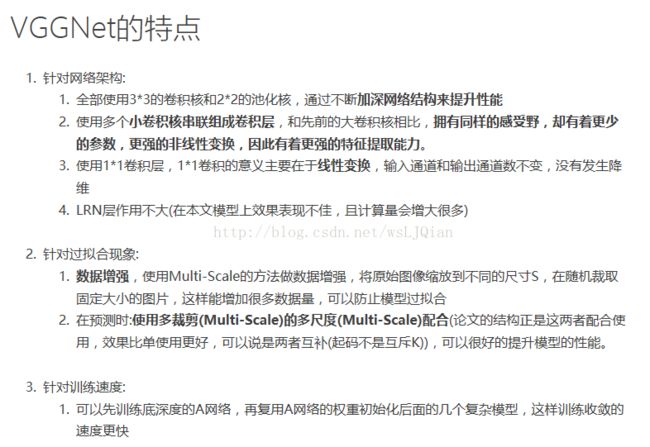

VGGNet是牛津大学计算机视觉组(Visual Geometry Group)和Google Deepmind公司研究员一起研发的深度卷积神经网络。VGGNet在AlexNet的基础上探索了卷积神经网络的深度与性能之间的关系,通过反复堆叠3*3的小型卷积核和2*2的最大池化层,VGGNet构筑的16~19层卷积神经网络模型取得了很好的识别性能,同时VGGNet的拓展性很强,迁移到其他图片数据上泛化能力很好,而且VGGNet结构简洁,现在依然被用来提取图像特征。

VGG的网络结构随处可见,这里列出一个最常见的结构。

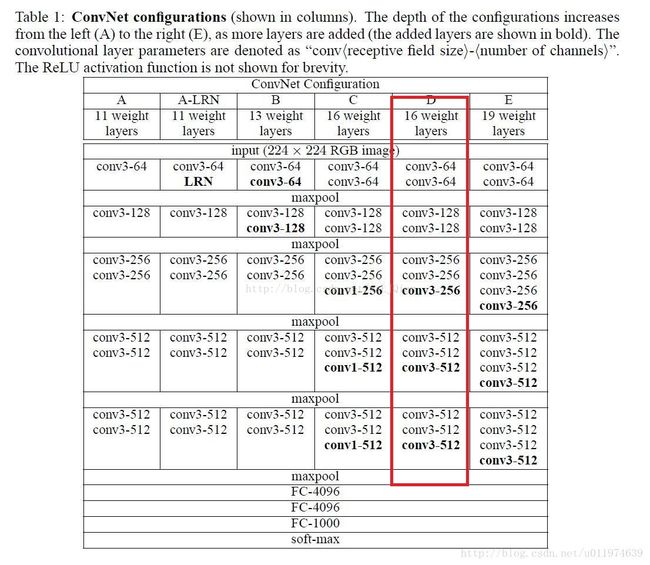

VGG根据深度的不同,列出了这么些。其中根据结构深度的不同,从左边的A(11层)到E(19层)是逐渐增加的。单个以列的方式来看,conv3-256,表示:有depth的输入数据集,其中,该层的卷积核数量是256个。(这部分是VGG区别与其他模型的一个核心部分,在代码中也是主要根据这部分来构建模型的)

这里对cifar10的数据集进行训练,采用D,16层的一个模型(13个由卷积+池化和3个全连接层组成),直接对照上面部分,就看出来结构是如何定义的了

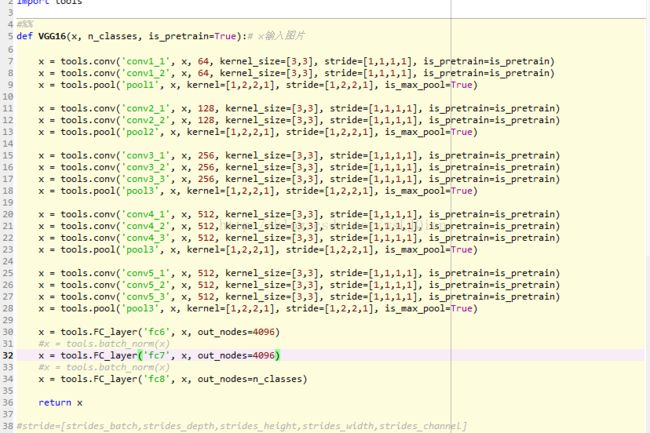

根据上面说到的,再与结构图创建的代码结构进行对照,能更好的理解。(后面给出了VGG16N是为了让整个模型在tensorboard下看起来更好看)

其中卷积部分的kernel_size=[3,3].池化部分的kernel_size=[2,2],完全符合上面提到的内容。三个FC全连接层,其中在第三个全连接层部分,输出的节点(nodes)=n_classes,也就是分类有多少个类型,这里的训练集是cifar10.所以后面给出的n_classes=10.

其实到这里,这个模型基本上是已经创建完毕了。后续的数据输入,和训练部分,[catsVSdogs]猫狗大战代码注释讲解_1大同小异。

三:代码部分

1.input_data.py

import tensorflow as tf

import numpy as np

import os

#%% Reading data

def read_cifar10(data_dir, is_train, batch_size, shuffle):

"""Read CIFAR10

Args:

data_dir: the directory of CIFAR10

is_train: boolen

batch_size:

shuffle:

Returns:

label: 1D tensor, tf.int32

image: 4D tensor, [batch_size, height, width, 3], tf.float32

"""

img_width = 32

img_height = 32

img_depth = 3

label_bytes = 1

image_bytes = img_width*img_height*img_depth

with tf.name_scope('input'):

if is_train:

filenames = [os.path.join(data_dir, 'data_batch_%d.bin' %ii)

for ii in np.arange(1, 6)]

else:

filenames = [os.path.join(data_dir, 'test_batch.bin')]

filename_queue = tf.train.string_input_producer(filenames)

reader = tf.FixedLengthRecordReader(label_bytes + image_bytes)

key, value = reader.read(filename_queue)

record_bytes = tf.decode_raw(value, tf.uint8)

label = tf.slice(record_bytes, [0], [label_bytes])

label = tf.cast(label, tf.int32)

image_raw = tf.slice(record_bytes, [label_bytes], [image_bytes])

image_raw = tf.reshape(image_raw, [img_depth, img_height, img_width])

image = tf.transpose(image_raw, (1,2,0)) # convert from D/H/W to H/W/D

image = tf.cast(image, tf.float32)

# # data argumentation

# image = tf.random_crop(image, [24, 24, 3])# randomly crop the image size to 24 x 24

# image = tf.image.random_flip_left_right(image)

# image = tf.image.random_brightness(image, max_delta=63)

# image = tf.image.random_contrast(image,lower=0.2,upper=1.8)

image = tf.image.per_image_standardization(image) #substract off the mean and divide by the variance

if shuffle:

images, label_batch = tf.train.shuffle_batch(

[image, label],

batch_size = batch_size,

num_threads= 64,

capacity = 20000,

min_after_dequeue = 3000)

else:

images, label_batch = tf.train.batch(

[image, label],

batch_size = batch_size,

num_threads = 64,

capacity= 2000)

## ONE-HOT

n_classes = 10

label_batch = tf.one_hot(label_batch, depth= n_classes)

label_batch = tf.cast(label_batch, dtype=tf.int32)

label_batch = tf.reshape(label_batch, [batch_size, n_classes])

return images, label_batch这里对tfcords的文件读取,以后还是很有借鉴价值的,留着

2.VGG.py

import tensorflow as tf

import tools

#%%

def VGG16(x, n_classes, is_pretrain=True):# x输入图片

x = tools.conv('conv1_1', x, 64, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv1_2', x, 64, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.pool('pool1', x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True)

x = tools.conv('conv2_1', x, 128, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv2_2', x, 128, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.pool('pool2', x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True)

x = tools.conv('conv3_1', x, 256, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv3_2', x, 256, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv3_3', x, 256, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.pool('pool3', x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True)

x = tools.conv('conv4_1', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv4_2', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv4_3', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.pool('pool3', x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True)

x = tools.conv('conv5_1', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv5_2', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv5_3', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.pool('pool3', x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True)

x = tools.FC_layer('fc6', x, out_nodes=4096)

#x = tools.batch_norm(x)

x = tools.FC_layer('fc7', x, out_nodes=4096)

#x = tools.batch_norm(x)

x = tools.FC_layer('fc8', x, out_nodes=n_classes)

return x

#stride=[strides_batch,strides_depth,strides_height,strides_width,strides_channel]

#%% TO get better tensorboard figures!

def VGG16N(x, n_classes, is_pretrain=True):

with tf.name_scope('VGG16'):

x = tools.conv('conv1_1', x, 64, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv1_2', x, 64, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

with tf.name_scope('pool1'):

x = tools.pool('pool1', x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True)

x = tools.conv('conv2_1', x, 128, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv2_2', x, 128, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

with tf.name_scope('pool2'):

x = tools.pool('pool2', x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True)

x = tools.conv('conv3_1', x, 256, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv3_2', x, 256, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv3_3', x, 256, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

with tf.name_scope('pool3'):

x = tools.pool('pool3', x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True)

x = tools.conv('conv4_1', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv4_2', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv4_3', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

with tf.name_scope('pool4'):

x = tools.pool('pool4', x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True)

x = tools.conv('conv5_1', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv5_2', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

x = tools.conv('conv5_3', x, 512, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=is_pretrain)

with tf.name_scope('pool5'):

x = tools.pool('pool5', x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True)

x = tools.FC_layer('fc6', x, out_nodes=4096)

#with tf.name_scope('batch_norm1'):

#x = tools.batch_norm(x)

x = tools.FC_layer('fc7', x, out_nodes=4096)

#with tf.name_scope('batch_norm2'):

#x = tools.batch_norm(x)

x = tools.FC_layer('fc8', x, out_nodes=n_classes)

return x

#%%3.tools.py

import tensorflow as tf

import numpy as np

#%%

def conv(layer_name, x, out_channels, kernel_size=[3,3], stride=[1,1,1,1], is_pretrain=True):

'''Convolution op wrapper, use RELU activation after convolution

Args:

layer_name: e.g. conv1, pool1...

x: input tensor, [batch_size, height, width, channels]

out_channels: number of output channels (or comvolutional kernels)

kernel_size: the size of convolutional kernel, VGG paper used: [3,3]

stride: A list of ints. 1-D of length 4. VGG paper used: [1, 1, 1, 1]

is_pretrain: if load pretrained parameters, freeze all conv layers.

Depending on different situations, you can just set part of conv layers to be freezed.

the parameters of freezed layers will not change when training.

Returns:

4D tensor

'''

in_channels = x.get_shape()[-1]

with tf.variable_scope(layer_name):

w = tf.get_variable(name='weights',

trainable=is_pretrain,

shape=[kernel_size[0], kernel_size[1], in_channels, out_channels],

initializer=tf.contrib.layers.xavier_initializer()) # default is uniform distribution initialization

b = tf.get_variable(name='biases',

trainable=is_pretrain,

shape=[out_channels],

initializer=tf.constant_initializer(0.0))

x = tf.nn.conv2d(x, w, stride, padding='SAME', name='conv')

x = tf.nn.bias_add(x, b, name='bias_add')

x = tf.nn.relu(x, name='relu')

return x

#%%

def pool(layer_name, x, kernel=[1,2,2,1], stride=[1,2,2,1], is_max_pool=True):

'''Pooling op

Args:

x: input tensor

kernel: pooling kernel, VGG paper used [1,2,2,1], the size of kernel is 2X2

stride: stride size, VGG paper used [1,2,2,1]

padding:

is_max_pool: boolen

if True: use max pooling

else: use avg pooling

'''

if is_max_pool:

x = tf.nn.max_pool(x, kernel, strides=stride, padding='SAME', name=layer_name)

else:

x = tf.nn.avg_pool(x, kernel, strides=stride, padding='SAME', name=layer_name)

return x

#%%

def batch_norm(x):

'''Batch normlization(I didn't include the offset and scale)

'''

epsilon = 1e-3

batch_mean, batch_var = tf.nn.moments(x, [0])

x = tf.nn.batch_normalization(x,

mean=batch_mean,

variance=batch_var,

offset=None,

scale=None,

variance_epsilon=epsilon)

return x

#%%

def FC_layer(layer_name, x, out_nodes):

'''Wrapper for fully connected layers with RELU activation as default

Args:

layer_name: e.g. 'FC1', 'FC2'

x: input feature map

out_nodes: number of neurons for current FC layer

'''

shape = x.get_shape()

if len(shape) == 4:

size = shape[1].value * shape[2].value * shape[3].value

else:

size = shape[-1].value

with tf.variable_scope(layer_name):

w = tf.get_variable('weights',

shape=[size, out_nodes],

initializer=tf.contrib.layers.xavier_initializer())

b = tf.get_variable('biases',

shape=[out_nodes],

initializer=tf.constant_initializer(0.0))

flat_x = tf.reshape(x, [-1, size]) # flatten into 1D

x = tf.nn.bias_add(tf.matmul(flat_x, w), b)

x = tf.nn.relu(x)

return x

#%%

def loss(logits, labels):

'''Compute loss

Args:

logits: logits tensor, [batch_size, n_classes]

labels: one-hot labels

'''

with tf.name_scope('loss') as scope:

cross_entropy = tf.nn.softmax_cross_entropy_with_logits(logits=logits, labels=labels,name='cross-entropy')

loss = tf.reduce_mean(cross_entropy, name='loss')

tf.summary.scalar(scope+'/loss', loss)

return loss

#%%

def accuracy(logits, labels):

"""Evaluate the quality of the logits at predicting the label.

Args:

logits: Logits tensor, float - [batch_size, NUM_CLASSES].

labels: Labels tensor,

"""

with tf.name_scope('accuracy') as scope:

correct = tf.equal(tf.arg_max(logits, 1), tf.arg_max(labels, 1))

correct = tf.cast(correct, tf.float32)

accuracy = tf.reduce_mean(correct)*100.0

tf.summary.scalar(scope+'/accuracy', accuracy)

return accuracy

#%%

def num_correct_prediction(logits, labels):

"""Evaluate the quality of the logits at predicting the label.

Return:

the number of correct predictions

"""

correct = tf.equal(tf.arg_max(logits, 1), tf.arg_max(labels, 1))

correct = tf.cast(correct, tf.int32)

n_correct = tf.reduce_sum(correct)

return n_correct

#%%

def optimize(loss, learning_rate, global_step):

'''optimization, use Gradient Descent as default

'''

with tf.name_scope('optimizer'):

optimizer = tf.train.GradientDescentOptimizer(learning_rate=learning_rate)

#optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)

train_op = optimizer.minimize(loss, global_step=global_step)

return train_op

#%%

def load(data_path, session):

data_dict = np.load(data_path, encoding='latin1').item()

keys = sorted(data_dict.keys())

for key in keys:

with tf.variable_scope(key, reuse=True):

for subkey, data in zip(('weights', 'biases'), data_dict[key]):

session.run(tf.get_variable(subkey).assign(data))

#%% 取出网络的各层的结构形式

def test_load():

data_path = 'E:\\spyder-projects\\VGG_tensorflow\\vgg16_pretrain\\vgg16.npy'

data_dict = np.load(data_path, encoding='latin1').item()

keys = sorted(data_dict.keys())

for key in keys:

weights = data_dict[key][0]

biases = data_dict[key][1]

print('\n')

print(key)

print('weights shape: ', weights.shape)

print('biases shape: ', biases.shape)

#%% 对某些层不引用,skip

def load_with_skip(data_path, session, skip_layer):

data_dict = np.load(data_path, encoding='latin1').item()

for key in data_dict:

if key not in skip_layer:

with tf.variable_scope(key, reuse=True):

for subkey, data in zip(('weights', 'biases'), data_dict[key]):

session.run(tf.get_variable(subkey).assign(data))

#%%

def print_all_variables(train_only=True):

"""Print all trainable and non-trainable variables

without tl.layers.initialize_global_variables(sess)

Parameters

----------

train_only : boolean

If True, only print the trainable variables, otherwise, print all variables.

"""

# tvar = tf.trainable_variables() if train_only else tf.all_variables()

if train_only:

t_vars = tf.trainable_variables()

print(" [*] printing trainable variables")

else:

try: # TF1.0

t_vars = tf.global_variables()

except: # TF0.12

t_vars = tf.all_variables()

print(" [*] printing global variables")

for idx, v in enumerate(t_vars):

print(" var {:3}: {:15} {}".format(idx, str(v.get_shape()), v.name))

#%%

def weight(kernel_shape, is_uniform = True):

''' weight initializer

Args:

shape: the shape of weight

is_uniform: boolen type.

if True: use uniform distribution initializer

if False: use normal distribution initizalizer

Returns:

weight tensor

'''

w = tf.get_variable(name='weights',

shape=kernel_shape,

initializer=tf.contrib.layers.xavier_initializer())

return w

#%%

def bias(bias_shape):

'''bias initializer

'''

b = tf.get_variable(name='biases',

shape=bias_shape,

initializer=tf.constant_initializer(0.0))

return b

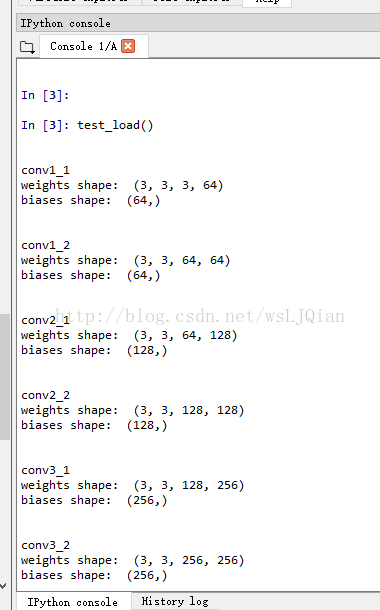

#%%本次训练,应用了别人已经训练好模型的参数,就是vgg16.npy.此时加载这个已经训练好的文件,查看一下他的内部结构形式,利用test_load()就可以查看了

也就是这部分代码

#%% 取出网络的各层的结构形式

def test_load():

data_path = 'E:\\spyder-projects\\VGG_tensorflow\\vgg16_pretrain\\vgg16.npy'

data_dict = np.load(data_path, encoding='latin1').item()

keys = sorted(data_dict.keys())

for key in keys:

weights = data_dict[key][0]

biases = data_dict[key][1]

print('\n')

print(key)

print('weights shape: ', weights.shape)

print('biases shape: ', biases.shape)

将它运行看下输出结果

会发现,他的网络结构形式,与我们自己创建的网络形式是一致的。

后面还有对网络进行修改的tools,因为迁移学习中(http://www.sohu.com/a/130511730_465975),前面几层的卷积运算都是对图像特征的提取(看了很久资料,还是没太懂,明白了再补充)

4.train_and_val.py

import os

import os.path

import numpy as np

import tensorflow as tf

import input_data

import VGG

import tools

#%%

IMG_W = 32

IMG_H = 32

N_CLASSES = 10

BATCH_SIZE = 32

learning_rate = 0.01

MAX_STEP = 10000 # it took me about one hour to complete the training.

IS_PRETRAIN = True

#%% Training

def train():

pre_trained_weights = 'E:\\spyder-projects\\VGG_tensorflow\\vgg16_pretrain\\vgg16.npy'

data_dir = 'E:\\spyder-projects\\cifar10\\data\\cifar-10-batches-bin\\'

train_log_dir = 'E:\\spyder-projects\\VGG_tensorflow\\logs\\train\\'#训练日志

val_log_dir = 'E:\\spyder-projects\\VGG_tensorflow\\logs\\val\\' #验证日志

with tf.name_scope('input'):

tra_image_batch, tra_label_batch = input_data.read_cifar10(data_dir=data_dir,

is_train=True,

batch_size= BATCH_SIZE,

shuffle=True)

val_image_batch, val_label_batch = input_data.read_cifar10(data_dir=data_dir,

is_train=False,

batch_size= BATCH_SIZE,

shuffle=False)

logits = VGG.VGG16N(tra_image_batch, N_CLASSES, IS_PRETRAIN)

loss = tools.loss(logits, tra_label_batch)

accuracy = tools.accuracy(logits, tra_label_batch)

my_global_step = tf.Variable(0, name='global_step', trainable=False)

train_op = tools.optimize(loss, learning_rate, my_global_step)

x = tf.placeholder(tf.float32, shape=[BATCH_SIZE, IMG_W, IMG_H, 3])

y_ = tf.placeholder(tf.int16, shape=[BATCH_SIZE, N_CLASSES])

saver = tf.train.Saver(tf.global_variables())

summary_op = tf.summary.merge_all()

init = tf.global_variables_initializer()

sess = tf.Session()

sess.run(init)

# load the parameter file, assign the parameters, skip the specific layers

tools.load_with_skip(pre_trained_weights, sess, ['fc6','fc7','fc8'])

coord = tf.train.Coordinator()

threads = tf.train.start_queue_runners(sess=sess, coord=coord)

tra_summary_writer = tf.summary.FileWriter(train_log_dir, sess.graph)

val_summary_writer = tf.summary.FileWriter(val_log_dir, sess.graph)

try:

for step in np.arange(MAX_STEP):

if coord.should_stop():

break

tra_images,tra_labels = sess.run([tra_image_batch, tra_label_batch])

_, tra_loss, tra_acc = sess.run([train_op, loss, accuracy],

feed_dict={x:tra_images, y_:tra_labels})

if step % 50 == 0 or (step + 1) == MAX_STEP:

print ('Step: %d, loss: %.4f, accuracy: %.4f%%' % (step, tra_loss, tra_acc))

summary_str = sess.run(summary_op)

tra_summary_writer.add_summary(summary_str, step)

if step % 200 == 0 or (step + 1) == MAX_STEP:

val_images, val_labels = sess.run([val_image_batch, val_label_batch])

val_loss, val_acc = sess.run([loss, accuracy],

feed_dict={x:val_images,y_:val_labels})

print('** Step %d, val loss = %.2f, val accuracy = %.2f%% **' %(step, val_loss, val_acc))

summary_str = sess.run(summary_op)

val_summary_writer.add_summary(summary_str, step)

if step % 2000 == 0 or (step + 1) == MAX_STEP:

checkpoint_path = os.path.join(train_log_dir, 'model.ckpt')

saver.save(sess, checkpoint_path, global_step=step)

except tf.errors.OutOfRangeError:

print('Done training -- epoch limit reached')

finally:

coord.request_stop()

coord.join(threads)

sess.close()

#%% Test the accuracy on test dataset. got about 85.69% accuracy.

import math

def evaluate():

with tf.Graph().as_default():

# log_dir = 'C://Users//kevin//Documents//tensorflow//VGG//logsvgg//train//'

log_dir = 'E:\\spyder-projects\\VGG_tensorflow\\logs\\train\\'#训练日志

test_dir = 'E:\\spyder-projects\\cifar10\\data\\cifar-10-batches-bin\\'

n_test = 10000

images, labels = input_data.read_cifar10(data_dir=test_dir,

is_train=False,

batch_size= BATCH_SIZE,

shuffle=False)

logits = VGG.VGG16N(images, N_CLASSES, IS_PRETRAIN)

correct = tools.num_correct_prediction(logits, labels)

saver = tf.train.Saver(tf.global_variables())

with tf.Session() as sess:

print("Reading checkpoints...")

ckpt = tf.train.get_checkpoint_state(log_dir)

if ckpt and ckpt.model_checkpoint_path:

global_step = ckpt.model_checkpoint_path.split('/')[-1].split('-')[-1]

saver.restore(sess, ckpt.model_checkpoint_path)

print('Loading success, global_step is %s' % global_step)

else:

print('No checkpoint file found')

return

coord = tf.train.Coordinator()

threads = tf.train.start_queue_runners(sess = sess, coord = coord)

try:

print('\nEvaluating......')

num_step = int(math.floor(n_test / BATCH_SIZE))

num_sample = num_step*BATCH_SIZE

step = 0

total_correct = 0

while step < num_step and not coord.should_stop():

batch_correct = sess.run(correct)

total_correct += np.sum(batch_correct)

step += 1

print('Total testing samples: %d' %num_sample)

print('Total correct predictions: %d' %total_correct)

print('Average accuracy: %.2f%%' %(100*total_correct/num_sample))

except Exception as e:

coord.request_stop(e)

finally:

coord.request_stop()

coord.join(threads)

#%%

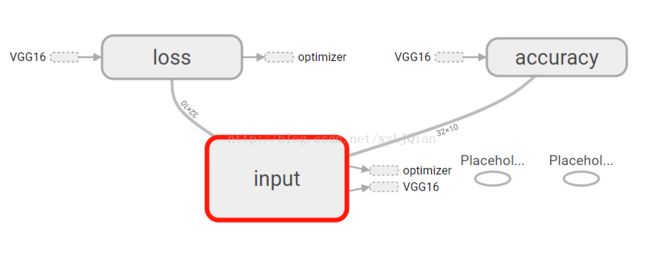

上面代码中有部分是为了画tensorboard而操作的语句,参考http://blog.csdn.net/u010099080/article/details/77426577

对于整个数据流,在tensorboard给出了明确的一个数据流向过程。如图

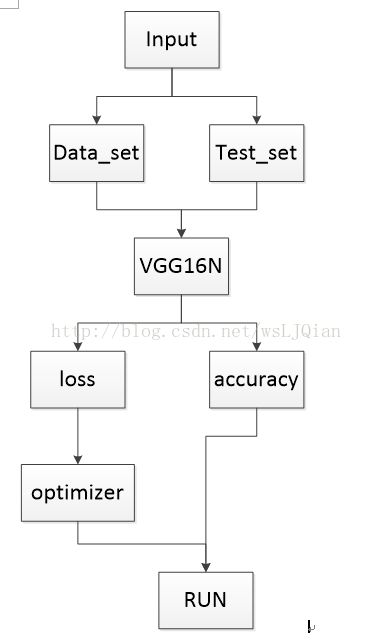

这是tensorboard 给出的一个整体数据模型,我自己看了下,又自己列出了一个细致的数据流向图

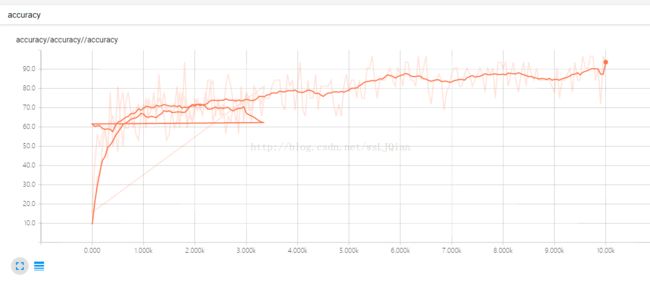

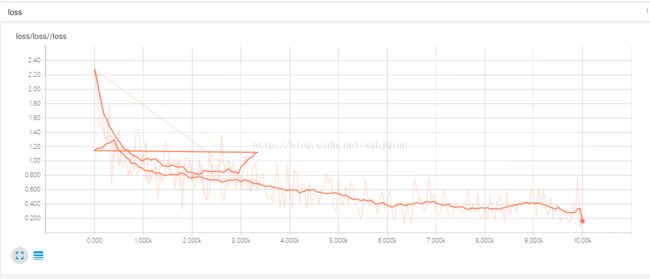

看下SCALARS绘制出来的图标

有点怪,是不是,其实是因为以前训练过一次,到3K多点就强制停止了训练,再次训练,保存了两个文件,迭代3k时又重新迭代,之前的记录没有清除。然后就成这样子了

生成时候出现这种提醒

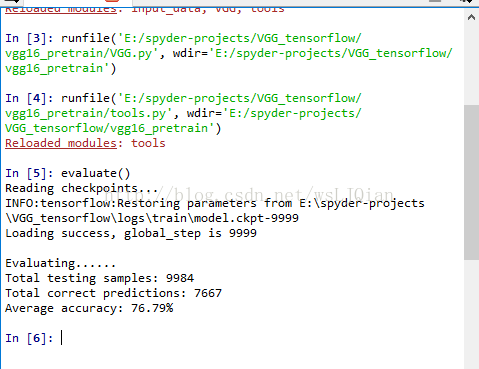

最后是测试准确率

想get更多有趣知识?请加微信公众号“小白算法”,谢谢

看到很多人要vgg16.npy文件,这里 放百度云地址:链接:https://pan.baidu.com/s/10oZ8r0ZRnfYUXYtytTlIeg

提取码:acsc 。也可关注:小白CV,回复关键字“vgg16.npy”获取