Garbage classification, EfficientNet, data augmentation (ImageDataGenerator), Mixup training, Random Erasing, label smoothing regularization, tf.keras.Sequence

日萌社




Dataset download: garbage_classify_data.zip

Link: https://pan.baidu.com/s/13OtaUv6j4x8dD7cgD4sL5g

Extraction code: 7tze

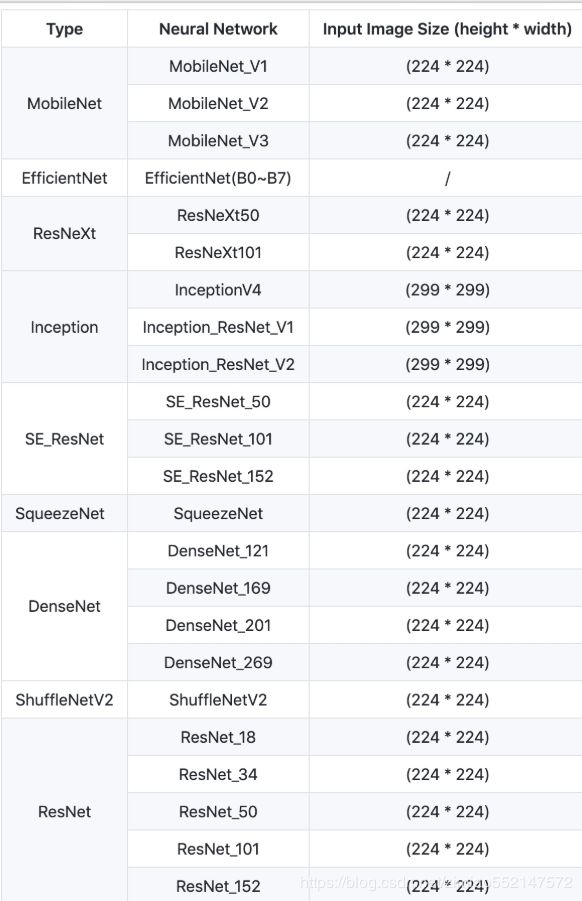

Input shape required by each EfficientNet model:

EfficientNetB0 - (224, 224, 3)

EfficientNetB1 - (240, 240, 3)

EfficientNetB2 - (260, 260, 3)

EfficientNetB3 - (300, 300, 3)

EfficientNetB4 - (380, 380, 3)

EfficientNetB5 - (456, 456, 3)

EfficientNetB6 - (528, 528, 3)

EfficientNetB7 - (600, 600, 3)

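Notes on transferring the EfficientNet model:

1. The model's source code was written for TensorFlow 1.x, not TensorFlow 2.0. To use it in a TensorFlow 2.0 environment, you need to call tf.compat.v1.disable_eager_execution() to turn off the default eager mode.

Be aware, however, that once eager mode is off, also using tf.keras.callbacks.TensorBoard raises an error (tf.keras.callbacks.ModelCheckpoint does not).

The workaround is either to drop TensorBoard in that case, or to leave eager mode on.

2. Suggested change to the return value of call() in class Swish in layers.py.

You may call tf.compat.v1.disable_eager_execution() and then get the error No registered 'swish_f32' OpKernel for GPU devices compatible with node. To fix it, change the return value of call() in class Swish in layers.py from return tf.nn.swish(inputs) to return inputs * tf.math.sigmoid(inputs).

Under the hood, tf.nn.swish(x) simply wraps x * tf.math.sigmoid(x). Once tf.nn.swish is no longer used, you can also comment out tf.compat.v1.disable_eager_execution().

Eager mode then no longer needs to be turned off, and TensorBoard works normally alongside it.

4.9 Comprehensive case study: introduction to garbage classification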

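Learning objectives

- Goals

  - Know the problems posed by garbage classification competitions

  - Know common optimizations (tricks) for image classification

  - Master common data augmentation methods for classification

    - mixup, etc.

  - Master label smoothing regularization

  - Understand common classification models and model/algorithm optimizations

- Application

  - Use TensorFlow to load and process the garbage classification data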

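4.9.1 Introduction to garbage classification

Garbage sorting was a hot social issue in 2019. On June 25, 2019, the household waste sorting system was set to be written into law, and Shanghai became China's first pilot city for garbage sorting.

Garbage sorting generally refers to the set of activities in which waste is stored, deposited, and transported separately according to given rules or standards, turning it into a public resource. The goal is to raise the resource value and economic value of waste and put everything to the best possible use.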

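1. What the garbage sorting problem requires:

Note: wet waste means kitchen waste; dry waste means other (residual) waste.

A bit of background on the categories:

- Recyclables are waste suitable for recycling and reuse. They include discarded glass, metal, plastic, paper, textiles, furniture, electrical and electronic products, and the potted flowers and kumquat trees bought for the New Year.

- Kitchen waste is perishable waste produced by households and individuals. It includes leftover food, vegetable leaves, fruit peel, eggshells, tea leaves, soup dregs, bones, discarded food, and kitchen scraps.

- Hazardous waste directly or potentially harms human health or the environment and must be handled specially. It includes used batteries and used fluorescent tubes.

- Other waste is any household waste outside the three categories above, such as diapers, dust, cigarette butts, disposable fast-food boxes, broken flowerpots and dishes, and wallpaper.

2. Why garbage sorting matters

- It reduces land use, reduces pollution, and turns waste into resources

3. What makes garbage sorting hard

- There are many categories and they are easy to confuse: chewing gum? wet wipes? melon-seed shells? plastic bags?

  - (Answers: dry, dry, wet, recyclable)

- Automatic sorting

  - People take a photo with their phone and a program identifies the garbage category automatically. This simplifies sorting for people and also improves its accuracy.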

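4.9.1.1 Garbage classification competitions

- 1. Huawei Cloud AI Competition: Garbage Classification Challenge Cup

  - Official site: https://competition.huaweicloud.com/information/1000007620/introduction

1. The 40 garbage categories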

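```json
{
  "0": "Other waste/Disposable fast-food box",
  "1": "Other waste/Soiled plastic",
  "2": "Other waste/Cigarette butt",
  "3": "Other waste/Toothpick",
  "4": "Other waste/Broken flowerpots and dishes",
  "5": "Other waste/Bamboo chopsticks",
  "6": "Kitchen waste/Leftover food",
  "7": "Kitchen waste/Large bones",
  "8": "Kitchen waste/Fruit peel",
  "9": "Kitchen waste/Fruit flesh",
  "10": "Kitchen waste/Tea leaves",
  "11": "Kitchen waste/Vegetable leaves and roots",
  "12": "Kitchen waste/Eggshells",
  "13": "Kitchen waste/Fish bones",
  "14": "Recyclables/Power bank",
  "15": "Recyclables/Bag",
  "16": "Recyclables/Cosmetics bottle",
  "17": "Recyclables/Plastic toy",
  "18": "Recyclables/Plastic bowls and basins",
  "19": "Recyclables/Plastic hanger",
  "20": "Recyclables/Express paper bag",
  "21": "Recyclables/Plugs and wires",
  "22": "Recyclables/Old clothes",
  "23": "Recyclables/Pop-top can",
  "24": "Recyclables/Pillow",
  "25": "Recyclables/Plush toy",
  "26": "Recyclables/Shampoo bottle",
  "27": "Recyclables/Glass cup",
  "28": "Recyclables/Leather shoes",
  "29": "Recyclables/Cutting board",
  "30": "Recyclables/Cardboard box",
  "31": "Recyclables/Seasoning bottle",
  "32": "Recyclables/Wine bottle",
  "33": "Recyclables/Metal food can",
  "34": "Recyclables/Pot",
  "35": "Recyclables/Cooking oil drum",
  "36": "Recyclables/Beverage bottle",
  "37": "Hazardous waste/Dry battery",
  "38": "Hazardous waste/Ointment",
  "39": "Hazardous waste/Expired medicine"
}
```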
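Each label name encodes a two-level hierarchy, "top-level category/sub-category", and the model predicts the fine-grained sub-category. Below is a minimal sketch that recovers the four top-level groups. It assumes the mapping is loaded as a Python dict; only an excerpt is inlined here, and the names LABEL_NAMES and group_by_top_level are hypothetical.

```python
from collections import defaultdict

# Excerpt of the label mapping above (class id -> "top-level category/sub-category")
LABEL_NAMES = {
    "0": "Other waste/Disposable fast-food box",
    "6": "Kitchen waste/Leftover food",
    "14": "Recyclables/Power bank",
    "36": "Recyclables/Beverage bottle",
    "37": "Hazardous waste/Dry battery",
}


def group_by_top_level(label_names):
    """Map each top-level category to the sorted list of class ids under it."""
    groups = defaultdict(list)
    for class_id, name in label_names.items():
        top_level, _sub_category = name.split("/", 1)
        groups[top_level].append(int(class_id))
    return {top: sorted(ids) for top, ids in groups.items()}


print(group_by_top_level(LABEL_NAMES))
```

A lookup like this can turn a fine-grained prediction (say, class 36) into the bin the item belongs in.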

- 2. Other competitions

  - Apache Flink Geek Challenge: garbage image classification

  - Official site: https://tianchi.aliyun.com/competition/entrance/231743/information

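4.9.2 The Huawei garbage classification competition

This competition uses 40 kinds of common household waste. Contestants train models on the published dataset and deploy the trained model to Huawei's ModelArts platform, where it makes online predictions on Huawei's private dataset. Recognition accuracy is the evaluation metric.

Many classes look extremely similar and are easy to confuse, for example beverage bottles and seasoning bottles, chopsticks and toothpicks, or fruit peel and fruit flesh. The competition can therefore also be seen as a fine-grained image classification task.

Important: a general approach to competitions and projects

- 1. Start with data analysis as soon as you have the data: look at the class distribution of samples, the distribution of image sizes, what the images look like, and so on. This analysis lets you design targeted preprocessing, which helps a great deal in later training.

- 2. Choose a good baseline. Keep trying existing network architectures, compare the results, and pick the architecture that suits the task. Then keep tuning that model to find well-performing hyperparameters.

- 3. Validate the results: evaluate the model on the validation set, find the misclassified samples, analyze why they fail, and adjust the network and data accordingly.

- 4. Tune the model again on the new data and model.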

Results of the top 5 teams

| Rank | Accuracy | Inference time (ms) |
|---|---|---|
| 1st | 0.969636 | 102.8 |
| 2nd | 0.96251 | 95.43 |
| 3rd | 0.962045 | 97.25 |
| 4th | 0.961735 | 82.99 |
| 5th | 0.957397 | 108.49 |

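4.9.2.1 Problem analysis

1. Problem description:

This is a classic image classification problem. Following the Shenzhen garbage sorting standard, the model outputs the item's second-level category within recyclables, kitchen waste, hazardous waste, and other waste. There are 43 categories in total in the extended V2 data; the first version, used in this project, has 40.

2. Evaluation metric:

Accuracy = number of correctly recognized images / total number of images

3. Challenges:

- The official training set has only 19,459 images, which is a small amount of data;

- There are many classes (40), and the classes are imbalanced;

- Image sizes and resolutions vary, and the objects appear at many scales;

- Garbage classification combines fine-grained and coarse-grained distinctions, so contours, textures, and the spatial distribution of objects all matter.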

4.9.2.2 Strategy

- 1. Dataset analysis and selection

- 2. Model selection

- 3. Common tricks (optimizations) for image classification

1. The dataset

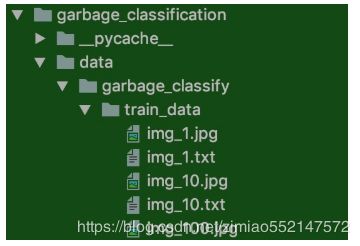

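Dataset download and structure

- The data is in a train_data directory, which holds the images alongside a matching .txt file for each image

- Each txt file has the format img_1.jpg, 0, i.e. the image file name followed by its target label

- Note: there is also an official V2 version of the data that extends the classes to 43 (our project trains on the first version)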

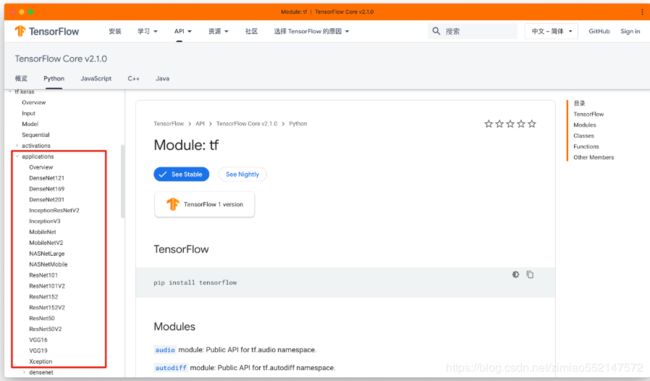

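2. Choosing a classification model

The data is small, there are many classes, and inference must be fast. Weighing computation, model size, and accuracy together, we use recently released high-quality pretrained models (EfficientNet B5/B4) for transfer learning.

(1) Existing models and their accuracy

Top-1 and Top-5 accuracy are both measured on the ImageNet validation set.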

| Architecture | @top1* | @top5* | Weights |
|---|---|---|---|
| EfficientNetB0 | 0.7668 | 0.9312 | + |
| EfficientNetB1 | 0.7863 | 0.9418 | + |
| EfficientNetB2 | 0.7968 | 0.9475 | + |
| EfficientNetB3 | 0.8083 | 0.9531 | + |

TensorFlow models pretrained on ImageNet for image classification (see the TF API documentation):

Input sizes:

EfficientNetB0 - (224, 224, 3)

EfficientNetB1 - (240, 240, 3)

EfficientNetB2 - (260, 260, 3)

EfficientNetB3 - (300, 300, 3)

EfficientNetB4 - (380, 380, 3)

EfficientNetB5 - (456, 456, 3)

EfficientNetB6 - (528, 528, 3)

EfficientNetB7 - (600, 600, 3)
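When you switch variants, the training script's input_size argument has to change with them; its default of 300 matches EfficientNetB3. Below is a minimal lookup sketch of the table above. The dict and function names are hypothetical.

```python
# Default input resolution of each EfficientNet variant, as listed above
EFFICIENTNET_INPUT_SIZE = {
    "B0": 224, "B1": 240, "B2": 260, "B3": 300,
    "B4": 380, "B5": 456, "B6": 528, "B7": 600,
}


def input_shape(variant):
    """Return the (height, width, channels) shape expected by an EfficientNet variant."""
    size = EFFICIENTNET_INPUT_SIZE[variant.upper()]
    return (size, size, 3)


print(input_shape("b3"))  # (300, 300, 3)
```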

Note: the models are introduced in detail when the project reaches the model section.

3、 图像分类问题常见trick(优化)

(1)数据方面

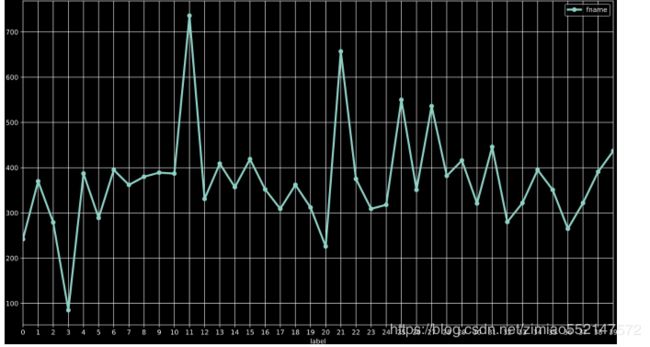

- 分析:下图横坐标是类标号,纵坐标是每个类的样本数量。

- 很容易看出,每个类之间的样本量差异很大,如果不做类均衡处理,很有可能会导致样本量多的类过拟合,样本量少的类欠拟合。

- 常见的类均衡方法有:多数类欠采样,少数类过采样;数据增强;标签平滑;

- 图片长宽比有一定的差异性,长宽比大多数集中于1,因此也适合一些模型输入尺寸设为1:1

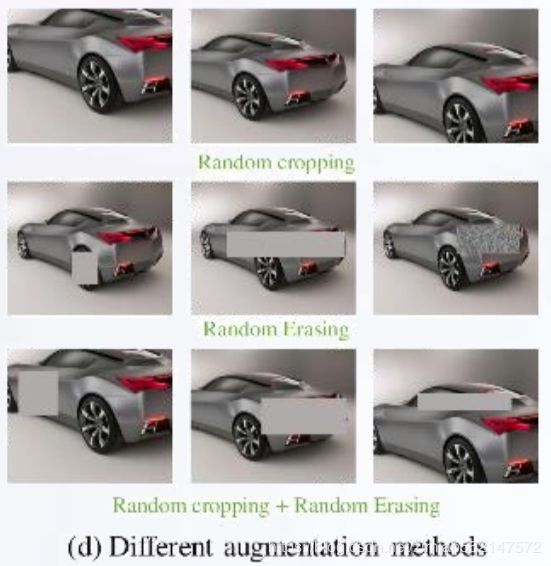
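Random oversampling of minority classes can be sketched in plain Python. This is an illustrative helper, not part of the project code, and the name oversample is hypothetical. It duplicates randomly chosen samples of each smaller class until every class matches the largest one.

```python
import random
from collections import defaultdict


def oversample(img_paths, labels, seed=0):
    """Random oversampling: duplicate random samples of each minority class
    until every class has as many samples as the largest class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for path, label in zip(img_paths, labels):
        by_class[label].append(path)
    target = max(len(paths) for paths in by_class.values())

    out_paths, out_labels = [], []
    for label, paths in by_class.items():
        extra = [rng.choice(paths) for _ in range(target - len(paths))]
        for path in paths + extra:
            out_paths.append(path)
            out_labels.append(label)
    return out_paths, out_labels


paths, labels = oversample(["a.jpg", "b.jpg", "c.jpg"], [0, 0, 1])
print(sorted(labels))  # [0, 0, 1, 1]
```

Apply it to the training split only, after train_test_split, so duplicated images never leak into the validation set.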

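- 1. Data augmentation

  - (1) Not every augmentation helps. The object must remain recognizable by eye after augmentation, and the image's class must not change.

  - (2) Too much augmentation also lengthens training. Flips usually work well, for example random horizontal flips, random vertical flips, rotation by 90°, 180°, or 270° with some probability, and random crops of 0-10%.

  - Other options:

    - Color Jittering: vary image brightness, saturation, and contrast;

    - Random Scale: scale transformation;

    - Random Crop: crop and rescale the image with random interpolation;

    - Horizontal/Vertical Flip: horizontal/vertical flipping;

    - Shift: translation;

    - Rotation/Reflection: rotation/affine transformation;

    - Noise: Gaussian noise, blurring;

- 2. External data: the competition allows external data, so you could collect more training data by taking photos yourself or crawling the web, but this takes a lot of time and effort.

  Self-collected data is usually of lower quality and follows a different distribution from Huawei's dataset. Some teams crawled extra data and got worse results, even lower scores, and abandoned the approach. Building a good data distribution from the start therefore matters.

  Competitions and real projects differ here. In a real project, the more of the real data distribution you cover the better. In the competition, Huawei's public data and test data likely share the same distribution, so you cannot bring in much external data (it easily leads to overfitting).

- 3. Data normalization

- 4. Label smoothing

- 5. mixup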
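The flips, 90° rotations, and small random crops listed above can be sketched with NumPy alone. The function names here are hypothetical; the project itself relies on ImageDataGenerator for flips and shifts.

```python
import numpy as np


def random_flip_rotate(img, rng):
    """Random horizontal/vertical flip plus rotation by 0, 90, 180 or 270 degrees.
    img is an H x W x C array; none of these transforms changes the object's class."""
    if rng.random() < 0.5:
        img = img[:, ::-1]                      # horizontal flip (mirror left-right)
    if rng.random() < 0.5:
        img = img[::-1, :]                      # vertical flip (upside down)
    k = int(rng.integers(0, 4))                 # number of 90-degree turns
    return np.rot90(img, k=k, axes=(0, 1))


def random_crop(img, rng, max_frac=0.1):
    """Crop away a random 0-10% (by default) of the height and of the width."""
    h, w = img.shape[:2]
    dh = int(h * rng.uniform(0, max_frac))      # rows to remove
    dw = int(w * rng.uniform(0, max_frac))      # columns to remove
    top = int(rng.integers(0, dh + 1))
    left = int(rng.integers(0, dw + 1))
    return img[top:top + h - dh, left:left + w - dw]


rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(100, 120, 3), dtype=np.uint8)
out = random_crop(random_flip_rotate(img, rng), rng)
print(out.shape)
```

Flips and 90° rotations only rearrange pixels, so they cannot produce an unrecognizable image. A crop of at most 10% keeps the object in frame for typical centered photos.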

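(2) Getting the most out of pretrained models:

- 1. Run comparison experiments with several models

- 2. Choose an improved Adam optimizer: Adam with warmup

- 3. Use a custom learning-rate schedule: cosine annealing

  - A schedule with built-in warmup is robust to the number of iterations: as long as enough iterations are run, the final results are close. This greatly reduces tuning complexity and shortens the experiment cycle.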
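The project's WarmUpCosineDecayScheduler (in utils) is not listed in this section, so the following is only a sketch of a common form of warmup plus cosine decay, computed per global step. The parameter names mirror the scheduler's constructor arguments used in the training script, but the body is an assumption, not the project's code.

The learning rate climbs linearly to learning_rate_base over warmup_steps and optionally stays there for hold_base_rate_steps. It then follows half a cosine down to 0 at total_steps.

```python
import math


def warmup_cosine_lr(step, learning_rate_base, total_steps,
                     warmup_learning_rate=0.0, warmup_steps=0,
                     hold_base_rate_steps=0):
    """Learning rate at a global step: linear warmup, optional hold, then cosine decay to 0."""
    if step < warmup_steps:
        # Linear ramp from warmup_learning_rate up to learning_rate_base
        slope = (learning_rate_base - warmup_learning_rate) / warmup_steps
        return warmup_learning_rate + slope * step
    if step < warmup_steps + hold_base_rate_steps:
        # Hold the base rate for a while before decaying
        return learning_rate_base
    decay_steps = total_steps - warmup_steps - hold_base_rate_steps
    progress = min((step - warmup_steps - hold_base_rate_steps) / decay_steps, 1.0)
    # Half a cosine period: 1 -> 0 as progress goes 0 -> 1
    return 0.5 * learning_rate_base * (1 + math.cos(math.pi * progress))


for s in (0, 50, 100, 550, 1000):
    print(s, warmup_cosine_lr(s, 1e-4, 1000, warmup_steps=100))
```

In a Keras callback, a function like this would typically be evaluated at the start of each batch and the result written to the optimizer's learning rate.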

4.9.3 项目构建(模块分析)

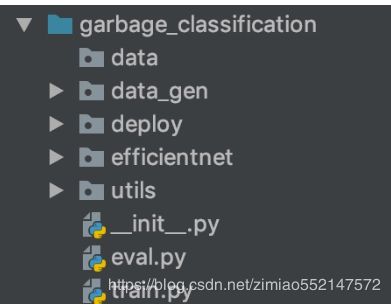

4.9.3.1 项目模块图

- data:存放数据的目录

- data_gen目录:批次数据预处理代码,包括数据增强、标签平滑、mixup功能

- deploy:模型导出以及部署模块

- efficientnet:efficientnet模型源码存放位置

- utils:封装的工具类,如warmup以及余弦退火学习率

- train:训练网络部分包括数据流获取、网络构建、优化器

项目运行过程数据记录以及最终效果

- 在16核、内存16G的机器上,只用CPU计算1 epoch耗时1.83小时(1小时50分钟)

Epoch 1/30

76/787 [=>............................] - ETA: 1:49:16 - loss: 3.7690 - accuracy: 0.0189

303/787 [==========>...................] - ETA: 1:07:05 - loss: 3.7383 - accuracy: 0.025

468/787 [================>.............] - ETA: 43:30 - loss: 3.7253 - accuracy: 0.0262

470/787 [================>.............] - ETA: 43:13 - loss: 3.7251 - accuracy: 0.0261

499/787 [==================>...........] - ETA: 39:12 - loss: 3.7232 - accuracy: 0.0270

576/787 [====================>.........] - ETA: 28:35 - loss: 3.7188 - accuracy: 0.0290

577/787 [====================>.........] - ETA: 28:26 - loss: 3.7187 - accuracy: 0.0290

602/787 [=====================>........] - ETA: 25:01 - loss: 3.7170 - accuracy: 0.0295

612/787 [======================>.......] - ETA: 23:39 - loss: 3.7163 - accuracy: 0.0298

...

786/787 [============================>.] - ETA: 8s - loss: 3.7085 - accuracy: 0.0319

Initial training code: the training flow

Below is a brief walkthrough of the most important part of the project, the training code.

```python
import argparse
import multiprocessing
import os

# Must be set before TensorFlow is imported to silence its INFO/WARNING logs
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

import tensorflow as tf
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam

from efficientnet import model as EfficientNet
from efficientnet import preprocess_input
from data_gen import data_flow
from utils.warmup_cosine_decay_scheduler import WarmUpCosineDecayScheduler

# The EfficientNet source targets TF 1.x. Disabling eager mode is only needed while Swish
# calls tf.nn.swish, and it breaks the TensorBoard callback below; with the
# inputs * tf.math.sigmoid(inputs) version of Swish it can stay commented out.
# tf.compat.v1.disable_eager_execution()

parser = argparse.ArgumentParser()
parser.add_argument("data_url", type=str, default='./data/garbage_classify/train_data', help="data dir", nargs='?')
parser.add_argument("train_url", type=str, default='./garbage_ckpt/', help="save model dir", nargs='?')
parser.add_argument("num_classes", type=int, default=40, help="num_classes", nargs='?')
parser.add_argument("input_size", type=int, default=300, help="input_size", nargs='?')
parser.add_argument("batch_size", type=int, default=16, help="batch_size", nargs='?')
parser.add_argument("learning_rate", type=float, default=0.0001, help="learning_rate", nargs='?')
parser.add_argument("max_epochs", type=int, default=30, help="max_epochs", nargs='?')
parser.add_argument("deploy_script_path", type=str, default='', help="deploy_script_path", nargs='?')
parser.add_argument("test_data_url", type=str, default='', help="test_data_url", nargs='?')


def model_fn(param):
    """Build the transfer-learning model: EfficientNetB3 backbone plus a new classifier head.

    :param param: parsed command-line arguments
    :return: the Keras model
    """
    base_model = EfficientNet.EfficientNetB3(include_top=False,
                                             input_shape=(param.input_size, param.input_size, 3),
                                             classes=param.num_classes)
    x = base_model.output
    x = GlobalAveragePooling2D(name='avg_pool')(x)
    predictions = Dense(param.num_classes, activation='softmax')(x)
    model = Model(inputs=base_model.input, outputs=predictions)
    return model


def train_model(param):
    """Train the model.

    :param param: parsed command-line arguments
    """
    # 1. Build the Sequence objects that read the data
    train_sequence, validation_sequence = data_flow(param.data_url, param.batch_size,
                                                    param.num_classes, param.input_size, preprocess_input)

    # 2. Build the model and set the training configuration (optimizer, loss, metrics)
    model = model_fn(param)
    optimizer = Adam(learning_rate=param.learning_rate)
    objective = 'categorical_crossentropy'
    metrics = ['accuracy']
    model.compile(loss=objective, optimizer=optimizer, metrics=metrics)
    model.summary()

    # Resume from the latest saved checkpoint, if there is one
    if os.path.isdir(param.train_url):
        ckpts = sorted(f for f in os.listdir(param.train_url) if f.endswith('.h5'))
        if ckpts:
            model.load_weights(os.path.join(param.train_url, ckpts[-1]))
            print("Checkpoint loaded!")
    os.makedirs(param.train_url, exist_ok=True)

    # 3. Set up the training callbacks and train
    # (1) TensorBoard
    tensorboard = tf.keras.callbacks.TensorBoard(log_dir='./graph', histogram_freq=1,
                                                 write_graph=True, write_images=True)

    # (2) Custom warmup + cosine learning-rate decay
    sample_count = len(train_sequence) * param.batch_size
    epochs = param.max_epochs
    warmup_epoch = 5
    batch_size = param.batch_size
    learning_rate_base = param.learning_rate
    total_steps = int(epochs * sample_count / batch_size)
    warmup_steps = int(warmup_epoch * sample_count / batch_size)
    warm_up_lr = WarmUpCosineDecayScheduler(learning_rate_base=learning_rate_base,
                                            total_steps=total_steps,
                                            warmup_learning_rate=0,
                                            warmup_steps=warmup_steps,
                                            hold_base_rate_steps=0,
                                            )

    # (3) Checkpointing: keep the best model by validation accuracy
    # (TF 2.x logs the metric as 'val_accuracy', not 'val_acc')
    check = tf.keras.callbacks.ModelCheckpoint(
        os.path.join(param.train_url, 'weights_{epoch:02d}-{val_accuracy:.2f}.h5'),
        monitor='val_accuracy',
        save_best_only=True,
        save_weights_only=False,
        mode='auto',
        save_freq='epoch')

    # (4) Training (fit accepts a Sequence directly; fit_generator is deprecated)
    model.fit(
        train_sequence,
        steps_per_epoch=len(train_sequence),
        epochs=param.max_epochs,
        verbose=1,
        callbacks=[check, tensorboard, warm_up_lr],
        validation_data=validation_sequence,
        max_queue_size=10,
        workers=max(1, int(multiprocessing.cpu_count() * 0.7)),
        use_multiprocessing=True,
        shuffle=True,
    )
    print('Training finished!')


if __name__ == '__main__':
    train_model(parser.parse_args())
```

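4.9.3.2 Steps and the techniques applied

- 1. Data loading and preprocessing module

  - Data loading

  - Data augmentation

  - Normalization

  - Random erasing

  - Mixup

- 2. Network implementation

  - Introduction to the EfficientNet model

  - Adapting the model for garbage classification

  - Learning-rate optimization: warmup and cosine annealing

  - Optimizer: Adam improvements RAdam/NRdam

- 3. Training, saving, and prediction

  - Implementing the complete training process

  - Implementing the prediction flow

- 4. Model export and deployment

  - Using the tf.saved_model module

  - Using TensorFlow Serving

These are the techniques to master for the garbage classification project, and they are the key to implementing its training.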

4.9.4 数据读取与预处理

4.9.4.1 项目预处理模代码流程介绍

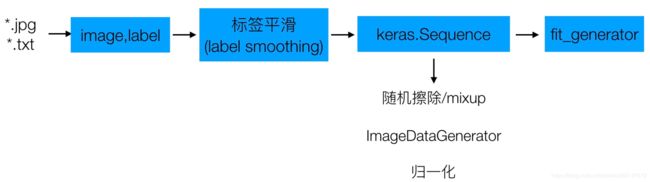

代码流程介绍

- 1、本地数据的读取,进行图片路径读取,标签读取,对标签进行平滑处理

- 2、tf.keras.Sequence类封装,返回序列数据

完整流程:

```python
def data_from_sequence(train_data_dir, batch_size, num_classes, input_size):
    """Read local images and labels and wrap them as Sequence data.

    :param train_data_dir: training data directory
    :param batch_size: batch size
    :param num_classes: total number of garbage classes
    :param input_size: image size fed to the model, e.g. 300 for (300, 300)
    :return: (train_sequence, validation_sequence)
    """
    # 1. Collect the txt label files and shuffle them once
    label_files = [os.path.join(train_data_dir, filename)
                   for filename in os.listdir(train_data_dir) if filename.endswith('.txt')]
    random.shuffle(label_files)

    # 2. Parse each txt file into an image path and a label
    img_paths = []
    labels = []
    for file_path in label_files:
        with open(file_path, 'r') as f:
            line = f.readline()
        line_split = line.strip().split(', ')
        if len(line_split) != 2:
            print('%s: malformed file' % file_path)
            continue
        # Image file name and integer label
        img_name = line_split[0]
        label = int(line_split[1])
        # Build the full image path; img_paths and labels stay index-aligned
        img_paths.append(os.path.join(train_data_dir, img_name))
        labels.append(label)

    # 3. One-hot encode the labels and apply label smoothing
    labels = to_categorical(labels, num_classes)
    labels = smooth_labels(labels)

    # 4. Split all data into training and validation sets
    train_img_paths, validation_img_paths, train_labels, validation_labels = \
        train_test_split(img_paths, labels, test_size=0.15, random_state=0)
    print('Total samples: %d, training samples: %d, validation samples: %d' % (
        len(img_paths), len(train_img_paths), len(validation_img_paths)))

    # 5. Build the Sequence objects (augmentation only for the training set)
    train_sequence = GarbageDataSequence(train_img_paths, train_labels, batch_size,
                                         [input_size, input_size], use_aug=True)
    validation_sequence = GarbageDataSequence(validation_img_paths, validation_labels, batch_size,
                                              [input_size, input_size], use_aug=False)
    return train_sequence, validation_sequence
```

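1. Read the local data: read the image paths and labels, and apply label smoothing to the labels

Create a data_gen directory, add a new file processing_data.py, and implement the following main data-processing function, which model training will call: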

```python
import math
import os
import random

import numpy as np
from PIL import Image
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.utils import to_categorical, Sequence
from sklearn.model_selection import train_test_split

from data_gen.random_eraser import get_random_eraser


def data_from_sequence(train_data_dir, batch_size, num_classes, input_size):
    """Read local images and labels and wrap them as Sequence data.

    :param train_data_dir: training data directory
    :param batch_size: batch size
    :param num_classes: total number of garbage classes
    :param input_size: image size fed to the model, e.g. 300 for (300, 300)
    :return:
    """
    # 1. Collect the txt label files and shuffle them once
    label_files = [os.path.join(train_data_dir, filename)
                   for filename in os.listdir(train_data_dir) if filename.endswith('.txt')]
    random.shuffle(label_files)

    # 2. Parse each txt file into an image path and a label
    img_paths = []
    labels = []
    for file_path in label_files:
        with open(file_path, 'r') as f:
            line = f.readline()
        line_split = line.strip().split(', ')
        if len(line_split) != 2:
            print('%s: malformed file' % file_path)
            continue
        # Image file name and integer label
        img_name = line_split[0]
        label = int(line_split[1])
        # Build the full image path; img_paths and labels stay index-aligned
        img_paths.append(os.path.join(train_data_dir, img_name))
        labels.append(label)

    # 3. One-hot encode the labels and apply label smoothing
    labels = to_categorical(labels, num_classes)
    labels = smooth_labels(labels)

    # Steps 4 and 5 (train/validation split, Sequence construction) are in the complete flow
    return None
```

(1) Data loading, class conversion, and label smoothing

How to use to_categorical:

```
In [5]: tf.keras.utils.to_categorical([1,2,3,4,5], num_classes=10)
Out[5]:
array([[0., 1., 0., 0., 0., 0., 0., 0., 0., 0.],
       [0., 0., 1., 0., 0., 0., 0., 0., 0., 0.],
       [0., 0., 0., 1., 0., 0., 0., 0., 0., 0.],
       [0., 0., 0., 0., 1., 0., 0., 0., 0., 0.],
       [0., 0., 0., 0., 0., 1., 0., 0., 0., 0.]], dtype=float32)
```
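For integer labels, you can build the same one-hot matrix with plain NumPy by indexing rows of an identity matrix. The sketch below reproduces the output above without TensorFlow; the helper name one_hot is hypothetical.

```python
import numpy as np


def one_hot(labels, num_classes):
    """One-hot encode integer class labels: row i has a 1 at column labels[i]."""
    return np.eye(num_classes, dtype=np.float32)[np.asarray(labels)]


print(one_hot([1, 2, 3, 4, 5], num_classes=10))
```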

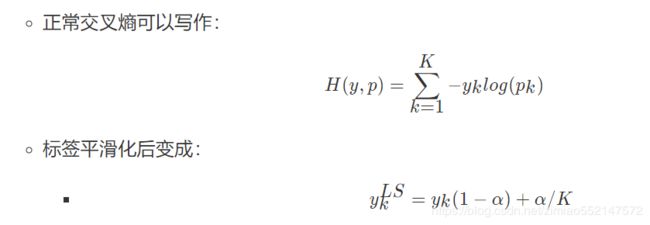

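1. Label smoothing: Label Smoothing Regularization

Label smoothing is a technique for suppressing overfitting.

- Idea: during training, assume that the labels may contain errors and avoid trusting the training labels "too much". In other words, the model is told that a sample's label is not necessarily correct, which makes the trained model more robust to a small number of wrong labels.

- Process: at each iteration, (x_i, y_i) is not put straight into training. Instead, set an error rate ε, then train on (x_i, y_i) with probability 1-ε and on (x_i, 1-y_i) with probability ε.

- Example: in a binary cat/dog task, a label smoothing of 0.1 turns the target into 0.95 (95% sure) that the image is a dog and 0.05 (5% sure) that it is a cat, instead of the hard targets of 1 and 0. Because the target is less certain, it acts as a form of regularization and improves the model's predictions on new data.

- How it works: label smoothing modifies the original hard label, such as [0, 1]. With a label smoothing value of 0.1 and 2 classes: [0, 1] × (1 - 0.1) + 0.1/2 = [0.05, 0.95]. In general, for K classes and smoothing value ε, the smoothed label is y × (1 - ε) + ε/K, which is what smooth_labels below computes.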

Explanation of the principle:

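- Without label smoothing, the loss only considers the position of the correct label and ignores the other positions. The model therefore focuses on increasing the predicted probability of the correct label, not on decreasing the probabilities of the wrong labels. The result is a model that fits its own training set very well but performs poorly on other test sets, i.e. it overfits.

- With smoothed labels, the cross-entropy loss considers not only the correct label position (where the one-hot label is 1) but also, slightly, the wrong label positions (where the one-hot label is 0). This raises the loss, so to bring it back down the model has to learn better. It is pushed both to increase the probability of the correct class and to decrease the probabilities of the wrong classes.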
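The smoothed target and the resulting cross-entropy can be written out for K classes, smoothing value ε, true class y, and predicted distribution p. This follows the formulation in the Rethinking the Inception Architecture paper, with u the uniform distribution u(k) = 1/K.

$$
q'(k) = (1-\varepsilon)\,\delta_{k,y} + \frac{\varepsilon}{K}
$$

$$
H(q', p) = -\sum_{k=1}^{K} q'(k)\log p(k) = (1-\varepsilon)\,H(q, p) + \varepsilon\,H(u, p)
$$

The second term, ε H(u, p), penalizes predicted distributions that put almost zero probability on the wrong classes.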

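From the paper: Rethinking the Inception Architecture for Computer Vision

Note: the models in the first two columns of the figure do not use label smoothing; the last two columns do.

- Observation: label smoothing makes the clusters formed by the penultimate layer's activations tighter, and it improves final accuracy.

- Typical uses: image classification, machine translation, and speech recognition.

Code implementation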

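```python
import numpy as np


def smooth_labels(y, smooth_factor=0.1):
    """Label smoothing: y * (1 - smooth_factor) + smooth_factor / num_classes.

    :param y: one-hot labels, shape (num_samples, num_classes)
    :param smooth_factor: smoothing value epsilon in [0, 1]
    :return: smoothed labels as a new float array (y itself is not modified)
    """
    y = np.asarray(y, dtype=np.float32)
    assert y.ndim == 2
    if not 0 <= smooth_factor <= 1:
        raise ValueError('Invalid label smoothing factor: ' + str(smooth_factor))
    return y * (1 - smooth_factor) + smooth_factor / y.shape[1]
```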
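To see the effect numerically, the sketch below compares cross-entropy against a hard one-hot target and against the smoothed target for 40 classes, as the prediction for the true class becomes more confident. The helper names are hypothetical. The hard-label loss keeps shrinking toward 0. The smoothed loss is lowest when the prediction equals the smoothed target (0.9025 for the true class) and grows beyond that, so pushing the logits to extremes is no longer rewarded.

```python
import numpy as np


def smooth(y, eps=0.1):
    """Same formula as smooth_labels: y * (1 - eps) + eps / K (returns a new array)."""
    return y * (1 - eps) + eps / y.shape[1]


def cross_entropy(targets, probs):
    """Mean categorical cross-entropy between target distributions and predicted probabilities."""
    return float(np.mean(-np.sum(targets * np.log(probs), axis=1)))


def prediction(p_correct, true_class, num_classes):
    """A prediction that gives p_correct to the true class and splits the rest evenly."""
    probs = np.full((1, num_classes), (1 - p_correct) / (num_classes - 1))
    probs[0, true_class] = p_correct
    return probs


K = 40
hard = np.eye(K)[[3]]        # one-hot target for class 3
soft = smooth(hard)          # 0.9025 for class 3, 0.0025 for every other class

for p in (0.9025, 0.99, 0.9999):
    probs = prediction(p, 3, K)
    print(p, round(cross_entropy(hard, probs), 4), round(cross_entropy(soft, probs), 4))
```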

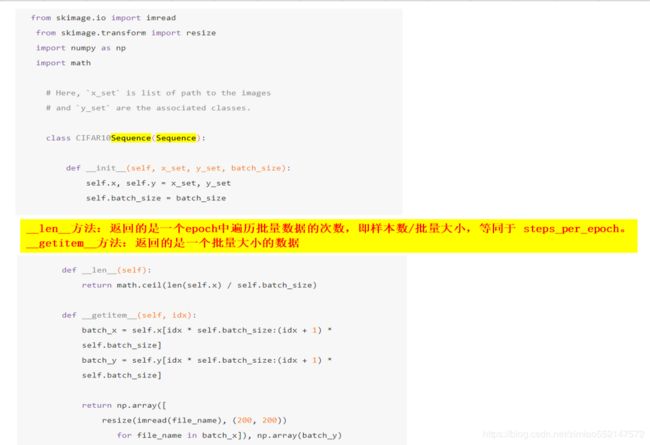

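2. Wrapping the data in a tf.keras.utils.Sequence class that returns batches

First, here is how Sequence relates to other tools. We have already used tf.data and the ImageDataGenerator in tf.keras.preprocessing.image. The former lets you build custom batches and so on; the latter provides built-in augmentation and iterates over batches.

Many tasks, however, need more custom preprocessing, such as label smoothing and random erasing, which no single API covers completely. So we now introduce Sequence, which gives you more freedom, including the ability to modify the dataset between epochs.

tf.keras.utils.Sequence

- 1. Requirements: every Sequence must implement the __getitem__ and __len__ methods. If you want to modify the dataset between epochs, you can implement on_epoch_end. __getitem__ should return a complete batch. You can also define other methods of your own.

- 2. Characteristics: Sequence is a safer way to do multiprocessing. This structure guarantees that the network trains only once on each sample per epoch, which is not the case with generators.

Example from the official docs: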

```python
import math

import numpy as np
from skimage.io import imread
from skimage.transform import resize
from tensorflow.keras.utils import Sequence

# Here, `x_set` is a list of paths to the images
# and `y_set` are the associated classes.


class CIFAR10Sequence(Sequence):

    def __init__(self, x_set, y_set, batch_size):
        self.x, self.y = x_set, y_set
        self.batch_size = batch_size

    def __len__(self):
        # Number of batches per epoch (the last one may be partial)
        return math.ceil(len(self.x) / self.batch_size)

    def __getitem__(self, idx):
        # Slice out batch number idx, then load and resize its images
        batch_x = self.x[idx * self.batch_size:(idx + 1) * self.batch_size]
        batch_y = self.y[idx * self.batch_size:(idx + 1) * self.batch_size]
        return np.array([
            resize(imread(file_name), (200, 200))
            for file_name in batch_x]), np.array(batch_y)
```

GarbageDataSequence: the Sequence for garbage classification, explained

We build a GarbageDataSequence class that inherits from the Sequence base class.

class GarbageDataSequence(Sequence):

"""数据流生成器,每次迭代返回一个batch

可直接用于fit_generator的generator参数,能保证在多进程下的一个epoch中不会重复取相同的样本

"""

def __init__(self, img_paths, labels, batch_size, img_size, use_aug):

# 异常判断

self.x_y = np.hstack((np.array(img_paths).reshape(len(img_paths), 1), np.array(labels)))

self.batch_size = batch_size

self.img_size = img_size

self.alpha = 0.2

self.use_aug = use_aug

self.eraser = get_random_eraser(s_h=0.3, pixel_level=True)

def __len__(self):

return math.ceil(len(self.x_y) / self.batch_size)

@staticmethod

def center_img(img, size=None, fill_value=255):

"""改变图片尺寸到300x300,并且做填充使得图像处于中间位置

"""

h, w = img.shape[:2]

if size is None:

size = max(h, w)

shape = (size, size) + img.shape[2:]

background = np.full(shape, fill_value, np.uint8)

center_x = (size - w) // 2

center_y = (size - h) // 2

background[center_y:center_y + h, center_x:center_x + w] = img

return background

def preprocess_img(self, img_path):

"""图片的处理流程函数,数据增强、center_img处理

"""

# 1、图像读取,[180 , 200]-> (200)max(180, 200)->[300/200 * 180, 300/200 * 200]

# 这样做为了不使图形直接变形,后续在统一长宽

img = Image.open(img_path)

resize_scale = self.img_size[0] / max(img.size[:2])

img = img.resize((int(img.size[0] * resize_scale), int(img.size[1] * resize_scale)))

img = img.convert('RGB')

img = np.array(img)

# 2、数据增强:如果是训练集进行数据增强操作

# 先随机擦除,然后翻转

if self.use_aug:

img = self.eraser(img)

datagen = ImageDataGenerator(

width_shift_range=0.05,

height_shift_range=0.05,

horizontal_flip=True,

vertical_flip=True,

)

img = datagen.random_transform(img)

# 3、把图片大小调整到[300, 300, 3],调整的方式为直接填充小的坐标。为了模型需要

img = self.center_img(img, self.img_size[0])

return img

def __getitem__(self, idx):

# 1、处理图片大小、数据增强等过程

print(self.x_y)

batch_x = self.x_y[idx * self.batch_size: (idx + 1) * self.batch_size, 0]

batch_y = self.x_y[idx * self.batch_size: (idx + 1) * self.batch_size, 1:]

batch_x = np.array([self.preprocess_img(img_path) for img_path in batch_x])

batch_y = np.array(batch_y).astype(np.float32)

# print(batch_y[1])

# 2、mixup进行构造新的样本分布数据

# batch_x, batch_y = self.mixup(batch_x, batch_y)

# 3、输入模型的归一化数据

batch_x = self.preprocess_input(batch_x)

return batch_x, batch_y

def on_epoch_end(self):

np.random.shuffle(self.x_y)

def preprocess_input(self, x):

"""归一化处理样本特征值

:param x:

:return:

"""

assert x.ndim in (3, 4)

assert x.shape[-1] == 3

MEAN_RGB = [0.485 * 255, 0.456 * 255, 0.406 * 255]

STDDEV_RGB = [0.229 * 255, 0.224 * 255, 0.225 * 255]

x = x - np.array(MEAN_RGB)

x = x / np.array(STDDEV_RGB)

return x

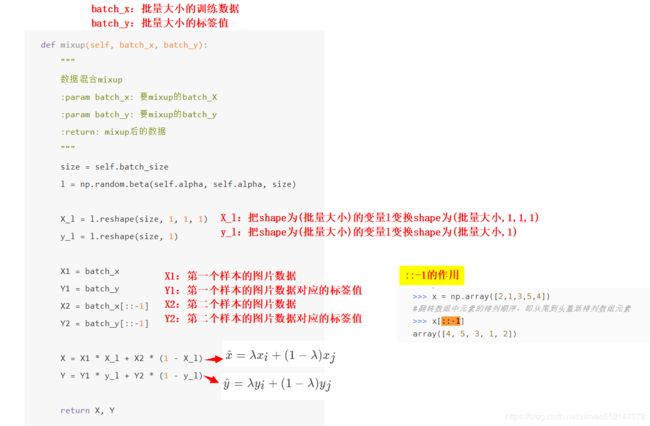

def mixup(self, batch_x, batch_y):

"""

数据混合mixup

:param batch_x: 要mixup的batch_X

:param batch_y: 要mixup的batch_y

:return: mixup后的数据

"""

size = self.batch_size

l = np.random.beta(self.alpha, self.alpha, size)

X_l = l.reshape(size, 1, 1, 1)

y_l = l.reshape(size, 1)

X1 = batch_x

Y1 = batch_y

X2 = batch_x[::-1]

Y2 = batch_y[::-1]

X = X1 * X_l + X2 * (1 - X_l)

Y = Y1 * y_l + Y2 * (1 - y_l)

return X, Y

if __name__ == '__main__':

train_data_dir = '../data/garbage_classify/train_data'

batch_size = 32

train_sequence, validation_sequence = data_from_sequence(train_data_dir, batch_size, num_classes=40, input_size=300)

for i in range(100):

print("第 %d 批次数据" % i)

batch_data, bacth_label = train_sequence.__getitem__(i)

print(batch_data.shape, bacth_label.shape)

batch_data, bacth_label = validation_sequence.__getitem__(i)
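You can check the geometry of preprocess_img and center_img with plain arithmetic. The image is scaled so its longer side equals the target, then pasted centered on a white target × target canvas. Below is a small sketch of that calculation; the function name is hypothetical, and it only computes sizes and offsets without touching pixels. Like PIL's img.size, it takes width before height.

```python
def resize_and_pad_geometry(width, height, target=300):
    """Return (new_width, new_height, left, top): the aspect-preserving resize used by
    preprocess_img (longer side -> target) and the offsets at which center_img
    pastes the resized image into a target x target canvas."""
    scale = target / max(width, height)
    new_w, new_h = int(width * scale), int(height * scale)
    left = (target - new_w) // 2
    top = (target - new_h) // 2
    return new_w, new_h, left, top


print(resize_and_pad_geometry(180, 200))  # (270, 300, 15, 0)
```

The 180 × 200 example from the code comment becomes 270 × 300, with 15 columns of white padding on the left and the rest on the right.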

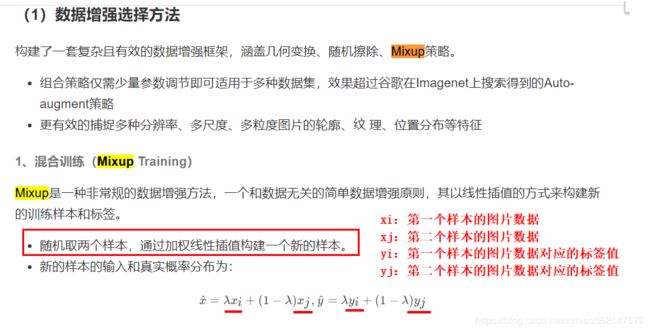

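(1) Choosing the data augmentation methods

We built a complex but effective data augmentation framework covering geometric transforms, random erasing, and the mixup strategy.

- The combined strategy needs only a little parameter tuning to work on many datasets, and it outperforms the AutoAugment policy that Google found by search on ImageNet

- It better captures features such as contours, textures, and spatial distribution in images of varied resolutions, scales, and granularities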

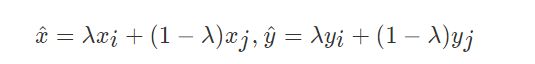

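1. Mixup training

Mixup is an unconventional data augmentation method: a simple, data-agnostic augmentation principle that builds new training samples and labels by linear interpolation.

- Randomly pick two samples and build a new sample by weighted linear interpolation.

- The input and target distribution of the new sample are $\tilde{x} = \lambda x_i + (1-\lambda) x_j$ and $\tilde{y} = \lambda y_i + (1-\lambda) y_j$, where $\lambda \sim \mathrm{Beta}(\alpha, \alpha)$ (α = 0.2 in GarbageDataSequence).

The mixup strategy is implemented as a method of GarbageDataSequence: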

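```python
# Method of GarbageDataSequence
def mixup(self, batch_x, batch_y):
    """Mixup: blend each sample with the sample at the mirrored position in the batch.

    :param batch_x: batch of images to mix
    :param batch_y: batch of labels to mix
    :return: mixed images and labels
    """
    # Use the actual batch size: the last batch can be smaller than self.batch_size
    size = batch_x.shape[0]
    # One mixing weight per sample, lambda ~ Beta(alpha, alpha)
    l = np.random.beta(self.alpha, self.alpha, size)
    X_l = l.reshape(size, 1, 1, 1)
    y_l = l.reshape(size, 1)
    # Pair sample i with sample size-1-i (the batch in reverse order)
    X1, Y1 = batch_x, batch_y
    X2, Y2 = batch_x[::-1], batch_y[::-1]
    X = X1 * X_l + X2 * (1 - X_l)
    Y = Y1 * y_l + Y2 * (1 - y_l)
    return X, Y
```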
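The method above pairs each sample with the batch in reverse order. Below is a standalone sketch of a common variant that pairs samples through a random permutation of the batch instead, so the same two images are not always mixed together. The function name mixup_batch is hypothetical, and the sketch works for batches of any size and image shape.

```python
import numpy as np


def mixup_batch(batch_x, batch_y, alpha=0.2, rng=None):
    """Mix each sample with a randomly chosen partner from the same batch.
    A separate weight lam ~ Beta(alpha, alpha) is drawn for every sample."""
    rng = np.random.default_rng() if rng is None else rng
    n = batch_x.shape[0]
    lam = rng.beta(alpha, alpha, n)
    perm = rng.permutation(n)                                # random partner for each sample
    lam_x = lam.reshape((n,) + (1,) * (batch_x.ndim - 1))    # broadcast over H, W, C
    lam_y = lam.reshape(n, 1)
    mixed_x = lam_x * batch_x + (1 - lam_x) * batch_x[perm]
    mixed_y = lam_y * batch_y + (1 - lam_y) * batch_y[perm]
    return mixed_x, mixed_y


rng = np.random.default_rng(0)
x = rng.random((5, 8, 8, 3))
y = np.eye(4)[[0, 1, 2, 3, 0]]
mx, my = mixup_batch(x, y, rng=rng)
print(mx.shape, my.sum(axis=1))   # each mixed label still sums to 1 (up to rounding)
```

With alpha = 0.2 the Beta distribution puts most of its mass near 0 and 1, so most mixed samples stay close to one of the two originals.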

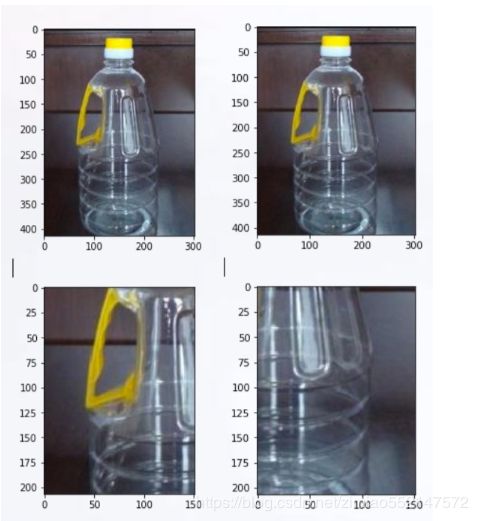

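2. How Random Erasing works:

During training, random erasing picks a random rectangular region of the original image and replaces its pixels with random values. The training images are thus occluded to varying degrees, which lowers the risk of overfitting and makes the model more robust. Random erasing is independent of parameter learning, so it can be integrated into any CNN-based recognition model.

It is implemented in the random_eraser.py file.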

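```python
import numpy as np


def get_random_eraser(p=0.5, s_l=0.02, s_h=0.4, r_1=0.3, r_2=1/0.3, v_l=0, v_h=255, pixel_level=False):
    """Return a function that applies Random Erasing to an H x W x C image array.

    p: probability of erasing an image
    s_l, s_h: min/max erased area as a fraction of the image area
    r_1, r_2: min/max aspect ratio (height / width) of the erased rectangle
    v_l, v_h: range of the random fill values
    pixel_level: give every erased pixel its own random value instead of one shared value
    """
    def eraser(input_img):
        img_h, img_w, img_c = input_img.shape
        # Leave the image unchanged with probability 1 - p
        p_1 = np.random.rand()
        if p_1 > p:
            return input_img

        # Sample area and aspect ratio until the rectangle fits inside the image
        while True:
            s = np.random.uniform(s_l, s_h) * img_h * img_w
            r = np.random.uniform(r_1, r_2)
            w = int(np.sqrt(s / r))
            h = int(np.sqrt(s * r))
            left = np.random.randint(0, img_w)
            top = np.random.randint(0, img_h)
            if left + w <= img_w and top + h <= img_h:
                break

        # Fill the rectangle with random values (modifies input_img in place)
        if pixel_level:
            c = np.random.uniform(v_l, v_h, (h, w, img_c))
        else:
            c = np.random.uniform(v_l, v_h)
        input_img[top:top + h, left:left + w, :] = c
        return input_img

    return eraser
```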

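Note: you do not need to write this code yourself; many ready-made implementations exist.

3. ImageDataGenerator: the main flip operations

Use it as follows for random horizontal/vertical flips: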

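```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

datagen = ImageDataGenerator(
    horizontal_flip=True,   # randomly flip left-right
    vertical_flip=True,     # randomly flip upside down
)
# Apply one random transform to a single H x W x C image array:
# img = datagen.random_transform(img)
```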

Output of the final test run:

```
...
Batch 10
(32, 300, 300, 3) [[0.0025 0.0025 0.0025 ... 0.0025 0.0025 0.0025]
 [0.0025 0.0025 0.0025 ... 0.0025 0.0025 0.0025]
 [0.0025 0.0025 0.0025 ... 0.0025 0.0025 0.0025]
 ...
 [0.0025 0.0025 0.0025 ... 0.0025 0.0025 0.0025]
 [0.0025 0.0025 0.0025 ... 0.0025 0.0025 0.0025]
 [0.0025 0.0025 0.0025 ... 0.0025 0.0025 0.0025]]
```

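4.9.5 Summary

- Know the problems posed by garbage classification competitions

- Know common optimizations (tricks) for image classification

- Common data augmentation methods for classification

  - mixup

  - Random erasing

  - Flipping

- Label smoothing regularization

- Common classification models and model/algorithm optimizations

EfficientNet B0 to B7 model source code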

initializers.py

```python
import numpy as np
import tensorflow as tf
import tensorflow.keras.backend as K
from tensorflow.keras.initializers import Initializer
from tensorflow.keras.utils import get_custom_objects


class EfficientConv2DKernelInitializer(Initializer):
    """Initialization for convolutional kernels.

    The main difference with tf.variance_scaling_initializer is that
    tf.variance_scaling_initializer uses a truncated normal with an uncorrected
    standard deviation, whereas here we use a normal distribution. Similarly,
    tf.contrib.layers.variance_scaling_initializer uses a truncated normal with
    a corrected standard deviation.

    Args:
      shape: shape of variable
      dtype: dtype of variable
      partition_info: unused

    Returns:
      an initialization for the variable
    """

    def __call__(self, shape, dtype=K.floatx(), **kwargs):
        kernel_height, kernel_width, _, out_filters = shape
        fan_out = int(kernel_height * kernel_width * out_filters)
        # tf.random.normal replaces the TF 1.x tf.random_normal
        return tf.random.normal(
            shape, mean=0.0, stddev=np.sqrt(2.0 / fan_out), dtype=dtype)


class EfficientDenseKernelInitializer(Initializer):
    """Initialization for dense kernels.

    This initialization is equal to
      tf.variance_scaling_initializer(scale=1.0/3.0, mode='fan_out',
                                      distribution='uniform').
    It is written out explicitly here for clarity.

    Args:
      shape: shape of variable
      dtype: dtype of variable

    Returns:
      an initialization for the variable
    """

    def __call__(self, shape, dtype=K.floatx(), **kwargs):
        init_range = 1.0 / np.sqrt(shape[1])
        # tf.random.uniform replaces the TF 1.x tf.random_uniform
        return tf.random.uniform(shape, -init_range, init_range, dtype=dtype)


conv_kernel_initializer = EfficientConv2DKernelInitializer()
dense_kernel_initializer = EfficientDenseKernelInitializer()

get_custom_objects().update({
    'EfficientDenseKernelInitializer': EfficientDenseKernelInitializer,
    'EfficientConv2DKernelInitializer': EfficientConv2DKernelInitializer,
})
```

layers.py

```python
import tensorflow as tf
import tensorflow.keras.backend as K
import tensorflow.keras.layers as KL
from tensorflow.keras.utils import get_custom_objects

"""
Notes on Swish (quick check):

    import tensorflow as tf
    import numpy as np
    x = np.array([[1., 8., 7.], [10., 14., 3.], [1., 2., 4.]])
    tf.math.sigmoid(x)
    tf.compat.v1.disable_eager_execution()
    x = np.array([[1., 8., 7.], [10., 14., 3.], [1., 2., 4.]])
    tf.nn.swish(x)

1. tf.nn.swish(x) wraps x * tf.sigmoid(beta * x) (here beta = 1).
   If tf.nn.swish(x) is used, tf.compat.v1.disable_eager_execution() must be used as well.
   If x * tf.sigmoid(beta * x) replaces tf.nn.swish(x),
   tf.compat.v1.disable_eager_execution() is not needed.

2. Note that, possibly depending on the environment, tf.nn.swish(x) raises an error here,
   so x * tf.sigmoid(beta * x) is used instead of tf.nn.swish(x).
   The error message is:
   tensorflow/core/grappler/utils/graph_view.cc:830] No registered 'swish_f32' OpKernel for GPU devices compatible with node
   {{node swish_75/swish_f32}} Registered:
"""


class Swish(KL.Layer):
    """Swish activation: x * sigmoid(x)."""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)

    def call(self, inputs, **kwargs):
        # return tf.nn.swish(inputs)
        return inputs * tf.math.sigmoid(inputs)


class DropConnect(KL.Layer):
    """During training, randomly zero the whole block output for individual samples."""

    def __init__(self, drop_connect_rate=0., **kwargs):
        super().__init__(**kwargs)
        self.drop_connect_rate = drop_connect_rate

    def call(self, inputs, training=None):

        def drop_connect():
            keep_prob = 1.0 - self.drop_connect_rate

            # Compute drop_connect tensor: 1 with probability keep_prob, else 0, per sample
            batch_size = tf.shape(inputs)[0]
            random_tensor = keep_prob
            random_tensor += tf.random.uniform([batch_size, 1, 1, 1], dtype=inputs.dtype)
            binary_tensor = tf.floor(random_tensor)
            # Rescale by 1 / keep_prob so the expected output matches the input
            output = tf.math.divide(inputs, keep_prob) * binary_tensor
            return output

        return K.in_train_phase(drop_connect, inputs, training=training)

    def get_config(self):
        config = super().get_config()
        config['drop_connect_rate'] = self.drop_connect_rate
        return config


get_custom_objects().update({
    'DropConnect': DropConnect,
    'Swish': Swish,
})
```

model.py

# Copyright 2019 The TensorFlow Authors. All Rights Reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# ==============================================================================

"""Contains definitions for EfficientNet model.

[1] Mingxing Tan, Quoc V. Le

EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks.

ICML'19, https://arxiv.org/abs/1905.11946

"""

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import collections

import math

import numpy as np

import six

from six.moves import xrange # pylint: disable=redefined-builtin

import tensorflow as tf

import tensorflow.keras.backend as K

import tensorflow.keras.models as KM

import tensorflow.keras.layers as KL

from tensorflow.keras.utils import get_file

from tensorflow.keras.initializers import Initializer

from .layers import Swish, DropConnect

from .params import get_model_params, IMAGENET_WEIGHTS

from .initializers import conv_kernel_initializer, dense_kernel_initializer

__all__ = ['EfficientNet', 'EfficientNetB0', 'EfficientNetB1', 'EfficientNetB2', 'EfficientNetB3',

'EfficientNetB4', 'EfficientNetB5', 'EfficientNetB6', 'EfficientNetB7']

class ConvKernalInitializer(Initializer):

def __call__(self, shape, dtype=K.floatx(), partition_info=None):

"""Initialization for convolutional kernels.

The main difference with tf.variance_scaling_initializer is that

tf.variance_scaling_initializer uses a truncated normal with an uncorrected

standard deviation, whereas here we use a normal distribution. Similarly,

tf.contrib.layers.variance_scaling_initializer uses a truncated normal with

a corrected standard deviation.

Args:

shape: shape of variable

dtype: dtype of variable

partition_info: unused

Returns:

an initialization for the variable

"""

del partition_info

kernel_height, kernel_width, _, out_filters = shape

fan_out = int(kernel_height * kernel_width * out_filters)

return tf.random.normal(

shape, mean=0.0, stddev=np.sqrt(2.0 / fan_out), dtype=dtype)

class DenseKernalInitializer(Initializer):

def __call__(self, shape, dtype=K.floatx(), partition_info=None):

"""Initialization for dense kernels.

This initialization is equal to

tf.variance_scaling_initializer(scale=1.0/3.0, mode='fan_out',

distribution='uniform').

It is written out explicitly here for clarity.

Args:

shape: shape of variable

dtype: dtype of variable

partition_info: unused

Returns:

an initialization for the variable

"""

del partition_info

init_range = 1.0 / np.sqrt(shape[1])

return tf.random.uniform(shape, -init_range, init_range, dtype=dtype)

def round_filters(filters, global_params):

"""Round number of filters based on depth multiplier."""

orig_f = filters

multiplier = global_params.width_coefficient

divisor = global_params.depth_divisor

min_depth = global_params.min_depth

if not multiplier:

return filters

filters *= multiplier

min_depth = min_depth or divisor

new_filters = max(min_depth, int(filters + divisor / 2) // divisor * divisor)

# Make sure that round down does not go down by more than 10%.

if new_filters < 0.9 * filters:

new_filters += divisor

# print('round_filter input={} output={}'.format(orig_f, new_filters))

return int(new_filters)

def round_repeats(repeats, global_params):

"""Round number of repeats based on depth multiplier."""

multiplier = global_params.depth_coefficient

if not multiplier:

return repeats

return int(math.ceil(multiplier * repeats))

def SEBlock(block_args, global_params):

num_reduced_filters = max(

1, int(block_args.input_filters * block_args.se_ratio))

filters = block_args.input_filters * block_args.expand_ratio

if global_params.data_format == 'channels_first':

channel_axis = 1

spatial_dims = [2, 3]

else:

channel_axis = -1

spatial_dims = [1, 2]

def block(inputs):

x = inputs

x = KL.Lambda(lambda a: K.mean(a, axis=spatial_dims, keepdims=True))(x)

x = KL.Conv2D(

num_reduced_filters,

kernel_size=[1, 1],

strides=[1, 1],

kernel_initializer=ConvKernalInitializer(),

padding='same',

use_bias=True

)(x)

x = Swish()(x)

# Excite

x = KL.Conv2D(

filters,

kernel_size=[1, 1],

strides=[1, 1],

kernel_initializer=ConvKernalInitializer(),

padding='same',

use_bias=True

)(x)

x = KL.Activation('sigmoid')(x)

out = KL.Multiply()([x, inputs])

return out

return block
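SEBlock has three steps:

1. Squeeze each channel to a single number by spatial averaging.
2. Pass the result through a 1×1 reduce convolution with max(1, int(input_filters * se_ratio)) channels and Swish, then a 1×1 expand convolution with sigmoid.
3. Multiply the input by these per-channel gates.

On a 1×1 feature map, a 1×1 convolution is just a matrix product, so the same data flow can be sketched in NumPy for a single channels_last feature map. The weights are random placeholders, not trained values. The settings input_filters=16, expand_ratio=6 and se_ratio=0.25 are illustrative.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def swish(x):
    return x * sigmoid(x)


def se_block(x, w_reduce, b_reduce, w_expand, b_expand):
    """Squeeze-and-excitation on one [H, W, C] feature map (channels_last)."""
    s = x.mean(axis=(0, 1))               # squeeze: global spatial average -> [C]
    s = swish(s @ w_reduce + b_reduce)    # 1x1 reduce conv -> [num_reduced_filters]
    s = sigmoid(s @ w_expand + b_expand)  # 1x1 expand conv -> [C], gates in (0, 1)
    return x * s                          # excite: rescale every channel of the input


input_filters, expand_ratio, se_ratio = 16, 6, 0.25
filters = input_filters * expand_ratio                       # 96 channels after expansion
num_reduced_filters = max(1, int(input_filters * se_ratio))  # 4, computed from input_filters

rng = np.random.default_rng(0)
x = rng.random((7, 7, filters)) + 0.1
w1, b1 = rng.normal(0, 0.1, (filters, num_reduced_filters)), np.zeros(num_reduced_filters)
w2, b2 = rng.normal(0, 0.1, (num_reduced_filters, filters)), np.zeros(filters)
out = se_block(x, w1, b1, w2, b2)
gates = out / x  # the per-channel scale that SE applied
```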

def MBConvBlock(block_args, global_params, drop_connect_rate=None):

batch_norm_momentum = global_params.batch_norm_momentum

batch_norm_epsilon = global_params.batch_norm_epsilon

if global_params.data_format == 'channels_first':

channel_axis = 1

spatial_dims = [2, 3]

else:

channel_axis = -1

spatial_dims = [1, 2]

has_se = (block_args.se_ratio is not None) and (

block_args.se_ratio > 0) and (block_args.se_ratio <= 1)

filters = block_args.input_filters * block_args.expand_ratio

kernel_size = block_args.kernel_size

def block(inputs):

if block_args.expand_ratio != 1:

x = KL.Conv2D(

filters,

kernel_size=[1, 1],

strides=[1, 1],

kernel_initializer=ConvKernalInitializer(),

padding='same',

use_bias=False

)(inputs)

x = KL.BatchNormalization(

axis=channel_axis,

momentum=batch_norm_momentum,

epsilon=batch_norm_epsilon

)(x)

x = Swish()(x)

else:

x = inputs

x = KL.DepthwiseConv2D(

[kernel_size, kernel_size],

strides=block_args.strides,

depthwise_initializer=ConvKernalInitializer(),

padding='same',

use_bias=False

)(x)

x = KL.BatchNormalization(

axis=channel_axis,

momentum=batch_norm_momentum,

epsilon=batch_norm_epsilon

)(x)

x = Swish()(x)

if has_se:

x = SEBlock(block_args, global_params)(x)

# output phase

x = KL.Conv2D(

block_args.output_filters,

kernel_size=[1, 1],

strides=[1, 1],

kernel_initializer=ConvKernalInitializer(),

padding='same',

use_bias=False

)(x)

x = KL.BatchNormalization(

axis=channel_axis,

momentum=batch_norm_momentum,

epsilon=batch_norm_epsilon

)(x)

if block_args.id_skip:

if all(

s == 1 for s in block_args.strides

) and block_args.input_filters == block_args.output_filters:

# only apply drop_connect if skip presents.

if drop_connect_rate:

x = DropConnect(drop_connect_rate)(x)

x = KL.Add()([x, inputs])

return x

return block
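MBConvBlock adds the identity shortcut only when three conditions hold: id_skip is set, every stride is 1, and input_filters equals output_filters. It applies DropConnect to the residual branch only in that case. The DropConnect layer itself is defined in layers.py, outside this excerpt. The sketch below assumes it follows the per-sample scheme of the official TensorFlow EfficientNet code. During training, each sample's branch is zeroed with probability drop_rate, and the surviving samples are rescaled by 1 / keep_prob. This is a NumPy illustration, not that layer.

```python
import numpy as np


def drop_connect(x, drop_rate, rng, training=True):
    """Zero the residual branch of randomly chosen samples and rescale the rest."""
    if not training or not drop_rate:
        return x  # identity at inference time
    keep_prob = 1.0 - drop_rate
    # One draw per sample, broadcast over H, W, C: floor(keep_prob + U[0, 1)) is 1 with probability keep_prob.
    mask_shape = (x.shape[0],) + (1,) * (x.ndim - 1)
    mask = np.floor(keep_prob + rng.random(mask_shape))
    return x / keep_prob * mask


rng = np.random.default_rng(0)
branch = np.ones((1000, 4, 4, 8))
dropped = drop_connect(branch, 0.2, rng)
per_sample = dropped.reshape(len(dropped), -1)
kept_fraction = (per_sample[:, 0] > 0).mean()  # close to keep_prob = 0.8
```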

def EfficientNet(input_shape, block_args_list, global_params, include_top=True, pooling=None):

batch_norm_momentum = global_params.batch_norm_momentum

batch_norm_epsilon = global_params.batch_norm_epsilon

if global_params.data_format == 'channels_first':

channel_axis = 1

else:

channel_axis = -1

# Stem part

inputs = KL.Input(shape=input_shape)

x = inputs

x = KL.Conv2D(

filters=round_filters(32, global_params),

kernel_size=[3, 3],

strides=[2, 2],

kernel_initializer=ConvKernalInitializer(),

padding='same',

use_bias=False

)(x)

x = KL.BatchNormalization(

axis=channel_axis,

momentum=batch_norm_momentum,

epsilon=batch_norm_epsilon

)(x)

x = Swish()(x)

# Blocks part

block_idx = 1

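# Count the blocks that are actually built (num_repeat after round_repeats), so the deepest block gets exactly drop_connect_rate.

n_blocks = sum([round_repeats(block_args.num_repeat, global_params) for block_args in block_args_list])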

drop_rate = global_params.drop_connect_rate or 0

drop_rate_dx = drop_rate / n_blocks

for block_args in block_args_list:

assert block_args.num_repeat > 0

# Update block input and output filters based on depth multiplier.

block_args = block_args._replace(

input_filters=round_filters(block_args.input_filters, global_params),

output_filters=round_filters(block_args.output_filters, global_params),

num_repeat=round_repeats(block_args.num_repeat, global_params)

)

# The first block needs to take care of stride and filter size increase.

x = MBConvBlock(block_args, global_params,

drop_connect_rate=drop_rate_dx * block_idx)(x)

block_idx += 1

if block_args.num_repeat > 1:

block_args = block_args._replace(input_filters=block_args.output_filters, strides=[1, 1])

for _ in range(block_args.num_repeat - 1):

x = MBConvBlock(block_args, global_params,

drop_connect_rate=drop_rate_dx * block_idx)(x)

block_idx += 1

# Head part

x = KL.Conv2D(

filters=round_filters(1280, global_params),

kernel_size=[1, 1],

strides=[1, 1],

kernel_initializer=ConvKernalInitializer(),

padding='same',

use_bias=False

)(x)

x = KL.BatchNormalization(

axis=channel_axis,

momentum=batch_norm_momentum,

epsilon=batch_norm_epsilon

)(x)

x = Swish()(x)

if include_top:

x = KL.GlobalAveragePooling2D(data_format=global_params.data_format)(x)

if global_params.dropout_rate > 0:

x = KL.Dropout(global_params.dropout_rate)(x)

x = KL.Dense(global_params.num_classes, kernel_initializer=DenseKernalInitializer())(x)

x = KL.Activation('softmax')(x)

else:

if pooling == 'avg':

x = KL.GlobalAveragePooling2D(data_format=global_params.data_format)(x)

elif pooling == 'max':

x = KL.GlobalMaxPooling2D(data_format=global_params.data_format)(x)

outputs = x

model = KM.Model(inputs, outputs)

return model
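In the blocks loop, block i (counting from 1) receives drop_connect_rate = drop_rate_dx * i, so the rate grows linearly with depth. The sketch below assumes n_blocks counts the blocks that are actually built after round_repeats. With that count, the deepest block gets exactly global_params.drop_connect_rate, which defaults to 0.2 in params.py. Dividing by the unrounded B0 count of 16 instead would end B3's 26 blocks at 0.2 × 26 / 16 = 0.325.

```python
import math

B0_REPEATS = [1, 2, 2, 3, 3, 4, 1]  # r values from the block strings in params.py


def block_drop_rates(depth_coefficient, drop_connect_rate=0.2):
    """Linearly increasing drop-connect rate for every MBConv block that is built."""
    repeats = [int(math.ceil(depth_coefficient * r)) for r in B0_REPEATS]
    n_blocks = sum(repeats)
    drop_rate_dx = drop_connect_rate / n_blocks
    return [drop_rate_dx * block_idx for block_idx in range(1, n_blocks + 1)]


b3_rates = block_drop_rates(1.4)
print(len(b3_rates), round(b3_rates[0], 4), round(b3_rates[-1], 4))  # -> 26 0.0077 0.2
```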

def _get_model_by_name(model_name, input_shape=None, include_top=True, weights=None, classes=1000, pooling=None):

"""Re-Implementation of EfficientNet for Keras

Reference:

https://arxiv.org/abs/1905.11946

Args:

input_shape: optional, if ``None`` default_input_shape is used

EfficientNetB0 - (224, 224, 3)

EfficientNetB1 - (240, 240, 3)

EfficientNetB2 - (260, 260, 3)

EfficientNetB3 - (300, 300, 3)

EfficientNetB4 - (380, 380, 3)

EfficientNetB5 - (456, 456, 3)

EfficientNetB6 - (528, 528, 3)

EfficientNetB7 - (600, 600, 3)

include_top: whether to include the fully-connected

layer at the top of the network.

weights: one of `None` (random initialization),

'imagenet' (pre-training on ImageNet).

classes: optional number of classes to classify images

into, only to be specified if `include_top` is True, and

if no `weights` argument is specified.

pooling: optional [None, 'avg', 'max'], if ``include_top=False``

add global pooling on top of the network

- avg: GlobalAveragePooling2D

- max: GlobalMaxPooling2D

Returns:

A Keras model instance.

"""

if weights not in {None, 'imagenet'}:

raise ValueError('Parameter `weights` should be one of [None, "imagenet"]')

if weights == 'imagenet' and model_name not in IMAGENET_WEIGHTS:

raise ValueError('There are no pretrained weights for {} model.'.format(model_name))

if weights == 'imagenet' and include_top and classes != 1000:

raise ValueError('If using `weights` and `include_top`'

' `classes` should be 1000')

block_args_list, global_params, default_input_shape = get_model_params(

model_name, override_params={'num_classes': classes}

)

if input_shape is None:

input_shape = (default_input_shape, default_input_shape, 3)

model = EfficientNet(input_shape, block_args_list, global_params, include_top=include_top, pooling=pooling)

model._name = model_name

if weights:

if not include_top:

weights_name = model_name + '-notop'

else:

weights_name = model_name

weights = IMAGENET_WEIGHTS[weights_name]

weights_path = get_file(

weights['name'],

weights['url'],

cache_subdir='models',

md5_hash=weights['md5'],

)

model.load_weights(weights_path)

return model

def EfficientNetB0(include_top=True, input_shape=None, weights=None, classes=1000, pooling=None):

return _get_model_by_name('efficientnet-b0', include_top=include_top, input_shape=input_shape,

weights=weights, classes=classes, pooling=pooling)

def EfficientNetB1(include_top=True, input_shape=None, weights=None, classes=1000, pooling=None):

return _get_model_by_name('efficientnet-b1', include_top=include_top, input_shape=input_shape,

weights=weights, classes=classes, pooling=pooling)

def EfficientNetB2(include_top=True, input_shape=None, weights=None, classes=1000, pooling=None):

return _get_model_by_name('efficientnet-b2', include_top=include_top, input_shape=input_shape,

weights=weights, classes=classes, pooling=pooling)

def EfficientNetB3(include_top=True, input_shape=None, weights=None, classes=1000, pooling=None):

return _get_model_by_name('efficientnet-b3', include_top=include_top, input_shape=input_shape,

weights=weights, classes=classes, pooling=pooling)

def EfficientNetB4(include_top=True, input_shape=None, weights=None, classes=1000, pooling=None):

return _get_model_by_name('efficientnet-b4', include_top=include_top, input_shape=input_shape,

weights=weights, classes=classes, pooling=pooling)

def EfficientNetB5(include_top=True, input_shape=None, weights=None, classes=1000, pooling=None):

return _get_model_by_name('efficientnet-b5', include_top=include_top, input_shape=input_shape,

weights=weights, classes=classes, pooling=pooling)

def EfficientNetB6(include_top=True, input_shape=None, weights=None, classes=1000, pooling=None):

return _get_model_by_name('efficientnet-b6', include_top=include_top, input_shape=input_shape,

weights=weights, classes=classes, pooling=pooling)

def EfficientNetB7(include_top=True, input_shape=None, weights=None, classes=1000, pooling=None):

return _get_model_by_name('efficientnet-b7', include_top=include_top, input_shape=input_shape,

weights=weights, classes=classes, pooling=pooling)

EfficientNetB0.__doc__ = _get_model_by_name.__doc__

EfficientNetB1.__doc__ = _get_model_by_name.__doc__

EfficientNetB2.__doc__ = _get_model_by_name.__doc__

EfficientNetB3.__doc__ = _get_model_by_name.__doc__

EfficientNetB4.__doc__ = _get_model_by_name.__doc__

EfficientNetB5.__doc__ = _get_model_by_name.__doc__

EfficientNetB6.__doc__ = _get_model_by_name.__doc__

EfficientNetB7.__doc__ = _get_model_by_name.__doc__

params.py

import os

import re

import collections

IMAGENET_WEIGHTS = {

'efficientnet-b0': {

'name': 'efficientnet-b0_imagenet_1000.h5',

'url': 'https://github.com/qubvel/efficientnet/releases/download/v0.0.1/efficientnet-b0_imagenet_1000.h5',

'md5': 'bca04d16b1b8a7c607b1152fe9261af7',

},

'efficientnet-b0-notop': {

'name': 'efficientnet-b0_imagenet_1000_notop.h5',

'url': 'https://github.com/qubvel/efficientnet/releases/download/v0.0.1/efficientnet-b0_imagenet_1000_notop.h5',

'md5': '45d2f3b6330c2401ef66da3961cad769',

},

'efficientnet-b1': {

'name': 'efficientnet-b1_imagenet_1000.h5',

'url': 'https://github.com/qubvel/efficientnet/releases/download/v0.0.1/efficientnet-b1_imagenet_1000.h5',

'md5': 'bd4a2b82f6f6bada74fc754553c464fc',

},

'efficientnet-b1-notop': {

'name': 'efficientnet-b1_imagenet_1000_notop.h5',

'url': 'https://github.com/qubvel/efficientnet/releases/download/v0.0.1/efficientnet-b1_imagenet_1000_notop.h5',

'md5': '884aed586c2d8ca8dd15a605ec42f564',

},

'efficientnet-b2': {

'name': 'efficientnet-b2_imagenet_1000.h5',

'url': 'https://github.com/qubvel/efficientnet/releases/download/v0.0.1/efficientnet-b2_imagenet_1000.h5',

'md5': '45b28b26f15958bac270ab527a376999',

},

'efficientnet-b2-notop': {

'name': 'efficientnet-b2_imagenet_1000_notop.h5',

'url': 'https://github.com/qubvel/efficientnet/releases/download/v0.0.1/efficientnet-b2_imagenet_1000_notop.h5',

'md5': '42fb9f2d9243d461d62b4555d3a53b7b',

},

'efficientnet-b3': {

'name': 'efficientnet-b3_imagenet_1000.h5',

'url': 'https://github.com/qubvel/efficientnet/releases/download/v0.0.1/efficientnet-b3_imagenet_1000.h5',

'md5': 'decd2c8a23971734f9d3f6b4053bf424',

},

'efficientnet-b3-notop': {

'name': 'efficientnet-b3_imagenet_1000_notop.h5',

'url': 'https://github.com/qubvel/efficientnet/releases/download/v0.0.1/efficientnet-b3_imagenet_1000_notop.h5',

'md5': '1f7d9a8c2469d2e3d3b97680d45df1e1',

},

}

GlobalParams = collections.namedtuple('GlobalParams', [

'batch_norm_momentum', 'batch_norm_epsilon', 'dropout_rate', 'data_format',

'num_classes', 'width_coefficient', 'depth_coefficient',

'depth_divisor', 'min_depth', 'drop_connect_rate',

])

GlobalParams.__new__.__defaults__ = (None,) * len(GlobalParams._fields)

BlockArgs = collections.namedtuple('BlockArgs', [

'kernel_size', 'num_repeat', 'input_filters', 'output_filters',

'expand_ratio', 'id_skip', 'strides', 'se_ratio'

])

# defaults will be a public argument for namedtuple in Python 3.7

# https://docs.python.org/3/library/collections.html#collections.namedtuple

BlockArgs.__new__.__defaults__ = (None,) * len(BlockArgs._fields)
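GlobalParams and BlockArgs give every field a default of None, and get_model_params later swaps in values with `_replace(**override_params)`. The sketch below shows that pattern on a cut-down, hypothetical three-field tuple, with num_classes=10 as an illustrative value. It also triggers the ValueError that the comment in get_model_params mentions for unknown field names. On Python 3.7+, `namedtuple(..., defaults=...)` achieves the same result as assigning `__new__.__defaults__`.

```python
import collections

Params = collections.namedtuple('Params', ['num_classes', 'dropout_rate', 'width_coefficient'])
# Every field defaults to None, as with GlobalParams and BlockArgs above.
Params.__new__.__defaults__ = (None,) * len(Params._fields)

base = Params(num_classes=1000, dropout_rate=0.3)
custom = base._replace(**{'num_classes': 10})  # what override_params does; base itself is unchanged

try:
    base._replace(num_class=10)  # misspelled field name
    typo_rejected = False
except ValueError:
    typo_rejected = True

print(custom, typo_rejected)
```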

def efficientnet_params(model_name):

"""Get efficientnet params based on model name."""

params_dict = {

# (width_coefficient, depth_coefficient, resolution, dropout_rate)

'efficientnet-b0': (1.0, 1.0, 224, 0.2),

'efficientnet-b1': (1.0, 1.1, 240, 0.2),

'efficientnet-b2': (1.1, 1.2, 260, 0.3),

'efficientnet-b3': (1.2, 1.4, 300, 0.3),

'efficientnet-b4': (1.4, 1.8, 380, 0.4),

'efficientnet-b5': (1.6, 2.2, 456, 0.4),

'efficientnet-b6': (1.8, 2.6, 528, 0.5),

'efficientnet-b7': (2.0, 3.1, 600, 0.5),

}

return params_dict[model_name]

class BlockDecoder(object):

"""Block Decoder for readability."""

def _decode_block_string(self, block_string):

"""Gets a block through a string notation of arguments."""

assert isinstance(block_string, str)

ops = block_string.split('_')

options = {}

for op in ops:

splits = re.split(r'(\d.*)', op)

if len(splits) >= 2:

key, value = splits[:2]

options[key] = value

if 's' not in options or len(options['s']) != 2:

raise ValueError('Strides options should be a pair of integers.')

return BlockArgs(

kernel_size=int(options['k']),

num_repeat=int(options['r']),

input_filters=int(options['i']),

output_filters=int(options['o']),

expand_ratio=int(options['e']),

id_skip=('noskip' not in block_string),

se_ratio=float(options['se']) if 'se' in options else None,

strides=[int(options['s'][0]), int(options['s'][1])])

def _encode_block_string(self, block):

"""Encodes a block to a string."""

args = [

'r%d' % block.num_repeat,

'k%d' % block.kernel_size,

's%d%d' % (block.strides[0], block.strides[1]),

'e%s' % block.expand_ratio,

'i%d' % block.input_filters,

'o%d' % block.output_filters

]

if block.se_ratio is not None and 0 < block.se_ratio <= 1:

args.append('se%s' % block.se_ratio)

if block.id_skip is False:

args.append('noskip')

return '_'.join(args)

def decode(self, string_list):

"""Decodes a list of string notations to specify blocks inside the network.

Args:

string_list: a list of strings, each string is a notation of block.

Returns:

A list of namedtuples to represent blocks arguments.

"""

assert isinstance(string_list, list)

blocks_args = []

for block_string in string_list:

blocks_args.append(self._decode_block_string(block_string))

return blocks_args

def encode(self, blocks_args):

"""Encodes a list of Blocks to a list of strings.

Args:

blocks_args: A list of namedtuples to represent blocks arguments.

Returns:

a list of strings, each string is a notation of block.

"""

block_strings = []

for block in blocks_args:

block_strings.append(self._encode_block_string(block))

return block_strings
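Each block string packs one stage of the network:

- `r`: number of repeats
- `k`: depthwise kernel size
- `s`: strides, one digit per axis
- `e`: expand ratio
- `i` / `o`: input / output filters
- `se`: squeeze-and-excitation ratio
- `noskip` (optional): disables the identity shortcut

Below is a standalone sketch of the same parsing rule. It returns a plain dict instead of BlockArgs, and the function name is illustrative.

```python
import re


def decode_block_string(block_string):
    """Parse e.g. 'r2_k3_s22_e6_i16_o24_se0.25' into a dict of block arguments."""
    options = {}
    for op in block_string.split('_'):
        # Split the letters from the number that follows: 'se0.25' -> ['se', '0.25', '']
        splits = re.split(r'(\d.*)', op)
        if len(splits) >= 2:
            key, value = splits[:2]
            options[key] = value
    return {
        'num_repeat': int(options['r']),
        'kernel_size': int(options['k']),
        'strides': [int(options['s'][0]), int(options['s'][1])],
        'expand_ratio': int(options['e']),
        'input_filters': int(options['i']),
        'output_filters': int(options['o']),
        'se_ratio': float(options['se']) if 'se' in options else None,
        'id_skip': 'noskip' not in block_string,
    }


print(decode_block_string('r2_k3_s22_e6_i16_o24_se0.25'))
```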

def efficientnet(width_coefficient=None,

depth_coefficient=None,

dropout_rate=0.2,

drop_connect_rate=0.2):

"""Creates an EfficientNet model."""

blocks_args = [

'r1_k3_s11_e1_i32_o16_se0.25', 'r2_k3_s22_e6_i16_o24_se0.25',

'r2_k5_s22_e6_i24_o40_se0.25', 'r3_k3_s22_e6_i40_o80_se0.25',

'r3_k5_s11_e6_i80_o112_se0.25', 'r4_k5_s22_e6_i112_o192_se0.25',

'r1_k3_s11_e6_i192_o320_se0.25',

]

global_params = GlobalParams(

batch_norm_momentum=0.99,

batch_norm_epsilon=1e-3,

dropout_rate=dropout_rate,

drop_connect_rate=drop_connect_rate,

data_format='channels_last',

num_classes=1000,

width_coefficient=width_coefficient,

depth_coefficient=depth_coefficient,

depth_divisor=8,

min_depth=None)

decoder = BlockDecoder()

return decoder.decode(blocks_args), global_params

def get_model_params(model_name, override_params=None):

"""Get the block args and global params for a given model."""

if model_name.startswith('efficientnet'):

width_coefficient, depth_coefficient, input_shape, dropout_rate = (

efficientnet_params(model_name))

blocks_args, global_params = efficientnet(

width_coefficient, depth_coefficient, dropout_rate)

else:

raise NotImplementedError('model name is not pre-defined: %s' % model_name)

if override_params:

# ValueError will be raised here if override_params has fields not included

# in global_params.

global_params = global_params._replace(**override_params)

#print('global_params= %s', global_params)

#print('blocks_args= %s', blocks_args)

return blocks_args, global_params, input_shape

preprocessing.py

import numpy as np

from skimage.transform import resize

MEAN_RGB = [0.485 * 255, 0.456 * 255, 0.406 * 255]

STDDEV_RGB = [0.229 * 255, 0.224 * 255, 0.225 * 255]

MAP_INTERPOLATION_TO_ORDER = {

'nearest': 0,

'bilinear': 1,

'biquadratic': 2,

'bicubic': 3,

}

def center_crop_and_resize(image, image_size, crop_padding=32, interpolation='bicubic'):

assert image.ndim in {2, 3}

assert interpolation in MAP_INTERPOLATION_TO_ORDER.keys()

h, w = image.shape[:2]

padded_center_crop_size = int((image_size / (image_size + crop_padding)) * min(h, w))

offset_height = ((h - padded_center_crop_size) + 1) // 2

offset_width = ((w - padded_center_crop_size) + 1) // 2

image_crop = image[offset_height:padded_center_crop_size + offset_height,

offset_width:padded_center_crop_size + offset_width]

resized_image = resize(

image_crop,

(image_size, image_size),

order=MAP_INTERPOLATION_TO_ORDER[interpolation],

preserve_range=True,

)

return resized_image
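center_crop_and_resize first cuts a centered square, then resizes it to image_size. The square's side is image_size / (image_size + crop_padding) of the shorter image side, which is 224 / 256 = 87.5% for B0 with the default crop_padding=32. The sketch below isolates the crop arithmetic for an assumed 480×640 input.

```python
def center_crop_box(h, w, image_size, crop_padding=32):
    """Return (top, left, side) of the square crop used by center_crop_and_resize."""
    side = int((image_size / (image_size + crop_padding)) * min(h, w))
    top = ((h - side) + 1) // 2
    left = ((w - side) + 1) // 2
    return top, left, side


print(center_crop_box(480, 640, 224))  # B0 input size -> (30, 110, 420)
```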

def preprocess_input(x):

assert x.ndim in (3, 4)

assert x.shape[-1] == 3

x = x - np.array(MEAN_RGB)

x = x / np.array(STDDEV_RGB)

return x

tf.keras.Sequence

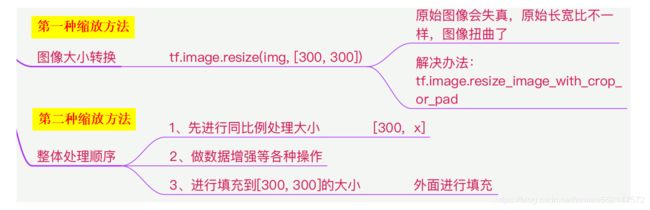

Data mixing (mixup)
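The mixup operation named in this heading is not spelled out in the text that follows. The sketch below uses the commonly cited formulation from Zhang et al.: draw λ from Beta(α, α) and blend both the images and the one-hot labels of two samples with the same λ. Two details are assumptions, not settings taken from this document: α = 0.2, and pairing each sample with a shuffled copy of its own batch.

```python
import numpy as np


def mixup_batch(x, y, alpha=0.2, rng=None):
    """Blend each sample (and its one-hot label) with a randomly chosen partner from the same batch.

    x: float array [batch, H, W, C]; y: one-hot labels [batch, num_classes].
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)    # mixing weight lambda ~ Beta(alpha, alpha), shared by the batch
    perm = rng.permutation(len(x))  # partner index for every sample
    x_mix = lam * x + (1.0 - lam) * x[perm]
    y_mix = lam * y + (1.0 - lam) * y[perm]
    return x_mix, y_mix, lam


rng = np.random.default_rng(0)
images = rng.random((8, 32, 32, 3))
labels = np.eye(4)[rng.integers(0, 4, size=8)]  # 4 classes, purely illustrative
mixed_images, mixed_labels, lam = mixup_batch(images, labels, rng=rng)
```

Because the same λ weights both images and labels, every mixed label row still sums to 1, so it can be used directly with a categorical cross-entropy loss.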

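Resizing the loaded images to a specified height and width

tf.image.resize_with_crop_or_pad(image, target_height, target_width)

Note that this function does not scale the image. It reaches the target size by center-cropping the image, padding it evenly with zeros, or both.

Args:

image: a 4-D tensor of shape [batch, height, width, channels] or a 3-D tensor of shape [height, width, channels].

target_height: target height.

target_width: target width.

Returns:

The cropped and/or padded image. If image is 4-D, a 4-D tensor of shape [batch, new_height, new_width, channels].

If image is 3-D, a 3-D tensor of shape [new_height, new_width, channels].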
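tf.image.resize_with_crop_or_pad crops or pads around the center without rescaling. Its effect on a single [H, W, C] image can be mimicked in NumPy as below. This is an illustrative approximation written for this section, and the helper name is made up. It is not TensorFlow's implementation, and the offsets TensorFlow picks when the size difference is odd may differ.

```python
import numpy as np


def crop_or_pad_center(image, target_height, target_width):
    """Center-crop and/or zero-pad an [H, W, C] array to the target size, without scaling."""
    h, w = image.shape[:2]
    # Crop: keep a centered window along any axis that is larger than the target.
    top = max((h - target_height) // 2, 0)
    left = max((w - target_width) // 2, 0)
    cropped = image[top:top + target_height, left:left + target_width]
    # Pad: place the (possibly cropped) image in the middle of a zero canvas.
    ch, cw = cropped.shape[:2]
    out = np.zeros((target_height, target_width) + image.shape[2:], dtype=image.dtype)
    pad_top = (target_height - ch) // 2
    pad_left = (target_width - cw) // 2
    out[pad_top:pad_top + ch, pad_left:pad_left + cw] = cropped
    return out


small = crop_or_pad_center(np.ones((180, 200, 3), dtype=np.float32), 300, 300)  # padded only
tall = crop_or_pad_center(np.ones((400, 100, 3), dtype=np.float32), 300, 300)   # cropped and padded
```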

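# Suppose the loaded image is [180, 200] and we want to bring it to [300, 300]. This takes two steps.

# Step 1: take the larger of the two sides, 200, and compute the scale factor 300 / 200 = 1.5; then [1.5*180, 1.5*200] gives [270, 300].

# Step 1 keeps the image from being distorted: both sides are multiplied by the same factor, so one side (or both) ends up at 300.

# Step 2: if both sides are already 300 after step 1, step 2 is skipped; if only one side is 300, the other side still has to be filled.

# The padding function center_img() (defined below) pads the side that is shorter than 300 up to 300.

from PIL import Image

import numpy as np

img = Image.open(img_path)  # img_path: path to one image file

# PIL's img.size is (width, height); scale so that the longer side becomes 300

resize_scale = 300 / max(img.size[:2])

img = img.resize((int(img.size[0] * resize_scale), int(img.size[1] * resize_scale)))

img = img.convert('RGB')

img = np.array(img)

# Bring the image to [300, 300, 3] by padding the shorter side, as the model's input size requires

img = center_img(img, 300)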

def center_img(img, size=None, fill_value=255):

"""Pad the image to a size x size square (300x300 here) with the image centered; fill_value=255 pads with white.

"""

h, w = img.shape[:2]

if size is None:

size = max(h, w)

shape = (size, size) + img.shape[2:]

background = np.full(shape, fill_value, np.uint8)

center_x = (size - w) // 2

center_y = (size - h) // 2

background[center_y:center_y + h, center_x:center_x + w] = img

return background
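The two-step procedure described above can be checked without PIL: scale the longer side to 300, then pad with center_img. In this sketch, nearest-neighbour index sampling stands in for PIL's Image.resize. That substitution is an assumption made only to keep the example dependency-free. The padding step mirrors center_img with fill_value=255. For the [180, 200] example, it confirms the scaled size [270, 300] and 15 rows of white padding above and below.

```python
import numpy as np


def scaled_size(height, width, target=300):
    """(height, width) after scaling the longer side to `target` with the aspect ratio kept."""
    scale = target / max(height, width)
    return int(height * scale), int(width * scale)


def letterbox(img, target=300, fill_value=255):
    """Aspect-preserving resize (nearest neighbour, illustration only) followed by centered padding."""
    h, w = img.shape[:2]
    new_h, new_w = scaled_size(h, w, target)
    rows = (np.arange(new_h) * h / new_h).astype(int)  # source row for each output row
    cols = (np.arange(new_w) * w / new_w).astype(int)  # source column for each output column
    resized = img[rows][:, cols]
    canvas = np.full((target, target) + img.shape[2:], fill_value, dtype=np.uint8)
    top = (target - new_h) // 2
    left = (target - new_w) // 2
    canvas[top:top + new_h, left:left + new_w] = resized
    return canvas


padded = letterbox(np.zeros((180, 200, 3), dtype=np.uint8))
print(scaled_size(180, 200), padded.shape)  # -> (270, 300) (300, 300, 3)
```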