前言

Prometheus之于kubernetes(监控领域),如kubernetes之于容器编排。

随着heapster不再开发和维护以及influxdb 集群方案不再开源,heapster+influxdb的监控方案,只适合一些规模比较小的k8s集群。而prometheus整个社区非常活跃,除了官方社区提供了一系列高质量的exporter,例如node_exporter等。Telegraf(集中采集metrics) + prometheus的方案,也是一种减少部署和管理各种exporter工作量的很好的方案。

今天主要讲讲我司在使用prometheus过程中,存储方面的一些实战经验。

Prometheus 储存瓶颈

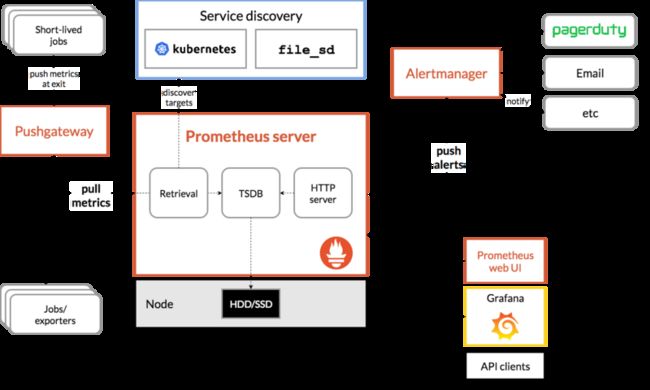

通过prometheus的架构图可以看出,prometheus提供了本地存储,即tsdb时序数据库。本地存储的优势就是运维简单,缺点就是无法海量的metrics持久化和数据存在丢失的风险,我们在实际使用过程中,出现过几次wal文件损坏,无法再写入的问题。

当然prometheus2.0以后压缩数据能力得到了很大的提升。为了解决单节点存储的限制,prometheus没有自己实现集群存储,而是提供了远程读写的接口,让用户自己选择合适的时序数据库来实现prometheus的扩展性。

prometheus通过下面两种方式来实现与其他的远端存储系统对接

- Prometheus 按照标准的格式将metrics写到远端存储

- prometheus 按照标准格式从远端的url来读取metrics

![]()

metrics的持久化的意义和价值

其实监控不仅仅是体现在可以实时掌握系统运行情况,及时报警这些。而且监控所采集的数据,在以下几个方面是有价值的

- 资源的审计和计费。这个需要保存一年甚至多年的数据的。

- 故障责任的追查

- 后续的分析和挖掘,甚至是利用AI,可以实现报警规则的设定的智能化,故障的根因分析以及预测某个应用的qps的趋势,提前HPA等,当然这是现在流行的AIOPS范畴了。

Prometheus 数据持久化方案

方案选型

社区中支持prometheus远程读写的方案

- AppOptics: write

- Chronix: write

- Cortex: read and write

- CrateDB: read and write

- Elasticsearch: write

- Gnocchi: write

- Graphite: write

- InfluxDB: read and write

- OpenTSDB: write

- PostgreSQL/TimescaleDB: read and write

- SignalFx: write

- clickhouse: read and write

选型方案需要具备以下几点

- 满足数据的安全性,需要支持容错,备份

- 写入性能要好,支持分片

- 技术方案不复杂

- 用于后期分析的时候,查询语法友好

- grafana读取支持,优先考虑

- 需要同时支持读写

基于以上的几点,clickhouse满足我们使用场景。

Clickhouse是一个高性能的列式数据库,因为侧重于分析,所以支持丰富的分析函数。

下面是Clickhouse官方推荐的几种使用场景:

- Web and App analytics

- Advertising networks and RTB

- Telecommunications

- E-commerce and finance

- Information security

- Monitoring and telemetry

- Time series

- Business intelligence

- Online games

- Internet of Things

ck适合用于存储Time series

此外社区已经有graphouse项目,把ck作为Graphite的存储。

性能测试

写入测试

本地mac,docker 启动单台ck,承接了3个集群的metrics,均值达到12910条/s。写入毫无压力。其实在网盟等公司,实际使用时,达到30万/s。

查询测试

fbe6a4edc3eb :) select count(*) from metrics.samples;

SELECT count(*)

FROM metrics.samples

┌──count()─┐

│ 22687301 │

└──────────┘

1 rows in set. Elapsed: 0.014 sec. Processed 22.69 million rows, 45.37 MB (1.65 billion rows/s., 3.30 GB/s.)其中最有可能耗时的查询:

1)查询聚合sum

fbe6a4edc3eb :) select sum(val) from metrics.samples where arrayExists(x -> 1 == match(x, 'cid=9'),tags) = 1 and name = 'machine_cpu_cores' and ts > '2017-07-11 08:00:00'

SELECT sum(val)

FROM metrics.samples

WHERE (arrayExists(x -> (1 = match(x, 'cid=9')), tags) = 1) AND (name = 'machine_cpu_cores') AND (ts > '2017-07-11 08:00:00')

┌─sum(val)─┐

│ 6324 │

└──────────┘

1 rows in set. Elapsed: 0.022 sec. Processed 57.34 thousand rows, 34.02 MB (2.66 million rows/s., 1.58 GB/s.)2)group by 查询

fbe6a4edc3eb :) select sum(val), time from metrics.samples where arrayExists(x -> 1 == match(x, 'cid=9'),tags) = 1 and name = 'machine_cpu_cores' and ts > '2017-07-11 08:00:00' group by toDate(ts) as time;

SELECT

sum(val),

time

FROM metrics.samples

WHERE (arrayExists(x -> (1 = match(x, 'cid=9')), tags) = 1) AND (name = 'machine_cpu_cores') AND (ts > '2017-07-11 08:00:00')

GROUP BY toDate(ts) AS time

┌─sum(val)─┬───────time─┐

│ 6460 │ 2018-07-11 │

│ 136 │ 2018-07-12 │

└──────────┴────────────┘

2 rows in set. Elapsed: 0.023 sec. Processed 64.11 thousand rows, 36.21 MB (2.73 million rows/s., 1.54 GB/s.)

3) 正则表达式

fbe6a4edc3eb :) select sum(val) from metrics.samples where name = 'container_memory_rss' and arrayExists(x -> 1 == match(x, '^pod_name=ofo-eva-hub'),tags) = 1 ;

SELECT sum(val)

FROM metrics.samples

WHERE (name = 'container_memory_rss') AND (arrayExists(x -> (1 = match(x, '^pod_name=ofo-eva-hub')), tags) = 1)

┌─────sum(val)─┐

│ 870016516096 │

└──────────────┘

1 rows in set. Elapsed: 0.142 sec. Processed 442.37 thousand rows, 311.52 MB (3.11 million rows/s., 2.19 GB/s.)总结:

利用好所建索引,即使在大数据量下,查询性能非常好。

方案设计

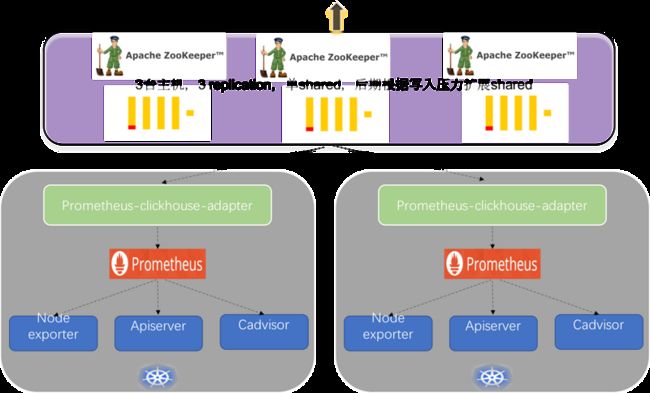

关于此架构,有以下几点:

- 每个k8s集群部署一个Prometheus-clickhouse-adapter 。关于Prometheus-clickhouse-adapter该组件,下面我们会详细解读。

- clickhouse 集群部署,需要zk集群做一致性表数据复制。

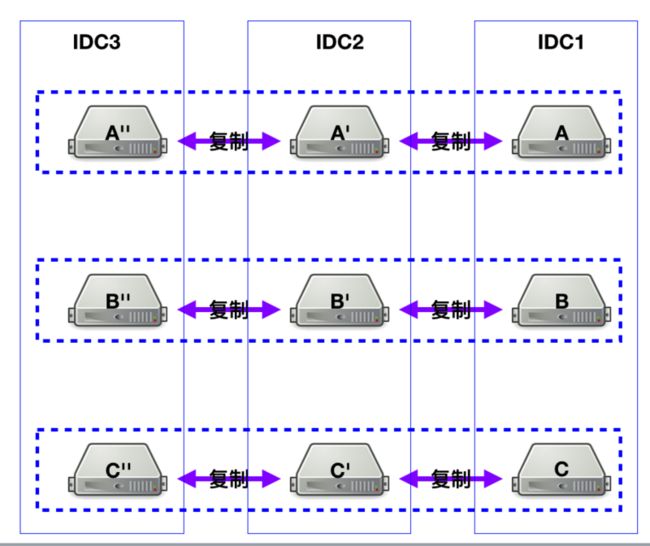

而clickhouse 的集群示意图如下:

- ReplicatedMergeTree + Distributed。ReplicatedMergeTree里,共享同一个ZK路径的表,会相互,注意是,相互同步数据

- 每个IDC有3个分片,各自占1/3数据

- 每个节点,依赖ZK,各自有2个副本

这块详细步骤和思路,请参考ClickHouse集群搭建从0到1。感谢新浪的鹏哥指点。

zk集群部署注意事项:

- 安装 ZooKeeper 3.4.9或更高版本的稳定版本

- 不要使用zk的默认配置,默认配置就是一个定时炸弹。

The ZooKeeper server won't delete files from old snapshots and logs when using the default configuration (see autopurge), and this is the responsibility of the operator.ck官方给出的配置如下zoo.cfg:

# http://hadoop.apache.org/zookeeper/docs/current/zookeeperAdmin.html

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=30000

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=10

maxClientCnxns=2000

maxSessionTimeout=60000000

# the directory where the snapshot is stored.

dataDir=/opt/zookeeper/{{ cluster['name'] }}/data

# Place the dataLogDir to a separate physical disc for better performance

dataLogDir=/opt/zookeeper/{{ cluster['name'] }}/logs

autopurge.snapRetainCount=10

autopurge.purgeInterval=1

# To avoid seeks ZooKeeper allocates space in the transaction log file in

# blocks of preAllocSize kilobytes. The default block size is 64M. One reason

# for changing the size of the blocks is to reduce the block size if snapshots

# are taken more often. (Also, see snapCount).

preAllocSize=131072

# Clients can submit requests faster than ZooKeeper can process them,

# especially if there are a lot of clients. To prevent ZooKeeper from running

# out of memory due to queued requests, ZooKeeper will throttle clients so that

# there is no more than globalOutstandingLimit outstanding requests in the

# system. The default limit is 1,000.ZooKeeper logs transactions to a

# transaction log. After snapCount transactions are written to a log file a

# snapshot is started and a new transaction log file is started. The default

# snapCount is 10,000.

snapCount=3000000

# If this option is defined, requests will be will logged to a trace file named

# traceFile.year.month.day.

#traceFile=

# Leader accepts client connections. Default value is "yes". The leader machine

# coordinates updates. For higher update throughput at thes slight expense of

# read throughput the leader can be configured to not accept clients and focus

# on coordination.

leaderServes=yes

standaloneEnabled=false

dynamicConfigFile=/etc/zookeeper-{{ cluster['name'] }}/conf/zoo.cfg.dynamic每个版本的ck配置文件不太一样,这里贴出一个390版本的

information

/data/ck/log/clickhouse-server.log

/data/ck/log/clickhouse-server.err.log

1000M

10

8123

9000

/etc/clickhouse-server/server.crt

/etc/clickhouse-server/server.key

/etc/clickhouse-server/dhparam.pem

none

true

true

sslv2,sslv3

true

true

true

sslv2,sslv3

true

RejectCertificateHandler

9009

0.0.0.0

4096

3

100

8589934592

5368709120

/data/ck/data/

/data/ck/tmp/

/data/ck/user_files/

users.xml

default

default

false

ck11.ruly.xxx.net

9000

ck12.ruly.xxx.net

9000

zk1.ruly.xxx.net

2181

zk2.ruly.xxx.net

2181

zk3.ruly.xxx.net

2181

1

ck11.ruly.ofo.net

3600

3600

60

system

query_log

toYYYYMM(event_date)

7500

*_dictionary.xml

/clickhouse/task_queue/ddl

/var/lib/clickhouse/format_schemas/

Prometheus-Clickhuse-Adapter组件

Prometheus-Clickhuse-Adapter(Prom2click) 是一个将clickhouse作为prometheus 数据远程存储的适配器。

prometheus-clickhuse-adapter,该项目缺乏日志,对于一个实际生产的项目,是不够的,此外一些数据库连接细节实现的也不够完善,已经在实际使用过程中将改进部分作为pr提交。

在实际使用过程中,要注意并发写入数据的数量,及时调整启动参数ch.batch 的大小,实际就是批量写入ck的数量,目前我们设置的是65536。因为ck的Merge引擎有一个300的限制,超过会报错

Too many parts (300). Merges are processing significantly slower than inserts300是指 processing,不是指一次批量插入的条数。

总结

这篇文章主要讲了我司Prometheus在存储方面的探索和实战的一点经验。后续会讲Prometheus查询和采集分离的高可用架构方案。