PyTorch handwritten digit recognition: training a custom network and comparing it with LeNet

Import the necessary modules

import torch
from torch.autograd import Variable
import numpy as np
import matplotlib.pyplot as plt
from torch import nn, optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

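Define a custom network

The network is built from convolution, batch normalization, ReLU, and max-pooling layers, followed by three fully connected layers.

Network definition: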

class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        # Shape comments are (batch, channels, height, width) for 1x28x28 input
        self.layer1 = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3),  # (b, 16, 26, 26)
            nn.BatchNorm2d(16),
            nn.ReLU(inplace=True))
        self.layer2 = nn.Sequential(
            nn.Conv2d(16, 32, kernel_size=3),  # (b, 32, 24, 24)
            nn.BatchNorm2d(32),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2))  # (b, 32, 12, 12)
        self.layer3 = nn.Sequential(
            nn.Conv2d(32, 64, kernel_size=3),  # (b, 64, 10, 10)
            nn.BatchNorm2d(64),
            nn.ReLU(inplace=True))
        self.layer4 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3),  # (b, 128, 8, 8)
            nn.BatchNorm2d(128),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=2, stride=2))  # (b, 128, 4, 4)
        self.fc = nn.Sequential(
            nn.Linear(128 * 4 * 4, 1024),
            nn.ReLU(inplace=True),
            nn.Linear(1024, 128),
            nn.ReLU(inplace=True),
            nn.Linear(128, 10))

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = x.view(x.size(0), -1)  # flatten to (b, 128*4*4)
        x = self.fc(x)
        return x
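The shape comments in the CNN above, and the `128*4*4` input size of the first linear layer, follow from the usual output-size formula for convolution and pooling layers, `(size + 2*padding - kernel) // stride + 1`. The sketch below traces the spatial size of a 28x28 MNIST image through the six size-changing layers. The helper name `conv_out` is made up for this illustration and is not part of PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28                 # MNIST images are 28x28
trace = [size]
# (kernel, stride) of every layer in CNN that changes the spatial size
for kernel, stride in [(3, 1),   # layer1 conv
                       (3, 1),   # layer2 conv
                       (2, 2),   # layer2 max pool
                       (3, 1),   # layer3 conv
                       (3, 1),   # layer4 conv
                       (2, 2)]:  # layer4 max pool
    size = conv_out(size, kernel, stride)
    trace.append(size)

flat_features = 128 * size * size  # channels * height * width after layer4
print(trace)          # [28, 26, 24, 12, 10, 8, 4]
print(flat_features)  # 2048
```

If the kernel sizes or the input resolution change, the same trace gives the new value to use in the first `nn.Linear`.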

Define the LeNet network structure

# Define a LeNet-5-style network

class Lenet(nn.Module):
    def __init__(self):
        super(Lenet, self).__init__()
        # Note: this version has no activation functions between layers
        layer1 = nn.Sequential()
        layer1.add_module('conv1', nn.Conv2d(1, 6, 3, padding=1))  # (b, 6, 28, 28)
        layer1.add_module('pool1', nn.MaxPool2d(2, 2))  # (b, 6, 14, 14)
        self.layer1 = layer1

        layer2 = nn.Sequential()
        layer2.add_module('conv2', nn.Conv2d(6, 16, 5))  # (b, 16, 10, 10)
        layer2.add_module('pool2', nn.MaxPool2d(2, 2))  # (b, 16, 5, 5)
        self.layer2 = layer2

        layer3 = nn.Sequential()
        layer3.add_module('fc1', nn.Linear(400, 120))  # 400 = 16 * 5 * 5
        layer3.add_module('fc2', nn.Linear(120, 84))
        layer3.add_module('fc3', nn.Linear(84, 10))
        self.layer3 = layer3

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = x.view(x.size(0), -1)  # flatten to (b, 400)
        x = self.layer3(x)
        return x
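Since the point of the post is to compare the two networks, it may help to see how different they are in size. The sketch below counts learnable parameters by hand from the layer definitions above, assuming the PyTorch defaults (a bias term on every convolution and linear layer, and a learnable scale and shift per channel in batch norm). The helper names are made up for this illustration.

```python
def conv_params(c_in, c_out, k):
    """Weights plus one bias per output channel of a k x k convolution."""
    return c_out * (c_in * k * k + 1)

def linear_params(n_in, n_out):
    """Weights plus one bias per output unit of a fully connected layer."""
    return n_out * (n_in + 1)

def bn_params(channels):
    """Learnable scale and shift per channel of a batch norm layer."""
    return 2 * channels

lenet_total = (conv_params(1, 6, 3) + conv_params(6, 16, 5)
               + linear_params(400, 120) + linear_params(120, 84)
               + linear_params(84, 10))

cnn_total = (conv_params(1, 16, 3) + bn_params(16)
             + conv_params(16, 32, 3) + bn_params(32)
             + conv_params(32, 64, 3) + bn_params(64)
             + conv_params(64, 128, 3) + bn_params(128)
             + linear_params(128 * 4 * 4, 1024)
             + linear_params(1024, 128) + linear_params(128, 10))

print(lenet_total)  # 61610
print(cnn_total)    # 2328298
```

Under these assumptions the custom CNN has roughly 38 times as many parameters as the LeNet variant, most of them in its first fully connected layer.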

Start training


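# Define the hyperparameters
batch_size = 64
learning_rate = 1e-2
num_epoches = 20

# Preprocessing: convert the image to a tensor, then standardize it
# by subtracting the mean and dividing by the standard deviation
data_tf = transforms.Compose(
    [transforms.ToTensor(), transforms.Normalize([0.5], [0.5])])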
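To make the preprocessing concrete: `ToTensor()` scales pixel values to the range [0, 1], and `Normalize([0.5], [0.5])` then computes `(pixel - mean) / std` per channel. The plain-Python sketch below reproduces that arithmetic for three sample pixel values. The function `normalize` is a stand-in written for this illustration, not the torchvision implementation.

```python
def normalize(pixel, mean=0.5, std=0.5):
    """Same arithmetic as transforms.Normalize for one pixel value."""
    return (pixel - mean) / std

# Black, mid-gray, and white pixels after ToTensor() has scaled them to [0, 1]
values = [normalize(p) for p in (0.0, 0.5, 1.0)]
print(values)  # [-1.0, 0.0, 1.0]
```

With mean 0.5 and standard deviation 0.5 the inputs end up in [-1, 1], centered on zero.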

# Load the datasets (the training set is downloaded on first use)
train_dataset = datasets.MNIST(root='./data', train=True, transform=data_tf, download=True)
test_dataset = datasets.MNIST(root='./data', train=False, transform=data_tf)

# Wrap the datasets in DataLoaders to iterate over them in batches
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)
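As a quick sanity check on the loaders: MNIST has 60,000 training images and 10,000 test images, and `DataLoader` keeps the final partial batch by default (`drop_last=False`). The sketch below works out how many batches one pass over each loader yields with `batch_size=64`. It is plain arithmetic, not a call into PyTorch.

```python
import math

batch_size = 64
train_size, test_size = 60000, 10000  # MNIST split sizes

train_batches = math.ceil(train_size / batch_size)
test_batches = math.ceil(test_size / batch_size)
# Size of the final, partial training batch
last_train_batch = train_size - (train_batches - 1) * batch_size

print(train_batches, test_batches, last_train_batch)  # 938 157 32
```

So a loss message printed every 50 iterations appears 18 times per training epoch.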

# Build the network and define the loss function and the optimizer

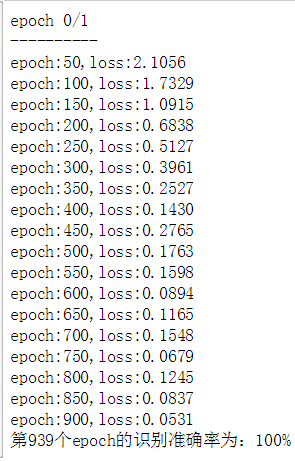

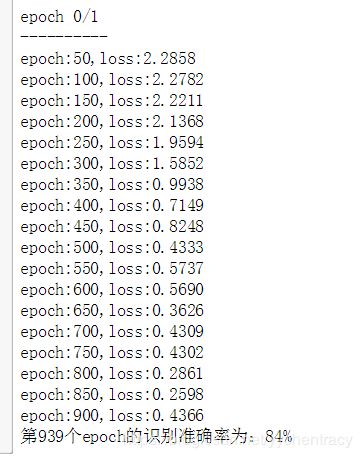

model = Lenet()  # use this line to train LeNet
# model = CNN()  # use this line instead to train the custom CNN

if torch.cuda.is_available():  # use CUDA acceleration when available
    model = model.cuda()

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=learning_rate)
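`nn.CrossEntropyLoss` takes the raw network outputs (logits) and the integer class label, and returns the negative log of the softmax probability assigned to the correct class. The sketch below computes the same quantity for a single sample in plain Python, to show why neither network needs a softmax layer at the end. The function `cross_entropy` is written for this illustration and handles one sample only.

```python
import math

def cross_entropy(logits, target):
    """-log(softmax(logits)[target]), computed in a numerically stable way."""
    m = max(logits)  # subtract the max before exponentiating to avoid overflow
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

# Ten equal logits: every class gets probability 1/10, so the loss is ln(10)
uniform_loss = cross_entropy([0.0] * 10, target=3)
# A large logit on the correct class gives a loss close to zero
confident_loss = cross_entropy([0.0, 8.0, 0.0], target=1)

print(round(uniform_loss, 4))  # 2.3026
print(confident_loss < 0.01)   # True
```

A freshly initialized 10-class network usually starts with a loss in the neighborhood of ln(10) ≈ 2.30, which is a useful reference point when reading the training log.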

n_epochs = 1

for epoch in range(n_epochs):
    total = 0
    running_loss = 0.0
    running_correct = 0
    print("epoch {}/{}".format(epoch + 1, n_epochs))
    print("-" * 10)
    # step counts batches; it is kept separate from the epoch counter
    for step, (img, label) in enumerate(train_loader, start=1):
        # Move the batch to the GPU when one is available
        # (wrapping tensors in Variable is unnecessary since PyTorch 0.4)
        if torch.cuda.is_available():
            img = img.cuda()
            label = label.cuda()
        out = model(img)  # forward pass
        loss = criterion(out, label)  # compute the loss
        optimizer.zero_grad()  # reset the gradients
        loss.backward()  # backpropagation
        optimizer.step()  # update the parameters
        running_loss += loss.item()
        if step % 50 == 0:
            print('step:{},loss:{:.4f}'.format(step, loss.item()))
        _, predicted = torch.max(out, 1)  # index of the largest logit
        total += label.size(0)  # number of images seen so far
        running_correct += (predicted == label).sum().item()  # correctly classified
    print('Training accuracy after epoch %d: %d%%' % (epoch + 1, 100 * running_correct / total))
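The accuracy printed above is measured on the training batches. To compare the two networks fairly, the same count can be made on held-out data such as `test_loader`. The following is a minimal sketch of such an evaluation pass, not part of the original post: the function name `evaluate` and the toy loader are assumptions made for illustration. `model.eval()` matters for the CNN because it switches batch norm to its running statistics, and `torch.no_grad()` skips gradient tracking.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

def evaluate(model, loader, device="cpu"):
    """Return the fraction of samples in loader that model classifies correctly."""
    model.eval()  # use running statistics in batch norm layers
    correct, total = 0, 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for img, label in loader:
            img, label = img.to(device), label.to(device)
            predicted = model(img).argmax(dim=1)  # class with the largest logit
            total += label.size(0)
            correct += (predicted == label).sum().item()
    return correct / total

# Toy stand-in so the sketch runs without downloading MNIST: one-hot inputs
# passed through an identity "model" are always classified correctly.
labels = torch.tensor([0, 1, 2, 1])
inputs = torch.eye(3)[labels]
toy_loader = DataLoader(TensorDataset(inputs, labels), batch_size=2)
toy_accuracy = evaluate(nn.Identity(), toy_loader)
print(toy_accuracy)  # 1.0
```

With the objects from this post, the call would look like `evaluate(model, test_loader, "cuda" if torch.cuda.is_available() else "cpu")`, run once for `Lenet` and once for `CNN`.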