Hadoop安装与配置(CentOS7、伪分布式、单节点)

目录

- 一、安装Linux虚拟机(系统:CentOS7)

- 二、配置主机

- 三、解压安装包

- 四、文件配置

- 五、Hadoop运行、访问与关闭

本文用到的安装包

jdk(Linux)提取码: tmxp

Hadoop安装包提取码: vcfg

一、安装Linux虚拟机(系统:CentOS7)

安装链接如下(主机网卡地址自定义)

Linux 虚拟机安装

二、配置主机

- 修改主机名

hostnamectl set-hostname hadoop101

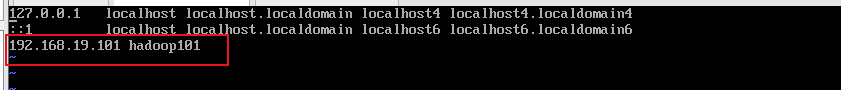

- 添加主机列表

vi /etc/hosts

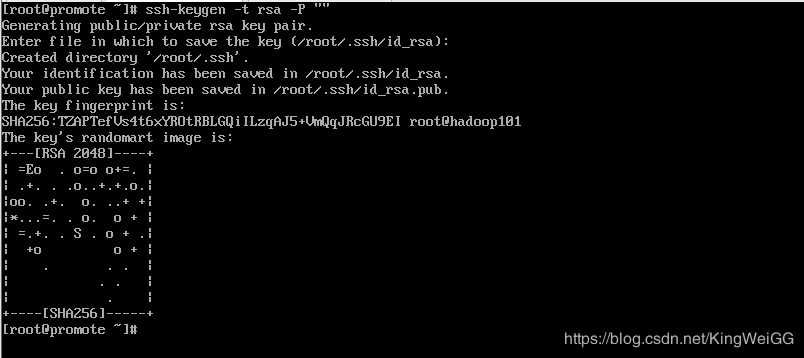

ssh-keygen -t rsa -P ""

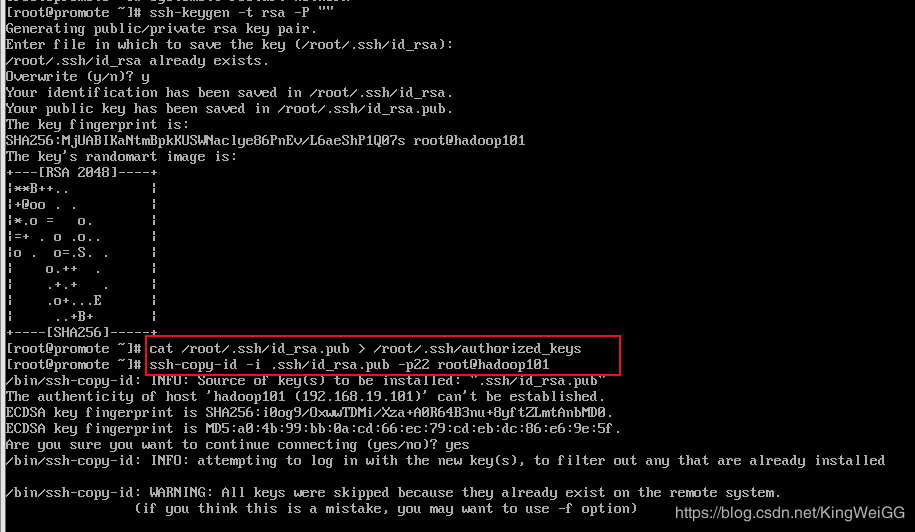

- 复制私钥到公钥

cat /root/.ssh/id_rsa.pub > /root/.ssh/autorized_keys

- 开启免密登录

ssh-copy-id -i .ssh/id_rsa.pub -p22 root@hadoop101

#chmod 600 /root/.ssh/autorized_keys

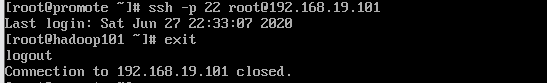

ssh -p 22 root@192.168.19.101

exit

三、解压安装包

- 将安装包放在opt目录下,并在opt目录下创建包soft

mkdir /opt/soft

- 将jdk与Hadoop解压到opt/soft目录

tar -zxvf jdk-8u221-linux-x64.tar.gz -C /opt/soft

tar -zxvf hadoop-2.6.0-cdh5.14.2.tar.gz -C /opt/soft

tar -xvf hadoop-native-64-2.6.0.tar -C /opt/soft

- 可以删除安装包

rm -rf *

- 切换到opt目录下

cd /opt/

- 对jdk1.8.0_221包与 hadoop-2.6.0-cdh5.14.2包进行改名

mv hadoop-2.6.0-cdh5.14.2/ hadoop260

mv jdk1.8.0_221/ java8

- 将关于lib的文件移到hadoop/lib/native文件夹下,并复制到hadoop/lib/文件下

mv lib* /opt/soft/hadoop260/lib/native/

cp /opt/soft/hadoop260/lib/native/lib* /opt/soft/hadoop260/lib/

四、文件配置

- 配置jdk环境变量与hadoop环境

vi /etc/profile

export JAVA_HOME=/opt/soft/java8

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/rt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar

export PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

export HADOOP_HOME=/opt/soft/hadoop260

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

- 使环境变量及时生效

source /etc/profile

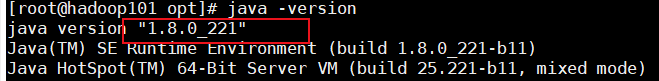

- 测试环境变量的配置是否成功

java -version

javac

echo $JAVA_HOME

- Hadoop文件配置(进入Hadoop文件目录下执行命令 cd /opt/soft/hadoop260/etc/hadoop)

- 配置hadoop-env.sh文件 (修改JAVA_HOME)

vi hadoop-env.sh

- 找到JAVA_HOME并更改(25行)

export JAVA_HOME=/opt/soft/java8

- 配置core-site.xml文件

vi core-site.xml

<!-- 默认的文件系统的名称。通常指定namenode的URI地址,包括主机和端口 -->

fs.defaultFS</name>

hdfs://192.168.19.101:9000</value>

</property>

<!-- 其他临时目录的父目录 -->

hadoop.tmp.dir</name>

/opt/soft/hadoop260/tmp</value>

</property>

<!-- 其他机器的root用户可访问 -->

hadoop.proxyuser.root.hosts</name>

*</value>

</property>

<!-- 其他root组下的用户都可以访问 -->

hadoop.proxyuser.root.groups</name>

*</value>

</property>

</configuration>

- 配置hdfs-site.xml文件

vi hdfs-site.xml

<!--指定dataNode存储block的副本数量-->

dfs.replication</name>

1</value>

</property>

<!--是否开启权限检查,建议开启-->

dfs.permissions.enabled</name>

false</value>

</property>

</configuration>

- 配置mapred-site.xml文件

注:将mapred-site.xml.template改名为mapred-site.xml或者复制一份mapred-site.xml.template并改名为mapred-site.xml

mv mapred-site.xml.template mapred-site.xml

vi mapred-site.xml

<!-- mapreduce的工作模式:yarn -->

mapreduce.framework.name</name>

yarn</value>

</property>

</configuration>

注:使用jobhistory,添加下面

<!-- mapreduce的工作地址 -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>hadoop101:10020</value>

</property>

<!-- web页面访问历史服务端口的配置 -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadoop101:19888</value>

</property>

- 配置yarn-site.xml文件

vi yarn-site.xml

<!-- Site specific YARN configuration properties -->

<!-- reducer获取数据方式 -->

yarn.nodemanager.aux-services</name>

mapreduce_shuffle</value>

</property>

<!-- 指定YARN的ResourceManager的地址 -->

yarn.resourcemanager.hostname</name>

hadoop101</value>

</property>

</configuration>

- 配置slaves(单节点可不配)

vi slaves

hadoop101

- 格式化HDFS

hadoop namenode -format

注:不报erro即成功,报错则根据erro进行文件修改后在格式化

五、Hadoop运行、访问与关闭

- 启动Hadoop(启动脚本和停止脚本在 /opt/soft/hadoop260/sbin 目录下)

- 启动

start-all.sh

- 启动历史服务(根据你在mapred-site.xml中是否添加jobhistory)

mr-jobhistory-daemon.sh start historyserver

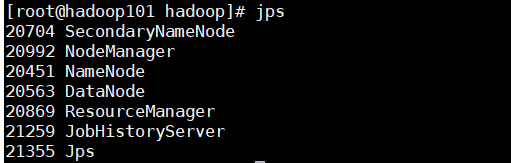

- 测试Hadoop是否启动成功

输入jps命令,若出现除jps进程的以下六个进程说明启动成功

注:如果不全,首先stop-all.sh 在查看日志,根据日志信息error修改对应的文件,然后删掉临时文件父目录/opt/soft/hadoop260/tmp与logs, 重新格式化 再启动 (如果存在进程正在启动中则干掉相应进程在重新启动)

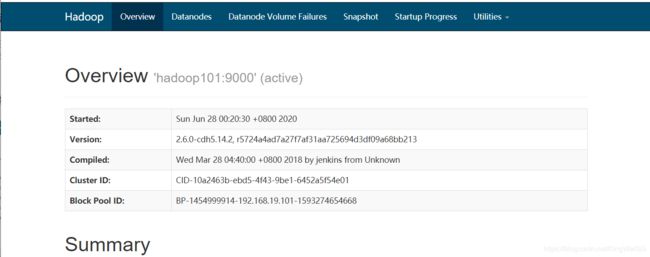

- HDFS页面:http://192.168.19.101:50070

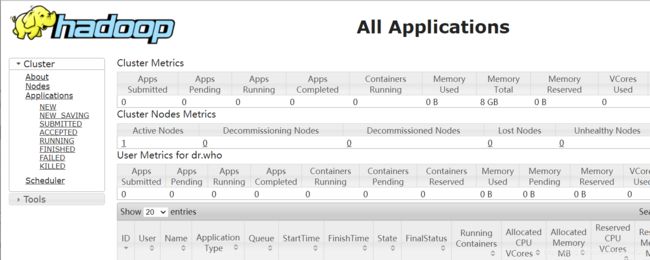

- YARN的管理界面:http://192.168.19.101:8088

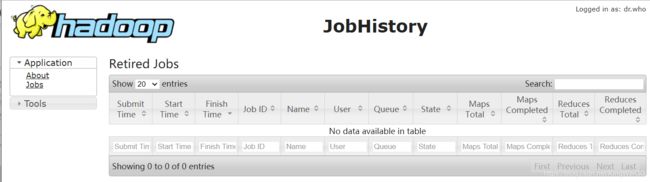

- web页面访问历史:http://192.168.19.101:19888/

- 关闭Hadoop

方式一:

- 关闭所有(如果要关闭单个进入sbin目录进行查看 cd /opt/soft/hadoop260/sbin/)

stop-all.sh

- 关闭历史服务

mr-jobhistory-daemon.sh stop historyserver

方式二(停止进程,但不建议容易丢失数据):

kill -9 [pid]

- 退出linux

poweroff

#或

#shutdown

注:

Hadoop集群、ZooKpeeper、HBase、Hive搭建(系统centos7.0)搭建