Setting up a Hadoop cluster, ZooKeeper, HBase, and Hive (on CentOS 7.0)

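Contents

- Prerequisites

- Hadoop cluster setup

- I. Clone the virtual machine

- II. Change the hostnames and host list

- III. Configure passwordless login

- IV. Edit the configuration files

- V. Run and check Hadoop

- ZooKeeper setup

- I. Configure ZooKeeper

- II. Start ZooKeeper

- HBase setup

- I. Configure HBase

- II. Start HBase

- Hive setup

- I. Install MySQL

- II. Configure Hive

- III. Start Hive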

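Required installation packages:

jdk-8u221-linux-x64.tar.gz (extraction code: tmxp)

MySQL-client-5.6.46-1.el7.x86_64.rpm (extraction code: wedy)

MySQL-server-5.6.46-1.el7.x86_64.rpm (extraction code: 6n7k)

hadoop2.6.0 (extraction code: ebui)

zookeeper3.4.6 (extraction code: 95ni)

hbase1.2.0 (extraction code: nh27)

hive1.1.0 (extraction code: 5p8f)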

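Prerequisites

Note: this article clones an existing virtual machine; you can also set up Hadoop from scratch on each machine

- Before building the cluster

- Prepare one virtual machine that already has Hadoop set up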

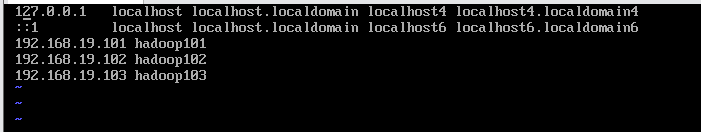

Hadoop installation and configuration

- Add the host list (the IP address and hostname of every machine)

vi /etc/hosts

- Delete the tmp directory that holds Hadoop's temporary files, /opt/soft/hadoop260/tmp

rm -rf /opt/soft/hadoop260/tmp

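Extract the archives into /opt/soft (adjust the file names to match the archives you downloaded)

tar -zxvf hadoop-2.6.0-cdh5.14.2.tar.gz -C /opt/soft

tar -zxvf hbase-1.2.0-cdh5.14.2.tar.gz -C /opt/soft

tar -zxvf hive-1.1.0-cdh5.14.2.tar.gz -C /opt/soft

tar -zxvf jdk-8u221-linux-x64.tar.gz -C /opt/soft

tar -zxvf zookeeper-3.4.6.tar.gz -C /opt/soft

Rename the extracted directories (run inside /opt/soft)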

mv hadoop-2.6.0-cdh5.14.2 hadoop260

mv hbase-1.2.0-cdh5.14.2 hbase120

mv hive-1.1.0-cdh5.14.2 hive110

mv jdk1.8.0_221 java8

mv zookeeper-3.4.6 zookeeper

- To configure HBase and Hive, extend the JDK environment variables (add HBASE_HOME and HIVE_HOME, and append their bin directories to PATH)

vi /etc/profile

export JAVA_HOME=/opt/soft/java8

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar

export PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

export HADOOP_HOME=/opt/soft/hadoop260

export HBASE_HOME=/opt/soft/hbase120

export HIVE_HOME=/opt/soft/hive110

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$HBASE_HOME/bin:$HIVE_HOME/bin

- Apply the environment variables

source /etc/profile
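After sourcing the profile, it can help to confirm that each *_HOME variable points at a real directory. The helper below, report_missing_dirs (a hypothetical name, not part of any of these packages), is a small sketch of that check; it only prints the paths that do not exist.

```shell
#!/bin/sh
# Sketch: print every argument that is not an existing directory.
report_missing_dirs() {
    for dir in "$@"; do
        [ -d "$dir" ] || printf 'missing: %s\n' "$dir"
    done
}

# The variables come from /etc/profile; an unset one is reported as "<unset NAME>".
report_missing_dirs \
    "${JAVA_HOME:-<unset JAVA_HOME>}" \
    "${HADOOP_HOME:-<unset HADOOP_HOME>}" \
    "${HBASE_HOME:-<unset HBASE_HOME>}" \
    "${HIVE_HOME:-<unset HIVE_HOME>}"
```

No output means all four directories were found.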

Hadoop cluster setup

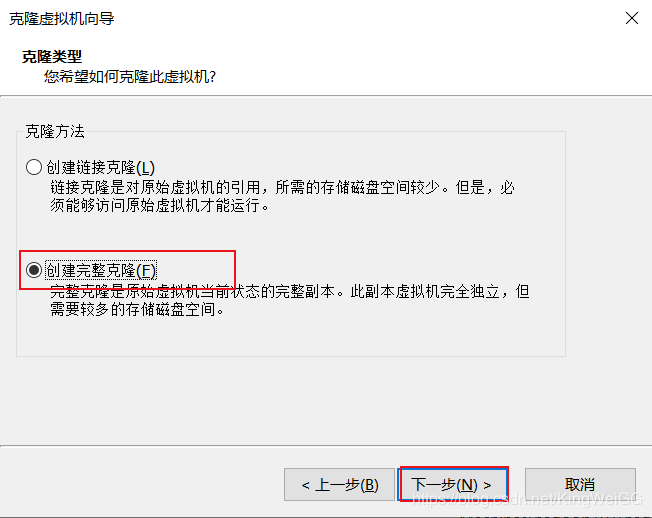

一、复制虚拟机

- 搭建集群(3台)

- 注:所有步骤最好同步进行

- 克隆虚拟机(虚拟机必须是关闭状态)

-

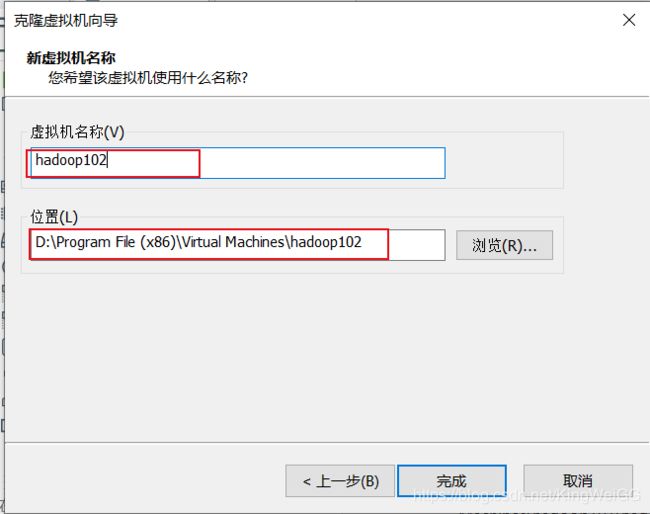

启动3台虚拟机

-

修改两台克隆的ip地址并重启网络(更改IPADDR)

vi /etc/sysconfig/network-scripts/ifcfg-ens33

- Restart the network with this command

systemctl restart network

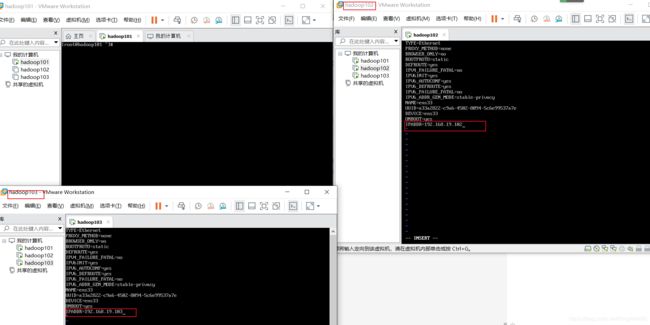
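Changing IPADDR by hand works, but the edit is a one-line substitution. The sketch below shows it with a hypothetical helper, set_ipaddr, run against a scratch file rather than the real ifcfg-ens33; the sample file contents and the 192.168.19.101 starting address are assumptions for illustration.

```shell
#!/bin/sh
# Sketch: replace the IPADDR line of an ifcfg-style file.
set_ipaddr() {
    # $1 = config file, $2 = new IP address
    sed "s/^IPADDR=.*/IPADDR=$2/" "$1" > "$1.new" && mv "$1.new" "$1"
}

# Demo on a scratch file (assumed contents, not a full ifcfg-ens33).
demo=$(mktemp)
printf 'BOOTPROTO=static\nIPADDR=192.168.19.101\nONBOOT=yes\n' > "$demo"
set_ipaddr "$demo" 192.168.19.102
grep '^IPADDR=' "$demo"
```

On a real clone the network still has to be restarted afterwards for the new address to take effect.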

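II. Change the hostnames and host list

- Set the hostname on the two clones (run the first command on one clone and the second on the other)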

hostnamectl set-hostname hadoop102

hostnamectl set-hostname hadoop103

- Check the hostname

hostname

or

vi /etc/hostname

- Check the host list

vi /etc/hosts

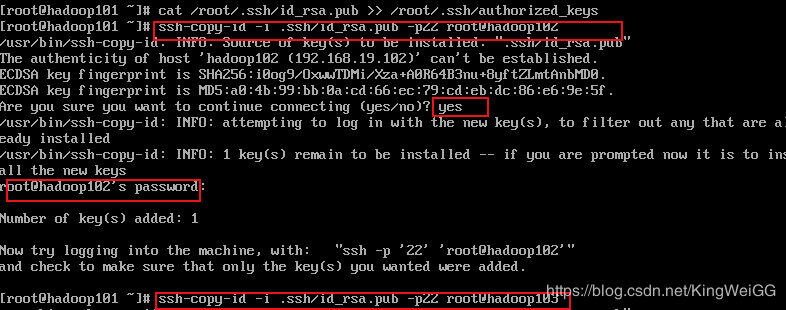
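The host list on every machine needs one line per node. A minimal sketch of adding those lines without creating duplicates is below; add_host_entry is a hypothetical helper, and the address 192.168.19.101 for hadoop101 is an assumption (only the .102 and .103 addresses appear in this article). It writes to a scratch file so it can be tried safely; set HOSTS_FILE=/etc/hosts to apply it for real.

```shell
#!/bin/sh
# Sketch: append "IP hostname" lines to a hosts file only if the hostname is missing.
# HOSTS_FILE defaults to a scratch file; set HOSTS_FILE=/etc/hosts to apply for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry() {
    # $1 = hosts file, $2 = IP address, $3 = hostname
    if ! grep -qwF -- "$3" "$1" 2>/dev/null; then
        printf '%s %s\n' "$2" "$3" >> "$1"
    fi
}

add_host_entry "$HOSTS_FILE" 192.168.19.101 hadoop101   # assumed address
add_host_entry "$HOSTS_FILE" 192.168.19.102 hadoop102
add_host_entry "$HOSTS_FILE" 192.168.19.103 hadoop103
cat "$HOSTS_FILE"
```

Running it a second time against the same file adds nothing, which makes it safe to repeat on each machine.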

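III. Configure passwordless login

- Generate a key pair, pressing Enter at the prompt to accept the default file (run on every virtual machine):

ssh-keygen -t rsa -P ""

- Append the public key to authorized_keys (run on every virtual machine):

cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

- Copy the public key to the other machines to enable passwordless remote login (run on every virtual machine, skipping its own hostname)

For example, on hadoop101:

ssh-copy-id -i ~/.ssh/id_rsa.pub -p22 root@hadoop102

ssh-copy-id -i ~/.ssh/id_rsa.pub -p22 root@hadoop103

- Test that the connection works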

ssh root@hadoop102

ssh root@hadoop103

exit

exit

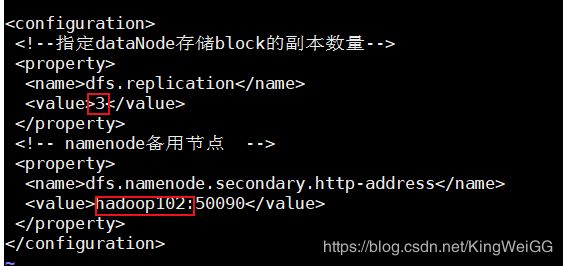
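Because every machine copies its key to every other machine, the per-host commands can be generated rather than typed. The sketch below prints them as a dry run; peers_of and CLUSTER_HOSTS are illustrative names, and the host list assumes the three hostnames used in this article.

```shell
#!/bin/sh
# Sketch: list every cluster host except the one given, then print the
# ssh-copy-id command for each of them.
CLUSTER_HOSTS="hadoop101 hadoop102 hadoop103"

peers_of() {
    # $1 = this machine's hostname
    for host in $CLUSTER_HOSTS; do
        [ "$host" = "$1" ] || printf '%s\n' "$host"
    done
}

# Dry run: drop the "echo" to actually copy the key.
for peer in $(peers_of "$(hostname)"); do
    echo ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" -p 22 "root@$peer"
done
```

The same script can be run unchanged on all three machines, since each one excludes itself by hostname.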

IV. Edit the configuration files

- Edit the Hadoop configuration files on the master

- Change into the etc/hadoop directory:

cd /opt/soft/hadoop260/etc/hadoop

- Edit the hdfs-site.xml file:

vi hdfs-site.xml

- Edit the slaves file:

vi slaves

- Edit the Hadoop configuration files on the worker nodes

- Method 1: repeat the steps above on each worker

(omitted)

- Method 2: copy the edited files from the master to the workers

scp hdfs-site.xml root@192.168.19.102:/opt/soft/hadoop260/etc/hadoop/hdfs-site.xml

scp hdfs-site.xml root@192.168.19.103:/opt/soft/hadoop260/etc/hadoop/hdfs-site.xml

scp slaves root@192.168.19.102:/opt/soft/hadoop260/etc/hadoop/slaves

scp slaves root@192.168.19.103:/opt/soft/hadoop260/etc/hadoop/slaves

- Format HDFS (on the master only)

hadoop namenode -format

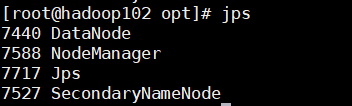

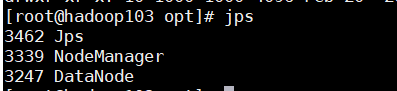

V. Run and check Hadoop

- Start

start-all.sh

- Stop

stop-all.sh
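After start-all.sh has run, jps shows which Java daemons are up on a node. The sketch below compares jps-style output against an expected list; missing_daemons is a hypothetical helper, the sample listing is made up for illustration, and the expected names assume the master also runs a DataNode and NodeManager, which depends on your slaves file.

```shell
#!/bin/sh
# Sketch: read jps-style output ("PID Name" per line) on stdin and print
# each expected daemon name that does not appear in it.
missing_daemons() {
    listing=$(cat)
    for name in "$@"; do
        printf '%s\n' "$listing" \
            | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }' \
            || printf '%s\n' "$name"
    done
}

# Sample listing for illustration; on a real node use: jps | missing_daemons ...
sample='2101 NameNode
2350 SecondaryNameNode
2502 ResourceManager
2899 Jps'
printf '%s\n' "$sample" | missing_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager
```

With the sample listing, the script reports DataNode and NodeManager as missing; an empty result means every expected daemon was found.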

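ZooKeeper setup

Note: use at least 3 virtual machines, an odd number of them, and keep their time zones synchronized

The steps below assume the Hadoop setup above is complete and the packages have been extracted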
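The odd-number advice follows from majority voting: a ZooKeeper ensemble stays available only while more than half of its servers are up. A small sketch of that arithmetic (the function names are illustrative):

```shell
#!/bin/sh
# Sketch: majority size and tolerated failures for an ensemble of N servers.
quorum_size()        { echo $(( $1 / 2 + 1 )); }
tolerated_failures() { echo $(( ($1 - 1) / 2 )); }

for n in 3 4 5; do
    echo "$n servers: quorum $(quorum_size "$n"), tolerates $(tolerated_failures "$n") failure(s)"
done
```

Four servers tolerate no more failures than three, so an even count adds a machine without adding resilience.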

I. Configure ZooKeeper

- Edit the zoo_sample.cfg configuration file

- Change into the zookeeper/conf/ directory

cd /opt/soft/zookeeper/conf/

- Edit the file

vi zoo_sample.cfg

# The number of milliseconds of each tick

tickTime=2000

#0 removes the limit on concurrent connections per client

maxClientCnxns=0

# The number of ticks that the initial

# synchronization phase can take

#ticks allowed for followers to connect to and sync with the leader

initLimit=50

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

#data directory

dataDir=/opt/soft/zookeeper/zkData

# the port at which the clients will connect

clientPort=2181

#server list

server.1=hadoop101:2888:3888

server.2=hadoop102:2888:3888

server.3=hadoop103:2888:3888

- Rename the configuration file

mv zoo_sample.cfg zoo.cfg

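- Copy the configuration file to the ZooKeeper directory on the other machines

scp zoo.cfg root@hadoop102:/opt/soft/zookeeper/conf/

scp zoo.cfg root@hadoop103:/opt/soft/zookeeper/conf/

- Create the data directory zkData under the ZooKeeper directory and add a myid file containing this node's server number from zoo.cfg, so hadoop101 gets 1 (do this on every virtual machine, each with its own number)

mkdir /opt/soft/zookeeper/zkData

echo "1" > /opt/soft/zookeeper/zkData/myid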

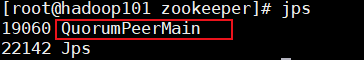
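Each node's myid has to match its server.N line, so the number can be read from zoo.cfg instead of typed per machine. The sketch below does that lookup with a hypothetical helper, myid_for_host, on a scratch file holding the same three server lines; it assumes short hostnames without dots, as used in this article.

```shell
#!/bin/sh
# Sketch: look up a host's server number from the server.N=host:port:port
# lines of a zoo.cfg-style file.
myid_for_host() {
    # $1 = path to zoo.cfg, $2 = hostname
    awk -F'[.=:]' -v h="$2" '$1 == "server" && $3 == h { print $2 }' "$1"
}

# Demo on a scratch file holding the three server lines.
cfg=$(mktemp)
printf 'server.1=hadoop101:2888:3888\nserver.2=hadoop102:2888:3888\nserver.3=hadoop103:2888:3888\n' > "$cfg"
myid_for_host "$cfg" hadoop102

# On a real node (paths assume the /opt/soft layout):
# myid_for_host /opt/soft/zookeeper/conf/zoo.cfg "$(hostname)" > /opt/soft/zookeeper/zkData/myid
```

For hadoop102 the lookup yields 2, which is the value that machine's myid file should contain.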

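II. Start ZooKeeper

- Run zkServer.sh start from the ZooKeeper directory on every node

./bin/zkServer.sh start

- Check that the nodes connected successfully

tail zookeeper.out

- Run jps and check that a QuorumPeerMain process is listed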

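HBase setup

Note: this assumes the HBase package has been extracted and HBASE_HOME has been set and added to PATH

I. Configure HBase

- Change into the conf directory under the HBase directory

cd /opt/soft/hbase120/conf/

- Configure hbase-site.xml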

vi hbase-site.xml

<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://hadoop101:9000/hbase</value>
    <description>The directory shared by region servers.</description>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.master.port</name>
    <value>60000</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>hadoop101,hadoop102,hadoop103</value>
  </property>
  <property>
    <name>hbase.regionserver.handler.count</name>
    <value>300</value>
  </property>
  <property>
    <name>hbase.hstore.blockingStoreFiles</name>
    <value>70</value>
  </property>
  <property>
    <name>zookeeper.session.timeout</name>
    <value>60000</value>
  </property>
  <property>
    <name>hbase.regionserver.restart.on.zk.expire</name>
    <value>true</value>
    <description>
      Zookeeper session expired will force regionserver exit.
      Enable this will make the regionserver restart.
    </description>
  </property>
  <property>
    <name>hbase.replication</name>
    <value>false</value>
  </property>
  <property>
    <name>hfile.block.cache.size</name>
    <value>0.4</value>
  </property>
  <property>
    <name>hbase.regionserver.global.memstore.upperLimit</name>
    <value>0.35</value>
  </property>
  <property>
    <name>hbase.hregion.memstore.block.multiplier</name>
    <value>8</value>
  </property>
  <property>
    <name>hbase.server.thread.wakefrequency</name>
    <value>100</value>
  </property>
  <property>
    <name>hbase.master.distributed.log.splitting</name>
    <value>false</value>
  </property>
  <property>
    <name>hbase.regionserver.hlog.splitlog.writer.threads</name>
    <value>3</value>
  </property>
  <!-- Note: hbase.hstore.blockingStoreFiles is set twice in this file (70 above, 20 here); keep only the value you intend -->
  <property>
    <name>hbase.hstore.blockingStoreFiles</name>
    <value>20</value>
  </property>
  <property>
    <name>hbase.hregion.memstore.flush.size</name>
    <value>134217728</value>
  </property>
  <property>
    <name>hbase.hregion.memstore.mslab.enabled</name>
    <value>true</value>
  </property>
</configuration>

- Configure hbase-env.sh

vi hbase-env.sh

#line 28

export JAVA_HOME=/opt/soft/java8

#line 31: location of the Hadoop configuration files

export HBASE_CLASSPATH=/opt/soft/hadoop260/etc/hadoop

#line 34

export HBASE_HEAPSIZE=4000

#line 37: uncomment

export HBASE_OFFHEAPSIZE=1G

#line 44: add

export HBASE_OPTS="-Xmx4g -Xms4g -Xmn128m -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70 -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -Xloggc:$HBASE_HOME/logs/gc-$(hostname)-hbase.log"

#line 121: uncomment

export HBASE_PID_DIR=/var/hadoop/pids

#line 130

export HBASE_MANAGES_ZK=false

- Configure log4j.properties

vi log4j.properties

#line 18: log at WARN level and above

hbase.root.logger=WARN,console

#line 94

log4j.logger.org.apache.hadoop.hbase=WARN

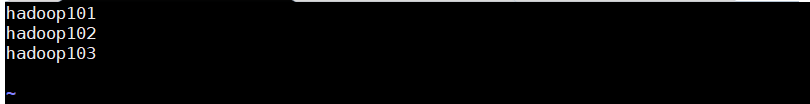

- Configure regionservers

vi regionservers

- Add every hostname

hadoop101

hadoop102

hadoop103

- Copy the edited files to the other worker nodes

scp hbase-site.xml root@hadoop102:/opt/soft/hbase120/conf/hbase-site.xml

scp hbase-site.xml root@hadoop103:/opt/soft/hbase120/conf/hbase-site.xml

scp hbase-env.sh root@hadoop102:/opt/soft/hbase120/conf/hbase-env.sh

scp hbase-env.sh root@hadoop103:/opt/soft/hbase120/conf/hbase-env.sh

scp log4j.properties root@hadoop102:/opt/soft/hbase120/conf/log4j.properties

scp log4j.properties root@hadoop103:/opt/soft/hbase120/conf/log4j.properties

scp regionservers root@hadoop102:/opt/soft/hbase120/conf/regionservers

scp regionservers root@hadoop103:/opt/soft/hbase120/conf/regionservers

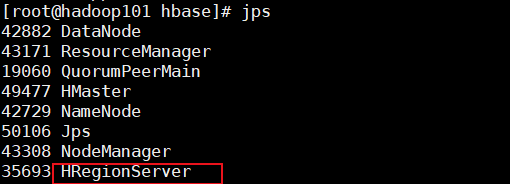
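Copying four files to two hosts is eight near-identical commands, which a nested loop can generate. The sketch below prints them as a dry run; print_scp_commands, WORKERS, and CONF_DIR are illustrative names, and CONF_DIR assumes the /opt/soft/hbase120 layout from the Prerequisites section.

```shell
#!/bin/sh
# Sketch: print one scp command per (file, host) pair.
WORKERS="hadoop102 hadoop103"
CONF_DIR="/opt/soft/hbase120/conf"   # assumed install layout

print_scp_commands() {
    # arguments = file names inside CONF_DIR
    for file in "$@"; do
        for host in $WORKERS; do
            echo "scp $CONF_DIR/$file root@$host:$CONF_DIR/$file"
        done
    done
}

# Dry run; pipe the output to "sh" to execute the copies.
print_scp_commands hbase-site.xml hbase-env.sh log4j.properties regionservers
```

Changing CONF_DIR and the file names gives the equivalent commands for the Hadoop and ZooKeeper configuration files.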

II. Start HBase

- Start it from the HBase directory

./bin/start-hbase.sh

- Run jps and check that the HRegionServer process appears

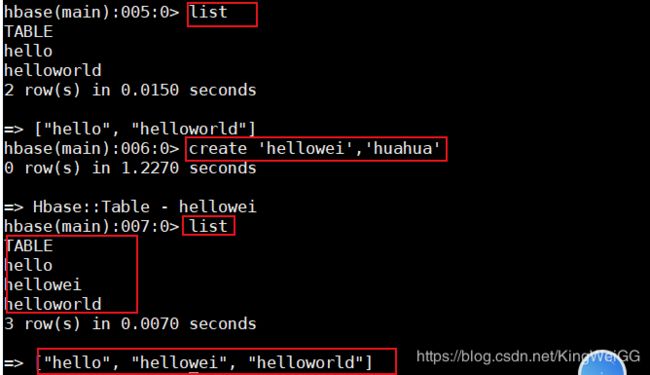

- Run hbase shell to use HBase

hbase shell

- Type quit to leave the HBase shell

- Stop HBase (from the HBase directory)

./bin/stop-hbase.sh

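Hive setup

Note: Hive's statements are much like MySQL's; Hive is used mainly because it makes querying convenient

I. Install MySQL

- Check for conflicting mariadb packages and remove whichever ones the query reports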

rpm -qa | grep mariadb

rpm -e mariadb-libs-5.5.64-1.el7.x86_64 --nodeps

- Change to the /opt directory

cd /opt

- Use yum to download and install the dependencies: net-tools, perl, autoconf

yum install -y net-tools

yum install -y perl

yum install -y autoconf

- Install the client:

rpm -ivh MySQL-client-5.6.46-1.el7.x86_64.rpm

- Install the server:

rpm -ivh MySQL-server-5.6.46-1.el7.x86_64.rpm

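- Because the initial root password is not known yet, edit /usr/my.cnf and, under [mysqld], add skip-grant-tables (skip privilege checks) to allow login without a password, and also set the character set and case-insensitive table names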

vi /usr/my.cnf

[client]

default-character-set = utf8

[mysqld]

skip-grant-tables

character_set_server = utf8

collation_server = utf8_general_ci

lower_case_table_names = 1

- Start the MySQL service

service mysql start

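- Run mysql at the shell to open the MySQL prompt, then run use mysql to switch to the mysql database

- Run the statement below to change the root password, then type quit to leave the MySQL prompt

update user set password=password('ok') where user='root';

- Edit /usr/my.cnf again and remove or comment out the passwordless setting (#skip-grant-tables), then save and exit

- Restart the MySQL service (service mysql restart); the service must be restarted after every configuration change

- Log in to MySQL with the username and password: (mysql -uroot -p)

- Set the initial password: (set password=password('ok');)

- Create a bigdata user and grant it privileges

#create the bigdata user

mysql> create user 'bigdata'@'hadoop101' IDENTIFIED BY 'ok';

#create the hive_metadata database

mysql> create database hive_metadata;

#grant privileges

mysql> grant all privileges on *.* to 'bigdata'@'hadoop101';

#flush so the change takes effect immediately

mysql> flush privileges;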

II. Configure Hive

Note: this assumes the Hive package has been extracted and HIVE_HOME has been set and added to PATH

- Change into the Hive directory

cd /opt/soft/hive110

- Configure hive-env.sh.template

- Rename it

mv conf/hive-env.sh.template conf/hive-env.sh

- Edit it

vi conf/hive-env.sh

#line 49

export HADOOP_HOME=/opt/soft/hadoop260

#line 52

export HIVE_CONF_DIR=/opt/soft/hive110/conf

#line 56

export HIVE_AUX_JARS_PATH=/opt/soft/hive110/lib

#line 57

export JAVA_HOME=/opt/soft/java8

- Configure hive-site.xml

vi conf/hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/opt/soft/hive110/warehouse</value>
  </property>
  <property>
    <name>hive.metastore.local</name>
    <value>true</value>
  </property>
  <!-- For a remote MySQL database, put its IP address or hostname here -->
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://127.0.0.1:3306/hive_metadata?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>ok</value>
  </property>
</configuration>

- Move the MySQL connector jar (mysql-connector-java-5.1.48-bin.jar) into Hive's lib directory

mv /opt/mysql-connector-java-5.1.48-bin.jar /opt/soft/hive110/lib/

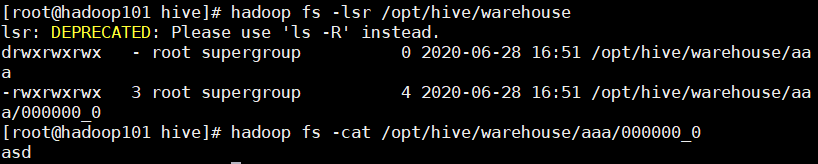

- Create the warehouse directory in HDFS and grant permissions on it

hadoop fs -mkdir -p /opt/soft/hive110/warehouse

hadoop fs -chmod -R 777 /opt/soft/hive110/

- Initialize the Hive metastore schema

schematool -dbType mysql -initSchema

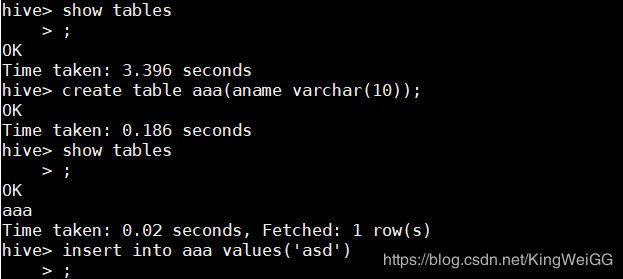

III. Start Hive

- Start Hive

nohup hive --service metastore &

hive

- Use beeline

This requires the hiveserver2 service to be running

nohup hive --service hiveserver2 &

#or run the command directly

#hiveserver2
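With hiveserver2 running, beeline connects over JDBC. The sketch below builds the connection URL and prints the beeline command as a dry run; hs2_url is a hypothetical helper, and connecting to hadoop101 as root on the default port 10000 is an assumption about this cluster rather than something the article states.

```shell
#!/bin/sh
# Sketch: build the JDBC URL that beeline uses to reach HiveServer2.
hs2_url() {
    # $1 = host, $2 = port (HiveServer2 listens on 10000 by default)
    echo "jdbc:hive2://$1:${2:-10000}"
}

# Dry run: drop the "echo" to open a beeline session.
echo beeline -u "$(hs2_url hadoop101)" -n root
```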

- Run Hive statements

- Startup order for the whole system

hadoop -> zookeeper -> mysql -> hive (once you see the hive prompt, everything is working)

start-all.sh

./bin/zkServer.sh start    #run from the zookeeper directory

service mysql start

hive

Note: see "Hadoop installation and configuration"