Implementing the SMO Algorithm for Support Vector Machines

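I finally worked through the theory behind support vector machines and wrote the SMO implementation myself. It felt really complicated; luckily I had these excellent write-ups to refer to:

The best derivation of the theory and formulas that I found: https://blog.csdn.net/weixin_41090915/article/details/79177267

A detailed explanation of the SMO algorithm: https://www.cnblogs.com/jerrylead/archive/2011/03/18/1988419.html

I mainly followed these two articles and wrote the program on the mobile customer churn data from one of my earlier projects. The program's logic follows those authors' approach.

All the details are in their articles. I think they are extremely well written and thorough!

They are well worth revisiting every now and then.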

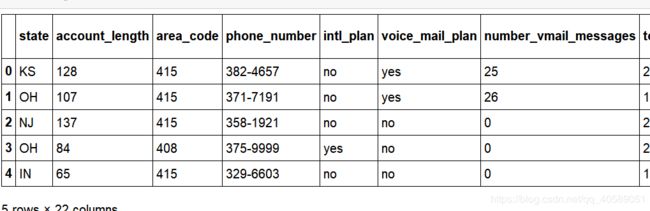

This is the code I wrote by following those articles. The data looks like the screenshot above; I used only two of its features.

import random

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# Notebook only: render matplotlib figures inline (requires IPython/Jupyter)
get_ipython().run_line_magic('matplotlib', 'inline')

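# Read the data file
file_name = r"G:\laioffer\LaiData_MLProjects\LaiData_MLProjects\data\churn.csv"
file = pd.read_csv(file_name)
file.head()

# Use two features and only the first 10 rows as a small training set
features = ['total_intl_charge', 'number_customer_service_calls']
# DataFrame.as_matrix() was removed in pandas 1.0; to_numpy() replaces it
train_data = file[features][:10].to_numpy()
train_target = file['churned'][:10].to_numpy()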
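The SMO derivation assumes class labels y in {-1, +1}, while the format of the `churned` column is not shown in this post. As a minimal sketch, assuming the labels are booleans or 0/1 values, they could be mapped like this. The helper name `to_signed_labels` is hypothetical and not part of the original code.

```python
import numpy as np

def to_signed_labels(values):
    """Map boolean or 0/1 class labels to -1.0 / +1.0 (hypothetical helper)."""
    # Assumption: values are booleans or 0/1 numbers.
    # String labels would need an explicit mapping before this step.
    flags = np.asarray(values).astype(bool)
    return np.where(flags, 1.0, -1.0)

signed = to_signed_labels([True, False, 1, 0])
print(signed)
```

If the column turns out to hold strings instead, map them to booleans explicitly before calling a helper like this.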

# Define select_jrand: given i and m, return a random integer j in [0, m) with j != i. It is used later on.
def select_jrand(i, m):
    j = i
    while j == i:
        j = int(random.uniform(0, m))
    return j

# Define clip_alpha: clip aj so that it stays between L and H
def clip_alpha(aj, H, L):
    if aj > H:
        aj = H
    if L > aj:
        aj = L
    return aj
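Where do L and H come from? When two multipliers are updated together, each must stay in [0, C] and the sum y_i*alpha_i + y_j*alpha_j must stay constant, so the new alpha_j is confined to a segment [L, H]. Here is a small sketch of that computation on plain floats. The names `alpha_bounds` and `clip` are illustrative only; the formulas are the same ones used for L and H in the training loop of this post.

```python
def alpha_bounds(alpha_i, alpha_j, y_i, y_j, C):
    """Return (low, high), the feasible range for the updated alpha_j."""
    if y_i != y_j:
        # Different labels: alpha_j - alpha_i stays constant
        low = max(0.0, alpha_j - alpha_i)
        high = min(C, C + alpha_j - alpha_i)
    else:
        # Same labels: alpha_j + alpha_i stays constant
        low = max(0.0, alpha_j + alpha_i - C)
        high = min(C, alpha_j + alpha_i)
    return low, high

def clip(value, low, high):
    """Clip value into [low, high]."""
    return min(high, max(low, value))

low, high = alpha_bounds(0.2, 0.5, 1, -1, C=0.6)
clipped = clip(0.75, low, high)   # an unclipped update that overshoots high
print(low, high, clipped)
```

If `low` equals `high`, alpha_j has no room to move, which is why the training loop skips such a pair.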

def smo_simple(data_mat, class_label, C, toler, max_iter):
    # Initialization before the loop
    # Note: the SMO derivation assumes labels in {-1, +1}
    # np.asmatrix is used because the np.mat alias was removed in NumPy 2.0
    data_mat = np.asmatrix(data_mat)
    # Column vector of labels; reshape(-1, 1) avoids hard-coding the sample count
    label_mat = np.asmatrix(class_label).reshape(-1, 1)
    b = 0
    m, n = np.shape(data_mat)
    alphas = np.zeros((m, 1))
    iter = 0
    while iter < max_iter:
        # Initialization for each pass over the samples
        alpha_pairs_changed = 0
        for i in range(m):
            # Part 1: compute the error Ei and check whether alpha_i violates the KKT conditions
            WT_i = np.dot(np.multiply(alphas, label_mat).T, data_mat)
            f_xi = float(np.dot(WT_i, data_mat[i,:].T)) + b
            Ei = f_xi - float(label_mat[i])
            if ((label_mat[i]*Ei < -toler) and (alphas[i] < C)) or ((label_mat[i]*Ei > toler) and (alphas[i] > 0)):
                # Pick a second multiplier at random and compute its error Ej
                j = select_jrand(i, m)
                WT_j = np.dot(np.multiply(alphas, label_mat).T, data_mat)
                f_xj = float(np.dot(WT_j, data_mat[j,:].T)) + b
                Ej = f_xj - float(label_mat[j])
                alpha_iold = alphas[i].copy()
                alpha_jold = alphas[j].copy()
                # Part 2: bounds L and H that keep alpha_j inside [0, C]
                if label_mat[i] != label_mat[j]:
                    L = max(0, alphas[j] - alphas[i])
                    H = min(C, C + alphas[j] - alphas[i])
                else:
                    L = max(0, alphas[j] + alphas[i] - C)
                    H = min(C, alphas[j] + alphas[i])
                if H == L:
                    continue
                # Part 3: update alpha_j along the constraint, clip it, then update alpha_i
                eta = 2.0 * data_mat[i,:]*data_mat[j,:].T - data_mat[i,:]*data_mat[i,:].T - data_mat[j,:]*data_mat[j,:].T
                if eta >= 0:
                    continue
                # Only the step label_j*(Ei - Ej) is divided by eta, not alpha_j itself
                alphas[j] = alphas[j] - label_mat[j]*(Ei - Ej)/eta
                alphas[j] = clip_alpha(alphas[j], H, L)
                if abs(alphas[j] - alpha_jold) < 0.00001:
                    continue
                alphas[i] = alphas[i] + label_mat[j]*label_mat[i]*(alpha_jold - alphas[j])
                # Part 4: update the bias b
                b1 = b - Ei + label_mat[i]*(alpha_iold - alphas[i])*np.dot(data_mat[i,:], data_mat[i,:].T) + label_mat[j]*(alpha_jold - alphas[j])*np.dot(data_mat[i,:], data_mat[j,:].T)
                b2 = b - Ej + label_mat[i]*(alpha_iold - alphas[i])*np.dot(data_mat[i,:], data_mat[j,:].T) + label_mat[j]*(alpha_jold - alphas[j])*np.dot(data_mat[j,:], data_mat[j,:].T)
                if (0 < alphas[i]) and (C > alphas[i]):
                    b = b1
                elif (0 < alphas[j]) and (C > alphas[j]):
                    b = b2
                else:
                    b = (b1 + b2)/2.0
                alpha_pairs_changed += 1
        # Count only consecutive passes in which no alpha pair changed
        if alpha_pairs_changed == 0:
            iter += 1
        else:
            iter = 0
    return b, alphas


# Train with C=0.6 and tolerance 0.001; stop after 5 consecutive passes with no alpha updates
b, alphas = smo_simple(train_data, train_target, 0.6, 0.001, 5)
print(b, alphas)
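The printed `b` and `alphas` are not a classifier yet. For a linear SVM the weight vector is w = sum_i alpha_i * y_i * x_i, and the samples with alpha_i > 0 are the support vectors. The sketch below shows that step on a hand-made two-point example, not on the churn data. The function name `linear_svm_from_alphas` and the toy values are assumptions made for illustration.

```python
import numpy as np

def linear_svm_from_alphas(X, y, alphas, b):
    """Recover w from the dual variables and predict with sign(w.x + b)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float).ravel()
    alphas = np.asarray(alphas, dtype=float).ravel()
    b = np.asarray(b, dtype=float).item()      # also accepts a 1x1 matrix
    w = (alphas * y) @ X                       # w = sum_i alpha_i * y_i * x_i
    scores = X @ w + b
    predictions = np.where(scores >= 0, 1, -1)
    support_idx = np.flatnonzero(alphas > 1e-8)  # indices of support vectors
    return w, predictions, support_idx

# Toy problem: two points on the x-axis, labels +1 and -1.
# alpha = 0.5 for each point and b = 0 give w = (1, 0) with margin 1.
X_toy = np.array([[1.0, 0.0], [-1.0, 0.0]])
y_toy = np.array([1, -1])
alphas_toy = np.array([[0.5], [0.5]])
w, preds, sv = linear_svm_from_alphas(X_toy, y_toy, alphas_toy, 0.0)
print(w, preds, sv)
```

The same function should accept the `alphas` and `b` returned by `smo_simple`, provided the labels passed in are -1/+1.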

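It really was hard to understand all of this on my own. Later on I switched to the kernel-function approach to find the support vectors. The support vector machine in the sklearn library supports kernel functions out of the box and is very convenient to use!

Implementing a support vector machine with sklearn: https://blog.csdn.net/qq_40589051/article/details/82179342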
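To give a feel for how short the sklearn route is, here is a minimal sketch using `sklearn.svm.SVC` with an RBF kernel. The toy points are made up for illustration and are not the churn data. C=0.6 simply mirrors the value used above, and `gamma="scale"` is just the library default.

```python
import numpy as np
from sklearn.svm import SVC

# Made-up toy data: two well-separated clusters with labels -1 and +1
X_toy = np.array([[0, 0], [0, 1], [1, 0], [1, 1],
                  [4, 4], [4, 5], [5, 4], [5, 5]], dtype=float)
y_toy = np.array([-1, -1, -1, -1, 1, 1, 1, 1])

clf = SVC(kernel="rbf", C=0.6, gamma="scale")
clf.fit(X_toy, y_toy)

print(clf.support_vectors_)   # training points chosen as support vectors
print(clf.dual_coef_)         # alpha_i * y_i for each support vector
print(clf.intercept_)         # the bias term b
print(clf.predict(X_toy))     # predicted labels for the training points
```

`support_vectors_`, `dual_coef_` and `intercept_` play the same roles as the support vectors, `alphas` (multiplied by the labels) and `b` in the hand-written version.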