Hive Configuration Tutorial in Detail

Tools used

Xshell

CentOS 7

Xftp

apache-hive-2.3.6-bin

MySQL JDBC driver

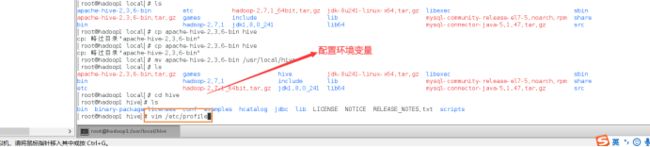

Step 1: upload the downloaded Hive package to /usr/local and extract it there

Extract command: tar -zxvf apache-hive-2.3.6-bin.tar.gz

Rename the extracted directory: mv apache-hive-2.3.6-bin /usr/local/hive

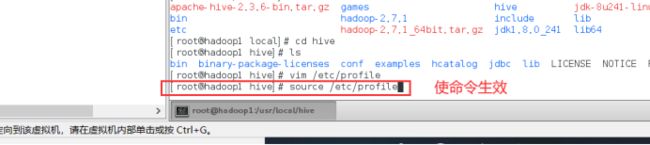
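
The extract and rename commands above can be wrapped in one small function. This is only a minimal sketch: the function name install_hive and its two parameters are made up for illustration, and it assumes the archive unpacks into a directory named after the archive without the .tar.gz suffix, as apache-hive-2.3.6-bin.tar.gz does.

```shell
# Hypothetical helper (not part of Hive): unpack a Hive archive into
# <prefix> and rename the extracted directory to <prefix>/hive.
install_hive() {
    tarball="$1"   # e.g. /usr/local/apache-hive-2.3.6-bin.tar.gz
    prefix="$2"    # e.g. /usr/local

    if [ ! -f "$tarball" ]; then
        echo "archive not found: $tarball" >&2
        return 1
    fi
    # If <prefix>/hive already exists, mv would move the new directory
    # *into* it instead of renaming, so stop early.
    if [ -e "$prefix/hive" ]; then
        echo "$prefix/hive already exists, refusing to overwrite" >&2
        return 1
    fi

    # Assumption: the top-level directory inside the archive is the
    # archive file name minus ".tar.gz".
    extracted="$prefix/$(basename "$tarball" .tar.gz)"

    tar -zxf "$tarball" -C "$prefix" && mv "$extracted" "$prefix/hive"
}

# Usage for the layout in this guide:
# install_hive /usr/local/apache-hive-2.3.6-bin.tar.gz /usr/local
```

The existence check is there because running mv a second time against an existing /usr/local/hive would nest the new directory inside the old one.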

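Step 2: configure the environment variables

Command: vim /etc/profile

Add these two lines to the file:

export HIVE_HOME=/usr/local/hive

export PATH=$PATH:$HIVE_HOME/bin

After saving the file, run the following command so the variables take effect

Command: source /etc/profile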

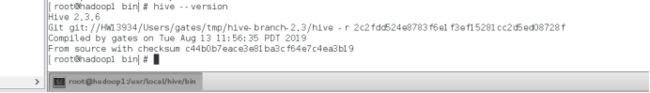
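
If you would rather not edit /etc/profile by hand, the two export lines can be appended from the shell. The sketch below is an assumption-laden convenience, not part of the original steps: add_hive_env is a made-up name, and it only checks for an existing "export HIVE_HOME=" line to avoid adding the block twice.

```shell
# Hypothetical helper: append the Hive variables to a profile file once.
add_hive_env() {
    profile="$1"     # e.g. /etc/profile
    hive_home="$2"   # e.g. /usr/local/hive

    # Skip if HIVE_HOME is already exported in this file.
    if grep -q '^export HIVE_HOME=' "$profile" 2>/dev/null; then
        echo "HIVE_HOME already set in $profile"
        return 0
    fi

    {
        echo "export HIVE_HOME=$hive_home"
        # Single quotes keep $PATH and $HIVE_HOME literal in the file.
        echo 'export PATH=$PATH:$HIVE_HOME/bin'
    } >> "$profile"
}

# Usage for this guide (needs root to write /etc/profile):
# add_hive_env /etc/profile /usr/local/hive && source /etc/profile
```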

Step 3: check that the environment variables took effect; if a version number is printed, they did:

Command: hive --version

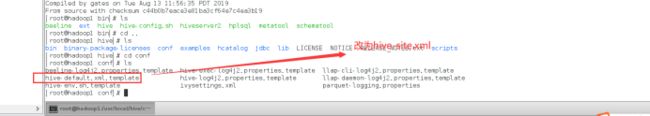

Step 5: in Hive's configuration directory, rename xxxxxxxxxxx to hive-site.xml

Command: cp hive-default.xml.template hive-site.xml

Step 6: create the HDFS directories that hive-site.xml refers to and grant the required permissions. Before doing so, start the Hadoop cluster or the pseudo-distributed service

Command: start-all.sh (starts the Hadoop services)

Create the directories and grant the permissions

Command: hadoop fs -mkdir -p /user/hive/warehouse

Command: hadoop fs -mkdir -p /tmp/hive

Command: hadoop fs -chmod -R 777 /user/hive/warehouse

Command: hadoop fs -chmod -R 777 /tmp/hive

Command: hadoop fs -ls /
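
The HDFS commands above can be grouped so that a failure stops the sequence early. This is a sketch under stated assumptions: prepare_hive_hdfs_dirs and the HADOOP_CMD variable are illustrative names (HADOOP_CMD is not a Hadoop setting), and setting HADOOP_CMD=echo only prints the commands instead of running them.

```shell
# Hypothetical wrapper around the HDFS commands from this step.
# HADOOP_CMD defaults to "hadoop"; set it to "echo" for a dry run.
prepare_hive_hdfs_dirs() {
    hadoop_cmd="${HADOOP_CMD:-hadoop}"

    for dir in /user/hive/warehouse /tmp/hive; do
        # Create the directory (and parents), then open up permissions.
        "$hadoop_cmd" fs -mkdir -p "$dir" || return 1
        "$hadoop_cmd" fs -chmod -R 777 "$dir" || return 1
    done

    # List the HDFS root so the new directories can be checked by eye.
    "$hadoop_cmd" fs -ls /
}

# Dry run:  HADOOP_CMD=echo; prepare_hive_hdfs_dirs
# Real run (Hadoop must already be started):  prepare_hive_hdfs_dirs
```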

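Step 7: check whether /usr/local/hive contains a temp folder; if it does not, create it yourself

Command: cd /usr/local/hive

Command: ls

Command: mkdir temp

Command: ls

Grant it the required permissions. Command: chmod -R 777 temp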
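
The check-then-create sequence above can be collapsed, because mkdir -p succeeds whether or not the directory already exists. A minimal sketch, with ensure_hive_temp as a made-up helper name:

```shell
# Hypothetical helper: create <hive_home>/temp if it is missing and
# apply the same permissions as in this step.
ensure_hive_temp() {
    hive_home="$1"   # e.g. /usr/local/hive
    mkdir -p "$hive_home/temp" && chmod -R 777 "$hive_home/temp"
}

# Usage for this guide:
# ensure_hive_temp /usr/local/hive
```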

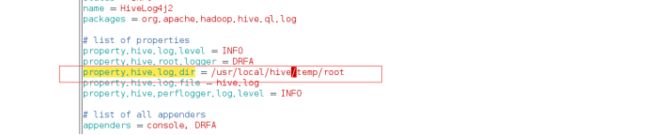

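Step 8: open hive-site.xml in vim and change the properties below

To search in vim, type :/ followed by the text you are looking for

For example :/hive.exec.local.scratchdir

Change the values to match your own paths!

:/hive.exec.local.scratchdir

<property>
  <name>hive.exec.local.scratchdir</name>
  <value>/usr/local/hive/temp/root</value>
  <description>Local scratch space for Hive jobs</description>
</property>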

:/hive.downloaded.resources.dir

<property>
  <name>hive.downloaded.resources.dir</name>
  <value>/usr/local/hive/temp/${hive.session.id}_resources</value>
  <description>Temporary local directory for added resources in the remote file system.</description>
</property>

:/hive.server2.logging.operation.log.location

<property>
  <name>hive.server2.logging.operation.log.location</name>
  <value>/usr/local/hive/temp/root/operation_logs</value>
  <description>Top level directory where operation logs are stored if logging functionality is enabled</description>
</property>

:/hive.querylog.location

<property>
  <name>hive.querylog.location</name>
  <value>/usr/local/hive/temp/root</value>
  <description>Location of Hive run time structured log file</description>
</property>

:/javax.jdo.option.ConnectionURL

<property>
  <name>javax.jdo.option.ConnectionURL</name>
  <value>jdbc:mysql://192.168.121.110:3306/hive?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8</value>
  <description>
    JDBC connect string for a JDBC metastore.
    To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.
    For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.
  </description>
</property>

Replace 192.168.121.110 with your own IP address. Inside the XML file the & in the URL must be written as &amp;

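The MySQL-related settings follow; pay attention to the MySQL version you installed

# Name of the database driver class

# For the newer 8.0 driver: com.mysql.cj.jdbc.Driver

# For the older 5.x driver: com.mysql.jdbc.Driver

# This guide uses driver version 5.1.47

:/javax.jdo.option.ConnectionDriverName

<property>
  <name>javax.jdo.option.ConnectionDriverName</name>
  <value>com.mysql.jdbc.Driver</value>
  <description>Driver class name for a JDBC metastore</description>
</property>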

:/javax.jdo.option.ConnectionUserName

<property>
  <name>javax.jdo.option.ConnectionUserName</name>
  <value>root</value> <!-- the current user -->
  <description>Username to use against metastore database</description>
</property>

:/javax.jdo.option.ConnectionPassword

<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>123456</value> <!-- the MySQL password -->
</property>

:/hive.metastore.schema.verification

<property>
  <name>hive.metastore.schema.verification</name>
  <value>false</value> <!-- must be changed to false -->
  <description>
    Enforce metastore schema version consistency.
    True: Verify that version information stored in is compatible with one from Hive jars. Also disable automatic
    schema migration attempt. Users are required to manually migrate schema after Hive upgrade which ensures
    proper metastore schema migration. (Default)
    False: Warn if the version information stored in metastore doesn't match with one from in Hive jars.
  </description>
</property>

<property>
  <name>hive.metastore.schema.verification.record.version</name>
  <value>false</value> <!-- must be changed to false -->
  <description>
    When true the current MS version is recorded in the VERSION table. If this is disabled and verification is
    enabled the MS will be unusable.
  </description>
</property>
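
After editing, it helps to confirm that each property really holds the new value. The helpers below are a sketch, not part of Hive: show_hive_property and count_tmpdir_placeholders are made-up names, the first assumes that <name> and <value> sit on consecutive lines (as they do in the template), and the second assumes the untouched template defaults reference ${system:java.io.tmpdir}.

```shell
# Hypothetical helper: print the <value> line that directly follows a
# property's <name> line in hive-site.xml.
show_hive_property() {
    site_file="$1"   # e.g. /usr/local/hive/conf/hive-site.xml
    prop="$2"        # e.g. hive.querylog.location
    # -F treats the dots in the property name literally.
    grep -F -A1 "<name>$prop</name>" "$site_file" | grep '<value>'
}

# Hypothetical helper: count lines that still contain the template
# placeholder; 0 means every such path has been replaced.
count_tmpdir_placeholders() {
    grep -c 'system:java.io.tmpdir' "$1"
}

# Usage for this guide:
# show_hive_property /usr/local/hive/conf/hive-site.xml hive.exec.local.scratchdir
# count_tmpdir_placeholders /usr/local/hive/conf/hive-site.xml
```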

Step 9: copy hive-log4j2.properties.template and name the copy hive-log4j2.properties:

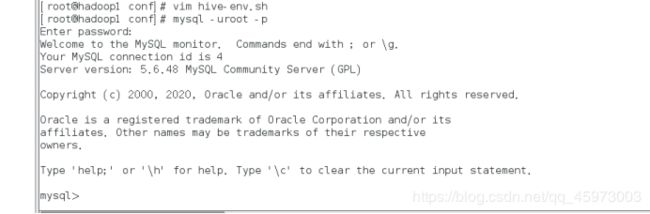
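
The copy command for this step appears only in the screenshot. By analogy with the cp command used for hive-site.xml, it can be sketched as below; copy_hive_template is a made-up helper, and the configuration directory /usr/local/hive/conf is taken from the hive-env.sh settings in this guide.

```shell
# Hypothetical helper: copy <name>.template to <name> inside a config
# directory, leaving an existing target file untouched.
copy_hive_template() {
    conf_dir="$1"   # e.g. /usr/local/hive/conf
    name="$2"       # e.g. hive-log4j2.properties

    if [ -e "$conf_dir/$name" ]; then
        echo "$conf_dir/$name already exists, skipping"
        return 0
    fi
    cp "$conf_dir/$name.template" "$conf_dir/$name"
}

# Usage for this step:
# copy_hive_template /usr/local/hive/conf hive-log4j2.properties
```

Skipping an existing target keeps a second run from overwriting a file you have already edited.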

Step 10: configure hive-env.sh

First, copy hive-env.sh.template and name the copy hive-env.sh

Command: cp hive-env.sh.template hive-env.sh

The configuration is as follows:

# Set HADOOP_HOME to point to a specific hadoop install directory

# HADOOP_HOME=${bin}/../../hadoop

HADOOP_HOME=/usr/local/hadoop-2.7.1   # Hadoop installation directory

# Hive Configuration Directory can be controlled by:

# export HIVE_CONF_DIR=

export HIVE_CONF_DIR=/usr/local/hive/conf   # Hive configuration directory

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

# export HIVE_AUX_JARS_PATH=

export HIVE_AUX_JARS_PATH=/usr/local/hive/lib   # directory of the jars Hive depends on

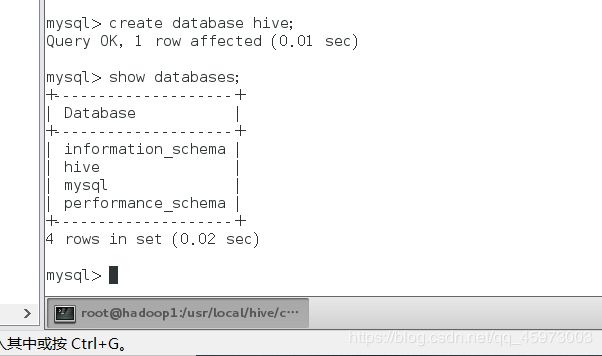

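Step 11: start MySQL and create the hive database

Command: mysql -uroot -p

Command: create database hive;

Command: grant all privileges on *.* to 'root'@'%' identified by 'password' with grant option; (replace 'password' with your own MySQL password)

Command: flush privileges; # reload the privileges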

Upload the MySQL connector driver to /usr/local/hive and extract it, then copy the jar into the lib directory under the Hive directory (the pwd output in the figure below shows the path)

Command, run inside /usr/local/hive/mysql-connector-java-5.1.47:

cp mysql-connector-java-5.1.47-bin.jar /usr/local/hive/lib

After copying, go to /usr/local/hive/lib and check that the jar is there

Command: ll mysql-connector-java-5.1.47-bin.jar

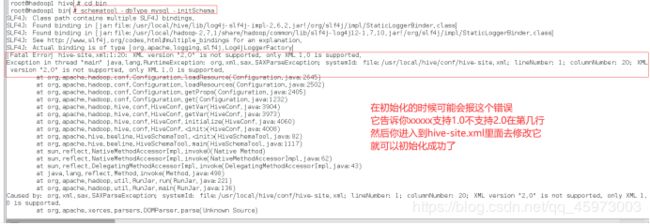

Go to Hive's bin directory and initialize the metastore schema:

Command: cd /usr/local/hive/bin

Initialization command: schematool -dbType mysql -initSchema

Then start Hive. Command: hive
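
Once initialization has finished, a quick check can confirm that the metastore is reachable without opening an interactive session. This is a sketch only: verify_hive_install is a made-up name, and it relies on the schematool -info option (which reports the metastore connection and schema details) and on hive -e (which runs one statement and exits).

```shell
# Hypothetical post-install check for the setup in this guide.
verify_hive_install() {
    # Both tools live in $HIVE_HOME/bin, so a missing one usually means
    # the PATH change from the environment-variable step is not active.
    for tool in schematool hive; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool not found on PATH; check HIVE_HOME/bin" >&2
            return 1
        fi
    done

    # Show the metastore schema details, then run a trivial query.
    schematool -dbType mysql -info && hive -e 'show databases;'
}

# Usage:
# verify_hive_install
```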