CentOS 7搭建Apache Hadoop 3.1.1集群

一、集群规划

集群节点为1个NameNode,1个SecondaryNameNode,3个DataNode,如下表如示:

NameNode,SecondaryNameNode:

| NameNode,SecondaryNameNode:(192.168.0.199) | ||

| 组件 | 版本 | 路径 |

| jdk | 1.8.0_181 | /usr/local/java/ |

| hadoop | 3.1.1 | /usr/local/hadoop/ |

DataNode:

| DataNode:(192.168.0.111,192.168.0.133,192.168.0.155) | ||

| 组件 | 版本 | 路径 |

| jdk | 1.8.0_181 | /usr/local/java/ |

| hadoop | 3.1.1 | /usr/local/hadoop/ |

二、安装包下载

jdk:下载地址:http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html,下载1.8.0_181版本;

hadoop:下载地址:http://hadoop.apache.org/releases.html,下载3.1.1版本;

三、服务器设置

1、主机名修改

hostnamectl --static set-hostname cloud-ix

hostnamectl --static set-hostname cloud-vi

hostnamectl --static set-hostname cloud-vii

hostnamectl --static set-hostname cloud-viii

同时修改四个主机的/etc/hosts文件,增加以下三条:

192.168.0.111 cloud-vi

192.168.0.133 cloud-vii

192.168.0.155 cloud-viii

192.168.0.199 cloud-ix

2、防火墙设置

如主机中未安装iptables,在四个主机中执行以下命令安装:

yum install iptables-services

执行iptables -L -n -v命令可以查看iptables配置,执行以下命令永久关闭四个主机的iptables:

chkconfig iptables off

同时关闭四个主机的iptables和firewalld并设置开机不启动,执行以下命令:

systemctl stop iptables

systemctl disable iptables

systemctl stop firewalld

systemctl disable firewalld

执行systemctl status iptables和systemctl status firewalld可以查看防火墙已经关闭。

3、时钟同步

执行以下命令安装ntpdate:

yum install ntpdate

执行以下命令同步时针:

ntpdate us.pool.ntp.org

添加时针同步的定时任务,执行如下命令:

crontab -e

输入如下内容,每天凌晨5点同步时针:

0 5 * * * /usr/sbin/ntpdate cn.pool.ntp.org

执行如下命令重启服务并设置开机自启:

service crond restart

systemctl enable crond.service

4、SSH免密登录

在四个主机中执行如下两条命令生成密钥和公钥文件,并添加到认证文件中:

ssh-keygen -t rsa

cat id_rsa.pub >> authorized_keys

合并四个主机的认证文件,在cloud-ix,cloud-vi,cloud-vii三个主机中分别执行如下命令,将四个主机的认证信息合并到cloud-viii中。

cloud-ix:scp authorized_keys root@cloud-vi:/root/.ssh/authorized_keys

cloud-vi:scp authorized_keys root@cloud-vii:/root/.ssh/authorized_keys

cloud-vii:scp authorized_keys root@cloud-viii:/root/.ssh/authorized_keys

将cloud-viii中的认证文件,回写到其他三个主机中,执行如下命令:

scp authorized_keys root@cloud-ix:/root/.ssh/authorized_keys

scp authorized_keys root@cloud-vi:/root/.ssh/authorized_keys

scp authorized_keys root@cloud-vii:/root/.ssh/authorized_keys

在四个主机中,执行如下任意命令,可登录到其他主机:

ssh root@cloud-ix

ssh root@cloud-vi

ssh root@cloud-vii

ssh root@cloud-viii

登录到其他主机后,可执行exit返回主机。

四、安装并配置JDK

下载jdk1.8.0_181,将其解压到/usr/local/java/。执行如下命令:

mkdir -p /usr/local/java

tar -zxvf jdk-8u181-linux-x64.tar.gz /usr/local/java/

添加环境变量,编辑配置文件/etc/profile

export JAVA_HOME=/usr/local/java/jdk1.8.0_181

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

配置后,执行source /etc/profile使配置生效

执行java -version查看jdk版本,看配置是否生效

五、安装并配置hadoop

下载hadoop 3.1.1,将其解压到/usr/local/hadoop。执行如下命令:

mkdir -p /usr/local/hadoop

tar -zxvf hadoop-3.1.1.tar.gz /usr/local/hadoop/

同时创建hadoop相关配置目录:

mkdir -p /data/hadoop/hdfs/name /data/hadoop/hdfs/data /var/log/hadoop/tmp

添加环境变量,编辑配置文件/etc/profile

export HADOOP_HOME=/usr/local/hadoop/hadoop-3.1.1

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

配置后,执行source /etc/profile使配置生效

接下来配置hadoop配置文件:

1、/usr/local/hadoop/hadoop-3.1.1/etc/hadoop/hadoop-env.sh

配置jdk和hadoop根目录

export JAVA_HOME=/usr/local/java/jdk1.8.0_181

export HADOOP_HOME=/usr/local/hadoop/hadoop-3.1.12、/usr/local/hadoop/hadoop-3.1.1/etc/hadoop/yarn-env.sh

配置jdk根目录

export JAVA_HOME=/usr/local/java/jdk1.8.0_1813、/usr/local/hadoop/hadoop-3.1.1/etc/hadoop/workers

配置DataNode节点

cloud-vi

cloud-vii

cloud-viii4、/usr/local/hadoop/hadoop-3.1.1/etc/hadoop/core-site.xml

指定hdfs的nameservice,指定hadoop临时目录

fs.defaultFS

hdfs://cloud-ix:8020

hadoop.tmp.dir

/var/log/hadoop/tmp

5、/usr/local/hadoop/hadoop-3.1.1/etc/hadoop/hdfs-site.xml

配置NameNode和SecondaryNameNode和文件目录,配置hdfs的文件副本数

dfs.namenode.http-address

cloud-ix:50070

dfs.namenode.secondary.http-address

cloud-ix:50090

dfs.namenode.name.dir

/data/hadoop/hdfs/name

dfs.datanode.data.dir

/data/hadoop/hdfs/data

dfs.replication

3

6、/usr/local/hadoop/hadoop-3.1.1/etc/hadoop/mapred-site.xml

mapreduce.framework.name

yarn

mapreduce.application.classpath

$HADOOP_HOME/etc/hadoop,

$HADOOP_HOME/share/hadoop/common/*,

$HADOOP_HOME/share/hadoop/common/lib/*,

$HADOOP_HOME/share/hadoop/hdfs/*,

$HADOOP_HOME/share/hadoop/hdfs/lib/*,

$HADOOP_HOME/share/hadoop/mapreduce/*,

$HADOOP_HOME/share/hadoop/mapreduce/lib/*,

$HADOOP_HOME/share/hadoop/yarn/*,

$HADOOP_HOME/share/hadoop/yarn/lib/*

mapreduce.jobhistory.address

cloud-ix:10020

mapreduce.jobhistory.webapp.address

cloud-ix:19888

7、/usr/local/hadoop/hadoop-3.1.1/etc/hadoop/yarn-site.xml

yarn.resourcemanager.hostname

cloud-ix

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.nodemanager.aux-services.mapreduce.shuffle.class

org.apache.hadoop.mapred.ShuffleHandler

The address of the applications manager interface in the RM.

yarn.resourcemanager.address

${yarn.resourcemanager.hostname}:8032

The address of the scheduler interface.

yarn.resourcemanager.scheduler.address

${yarn.resourcemanager.hostname}:8030

The http address of the RM web application.

yarn.resourcemanager.webapp.address

${yarn.resourcemanager.hostname}:8088

The https adddress of the RM web application.

yarn.resourcemanager.webapp.https.address

${yarn.resourcemanager.hostname}:8090

yarn.resourcemanager.resource-tracker.address

${yarn.resourcemanager.hostname}:8031

The address of the RM admin interface.

yarn.resourcemanager.admin.address

${yarn.resourcemanager.hostname}:8033

yarn.nodemanager.local-dirs

/data/hadoop/yarn/local

yarn.log-aggregation-enable

true

yarn.nodemanager.remote-app-log-dir

/data/hadoop/data/tmp/logs

yarn.log.server.url

http://cloud-ix:19888/jobhistory/logs

yarn.nodemanager.vmem-check-enabled

false

接下来修改hadoop启动脚本:

1、/usr/local/hadoop/hadoop-3.1.1/sbin/start-dfs.sh 和 /usr/local/hadoop/hadoop-3.1.1/sbin/stop-dfs.sh

添加如下配置:

HADOOP_SECURE_DN_USER=hdfs

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

2、/usr/local/hadoop/hadoop-3.1.1/sbin/start-yarn.sh 和 /usr/local/hadoop/hadoop-3.1.1/sbin/stop-yarn.sh

添加如下配置:

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

3、/usr/local/hadoop/hadoop-3.1.1/sbin/start-all.sh 和 /usr/local/hadoop/hadoop-3.1.1/sbin/stop-all.sh

添加如下配置:

TANODE_USER=root

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

六、启动hadoop

格式化hdfs,执行如下命令:

hdfs namenode -format

启动hadoop

start-all.sh

执行jps可以查看节点中启动的NameNode、SecondaryNameNode、DataNode。

执行stop-all.sh脚本可以停止hadoop。

至此,hadoop集群已经搭建并启动。

七,验证hadoop

1、访问web页面

访问hadoop页面,打开http://192.168.0.199:8088,如下所示:

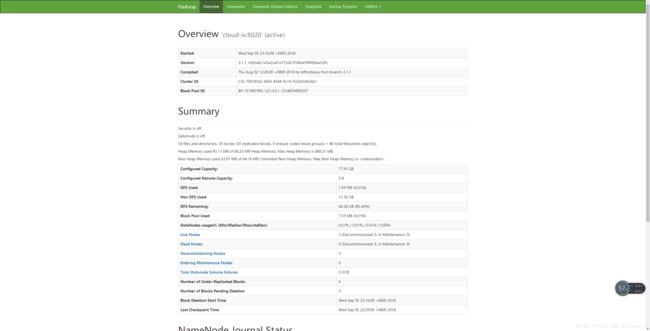

访问hdfs,打开http://192.168.0.199:50070,如下所示:

2、hdfs操作

命令行操作如下:

查看hdfs目录:hadoop fs -ls /

新建hdfs目录:hadoop fs -mkdir -p /hdfs

上传文件到hdfs:hadoop fs -put temp.txt /hdfs

重命名hdfs目录:hadoop fs -mv /hdfs /dfs

删除hdfs目录:hadoop fs -rm /dfs

查看hdfs文件内容:hadoop fs -cat /hdfs/temp.txt

hdfs也可以在web端操作,打开页面http://192.168.0.199:50070/explorer.html#/,即可看到hdfs。

3、执行hadoop自带的单词统计

jar包在/usr/local/hadoop/hadoop-3.1.1/share/hadoop/mapreduce目录下,进入该目录,执行如下命令:

yarn jar hadoop-mapreduce-examples-3.1.1.jar wordcount /hdfs/temp.txt /hdfs/temp-wordcount-out

执行完后,结果写在/hdfs/temp-wordcount-out/part-r-00000文件中,查看该文件即可得到统计结果