Spark: Spark Streaming

Contents

Overview

Preparation

I. TCP socket integration

1. Installing the nc service

2. Testing

II. Kafka integration

1. Starting the services

2. Testing

References

Overview

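Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data streams. Data can be ingested from many sources, such as Kafka, Flume, Kinesis, or TCP sockets, and processed with complex algorithms expressed through high-level functions like map, reduce, join, and window. The processed data can then be pushed out to file systems, databases, and live dashboards.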

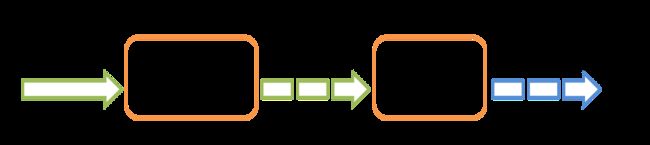

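Internally, Spark Streaming receives live input data streams and divides the data into batches. The Spark engine then processes these batches to generate the final stream of results, also in batches, as shown in the figure.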

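Spark Streaming provides a high-level abstraction called a discretized stream, or DStream, which represents a continuous stream of data. A DStream can be created from input data streams from sources such as Kafka, Flume, and Kinesis, or by applying high-level operations to other DStreams. Internally, a DStream is represented as a sequence of RDDs.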
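The micro-batch idea can be modeled in a few lines of plain Scala. The sketch below is a conceptual model only, not how Spark implements DStreams. The hypothetical `Event` type pairs each input line with an arrival time. `discretize` groups events into fixed-length intervals, where 10000 ms corresponds to the `Seconds(10)` batch interval used in the examples. `wordCount` is then applied to each batch independently, in the same way a DStream transformation is applied to each RDD in the sequence.

```scala
// Conceptual model only (not Spark's implementation): a "DStream" as an
// ordered sequence of batches, where each batch stands in for one RDD.
object MicroBatchModel {
  // A line of input together with its (hypothetical) arrival time in milliseconds.
  final case class Event(timestampMs: Long, line: String)

  // Assign each event to the batch whose interval contains its arrival time.
  // With intervalMs = 10000, 0..9999 ms -> batch 0, 10000..19999 ms -> batch 1.
  def discretize(events: Seq[Event], intervalMs: Long): Seq[(Long, Seq[String])] =
    events
      .groupBy(_.timestampMs / intervalMs)
      .toSeq
      .sortBy(_._1)
      .map { case (batchId, evs) => (batchId, evs.sortBy(_.timestampMs).map(_.line)) }

  // The same transformation runs on every batch independently, just as a
  // DStream operation is applied to each RDD in the sequence.
  def wordCount(batch: Seq[String]): Map[String, Int] =
    batch
      .flatMap(_.split(" "))
      .groupBy(identity)
      .map { case (word, occurrences) => (word, occurrences.size) }
}
```

In Spark itself, each batch is an RDD distributed across the cluster rather than a local `Seq`. Spark also decides which batch a record belongs to, for example based on when a receiver received it, rather than using timestamps carried in the records.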

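Note: these examples use Spark Streaming to read data from a TCP socket and from Kafka and to compute word counts. In the TCP socket example, the counts are also saved to a MySQL database. Versions: Scala 2.11.8, Spark 2.2.0, and Kafka kafka_2.10-0.10.1.0 (Kafka 0.10.1.0 built for Scala 2.10).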

Preparation

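Add the following Maven dependencies. The examples create a `SparkSession`, which comes from `spark-sql`. The MySQL example also needs a MySQL JDBC driver (MySQL Connector/J) on the classpath.

```xml
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-streaming_2.11</artifactId>
    <version>2.2.0</version>
</dependency>
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-streaming-kafka-0-10_2.11</artifactId>
    <version>2.2.0</version>
</dependency>
<!-- SparkSession, used in the examples, comes from spark-sql -->
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-sql_2.11</artifactId>
    <version>2.2.0</version>
</dependency>
```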
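For readers who build with sbt instead of Maven, a roughly equivalent `build.sbt` is sketched below. It assumes sbt and the versions listed above. `spark-sql` is included because it provides `SparkSession`, and MySQL Connector/J is included because the JDBC code loads `com.mysql.jdbc.Driver`. The connector version 5.1.47 is an assumption; a 5.1.x release that ships that driver class should work.

```scala
// build.sbt: a sketch assuming sbt and Scala 2.11.8 / Spark 2.2.0.
scalaVersion := "2.11.8"

libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-streaming"            % "2.2.0",
  "org.apache.spark" %% "spark-streaming-kafka-0-10" % "2.2.0",
  // SparkSession lives in spark-sql, which spark-streaming does not pull in.
  "org.apache.spark" %% "spark-sql"                  % "2.2.0",
  // Assumed version: a 5.1.x Connector/J providing com.mysql.jdbc.Driver.
  "mysql"            %  "mysql-connector-java"       % "5.1.47"
)
```

`%%` appends the Scala binary version, so with `scalaVersion` 2.11.8 it resolves the same `_2.11` artifacts as the Maven coordinates.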

I. TCP socket integration

1. Installing the nc service

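- Download and install

```shell
# Download
wget http://vault.centos.org/6.4/os/x86_64/Packages/nc-1.84-22.el6.x86_64.rpm

# Install
sudo rpm -iUv nc-1.84-22.el6.x86_64.rpm
```

- Start listening on port 9999 before launching the Spark job, because `socketTextStream` connects to this port as a client (`-l` listens, and `-k` keeps listening after a client disconnects)

```shell
nc -lk 9999
```

2. Testing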

- Code

```scala
package com.wangmh.sparks

import java.sql.DriverManager

import org.apache.spark.sql.SparkSession
import org.apache.spark.streaming.dstream.DStream
import org.apache.spark.streaming.{Seconds, StreamingContext}

object StreamingSocket {

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("testSparkStreaming")
      .getOrCreate()

    // Batch interval: the input received in each 10-second window becomes one RDD
    val ssc = new StreamingContext(spark.sparkContext, Seconds(10))

    // Connect to the nc server listening on port 9999
    val lines = ssc.socketTextStream("bigdata.centos01", 9999)

    // Split lines into words and count each word within the batch
    val wordsPair = lines.flatMap(l => l.split(" ")).map(w => (w, 1))
    val wordCount = wordsPair.reduceByKey(_ + _)

    // Save to the database
    save(wordCount)

    // Print the first elements of each batch's result
    wordCount.print()

    ssc.start()
    ssc.awaitTermination()
  }

  /**
   * Save each batch's word counts to MySQL.
   * One connection is opened per partition (on the executor), not per record.
   *
   * @param wordCount per-batch (word, count) pairs
   */
  def save(wordCount: DStream[(String, Int)]): Unit = {
    wordCount.foreachRDD(rdd => rdd.foreachPartition(records => {
      Class.forName("com.mysql.jdbc.Driver")
      val conn = DriverManager.getConnection(
        "jdbc:mysql://bigdata.centos03:3306/test?useUnicode=true", "root", "123456")
      try {
        // A PreparedStatement avoids building SQL by string concatenation
        // (quoting bugs and SQL injection from words containing quotes)
        val statement = conn.prepareStatement("insert into word_count(title, count) values (?, ?)")
        try {
          records.foreach { case (word, count) =>
            println("----------------run sql-----------------")
            statement.setString(1, word)
            statement.setInt(2, count)
            statement.executeUpdate()
          }
        } finally {
          // Close the statement before the connection it belongs to
          statement.close()
        }
      } finally {
        conn.close()
      }
    }))
  }
}
```
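The sketch below shows what the job computes for a single batch. It reproduces `flatMap(_.split(" ")).map((_, 1)).reduceByKey(_ + _)` on an ordinary Scala collection that stands in for one 10-second batch. It is an illustration only and does not use Spark. The object `BatchWordCount` and the whitespace-tolerant variant `countIgnoringBlanks` are hypothetical additions, not part of the original job.

```scala
// Mirrors, on plain Scala collections, what the socket job computes for ONE batch.
object BatchWordCount {
  // Same tokenization as the job: split(" ") then (word, 1) pairs summed per key,
  // the collection equivalent of reduceByKey(_ + _).
  def countAsInJob(lines: Seq[String]): Map[String, Int] =
    sumByKey(lines.flatMap(_.split(" ")).map(w => (w, 1)))

  // Variant (not in the original code): split on runs of whitespace and drop empty tokens.
  def countIgnoringBlanks(lines: Seq[String]): Map[String, Int] =
    sumByKey(lines.flatMap(_.split("\\s+")).filter(_.nonEmpty).map(w => (w, 1)))

  private def sumByKey(pairs: Seq[(String, Int)]): Map[String, Int] =
    pairs.groupBy(_._1).map { case (word, ps) => (word, ps.map(_._2).sum) }
}
```

Two consequences follow from this. First, `split(" ")` turns a doubled space into an empty token, so `""` can appear as a "word" in the printed output and in `word_count`. Second, `reduceByKey` on a DStream counts per batch, not cumulatively, so the same word can be inserted as several rows across batches. Running totals would need a stateful operation such as `updateStateByKey`, which requires a checkpoint directory.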

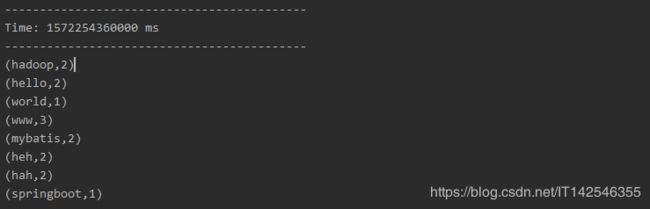

- Test results

Input:

Word-count output:

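II. Kafka integration

For installing and deploying the Kafka cluster, see the article "Kafka configuration and distributed deployment" (kafka的配置和分布式部署).

1. Starting the services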

- Start a console producer. Each line typed into it is sent to the topic as one message.

```shell
bin/kafka-console-producer.sh --broker-list bigdata.centos01:9092,bigdata.centos02:9092,bigdata.centos03:9092 --topic weblogs
```

2. Testing

- Code

```scala
package com.wangmh.sparks

import org.apache.kafka.clients.consumer.ConsumerConfig
import org.apache.kafka.common.serialization.StringDeserializer
import org.apache.spark.sql.SparkSession
import org.apache.spark.streaming.kafka010.{ConsumerStrategies, KafkaUtils, LocationStrategies}
import org.apache.spark.streaming.{Seconds, StreamingContext}

object StreamingKafkaV10 {

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("testSparkStreaming")
      .getOrCreate()

    val ssc = new StreamingContext(spark.sparkContext, Seconds(10))

    // Create a direct Kafka stream with brokers and topics.
    // The topic must match the one the console producer writes to (--topic weblogs).
    val topicsSet = Set("weblogs")
    val kafkaParams = Map[String, Object](
      ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG -> "bigdata.centos01:9092,bigdata.centos02:9092,bigdata.centos03:9092",
      ConsumerConfig.GROUP_ID_CONFIG -> "kafkaV10",
      ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG -> classOf[StringDeserializer],
      ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG -> classOf[StringDeserializer])

    val messages = KafkaUtils.createDirectStream[String, String](
      ssc,
      LocationStrategies.PreferConsistent,
      ConsumerStrategies.Subscribe[String, String](topicsSet, kafkaParams))

    // Get the lines, split them into words, count the words, and print
    val lines = messages.map(_.value)
    val words = lines.flatMap(_.split(" "))
    val wordCounts = words.map(x => (x, 1L)).reduceByKey(_ + _)
    wordCounts.print()

    // Start the computation
    ssc.start()
    ssc.awaitTermination()
  }
}
```

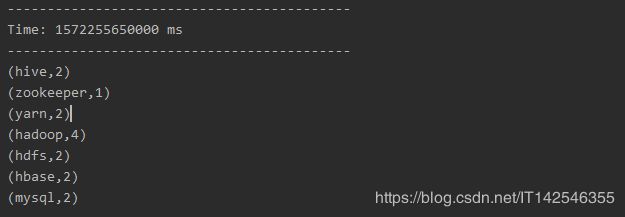

- Test results

Input:

Word-count output:

References

- Spark Streaming Programming Guide (Spark 2.2.0): http://spark.apache.org/docs/2.2.0/streaming-programming-guide.html