ELK-6.0.0 (Part 1): Building a Distributed Elasticsearch Cluster

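Introduction:

ELK is short for Elasticsearch + Logstash + Kibana, an open-source log analysis stack. The three components play the following roles:

- Elasticsearch is the storage layer. Think of it as a database: it stores the data and indexes it so that users can query it easily.

- Kibana is the presentation component and is responsible for visualizing the data. It also provides a console from which you can operate on Elasticsearch directly.

- Logstash is the core component of ELK and acts as the log filter. It formats the logs and passes them to Elasticsearch for storage. Logstash also has a number of plugins that can do some data analysis work.

Logically, data flows through the three components as Logstash > Elasticsearch > Kibana, but in practice it is not that simple.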

I. Installation Preparation

Prepare three machines so that the distributed cluster experiment can be carried out:

- 192.168.2.10

- 192.168.2.123

- 192.168.2.180

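Role assignment:

- JDK 1.8 is installed on all three machines, because Elasticsearch is written in Java

- Elasticsearch (abbreviated as es from here on) is installed on all three machines

- 192.168.2.10 acts as the master node

- 192.168.2.123 and 192.168.2.180 act as data nodes

- Kibana is installed on the master node

- Logstash is installed on 192.168.2.123

ELK version information: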

- Elasticsearch-6.0.0

- logstash-6.0.0

- kibana-6.0.0

- filebeat-6.0.0

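Set the hostname on each of the three machines and edit the hosts file as shown below. The firewall must then be disabled on all three machines.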

[root@master-node ~]# vim /etc/hosts

192.168.2.10 master-node

192.168.2.123 data-node1

192.168.2.180 data-node2

II. Installing ES

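1. Official installation documentation

https://www.elastic.co/guide/en/elastic-stack/current/installing-elastic-stack.html

Chinese version:

https://www.elastic.co/guide/cn/elasticsearch/guide/current/index.html

2. Install JDK 8 first. Note: it must be installed on all three machines.

Download it with wget: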

[root@master-node ~]# cd /usr/local/

[root@master-node ~]# wget --no-cookies --no-check-certificate --header "Cookie: gpw_e24=http%3A%2F%2Fwww.oracle.com%2F; oraclelicense=accept-securebackup-cookie" "http://download.oracle.com/otn-pub/java/jdk/8u141-b15/336fa29ff2bb4ef291e347e091f7f4a7/jdk-8u141-linux-x64.tar.gz"

[root@master-node local]# tar -zxvf jdk-8u141-linux-x64.tar.gz

[root@master-node local]# mv jdk1.8.0_141 jdk8

Set the environment variables: run vim /etc/profile and add the following:

export JAVA_HOME=/usr/local/jdk8

export JAVA_BIN=/usr/local/jdk8

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export JAVA_HOME JAVA_BIN PATH CLASSPATH
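Since the same variables have to be set on all three machines, the step could also be scripted instead of edited by hand. The following is only a sketch: the function name `java_profile_snippet` and the target file `/etc/profile.d/jdk8.sh` are assumptions made for illustration (on CentOS, `/etc/profile` sources the `*.sh` files in `/etc/profile.d`), and it emits only `JAVA_HOME` and `PATH`.

```shell
#!/usr/bin/env bash
# Print the export lines for a JDK unpacked in the given directory.
# The directory defaults to /usr/local/jdk8, the path used in this article.
java_profile_snippet() {
  local jdk_dir="${1:-/usr/local/jdk8}"
  printf 'export JAVA_HOME=%s\n' "$jdk_dir"
  # Single quotes keep $PATH and $JAVA_HOME literal, so they are expanded
  # when the profile is sourced, not when this function runs.
  printf 'export PATH=$PATH:$JAVA_HOME/bin\n'
}

# Example (as root, on each machine); the file name is hypothetical:
#   java_profile_snippet /usr/local/jdk8 > /etc/profile.d/jdk8.sh
```

Keeping the settings in their own file makes them easy to copy to the other two machines and to remove later.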

Reload the profile:

[root@master-node ~]# source /etc/profile

Check the Java version:

[root@master-node ~]# java -version

java version "1.8.0_141"

Java(TM) SE Runtime Environment (build 1.8.0_141-b15)

Java HotSpot(TM) 64-Bit Server VM (build 25.141-b15, mixed mode)

3. Install es from the official repository; this must be done on all three machines

[root@master-node ~]# rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

[root@master-node ~]# vim /etc/yum.repos.d/elastic.repo # add the following content

[elasticsearch-6.x]

name=Elasticsearch repository for 6.x packages

baseurl=https://artifacts.elastic.co/packages/6.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

[root@master-node ~]# yum install -y elasticsearch

4. Because downloading from the official repository is too slow, download the rpm package and install it directly instead

[root@master-node ~]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.0.0.rpm

[root@master-node ~]# rpm -ivh elasticsearch-6.0.0.rpm

warning: elasticsearch-6.0.0.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ################################# [100%]

Creating elasticsearch group... OK

Creating elasticsearch user... OK

Updating / installing...

1:elasticsearch-0:6.0.0-1 ################################# [100%]

### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemd

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch.service

### You can start elasticsearch service by executing

sudo systemctl start elasticsearch.service

[root@data-node1 ~]# rpm -ivh elasticsearch-6.0.0.rpm

[root@data-node2 ~]# rpm -ivh elasticsearch-6.0.0.rpm

III. Configuring ES

1. The Elasticsearch configuration files live in the following two locations:

[root@master-node ~]# ll /etc/elasticsearch/

total 16

-rw-rw---- 1 root elasticsearch 2870 Nov 11 2017 elasticsearch.yml

-rw-rw---- 1 root elasticsearch 2678 Nov 11 2017 jvm.options

-rw-rw---- 1 root elasticsearch 5091 Nov 11 2017 log4j2.properties


[root@master-node ~]# ll /etc/sysconfig/elasticsearch

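-rw-rw---- 1 root elasticsearch 1593 Nov 11 2017 /etc/sysconfig/elasticsearch

The elasticsearch.yml file holds the cluster and node settings, while /etc/sysconfig/elasticsearch configures the service itself, for example the location of configuration files and some Java-related paths.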

2. Official configuration documentation

https://www.elastic.co/guide/en/elasticsearch/reference/6.0/rpm.html

Configure the cluster nodes. On 192.168.2.10, edit the configuration file:

[root@master-node ~]# vim /etc/elasticsearch/elasticsearch.yml # add or change the following settings

[root@master-node ~]# cat /etc/elasticsearch/elasticsearch.yml | grep '^[^#]'

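cluster.name: master-node # name of the cluster

node.name: master # name of this node

node.master: true # this node is the master node

node.data: false # this node is not a data node

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 0.0.0.0 # listen on all IPs; in a real environment, bind to a specific, safe IP

http.port: 9200 # port of the es HTTP service

discovery.zen.ping.unicast.hosts: ["192.168.2.10", "192.168.2.123","192.168.2.180"] # hosts used for node discovery

3. Then send the configuration file to the other two machines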

[root@master-node ~]# scp /etc/elasticsearch/elasticsearch.yml data-node1:/tmp/

The authenticity of host 'data-node1 (192.168.2.123)' can't be established.

ECDSA key fingerprint is 6d:5b:e9:d9:bd:12:64:06:c5:cc:a2:07:a6:99:96:3d.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'data-node1,192.168.2.123' (ECDSA) to the list of known hosts.

root@data-node1's password:

elasticsearch.yml 100% 2924 2.9KB/s 00:00

[root@master-node ~]# scp /etc/elasticsearch/elasticsearch.yml data-node2:/tmp/

The authenticity of host 'data-node2 (192.168.2.180)' can't be established.

ECDSA key fingerprint is 6d:5b:e9:d9:bd:12:64:06:c5:cc:a2:07:a6:99:96:3d.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'data-node2,192.168.2.180' (ECDSA) to the list of known hosts.

root@data-node2's password:

elasticsearch.yml

4. On the two data nodes, edit this file and change the following settings

192.168.2.123

[root@data-node1 ~]# vim /tmp/elasticsearch.yml

[root@data-node1 ~]# cat /tmp/elasticsearch.yml | grep '^[^#]'

cluster.name: master-node

node.name: data-node1

node.master: false

node.data: true

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["192.168.2.10", "192.168.2.123","192.168.2.180"]

[root@data-node1 ~]# cp /tmp/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml

cp: overwrite ‘/etc/elasticsearch/elasticsearch.yml’? yes
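Only three keys differ between the master's file and a data node's file (node.name, node.master, node.data), so the manual vim edit could be replaced with a small filter. This is a sketch under assumptions: the function name `set_node_role` is made up for illustration, the three keys must already be present and uncommented (as they are in the file copied from the master), and node names must not contain a `/`.

```shell
#!/usr/bin/env bash
# Rewrite the three node-specific keys of an elasticsearch.yml read from
# stdin and print the result to stdout. All other lines pass through
# unchanged; trailing comments on the rewritten lines are dropped.
# Usage: set_node_role NODE_NAME IS_MASTER IS_DATA < in.yml > out.yml
set_node_role() {
  local name="$1" is_master="$2" is_data="$3"
  sed -e "s/^node\.name:.*/node.name: ${name}/" \
      -e "s/^node\.master:.*/node.master: ${is_master}/" \
      -e "s/^node\.data:.*/node.data: ${is_data}/"
}

# Example on data-node1, using the file copied to /tmp earlier:
#   set_node_role data-node1 false true < /tmp/elasticsearch.yml \
#     > /etc/elasticsearch/elasticsearch.yml
```

Redirecting into the existing file keeps its owner and permissions, which matters because the service reads it as the elasticsearch group.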

192.168.2.180

[root@data-node2 ~]# cat /tmp/elasticsearch.yml | grep '^[^#]'

cluster.name: master-node

node.name: data-node2

node.master: false

node.data: true

path.data: /var/lib/elasticsearch

path.logs: /var/log/elasticsearch

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.unicast.hosts: ["192.168.2.10", "192.168.2.123","192.168.2.180"]

[root@data-node2 ~]# cp /tmp/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml

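cp: overwrite ‘/etc/elasticsearch/elasticsearch.yml’? yes

After completing the configuration above, start the es service on all three machines:

[root@master-node ~]# systemctl start elasticsearch.service

Once the master node has started, check that ports 9200 and 9300 are listening, and then start the es service on the other nodes.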

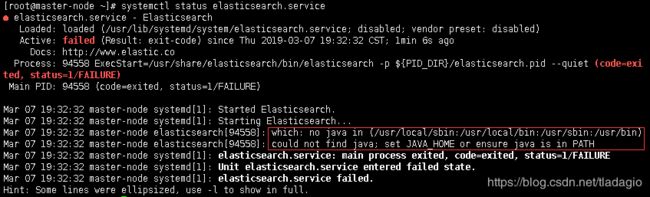
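The port check can be scripted so that the data nodes are started only once the master is actually listening. A minimal sketch, assuming `netstat -ntlp` output is piped in; the function name `ports_listening` is hypothetical:

```shell
#!/usr/bin/env bash
# Read `netstat -ntlp` output on stdin and succeed only if every port given
# as an argument appears in a listening local address.
ports_listening() {
  local listing port
  listing="$(cat)"
  for port in "$@"; do
    # Match ":PORT" followed by whitespace, e.g. ":::9200 " or "0.0.0.0:9200 ".
    if ! printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]]"; then
      echo "port ${port} is not listening" >&2
      return 1
    fi
  done
}

# Example on the master node:
#   netstat -ntlp | ports_listening 9200 9300 && echo "es is listening"
```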

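5. Troubleshooting notes

The service failed to start, so check its status.

The cause was that the java executable could not be found on the PATH; adding a symlink fixes it:

[root@master-node ~]# ln -s /usr/local/jdk8/bin/java /usr/bin/

Check the service and ports again. Port 9300 is used for communication between the cluster nodes (the transport layer), while port 9200 serves the HTTP REST API used by clients.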

[root@master-node ~]# systemctl restart elasticsearch.service

[root@master-node ~]# netstat -ntlp | grep java

tcp 0 0 127.0.0.1:32000 0.0.0.0:* LISTEN 954/java

tcp6 0 0 :::9200 :::* LISTEN 95311/java

tcp6 0 0 :::9300 :::* LISTEN 95311/java

[root@master-node ~]# systemctl status elasticsearch.service

● elasticsearch.service - Elasticsearch

Loaded: loaded (/usr/lib/systemd/system/elasticsearch.service; disabled; vendor preset: disabled)

Active: active (running) since Thu 2019-03-07 19:43:36 CST; 1min 41s ago

Docs: http://www.elastic.co

Main PID: 95311 (java)

CGroup: /system.slice/elasticsearch.service

└─95311 /bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccu...

Mar 07 19:43:36 master-node systemd[1]: Started Elasticsearch.

Mar 07 19:43:36 master-node systemd[1]: Starting Elasticsearch...

IV. Checking the es Cluster with curl

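1. Cluster health check:

[root@master-node ~]# curl '192.168.2.10:9200/_cluster/health?pretty'

{

"cluster_name" : "master-node",

"status" : "green", # green means the cluster is healthy; yellow (unassigned replica shards) or red (unassigned primary shards) means the cluster has a problem

"timed_out" : false, # whether the request timed out

"number_of_nodes" : 3, # number of nodes in the cluster

"number_of_data_nodes" : 2, # number of data nodes in the cluster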

"active_primary_shards" : 0,

"active_shards" : 0,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 0,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 100.0

}

2. View the detailed cluster state:

[root@master-node ~]# curl '192.168.2.10:9200/_cluster/state?pretty'

{

"cluster_name" : "master-node",

"compressed_size_in_bytes" : 350,

"version" : 4,

"state_uuid" : "DugAUq0HSK27cMLmOz-KWA",

"master_node" : "DdcU0fKfQtitLqwOL0v7eA",

"blocks" : { },

"nodes" : {

"QbIwi4X4QwGAEUFIs8gQ1w" : {

"name" : "data-node2",

"ephemeral_id" : "twJHf3w9QxWvba2rjFvNhw",

"transport_address" : "192.168.2.180:9300",

"attributes" : { }

},

"U8YW6pFrQNaHxcnDU_fzhw" : {

"name" : "data-node1",

"ephemeral_id" : "V6VOAmL5TRCw1sfkFc-biA",

"transport_address" : "192.168.2.123:9300",

"attributes" : { }

},

"DdcU0fKfQtitLqwOL0v7eA" : {

"name" : "master",

"ephemeral_id" : "jFH6nBLXSyWnKKftniCWDg",

"transport_address" : "192.168.2.10:9300",

"attributes" : { }

}

},

"metadata" : {

"cluster_uuid" : "9r603V_rTmm515A3L5DutA",

"templates" : { },

"indices" : { },

"index-graveyard" : {

"tombstones" : [ ]

}

},

"routing_table" : {

"indices" : { }

},

"routing_nodes" : {

"unassigned" : [ ],

"nodes" : {

"QbIwi4X4QwGAEUFIs8gQ1w" : [ ],

"U8YW6pFrQNaHxcnDU_fzhw" : [ ]

}

},

"snapshot_deletions" : {

"snapshot_deletions" : [ ]

},

"snapshots" : {

"snapshots" : [ ]

},

"restore" : {

"snapshots" : [ ]

}

}

Once these checks pass, the es cluster setup is complete.
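The health check can also be automated, for example to wait until all three nodes have joined before moving on. The sketch below is an illustration only: `health_status` and `wait_for_green` are hypothetical helper names, and the default host, retry count, and two-second interval are arbitrary choices.

```shell
#!/usr/bin/env bash
# Extract the "status" value from _cluster/health JSON read on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Poll the health endpoint until the cluster reports green, or give up.
# Arguments: HOST:PORT (default 192.168.2.10:9200) and number of tries.
wait_for_green() {
  local host="${1:-192.168.2.10:9200}" tries="${2:-30}" status i
  for ((i = 0; i < tries; i++)); do
    status="$(curl -s "${host}/_cluster/health" | health_status)"
    [ "$status" = "green" ] && return 0
    sleep 2
  done
  echo "cluster status is '${status:-unknown}', not green" >&2
  return 1
}

# Example:
#   wait_for_green 192.168.2.10:9200 30 && echo "cluster is healthy"
```

The health API itself also accepts a `wait_for_status` query parameter, which may be simpler when a single blocking request is enough.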

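3. The cluster status can also be viewed in a browser, for example at http://192.168.2.10:9200/_cluster/health?pretty

However, what the browser shows is still just a wall of raw text. We would like this information to be displayed graphically, which requires installing Kibana to present the data for us.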
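Until Kibana is installed, the `_cat` APIs give a somewhat more readable, tabular view from the command line. A minimal sketch, assuming the master node address used in this article; the helper names `es_cat_url` and `es_cat` are made up for illustration:

```shell
#!/usr/bin/env bash
# Build the URL for a _cat endpoint. The "?v" parameter asks Elasticsearch
# to include a header row in the tabular output.
es_cat_url() {
  local host="$1" endpoint="$2"
  printf 'http://%s/_cat/%s?v\n' "$host" "$endpoint"
}

# Fetch a _cat endpoint (for example nodes, health, or indices) as a table.
es_cat() {
  curl -s "$(es_cat_url "$1" "$2")"
}

# Example:
#   es_cat 192.168.2.10:9200 nodes
```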