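Deploying a Big Data Cluster with CDH 6.3.1

Our company recently decided to redeploy its CDH big data cluster. The previous cluster ran CDH 5.3.6, and its setup is described in an earlier post. This time we are moving to 6.3.1; the old deployment procedure no longer applies, so I have written up the new one here.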

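Contents

I. Preparation

II. Environment setup

1. Set the hostnames

2. Configure host mappings

3. Disable the cluster firewall

4. Disable SELinux

5. Configure passwordless SSH login

6. Set up cluster time synchronization

III. Install the JDK on all nodes

IV. Deploy the JDBC driver on the master node

V. Install MySQL

VI. Deploy CDH

6.1 Deploy the CM server and agents offline

6.1.1 Create the directory and extract the software

6.1.2 Deploy cdh-master as the CM server node

6.1.3 Deploy all four nodes as CM agent nodes

6.1.4 Point the agent configuration on all nodes at the server node

6.1.5 Edit the server configuration on the master node

6.2 Deploy the offline parcel repository on the master node

6.2.1 Install httpd

6.2.2 Deploy the offline parcel repository

6.2.3 Start httpd

6.2.4 Start the server on the master node

6.2.5 Start the agent on all nodes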

I. Preparation

First, upload the required installation packages to the /opt/software directory.

The packages can be downloaded from the following locations:

1. JDK: https://www.oracle.com/technetwork/java/javase/downloads/java-archive-javase8-2177648.html

2. MySQL: https://dev.mysql.com/downloads/mysql/5.7.html#downloads

3. MySQL JDBC jar: http://central.maven.org/maven2/mysql/mysql-connector-java/5.1.47/mysql-connector-java-5.1.47.jar

4. CM 6.3.1: https://archive.cloudera.com/cm6/6.3.1/repo-as-tarball/cm6.3.1-redhat7.tar.gz

5. Parcel:

https://archive.cloudera.com/cdh6/6.3.1/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel

https://archive.cloudera.com/cdh6/6.3.1/parcels/CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha1

https://archive.cloudera.com/cdh6/6.3.1/parcels/manifest.json

II. Environment setup

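I used four machines for the CDH cluster: one as the master node and the other three as slave nodes.

1. Set the hostnames

Run the corresponding command on each node:

hostnamectl set-hostname cdh-master

hostnamectl set-hostname cdh-slave01

hostnamectl set-hostname cdh-slave02

hostnamectl set-hostname cdh-slave03

2. Configure host mappings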

Add the following entries to /etc/hosts on all four nodes:

192.168.0.192 cdh-master

192.168.0.196 cdh-slave01

192.168.0.197 cdh-slave02

192.168.0.198 cdh-slave03

3. Disable the cluster firewall
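A side note on the /etc/hosts entries before the firewall commands: the same four lines go onto every node, and appending them by hand can leave duplicates if the step is repeated. The following is a minimal sketch of an idempotent append. The function name `add_host_entry` and the `HOSTS_FILE` variable are illustrative assumptions, not part of the original procedure.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file only when NAME is not mapped yet.
# HOSTS_FILE defaults to /etc/hosts; point it at a scratch file to try the sketch safely.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

add_host_entry() {
    ip="$1"; name="$2"
    # -F: fixed string, -w: whole-word match, -q: quiet
    if grep -qwF -- "$name" "$HOSTS_FILE" 2>/dev/null; then
        echo "skip: $name already present in $HOSTS_FILE"
    else
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
        echo "added: $ip $name"
    fi
}

# Example (as root, on each node):
#   add_host_entry 192.168.0.192 cdh-master
#   add_host_entry 192.168.0.196 cdh-slave01
#   add_host_entry 192.168.0.197 cdh-slave02
#   add_host_entry 192.168.0.198 cdh-slave03
```

The check only looks at the hostname, so an existing entry with a different IP is left alone rather than overwritten.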

Run on all four nodes:

systemctl stop firewalld && systemctl disable firewalld && iptables -F

4. Disable SELinux

In /etc/selinux/config on all four nodes, set SELINUX to disabled:

SELINUX=disabled

5. Configure passwordless SSH login

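Run the following on every node in turn:

ssh-keygen

ssh-copy-id cdh-master

ssh-copy-id cdh-slave01

ssh-copy-id cdh-slave02

ssh-copy-id cdh-slave03

Note: the changes above take effect only after the machines are rebooted.

reboot

6. Set up cluster time synchronization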

(1)所有节点设置亚洲上海时区

timedatectl set-timezone Asia/Shanghai(2)所有节点安装ntp服务

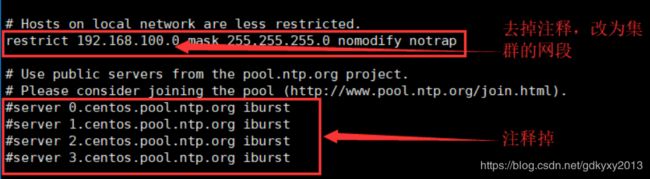

yum install -y ntp(3)选取cdh-master为ntp的主节点,在主节点上修改配置文件/etc/ntp.conf:

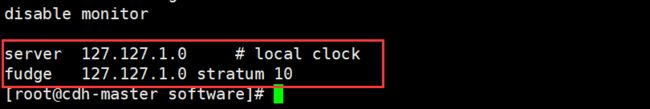

在该配置文件下方添加如下内容:

server 127.127.1.0 # local clock

fudge 127.127.1.0 stratum 10(4)在所有机器上执行如下命令:

systemctl start ntpd && systemctl enable ntpd (5)修改cdh-master的时间与网络时间同步:

ntpdate -u us.pool.ntp.org(6)在cdh-master节点上修改本地硬件时钟时间:

hwclock --localtime //查看本地硬件时钟时间

hwclock --localtime -w //将系统时间写入本地硬件时钟时间(7)cdh-master节点将本地硬件时钟时间与系统时间进行自动同步:

vi /etc/sysconfig/ntpdate

SYNC_HWCLOCK=yes

vi /etc/sysconfig/ntpd

SYNC_HWCLOCK=yes(8)slave节点执行crontab定时任务,定时向master节点同步时间:

*/20 * * * * /usr/sbin/ntpdate -u cdh-master三、所有节点安装jdk
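One loose end from the time-sync step (8): the cron line can be installed without opening an editor, and without adding it twice on a re-run. The sketch below assumes the job line shown in step (8); the names `SYNC_JOB` and `with_sync_job` are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: read an existing crontab on stdin and print it with
# the sync job appended, unless an identical line is already there.
SYNC_JOB='*/20 * * * * /usr/sbin/ntpdate -u cdh-master'

with_sync_job() {
    existing=$(cat)
    if printf '%s\n' "$existing" | grep -qxF -- "$SYNC_JOB"; then
        printf '%s\n' "$existing"                    # already installed, pass through
    elif [ -n "$existing" ]; then
        printf '%s\n%s\n' "$existing" "$SYNC_JOB"    # keep other jobs, append ours
    else
        printf '%s\n' "$SYNC_JOB"                    # empty crontab
    fi
}

# On a slave node this could be wired up as (not executed here):
#   crontab -l 2>/dev/null | with_sync_job | crontab -
```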

Taking the master node as an example:

[root@cdh-master ~]# mkdir /usr/java

[root@cdh-master ~]# tar -zxf jdk-8u231-linux-x64.tar.gz -C /usr/java/

[root@cdh-master ~]# chown -R root:root /usr/java/jdk1.8.0_231

[root@cdh-master ~]# echo "export JAVA_HOME=/usr/java/jdk1.8.0_231" >> /etc/profile

[root@cdh-master ~]# echo 'export PATH=/usr/java/jdk1.8.0_231/bin:${PATH}' >> /etc/profile

[root@cdh-master ~]# source /etc/profile

[root@cdh-master ~]# which java

/usr/java/jdk1.8.0_231/bin/java

[root@cdh-master ~]# java -version

java version "1.8.0_231"

Java(TM) SE Runtime Environment (build 1.8.0_231-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.231-b11, mixed mode)

IV. Deploy the JDBC driver on the master node
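The JDK transcript covers only the master, while the JDK is needed on every node. The extraction part could be repeated on the slaves with a loop like the one below. This is a sketch under assumptions: passwordless SSH from step 5 is working, the tarball sits in /opt/software on the master, and the helper names (`run`, `install_jdk_on_slaves`) are hypothetical. With `DRY_RUN=1`, the default here, it only prints the commands it would run.

```shell
#!/bin/sh
# Hypothetical sketch: copy and unpack the JDK on the slave nodes over SSH.
DRY_RUN="${DRY_RUN:-1}"
JDK_FILE="jdk-8u231-linux-x64.tar.gz"
JDK_TARBALL="/opt/software/$JDK_FILE"     # assumed upload location
SLAVES="cdh-slave01 cdh-slave02 cdh-slave03"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"        # print instead of executing
    else
        "$@"
    fi
}

install_jdk_on_slaves() {
    for node in $SLAVES; do
        run scp "$JDK_TARBALL" "$node:/tmp/"
        run ssh "$node" "mkdir -p /usr/java && tar -zxf /tmp/$JDK_FILE -C /usr/java/ && chown -R root:root /usr/java/jdk1.8.0_231"
    done
}

# install_jdk_on_slaves              # dry run: prints six commands
# DRY_RUN=0 install_jdk_on_slaves    # actually runs them
```

The two /etc/profile lines still have to be added on each slave afterwards.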

mkdir -p /usr/share/java/

cp mysql-connector-java-5.1.47.jar /usr/share/java/mysql-connector-java.jar

V. Install MySQL

1. Extract:

[root@cdh-master software]# tar -zxvf mysql-5.7.11-linux-glibc2.5-x86_64.tar.gz -C /usr/local/

2. Rename:

[root@cdh-master software]# cd /usr/local/

[root@cdh-master local]# mv mysql-5.7.11-linux-glibc2.5-x86_64 mysql

3. Create directories:

[root@cdh-master local]# mkdir mysql/arch mysql/data mysql/tmp

4. Create /etc/my.cnf:

[client]

port = 3306

socket = /usr/local/mysql/data/mysql.sock

default-character-set=utf8mb4

[mysqld]

port = 3306

socket = /usr/local/mysql/data/mysql.sock

skip-slave-start

skip-external-locking

key_buffer_size = 256M

sort_buffer_size = 2M

read_buffer_size = 2M

read_rnd_buffer_size = 4M

query_cache_size= 32M

max_allowed_packet = 16M

myisam_sort_buffer_size=128M

tmp_table_size=32M

table_open_cache = 512

thread_cache_size = 8

wait_timeout = 86400

interactive_timeout = 86400

max_connections = 600

# Try number of CPU's*2 for thread_concurrency

#thread_concurrency = 32

#isolation level and default engine

default-storage-engine = INNODB

transaction-isolation = READ-COMMITTED

server-id = 1739

basedir = /usr/local/mysql

datadir = /usr/local/mysql/data

pid-file = /usr/local/mysql/data/hostname.pid

#open performance schema

log-warnings

sysdate-is-now

binlog_format = ROW

log_bin_trust_function_creators=1

log-error = /usr/local/mysql/data/hostname.err

log-bin = /usr/local/mysql/arch/mysql-bin

expire_logs_days = 7

innodb_write_io_threads=16

relay-log = /usr/local/mysql/relay_log/relay-log

relay-log-index = /usr/local/mysql/relay_log/relay-log.index

relay_log_info_file= /usr/local/mysql/relay_log/relay-log.info

log_slave_updates=1

gtid_mode=OFF

enforce_gtid_consistency=OFF

# slave

slave-parallel-type=LOGICAL_CLOCK

slave-parallel-workers=4

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log_recovery=ON

#other logs

#general_log =1

#general_log_file = /usr/local/mysql/data/general_log.err

#slow_query_log=1

#slow_query_log_file=/usr/local/mysql/data/slow_log.err

#for replication slave

sync_binlog = 500

#for innodb options

innodb_data_home_dir = /usr/local/mysql/data/

innodb_data_file_path = ibdata1:1G;ibdata2:1G:autoextend

innodb_log_group_home_dir = /usr/local/mysql/arch

innodb_log_files_in_group = 4

innodb_log_file_size = 1G

innodb_log_buffer_size = 200M

# Adjust the pool size to match production needs

innodb_buffer_pool_size = 2G

#innodb_additional_mem_pool_size = 50M #deprecated in 5.6

tmpdir = /usr/local/mysql/tmp

innodb_lock_wait_timeout = 1000

#innodb_thread_concurrency = 0

innodb_flush_log_at_trx_commit = 2

innodb_locks_unsafe_for_binlog=1

#innodb io features: add for mysql5.5.8

performance_schema

innodb_read_io_threads=4

innodb-write-io-threads=4

innodb-io-capacity=200

#purge threads change default(0) to 1 for purge

innodb_purge_threads=1

innodb_use_native_aio=on

#case-sensitive file names and separate tablespace

innodb_file_per_table = 1

lower_case_table_names=1

[mysqldump]

quick

max_allowed_packet = 128M

[mysql]

no-auto-rehash

default-character-set=utf8mb4

[mysqlhotcopy]

interactive-timeout

[myisamchk]

key_buffer_size = 256M

sort_buffer_size = 256M

read_buffer = 2M

write_buffer = 2M

5. Create the group and user
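A remark on the my.cnf listing before creating the user: in a file this long it is easy to set the same option twice, and the listing does so with innodb_write_io_threads (16 near the binlog settings, then 4 as innodb-write-io-threads further down). A small lint along the following lines can surface such cases. The function name `find_duplicate_options` is an assumption for illustration; the check is a plain text scan, not a MySQL feature.

```shell
#!/bin/sh
# Hypothetical helper: report options that appear more than once within one
# [section] of a my.cnf-style file. Dashes and underscores in option names
# are treated as the same character.
find_duplicate_options() {
    awk '
        /^[ \t]*$/    { next }                  # blank line
        /^[ \t]*[#;]/ { next }                  # comment line
        /^[ \t]*\[/   { section = $0; next }    # remember the current [section]
        {
            key = $0
            sub(/[ \t]*=.*/, "", key)           # drop "= value"
            gsub(/^[ \t]+|[ \t]+$/, "", key)    # trim whitespace
            gsub(/-/, "_", key)                 # normalise dashes
            if (seen[section, key]++) print section " " key
        }
    ' "$1"
}

# Example:
#   find_duplicate_options /etc/my.cnf
```

Which of the two values is intended is a decision for whoever owns the configuration; the sketch only points at the conflict.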

[root@cdh-master local]# groupadd -g 101 dba

[root@cdh-master local]# useradd -u 514 -g dba -G root -d /usr/local/mysql mysqladmin

[root@cdh-master local]# id mysqladmin

uid=514(mysqladmin) gid=101(dba) groups=101(dba),0(root)

Set a password for mysqladmin:

[root@cdh-master local]# passwd mysqladmin

If the user already exists, run this command instead:

[root@cdh-master local]# usermod -u 514 -g dba -G root -d /usr/local/mysql mysqladmin

6. Copy the environment configuration files into the mysqladmin user's home directory:

[root@cdh-master local]# cp /etc/skel/.* /usr/local/mysql

7. Configure environment variables

[root@cdh-master local]# vi mysql/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

export MYSQL_BASE=/usr/local/mysql

export PATH=${MYSQL_BASE}/bin:$PATH

unset USERNAME

#stty erase ^H

# set umask to 022

umask 022

PS1=`uname -n`":"'$USER'":"'$PWD'":>"; export PS1

## end

8. Set ownership and permissions

[root@cdh-master local]# chown mysqladmin:dba /etc/my.cnf

[root@cdh-master local]# chmod 640 /etc/my.cnf

[root@cdh-master local]# chown -R mysqladmin:dba /usr/local/mysql

[root@cdh-master local]# chmod -R 755 /usr/local/mysql

9. Configure the service and enable start on boot:

[root@cdh-master local]# cd /usr/local/mysql

# Copy the service script into init.d and rename it to mysql

[root@cdh-master mysql]# cp ./support-files/mysql.server /etc/rc.d/init.d/mysql

# Make it executable

[root@cdh-master mysql]# chmod +x /etc/rc.d/init.d/mysql

# Remove any existing service entry

[root@cdh-master mysql]# chkconfig --del mysql

# Add the service

[root@cdh-master mysql]# chkconfig --add mysql

[root@cdh-master mysql]# chkconfig --level 345 mysql on

10. Install libaio and initialize the MySQL data directory:

[root@cdh-master mysql]# yum -y install libaio

[root@cdh-master mysql]# su - mysqladmin

After switching to the mysqladmin user, run the following command:

bin/mysqld \
--defaults-file=/etc/my.cnf \
--user=mysqladmin \
--basedir=/usr/local/mysql/ \
--datadir=/usr/local/mysql/data/ \
--initialize

11. Look up the temporary password:

cat data/hostname.err |grep password

2017-07-22T02:15:29.439671Z 1 [Note] A temporary password is generated for root@localhost: tZgX7N-n;wO_

12. Start:
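Rather than copying the temporary password out of the grep output by hand, it can be pulled from the error log with a one-line filter. This sketch relies on the message format in the sample output and on the log path set in my.cnf; the function name `temp_root_password` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the temporary root password from a mysqld error log.
# Relies on the message format "... generated for root@localhost: <password>".
temp_root_password() {
    sed -n 's/.*A temporary password is generated for root@localhost: //p' "$1" | tail -n 1
}

# Example (after initialization, as mysqladmin):
#   temp_root_password /usr/local/mysql/data/hostname.err
```

If the data directory was initialized more than once, `tail -n 1` keeps only the most recent entry.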

/usr/local/mysql/bin/mysqld_safe --defaults-file=/etc/my.cnf &

13. Log in to MySQL:

mysql -uroot -p'tZgX7N-n;wO_'

14. Change the password and grant privileges:

alter user root@localhost identified by 'p@ssw0rd';

GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY 'p@ssw0rd';

flush privileges;

15. Exit and restart MySQL for the changes to take effect:

service mysql restart

16. Log in to MySQL again and create the databases the cluster services need:

create database cmf DEFAULT CHARACTER SET utf8;

create database amon DEFAULT CHARACTER SET utf8;

grant all on cmf.* TO 'cmf'@'%' IDENTIFIED BY 'admin';

grant all on amon.* TO 'amon'@'%' IDENTIFIED BY 'admin';

flush privileges;

create database hive DEFAULT CHARACTER SET utf8;

grant all on hive.* TO 'hive'@'%' IDENTIFIED BY 'admin';

flush privileges;

create database oozie DEFAULT CHARACTER SET utf8;

grant all on oozie.* TO 'oozie'@'%' IDENTIFIED BY 'admin';

flush privileges;

create database hue DEFAULT CHARACTER SET utf8;

grant all on hue.* TO 'hue'@'%' IDENTIFIED BY 'admin';

flush privileges;

VI. Deploy CDH
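A note on the database step before the CM packages: the five create/grant pairs differ only in the name, so they can be generated instead of typed. The sketch below prints the same statements for a list of names. It assumes, as in the statements above, that each database has a user of the same name; `emit_cdh_sql` is an illustrative name.

```shell
#!/bin/sh
# Hypothetical generator: print a CREATE DATABASE / GRANT pair per service,
# followed by a single FLUSH PRIVILEGES.
emit_cdh_sql() {
    password="$1"; shift
    for db in "$@"; do
        printf "create database %s DEFAULT CHARACTER SET utf8;\n" "$db"
        printf "grant all on %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$db" "$db" "$password"
    done
    printf 'flush privileges;\n'
}

# Example (prints SQL only; pipe it into the mysql client to apply):
#   emit_cdh_sql admin cmf amon hive oozie hue | mysql -uroot -p
```

The password 'admin' mirrors the original statements; a stronger per-service password is a sensible substitution in anything beyond a test cluster.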

6.1 Deploy the CM server and agents offline

6.1.1 Create the directory and extract the software

Run the following on all nodes:

mkdir /opt/cloudera-manager

tar -xzvf cm6.3.1-redhat7.tar.gz -C /opt/cloudera-manager/

6.1.2 Deploy cdh-master as the CM server node

cd /opt/cloudera-manager/cm6.3.1/RPMS/x86_64

rpm -ivh cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm --nodeps --force

rpm -ivh cloudera-manager-server-6.3.1-1466458.el7.x86_64.rpm --nodeps --force

6.1.3 Deploy all four nodes as CM agent nodes

cd /opt/cloudera-manager/cm6.3.1/RPMS/x86_64

rpm -ivh cloudera-manager-daemons-6.3.1-1466458.el7.x86_64.rpm --nodeps --force

rpm -ivh cloudera-manager-agent-6.3.1-1466458.el7.x86_64.rpm --nodeps --force

6.1.4 Point the agent configuration on all nodes at the server node

sed -i "s/server_host=localhost/server_host=cdh-master/g" /etc/cloudera-scm-agent/config.ini

6.1.5 Edit the server configuration on the master node
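One caveat about the sed command in 6.1.4: it only matches the default value `localhost`, so it silently does nothing if the file has already been edited. A variant that replaces whatever value is present, and reports failure when the key is missing, might look like this. The function name `set_server_host` is an assumption, and GNU sed's `-i` is assumed as in the original command.

```shell
#!/bin/sh
# Hypothetical helper: set server_host in an agent config.ini regardless of
# its current value, then confirm the line is really there.
set_server_host() {
    conf="$1"; host="$2"
    sed -i "s/^server_host=.*/server_host=${host}/" "$conf"
    grep -qxF "server_host=${host}" "$conf"   # non-zero exit if the key was not found
}

# Example (on every node):
#   set_server_host /etc/cloudera-scm-agent/config.ini cdh-master || echo "server_host not set"
```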

vi /etc/cloudera-scm-server/db.properties

com.cloudera.cmf.db.type=mysql

com.cloudera.cmf.db.host=cdh-master

com.cloudera.cmf.db.name=cmf

com.cloudera.cmf.db.user=cmf

com.cloudera.cmf.db.password=admin

com.cloudera.cmf.db.setupType=EXTERNAL

6.2 Deploy the offline parcel repository on the master node

6.2.1 Install httpd

yum install -y httpd

6.2.2 Deploy the offline parcel repository

Create the repository directory and copy the parcel files into it, saving the .sha1 file with a .sha extension:

mkdir -p /var/www/html/cdh6_parcel

[root@cdh-master CDH6.3.1]# cp ./CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel /var/www/html/cdh6_parcel/

[root@cdh-master CDH6.3.1]# cp ./CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha1 /var/www/html/cdh6_parcel/CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha

[root@cdh-master CDH6.3.1]# cp ./manifest.json /var/www/html/cdh6_parcel/

6.2.3 Start httpd

systemctl start httpd

Open the following address in a browser to check that it is reachable:

http://cdh-master/cdh6_parcel/

6.2.4 Start the server on the master node
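Before the server is started, it may be worth confirming that the parcel copied into the repository is intact, since a truncated download tends to show up only later during distribution. The sketch below compares the parcel's SHA-1 with the digest stored next to it. It assumes the first field of the .sha file is the hex digest; `verify_parcel` is an illustrative name.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the parcel's SHA-1 matches the digest
# stored in the accompanying hash file.
verify_parcel() {
    parcel="$1"; sha_file="$2"
    expected=$(awk '{print $1; exit}' "$sha_file")    # first field of the first line
    actual=$(sha1sum "$parcel" | awk '{print $1}')
    [ "$expected" = "$actual" ]
}

# Example:
#   cd /var/www/html/cdh6_parcel
#   verify_parcel CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel \
#                 CDH-6.3.1-1.cdh6.3.1.p0.1470567-el7.parcel.sha && echo "parcel OK"
```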

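systemctl start cloudera-scm-server

Check the server log:

tail -f /var/log/cloudera-scm-server/cloudera-scm-server.log

If nothing goes wrong, the web console becomes reachable after a while at:

cdh-master:7180

6.2.5 Start the agent on all nodes

systemctl start cloudera-scm-agent

After that it is just a matter of clicking through the installation wizard and following the prompts step by step.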
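As for the "after a while" in 6.2.4: instead of refreshing the browser, the wait can be scripted by polling the console address until it answers. This is a sketch that assumes curl is installed; the function name `wait_for_url` and its default of 60 attempts at 5-second intervals are arbitrary choices for illustration.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until it returns any HTTP response, or give
# up after a fixed number of attempts.
wait_for_url() {
    url="$1"; attempts="${2:-60}"; interval="${3:-5}"
    i=0
    while [ "$i" -lt "$attempts" ]; do
        if curl -s -o /dev/null --max-time 3 "$url"; then
            return 0                    # something answered on that address
        fi
        i=$((i + 1))
        sleep "$interval"
    done
    return 1                            # never came up within the allotted tries
}

# Example:
#   wait_for_url http://cdh-master:7180/ && echo "Cloudera Manager is up"
```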

If you run into problems along the way, leave a comment and I will take a look.