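Training YOLOv3 on Your Own Data

Preface: I had long heard that YOLOv3 has a high recognition rate and fast detection ("fast as lightning, the light of object detection"). I have recently been studying YOLOv3 and trained it on my own dataset. I ran into many problems along the way, so I am writing this post to record them.

I. Compile the source code and run the demo

To run the demo I mainly used YunYang1994's code, available on GitHub at https://github.com/YunYang1994/tensorflow-yolov3 . If you want to go straight to training on your own dataset, you can skip this section and start from Section II, data annotation.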

- 1. Download the code:

$ git clone https://github.com/YunYang1994/tensorflow-yolov3.git

- 2. Install the dependencies:

$ cd tensorflow-yolov3

$ pip install -r ./docs/requirements.txt

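- 3. Next, download the weight file yolov3.weights (the Xunlei downloader is faster) and put it in the ./checkpoint directory.

- 4. Download the COCO-trained model files and convert them (there is a Baidu Cloud link if the download is slow). They also go in the ./checkpoint directory: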

$ cd checkpoint

$ wget https://github.com/YunYang1994/tensorflow-yolov3/releases/download/v1.0/yolov3_coco.tar.gz

$ tar -xvf yolov3_coco.tar.gz

$ cd ..

$ python convert_weight.py

$ python freeze_graph.py

- 5. Once you have the .pb file, you can run the demos:

$ python image_demo.py

$ python video_demo.py # to use a camera, set video_path = 0

II. Data annotation

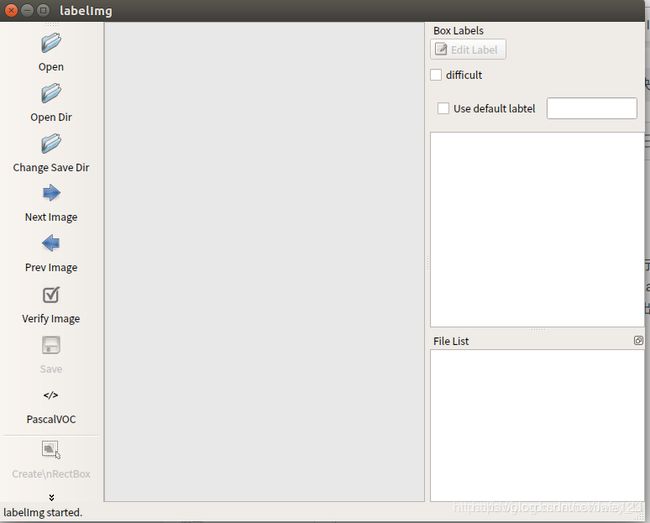

- 1. For annotation I chose labelImg. Installing it went fairly smoothly. Here is a blog post you can refer to: Installing labelImg

- 2. My environment is Python 3 + Qt5:

$ sudo apt-get install pyqt5-dev-tools

$ sudo pip3 install lxml

$ make qt5py3

$ python3 labelImg.py
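Once the images are labelled, check which class names actually occur in the xml files. The conversion script in Section III looks up every name in its classes list and stops with a ValueError on any name that is missing or misspelled. The sketch below is minimal and assumes labelImg's Pascal VOC output, with one <object><name> per box. collect_class_names and collect_from_folder are hypothetical helpers and are not part of labelImg:

```python
import xml.etree.ElementTree as ET
from collections import Counter
from pathlib import Path


def collect_class_names(xml_texts):
    """Count how often each <object><name> occurs across VOC-style xml strings."""
    counts = Counter()
    for text in xml_texts:
        root = ET.fromstring(text)
        for obj in root.iter('object'):
            counts[obj.find('name').text.strip()] += 1  # strip stray spaces typed in labelImg
    return counts


def collect_from_folder(annotations_dir):
    """Read every .xml file in a folder (e.g. VOC2007/Annotations) and count class names."""
    paths = sorted(Path(annotations_dir).glob('*.xml'))
    return collect_class_names(p.read_text(encoding='utf-8') for p in paths)


if __name__ == '__main__':
    sample = """<annotation>
  <size><width>400</width><height>200</height><depth>3</depth></size>
  <object><name>dog</name><bndbox><xmin>1</xmin><ymin>2</ymin><xmax>3</xmax><ymax>4</ymax></bndbox></object>
  <object><name>person</name><bndbox><xmin>5</xmin><ymin>6</ymin><xmax>7</xmax><ymax>8</ymax></bndbox></object>
</annotation>"""
    print(collect_class_names([sample]))
```

For example, collect_from_folder('VOC2007/Annotations').most_common() lists every class name with its box count. Compare that list with the classes list before running the conversion.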

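III. Build the dataset

- 1. When choosing a dataset, first decide what you want to detect: 1 class, 2 classes, or 10 classes. You can download images from JD.com or Taobao, or use the VOC dataset or competition datasets.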

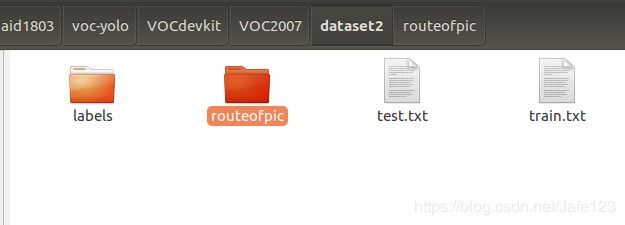

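- 2. Create a folder named VOCdevkit and a VOC2007 folder inside it. Then create 3 folders inside VOC2007:

Annotations: holds your xml files

JPEGImages: holds your images

dataset2: holds the generated labels and txt files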
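The same folder layout can also be created from Python. This is a small sketch: make_voc_layout is a hypothetical helper, and root is whatever directory you want VOCdevkit to live in:

```python
import os


def make_voc_layout(root):
    """Create VOCdevkit/VOC2007/{Annotations,JPEGImages,dataset2} under root."""
    voc = os.path.join(root, 'VOCdevkit', 'VOC2007')
    created = []
    for sub in ('Annotations', 'JPEGImages', 'dataset2'):
        path = os.path.join(voc, sub)
        os.makedirs(path, exist_ok=True)  # no error if the folder already exists
        created.append(path)
    return created
```

make_voc_layout('.') creates ./VOCdevkit/VOC2007/Annotations, JPEGImages and dataset2. It is safe to rerun.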

- 3. labelImg saves annotations in xml format. These must be converted to YOLO format, so a conversion script is needed.

- 4. First, generate the image lists train.txt and test.txt:

# Generate train.txt, trainval.txt, val.txt
# or generate train.txt, test.txt
import os

if __name__ == '__main__':
    source_folder = '/home/tarena/aid1803/voc-yolo/VOC2007/JPEGImages'  # folder holding all images
    # dest = '/home/tarena/aid1803/voc-yolo/VOC2007/dataset2/train.txt'  # where train.txt is saved
    dest2 = '/home/tarena/aid1803/voc-yolo/VOC2007/dataset2/test.txt'  # where the val/test list is saved (test set)
    dest3 = '/home/tarena/aid1803/voc-yolo/VOC2007/dataset2/trainval.txt'  # where trainval.txt is saved
    file_list = sorted(os.listdir(source_folder))  # image file names, sorted so the split is reproducible

    # 'w' rather than 'a', so rerunning the script does not append duplicate ids
    # train_file = open(dest, 'w')
    val_file = open(dest2, 'w')
    trainval_file = open(dest3, 'w')

    file_num = 0
    for file_obj in file_list:  # visit every file in the folder
        # file_name is the name without its extension, file_extend is the extension
        file_name, file_extend = os.path.splitext(file_obj)
        # The author had about 4950 images: indices 3801..4945 go to trainval.txt, the rest to test.txt.
        # Adjust the bounds to your own image count. A chained comparison is needed here:
        # `file_num>3800 & file_num<4946` parses wrongly because & binds tighter than > and <.
        if 3800 < file_num < 4946:
            trainval_file.write(file_name + '\n')
            # train_file.write(file_name + '\n')
        else:
            val_file.write(file_name + '\n')  # all other ids go to test.txt
        file_num += 1

    # train_file.close()
    val_file.close()
    trainval_file.close()
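The script above splits the images by their position in the folder listing, so file order decides which images land in which list. An alternative, which is not the author's method, is a seeded random split by ratio. This is a minimal sketch in which split_ids, write_list and the 0.8 ratio are assumptions:

```python
import random


def split_ids(image_ids, train_ratio=0.8, seed=0):
    """Shuffle image ids with a fixed seed and split them into (train, test) lists."""
    ids = sorted(image_ids)        # sort first so the result does not depend on listdir order
    rng = random.Random(seed)      # private generator: reproducible, leaves the global RNG alone
    rng.shuffle(ids)
    cut = int(len(ids) * train_ratio)
    return ids[:cut], ids[cut:]


def write_list(path, ids):
    """Write one id per line, the format the label script reads back with .split()."""
    with open(path, 'w') as f:
        f.writelines(i + '\n' for i in ids)


if __name__ == '__main__':
    ids = ['%06d' % i for i in range(10)]
    train, test = split_ids(ids)
    print(len(train), len(test))  # 8 2
```

With real data, the ids would come from [os.path.splitext(f)[0] for f in os.listdir(source_folder)], and write_list would save the two halves as trainval.txt and test.txt.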

- 5. Next, generate the label files and the file that lists the image paths:

# 在xml文件中提取,转换成txt文件,生成路径

import xml.etree.ElementTree as ET

import pickle

import os

from os import listdir, getcwd

from os.path import join

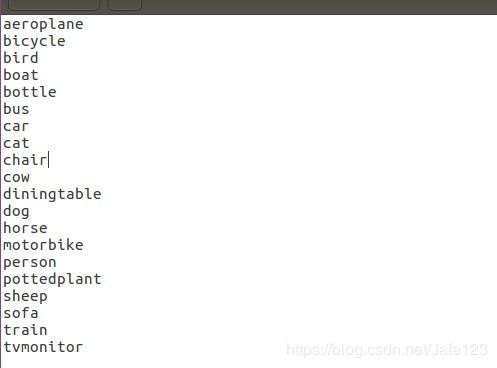

classes = ["aeroplane","bicycle","bird","boat","bus","car","chair","cow","diningtable","dog","horse",

"motorbike","person","pottedplant","sheep","sofa","train","tvmonitor","cat",

"bottle"] #对应里面的name,按照实际情况修改

#这个函数是voc自己的不用修改,在下面的函数中调用

def convert(size, box):

dw = 1./size[0]

dh = 1./size[1]

x = (box[0] + box[1])/2.0

y = (box[2] + box[3])/2.0

w = box[1] - box[0]

h = box[3] - box[2]

x = x*dw

w = w*dw

y = y*dh

h = h*dh

return (x,y,w,h)

#生成标签函数,从xml文件中提取有用信息写入txt文件

def convert_annotation(image_id):

in_file = open('/home/tarena/aid1803/voc-yolo/VOC2007/Annotations/%s.xml'%(image_id)) #Annotations文件夹地址

out_file = open('/home/tarena/aid1803/voc-yolo/VOC2007/dataset2/labels/%s.txt'%(image_id), 'w') #labels文件夹地址

tree=ET.parse(in_file)

root = tree.getroot()

size = root.find('size')

w = int(size.find('width').text)

h = int(size.find('height').text)

for obj in root.iter('object'):

difficult = obj.find('difficult').text

cls = obj.find('name').text

cls_id = classes.index(cls)

xmlbox = obj.find('bndbox')

b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text), float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))

bb = convert((w,h), b)

out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')

if __name__ == '__main__':

if not os.path.exists('/home/tarena/aid1803/voc-yolo/VOC2007/dataset2/labels'): # 不存在文件夹

os.makedirs('/home/tarena/aid1803/voc-yolo/VOC2007/dataset2/labels')

#主函数,从之前生成的train.txt/val.txt/trainval.txt获取文件名循环,3个文件各执行1次

image_ids = open('/home/tarena/aid1803/voc-yolo/VOC2007/dataset2/train.txt').read().strip().split() #之前生成的train.txt/val.txt/trainval.txt地址

#image_ids = open('/home/tarena/aid1803/voc-yolo/VOC2007/dataset/trainval.txt').read().strip().split() #之前生成的train.txt/val.txt/trainval.txt地址

#image_ids = open('/home/tarena/aid1803/voc-yolo/VOC2007/dataset/val.txt').read().strip().split() #之前生成的train.txt/val.txt/trainval.txt地址

#image_ids = open('/home/tarena/aid1803/voc-yolo/VOC2007/dataset2/test.txt').read().strip().split() #之前生成的train.txt/val.txt/trainval.txt地址

#list_file = open('/home/tarena/aid1803/voc-yolo/VOC2007/dataset2/routeofpic/infrared_train.txt', 'w') #你希望的图片路径txt保存地址

list_file = open('/home/tarena/aid1803/voc-yolo/VOC2007/dataset2/routeofpic/infrared_vol.txt', 'w') #你希望的图片路径txt保存地址

for image_id in image_ids:

list_file.write('/home/tarena/aid1803/voc-yolo/VOC2007/JPEGImages/%s.jpg\n'%(image_id)) #你实际的图片路径,这句话是直接写入程序务必写对

convert_annotation(image_id)

list_file.close()
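To sanity-check the generated labels, convert a line back to pixel coordinates and compare it with the box in labelImg. This is a minimal sketch. yolo_to_voc inverts the convert function above and returns (xmin, xmax, ymin, ymax), the same order convert takes. Both helper names are hypothetical:

```python
def yolo_to_voc(size, yolo_box):
    """Convert a normalized (x_center, y_center, w, h) box back to pixel (xmin, xmax, ymin, ymax)."""
    img_w, img_h = size
    x, y, w, h = yolo_box
    xmin = (x - w / 2) * img_w
    xmax = (x + w / 2) * img_w
    ymin = (y - h / 2) * img_h
    ymax = (y + h / 2) * img_h
    return (xmin, xmax, ymin, ymax)


def parse_label_line(line):
    """Parse one 'cls x y w h' line of a darknet label file."""
    parts = line.split()
    return int(parts[0]), tuple(float(v) for v in parts[1:5])


if __name__ == '__main__':
    cls_id, box = parse_label_line('11 0.5 0.5 0.5 0.5')
    print(cls_id, yolo_to_voc((400, 200), box))  # 11 (100.0, 300.0, 50.0, 150.0)
```

If the recovered box does not match what you drew, the usual suspects are a width/height mix-up in the xml <size> or boxes passed in the wrong order.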

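IV. Download and compile the source code

- 1. To train on my own dataset I used the original author's darknet. You can of course also try training with YunYang1994's code.

$ git clone https://github.com/pjreddie/darknet

- 2. Compile the code: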

$ cd darknet

$ vim Makefile # in CPU mode the Makefile needs no changes

# Comments are kept on their own lines: GNU make keeps the whitespace before a trailing # as part of a variable's value
# 1 to build with GPU support, 0 for CPU only
GPU=1
# 1 to use cuDNN, otherwise 0
CUDNN=1
# set to 1 if you want to use a camera (OpenCV), otherwise 0
OPENCV=0
# 1 to use OpenMP, otherwise 0
OPENMP=0
# 1 for a debug build, otherwise 0
DEBUG=0
CC=gcc
# the default is NVCC=nvcc; change it to your own path
NVCC=/usr/local/cuda-10.0/bin/nvcc
AR=ar
ARFLAGS=rcs
OPTS=-Ofast
LDFLAGS= -lm -pthread
COMMON= -Iinclude/ -Isrc/
CFLAGS=-Wall -Wno-unused-result -Wno-unknown-pragmas -Wfatal-errors -fPIC
...
ifeq ($(GPU), 1)
# change the CUDA paths below to your own
COMMON+= -DGPU -I/usr/local/cuda-10.0/include/
CFLAGS+= -DGPU
LDFLAGS+= -L/usr/local/cuda-10.0/lib64 -lcuda -lcudart -lcublas -lcurand
endif

$ make
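If you rebuild on several machines, you can set the flags at the top of the Makefile with a script instead of vim. This sketch assumes each flag sits on its own line in NAME=value form, as in darknet's Makefile. set_make_vars is a hypothetical helper. It rewrites the whole line, so any trailing comment on that line is dropped:

```python
import re


def set_make_vars(makefile_text, values):
    """Return makefile_text with each 'NAME=...' line rewritten to 'NAME=values[NAME]'."""
    out = []
    for line in makefile_text.splitlines(keepends=True):
        m = re.match(r'([A-Z_]+)=', line)   # plain assignments only; 'COMMON+=' does not match
        if m and m.group(1) in values:
            end = '\n' if line.endswith('\n') else ''
            line = '%s=%s%s' % (m.group(1), values[m.group(1)], end)
        out.append(line)
    return ''.join(out)


if __name__ == '__main__':
    sample = 'GPU=0\nCUDNN=0\nOPENCV=0\nNVCC=nvcc\nCOMMON+= -DGPU\n'
    print(set_make_vars(sample, {'GPU': 1, 'CUDNN': 1, 'NVCC': '/usr/local/cuda-10.0/bin/nvcc'}))
```

To apply it to the real file from the darknet directory: p = pathlib.Path('Makefile'); p.write_text(set_make_vars(p.read_text(), {'GPU': 1, 'CUDNN': 1})), then run make again.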

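If compilation fails with the following error (if it does not, skip the rest of this part):

/bin/sh: 1: nvcc: not found

and you don't know where CUDA is installed for the paths further down, you only need to fill in the CUDA location on the NVCC line. The top of the Makefile then looks like this: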

GPU=1
CUDNN=0
OPENCV=0
OPENMP=0
DEBUG=0

ARCH= -gencode arch=compute_30,code=sm_30 \
      -gencode arch=compute_35,code=sm_35 \
      -gencode arch=compute_50,code=[sm_50,compute_50] \
      -gencode arch=compute_52,code=[sm_52,compute_52]
#      -gencode arch=compute_20,code=[sm_20,sm_21] \ This one is deprecated?

# This is what I use, uncomment if you know your arch and want to specify
# ARCH= -gencode arch=compute_52,code=compute_52

VPATH=./src/:./examples
SLIB=libdarknet.so
ALIB=libdarknet.a
EXEC=darknet
OBJDIR=./obj/

CC=gcc
CPP=g++
# only change this CUDA path: point it at your nvcc
NVCC=/usr/local/cuda-10.0/bin/nvcc
AR=ar
ARFLAGS=rcs
OPTS=-Ofast
LDFLAGS= -lm -pthread
COMMON= -Iinclude/ -Isrc/
CFLAGS=-Wall -Wno-unused-result -Wno-unknown-pragmas -Wfatal-errors -fPIC

ifeq ($(OPENMP), 1)
CFLAGS+= -fopenmp
endif

ifeq ($(DEBUG), 1)
OPTS=-O0 -g
endif

CFLAGS+=$(OPTS)

ifeq ($(OPENCV), 1)
COMMON+= -DOPENCV
CFLAGS+= -DOPENCV
LDFLAGS+= `pkg-config --libs opencv` -lstdc++
COMMON+= `pkg-config --cflags opencv`
endif

ifeq ($(GPU), 1)
# the /usr/local/cuda paths here need no change
COMMON+= -DGPU -I/usr/local/cuda/include/
CFLAGS+= -DGPU
LDFLAGS+= -L/usr/local/cuda/lib64 -lcuda -lcudart -lcublas -lcurand
endif

How do you find where nvcc is? Look in /usr/local:

$ cd /usr/local/
$ ls
bin cuda cuda-10.0 etc games include lib man sbin share src
$ ll
total 44
drwxr-xr-x 11 root root 4096 May 25 18:24 ./
drwxr-xr-x 10 root root 4096 May 25 18:12 ../
drwxr-xr-x 2 root root 4096 May 25 18:15 bin/
lrwxrwxrwx 1 root root 19 May 25 18:24 cuda -> /usr/local/cuda-10.0/
drwxr-xr-x 17 root root 4096 May 25 18:24 cuda-10.0/
drwxr-xr-x 2 root root 4096 Mar 1 02:23 etc/
drwxr-xr-x 2 root root 4096 Mar 1 02:23 games/
drwxr-xr-x 2 root root 4096 Mar 1 02:23 include/
drwxr-xr-x 4 root root 4096 May 25 15:22 lib/
lrwxrwxrwx 1 root root 9 May 25 14:49 man -> share/man/
drwxr-xr-x 2 root root 4096 Mar 1 02:23 sbin/
drwxr-xr-x 7 root root 4096 May 25 18:12 share/
drwxr-xr-x 2 root root 4096 Mar 1 02:23 src/

$ sudo vim /etc/profile

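Add the environment variables to /etc/profile:

export PATH=/usr/local/cuda-10.0/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-10.0/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

Then reload the file (or log in again) so that the new PATH takes effect:

$ source /etc/profile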
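After reloading the profile, running which nvcc in a shell should print the path. The same check can be done from Python, falling back to a few likely CUDA folders. find_nvcc is a hypothetical helper, and its default folder list is an assumption based on the /usr/local layout shown above:

```python
import shutil


def find_nvcc(extra_dirs=('/usr/local/cuda/bin', '/usr/local/cuda-10.0/bin')):
    """Return the path of nvcc: first via PATH, then in the given CUDA bin folders, else None."""
    found = shutil.which('nvcc')  # searches the current PATH
    if found:
        return found
    for d in extra_dirs:
        candidate = shutil.which('nvcc', path=d)  # only returns executable files
        if candidate:
            return candidate
    return None


if __name__ == '__main__':
    print(find_nvcc() or 'nvcc not found; check /usr/local and your PATH')
```

If it finds nvcc only through extra_dirs, the PATH change has not taken effect in the current shell yet.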

V. Test the demo after a successful build

Download the YOLOv3 weight file yolov3.weights (the Xunlei downloader is faster) and run a test:

$ wget https://pjreddie.com/media/files/yolov3.weights

$ ./darknet detect cfg/yolov3.cfg yolov3.weights data/dog.jpg

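VI. Local changes after a successful build

- 1. Edit cfg/voc.data. Put comments on separate lines starting with #. darknet only skips whole comment lines, so a comment after a value (for example after the train path) becomes part of that value.

# classes: total number of classes in the training set
classes= 20
# train: path of the list of training images
train = /root/data/tensorflow-yolov3-darknet/darknet/VOCdevkit/VOC2007/dataset2/routeofpic/infrared_train.txt
# valid: path of the list of validation images
valid = /root/data/tensorflow-yolov3-darknet/darknet/VOCdevkit/VOC2007/dataset2/routeofpic/infrared_val.txt
# names: path of the data/voc.names file
names = data/voc.names
backup = backup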
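The .data and .names files can also be generated from the same class list the conversion script uses, which keeps the class count consistent. In this sketch, write_data_files and its default paths are hypothetical. It writes no inline comments because darknet would read them as part of the value:

```python
import os


def write_data_files(classes, train_list, valid_list, data_path='cfg/voc.data',
                     names_path='data/voc.names', backup='backup'):
    """Write a darknet .data file plus the matching .names file (one class per line)."""
    with open(names_path, 'w') as f:
        f.write('\n'.join(classes) + '\n')
    lines = [
        'classes= %d' % len(classes),  # must equal classes= in every [yolo] section of the cfg
        'train = %s' % train_list,
        'valid = %s' % valid_list,
        'names = %s' % names_path,
        'backup = %s' % backup,
    ]
    with open(data_path, 'w') as f:
        f.write('\n'.join(lines) + '\n')
    os.makedirs(backup, exist_ok=True)  # darknet saves weights here during training
```

Run it from the darknet directory so that the relative paths match the ones darknet resolves at training time.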

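- 2. Create a folder named backup inside the darknet folder to hold the weight files produced by training.

- 3. Change data/voc.names to your dataset's class names, one per line. I wasn't sure whether coco.names also needed changing, so I changed it too. It does matter for the ./darknet detect shortcut in Section VIII, which reads its class names via cfg/coco.data.

- 4. Edit cfg/yolov3-voc.cfg: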

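Press Ctrl+F and search for yolo. There are 3 [yolo] sections in total.

Each one needs 2 changes:

filters = 3*(5+len(classes)), set in the [convolutional] layer directly above the [yolo] section;

classes = len(classes). With 10 classes as an example:

filters = 45

classes = 10

random is optional: if your GPU memory is small, set random to 0 to turn off multi-scale training.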

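[convolutional]
# darknet skips only lines that start with #, so comments go on their own lines
# BN
batch_normalize=1
# number of kernels; this 3x3 layer keeps its original value (1024 in this head)
filters=1024
# kernel size
size=3
# stride
stride=1
# padding
pad=1
# activation function
activation=leaky

[convolutional]
size=1
stride=1
pad=1
# 3*(10+4+1): only this 1x1 layer right above [yolo] changes
filters=45
activation=linear

[yolo]
mask = 6,7,8
anchors = 10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326
# number of classes
classes=10
num=9
jitter=.3
ignore_thresh = .5
truth_thresh = 1
# default is 1; if GPU memory is small, set random to 0 to turn off multi-scale training
random=0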
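With three [yolo] sections it is easy to miss one of the six edits. This sketch of a patcher assumes the usual darknet cfg layout, in which the [convolutional] that feeds each [yolo] comes directly before it. patch_yolo_cfg is a hypothetical helper:

```python
def patch_yolo_cfg(cfg_text, num_classes, random_flag=None):
    """Set classes= in every [yolo] section and filters=3*(num_classes+5) in the
    [convolutional] section directly before it; optionally set random= as well."""
    filters = 3 * (num_classes + 5)
    lines = cfg_text.splitlines()
    section = None            # header of the section currently being read
    conv_filters_idx = None   # line index of filters= in the current [convolutional]
    for i, raw in enumerate(lines):
        line = raw.strip()
        if line.startswith('['):
            # a [yolo] header right after a [convolutional]: patch that layer's filters
            if line == '[yolo]' and section == '[convolutional]' and conv_filters_idx is not None:
                lines[conv_filters_idx] = 'filters=%d' % filters
            section = line
            conv_filters_idx = None
            continue
        key = line.split('=', 1)[0].strip()
        if section == '[convolutional]' and key == 'filters':
            conv_filters_idx = i
        elif section == '[yolo]' and key == 'classes':
            lines[i] = 'classes=%d' % num_classes
        elif section == '[yolo]' and key == 'random' and random_flag is not None:
            lines[i] = 'random=%d' % random_flag
    return '\n'.join(lines) + '\n'


if __name__ == '__main__':
    sample = '[convolutional]\nsize=1\nfilters=75\nactivation=linear\n\n[yolo]\nclasses=20\nrandom=1\n'
    print(patch_yolo_cfg(sample, 10, random_flag=0))
```

To use it on the real file, read cfg/yolov3-voc.cfg, pass the text through patch_yolo_cfg, and write the result to a new cfg. Diff the two files before training.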

VII. Download the pretrained model and start training

Download the darknet53 pretrained model. If the download is slow, you can use my share: https://pan.baidu.com/s/1MW0zAHrvMbtxMKIpWYsACQ

Extraction code: hllv

$ wget https://pjreddie.com/media/files/darknet53.conv.74

Start training:

$ ./darknet detector train cfg/voc.data cfg/yolov3-voc.cfg darknet53.conv.74

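During training:

Many of the IOU values were nan, so I increased batch (set in the [net] section of the cfg). The results were somewhat better when I trained again.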
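To see how often nan shows up, save the training output (for example by piping it through tee train.log) and summarize it. This is a minimal sketch. summarize_log is a hypothetical helper, and the line formats in its comments are assumptions based on pjreddie's darknet output. As far as the source shows, Avg IOU is averaged over the objects a [yolo] layer matched in a sub-batch, so a layer that matched none (count: 0) prints nan. An occasional nan there is expected, whereas nan in the avg loss usually means training has diverged:

```python
import math
import re

# Assumed line formats; check them against your own log:
#   Region 82 Avg IOU: 0.318477, Class: 0.489521, Obj: 0.428462, ...,  count: 9
#   1: 1234.567017, 1234.567017 avg, 0.000000 rate, 5.123 seconds, 64 images
IOU_RE = re.compile(r'Avg IOU:\s*([^,\s]+)')
AVG_RE = re.compile(r'\s*(\d+):\s*([^,\s]+),\s*([^,\s]+) avg')


def summarize_log(lines):
    """Return (fraction of Avg IOU values that are nan, [(iteration, avg_loss), ...])."""
    ious, avg_losses = [], []
    for line in lines:
        for value in IOU_RE.findall(line):
            ious.append(float(value))  # float() accepts 'nan' and '-nan'
        m = AVG_RE.match(line)
        if m:
            avg_losses.append((int(m.group(1)), float(m.group(3))))
    nan_fraction = sum(math.isnan(v) for v in ious) / len(ious) if ious else 0.0
    return nan_fraction, avg_losses
```

Usage: with open('train.log') as f: print(summarize_log(f)). The avg loss trend is usually a better signal than individual IOU lines.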

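VIII. Detection

Copy the weights file produced by training into the darknet/weights folder. Training writes files such as backup/yolov3-voc_final.weights, and the command below assumes the copy is named yolov3.weights:

$ ./darknet detect cfg/yolov3-voc.cfg weights/yolov3.weights data/dog.jpg

Alternatively, pass your own data file so that the class names come from voc.names:

$ ./darknet detector test cfg/voc.data cfg/yolov3-voc.cfg weights/yolov3.weights data/dog.jpg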
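If you are not sure which file in backup/ to copy, a helper like the following can pick one. This sketch assumes darknet's naming in backup/: <cfg name>_<iteration>.weights for intermediate saves and <cfg name>_final.weights at the end. latest_weights is hypothetical:

```python
import os
import re


def latest_weights(backup_dir, cfg_base='yolov3-voc'):
    """Return <cfg_base>_final.weights if present, else the highest-iteration
    <cfg_base>_<N>.weights in backup_dir, else None."""
    final = os.path.join(backup_dir, cfg_base + '_final.weights')
    if os.path.isfile(final):
        return final
    pattern = re.compile(re.escape(cfg_base) + r'_(\d+)\.weights$')
    best = None  # (iteration, file name)
    for name in os.listdir(backup_dir):
        m = pattern.match(name)
        if m and (best is None or int(m.group(1)) > best[0]):
            best = (int(m.group(1)), name)
    return os.path.join(backup_dir, best[1]) if best else None
```

For example, latest_weights('backup') returns the file to copy into weights/. The newest file is not always the best one, so compare a few checkpoints on your validation images.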

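Corrections are welcome if anything here is wrong. Let's keep learning and improving together. Thanks again to the authors of the following blog posts: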

1.https://blog.csdn.net/qq_41320736/article/details/104738101

2.https://blog.csdn.net/shangpapa3/article/details/77483324

3.https://blog.csdn.net/qq_21578849/article/details/84980298

4.https://blog.csdn.net/john_bh/article/details/80625220

5.https://blog.csdn.net/chengyq116/article/details/80552163

6.https://www.cnblogs.com/hls91/p/10894540.html

7.https://www.cnblogs.com/Milburn/p/12052076.html