YOLOv3 source code analysis: how the output features are stored

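Motivation:

While converting a YOLOv3 model trained with darknet into a TensorFlow Lite model, I found that the converted model's results did not match those of the original. To track this down I needed to check whether the feature maps of the three output nodes were identical, so I read through the darknet source and wrote these notes.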

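Source code:

The annotated printf calls were added to print the feature information; the printed values can be used to check whether the features are consistent.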

    int get_yolo_detections(layer l, int w, int h, int netw, int neth, float thresh, int *map, int relative, detection *dets, int letter)
    {
        printf("\n l.batch = %d, l.w = %d, l.h = %d, l.n = %d \n", l.batch, l.w, l.h, l.n); // print the current yolo layer's info
        int i,j,n;
        float *predictions = l.output; // the caller checks the layer type and only enters for yolo layers; l.output holds the feature information from the preceding layer (the output node layer)
        // This snippet below is not necessary
        // Need to comment it in order to batch processing >= 2 images
        //if (l.batch == 2) avg_flipped_yolo(l);
        int count = 0;
        for (i = 0; i < l.w*l.h; ++i){
            int row = i / l.w;
            int col = i % l.w;
            for(n = 0; n < l.n; ++n){
                int obj_index = entry_index(l, 0, n*l.w*l.h + i, 4);
                float objectness = predictions[obj_index];
                //if(objectness <= thresh) continue; // incorrect behavior for Nan values
                if (objectness > thresh) {
                    //printf("\n objectness = %f, thresh = %f, i = %d, n = %d \n", objectness, thresh, i, n);
                    int box_index = entry_index(l, 0, n*l.w*l.h + i, 0);
                    // print (tx, ty, tw, th, objness); the entries of one box are l.w*l.h floats apart, the same stride that is passed to get_yolo_box
                    printf("\n %f,\n %f,\n %f,\n %f,\n %f", predictions[box_index], predictions[box_index + 1*l.w*l.h], predictions[box_index + 2*l.w*l.h], predictions[box_index + 3*l.w*l.h], objectness);
                    dets[count].bbox = get_yolo_box(predictions, l.biases, l.mask[n], box_index, col, row, l.w, l.h, netw, neth, l.w*l.h);
                    dets[count].objectness = objectness;
                    dets[count].classes = l.classes;
                    for (j = 0; j < l.classes; ++j) {
                        int class_index = entry_index(l, 0, n*l.w*l.h + i, 4 + 1 + j);
                        float prob = objectness*predictions[class_index];
                        printf("\n %f", predictions[class_index]); // print the class confidence (classes_conf)
                        dets[count].prob[j] = (prob > thresh) ? prob : 0;
                    }
                    ++count;
                }
            }
        }
        correct_yolo_boxes(dets, count, w, h, netw, neth, relative, letter);
        return count;
    }

Analysis:
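Every index used in get_yolo_detections comes from entry_index, which is defined in darknet's yolo_layer.c. The sketch below reproduces that arithmetic on a small stand-in struct so it can be checked in isolation. The names yolo_shape and yolo_entry_index are hypothetical and are not darknet types or functions; the formula is written from my reading of the darknet source and should be verified against the version you build. What it suggests is that the buffer is planar: for each anchor, all l.w*l.h values of tx come first, then all ty, and so on, so two entries of the same cell are l.w*l.h floats apart.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the fields of darknet's `layer` used here. */
typedef struct {
    int w, h;      /* feature-map width and height */
    int n;         /* number of anchors handled by this yolo layer */
    int classes;   /* number of classes */
    int outputs;   /* floats per image: w * h * n * (4 + 1 + classes) */
} yolo_shape;

/* Mirrors the arithmetic of entry_index(). `location` packs the anchor
 * number and the cell as n * w * h + cell; `entry` selects the channel:
 * 0..3 = tx, ty, tw, th; 4 = objectness; 5 and up = class scores. */
static int yolo_entry_index(yolo_shape s, int batch, int location, int entry)
{
    int cells = s.w * s.h;
    assert(entry >= 0 && entry < 4 + 1 + s.classes);
    int n = location / cells;     /* anchor number */
    int loc = location % cells;   /* cell number: row * w + col */
    return batch * s.outputs
         + n * cells * (4 + 1 + s.classes)   /* skip the earlier anchors */
         + entry * cells                     /* skip the earlier channels */
         + loc;                              /* position inside the plane */
}
```

For a 13x13 layer with 3 anchors and 80 classes, the tx value of anchor 1 at row 5, column 7 sits at index 1*169*85 + 5*13 + 7 = 14437, and the matching ty value sits 169 floats later.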

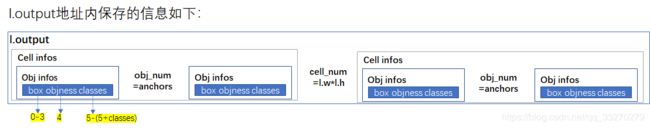

输出节点特征图信息的存储形式如下图:

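l.output: the feature information output by each output node.

Cell infos: the information contained in each cell of the feature map.

Obj infos: the feature information contained in each anchor.

box: tx, ty, tw, th.

objness: the confidence of the bounding box.

classes: the confidence of each class.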
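The nesting above (output, then cell, then anchor, then box, objness and classes) is a convenient logical view for comparing two models value by value. The sketch below shows one way to reorder a darknet buffer into exactly that nesting before diffing it against another framework's output. It rests on two assumptions that are not stated above: that the darknet buffer is laid out as [anchor][entry][row][col], as the entry_index arithmetic in yolo_layer.c suggests, and that the converted model emits its values cell by cell, as an NHWC tensor would. The function name repack_cell_major is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Repack one image of a yolo layer's output from the planar order assumed
 * for darknet, [anchor][entry][row][col], into the nested order
 * [row][col][anchor][entry]. Hypothetical helper, not part of darknet. */
static void repack_cell_major(const float *src, float *dst,
                              int w, int h, int anchors, int classes)
{
    int entries = 4 + 1 + classes;   /* tx, ty, tw, th, objness, classes */
    int cells = w * h;
    assert(src != NULL && dst != NULL && src != dst);
    for (int cell = 0; cell < cells; ++cell) {   /* cell = row * w + col */
        for (int n = 0; n < anchors; ++n) {
            for (int e = 0; e < entries; ++e) {
                /* planar source position of (anchor n, entry e, cell) */
                size_t from = (size_t)n * cells * entries + (size_t)e * cells + cell;
                /* nested destination position of the same value */
                size_t to = ((size_t)cell * anchors + n) * entries + e;
                dst[to] = src[from];
            }
        }
    }
}
```

After repacking, the 4 + 1 + classes values of one anchor in one cell are contiguous in dst, so they can be printed or compared in the order tx, ty, tw, th, objness, classes without any stride arithmetic.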