kaggle——Hotel booking demand酒店预订需求

1.导入数据

import numpy as np

import pandas as pd

import seaborn as sns

# 读取csv文件

hotel_data = pd.read_csv(r'D:\4_Project\1_pycharm_project\Hotel_booking_demand\hotel_bookings.csv')

# 查看前5行数据

hotel_data.head()

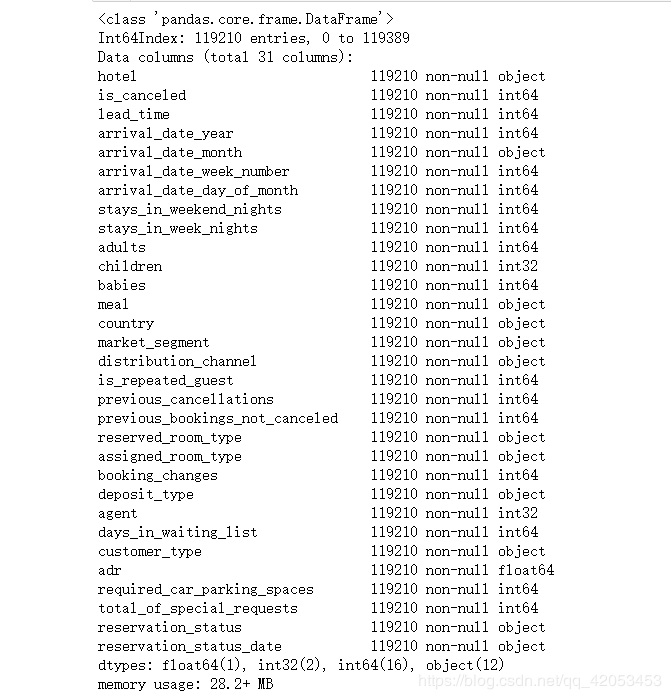

2.查看数据信息及缺失值

# 查看hotel_data二维表的信息

hotel_data.info()

# 找到缺失值

hotel_data.isnull().sum(axis=0)

根据hotel_data.info(),我们知道有32个特征,且存在特征为NULL,但并不直观,所以可以用.isnull().sum()来求出每一特征有多少个NULL值。

3.处理缺失值

根据2可知有4个特征存在缺失值,分别为childern,country,agent,company。

第一,children缺失4个,且为数值型变量,所以可以用中位数填充;

第二,country缺失488个相对11W的数据也比较少,由于是类别型变量,所以可以用众数填充。

第三,agent缺失16340个(16340/119390 =13.6% < 20%),也是类别型变量,考虑到这个特征本身的含义,用0填充,表示没有旅行社ID。

第四,company缺失112593个(112593/119390 >80%),所以可以直接删除。

import copy

data_new = copy.deepcopy(hotel_data)

# company字段缺失值112593/119390超过80%,所以可以直接删除

data_new.drop("company", axis=1, inplace=True)

# children字段中是数值型变量,且偏态分布,即用中位数替代

data_new["children"].fillna(data_new["children"].median(), inplace=True)

# country字段为类别型变量,用众数替代

data_new["country"].fillna(data_new["country"].mode()[0],inplace=True)

# 假设agent中缺失值代表未指定任何机构,即null=0

data_new["agent"].fillna(0, inplace=True)

data_new.isnull().sum()

4.异常值处理

# 统一类型

data_new["children"] = data_new["children"].astype(int)

data_new["agent"] = data_new["agent"].astype(int)

# 初始条件,餐饮字段中的Undefined / SC –无餐套餐为一类

data_new["meal"].replace("Undefined", "SC", inplace=True)

# 去掉异常值

zero_guests = list(data_new["adults"] + data_new["children"] + data_new["babies"] == 0)

data_new.drop(data_new.index[zero_guests],inplace=True)

data_new.info()

5.数据分析

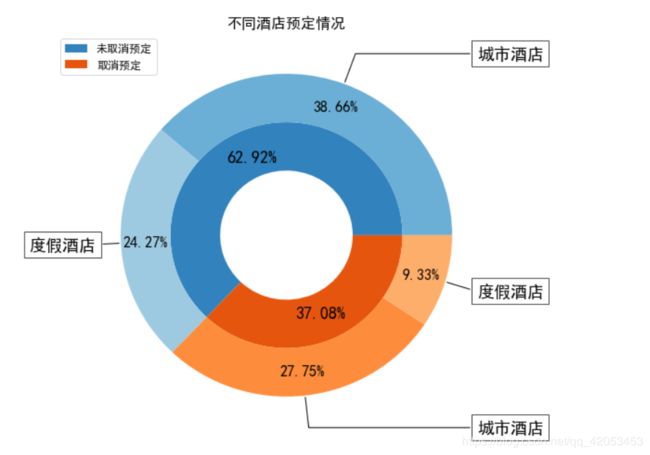

5.1 城市酒店与度假酒店总预定情况

因为是对酒店预定的需求分析,我们根据数据可知有两种酒店,分别是城市酒店和度假酒店,首先查看,在不考虑取消下二者的预定情况

import matplotlib.pyplot as plt

plt.rcParams["font.sans-serif"] = ["SimHei"]

plt.rcParams["font.serif"] = ["SimHei"]

# 从预定是否取消考虑

rh_iscancel_count = data_new[data_new["hotel"]=="Resort Hotel"].groupby(["is_canceled"])["is_canceled"].count()

ch_iscancel_count = data_new[data_new["hotel"]=="City Hotel"].groupby(["is_canceled"])["is_canceled"].count()

rh_cancel_data = pd.DataFrame({"hotel": "度假酒店",

"is_canceled": rh_iscancel_count.index,

"count": rh_iscancel_count.values})

ch_cancel_data = pd.DataFrame({"hotel": "城市酒店",

"is_canceled": ch_iscancel_count.index,

"count": ch_iscancel_count.values})

iscancel_data = pd.concat([rh_cancel_data, ch_cancel_data], ignore_index=True)

plt.figure(figsize=(8, 8))

label_list =["城市酒店","度假酒店"]

explode =[0,0.05]

# .value_counts()为计算频数

patches, l_text, p_text = plt.pie(data_new["hotel"].value_counts(), explode = explode,labels = label_list, autopct="%.2f%%",textprops={"fontsize":18})

plt.title("酒店总预定数分布", fontsize=16)

plt.legend(patches, (iscancel_data.loc[iscancel_data.is_canceled==1, "hotel"].value_counts().index)[::-1], loc="upper right",

fontsize=14)

plt.show()

可知:在不考虑退订情况下,城市酒店的预定量是度假酒店的1倍左右

5.2在考虑退订情况下,对二者预定量的影响

from itertools import chain

plt.figure(figsize=(8, 8))

cmap = plt.get_cmap("tab20c")

outer_colors = cmap(np.arange(2)*4)

inner_colors = cmap(np.array([1, 2, 5, 6]))

w , t, at = plt.pie(data_new["is_canceled"].value_counts(), autopct="%.2f%%",textprops={"fontsize":18}, radius=0.7,

wedgeprops=dict(width=0.3), pctdistance=0.75, colors=outer_colors)

plt.legend(w, ["未取消预定", "取消预定"], loc="upper right", bbox_to_anchor=(0, 0, 0.2, 1), fontsize=12)

val_array = np.array((iscancel_data.loc[(iscancel_data.hotel=="城市酒店")&(iscancel_data.is_canceled==0), "count"].values,

iscancel_data.loc[(iscancel_data.hotel=="度假酒店")&(iscancel_data.is_canceled==0), "count"].values,

iscancel_data.loc[(iscancel_data.hotel=="城市酒店")&(iscancel_data.is_canceled==1), "count"].values,

iscancel_data.loc[(iscancel_data.hotel=="度假酒店")&(iscancel_data.is_canceled==1), "count"].values))

w2, t2, at2 = plt.pie(list(chain.from_iterable(val_array)), autopct="%.2f%%", textprops={"fontsize":16}, radius=1,

wedgeprops=dict(width=0.3), pctdistance=0.85, colors=inner_colors)# 注意size不能为二维数组,否则会报错

plt.title("不同酒店预定情况", fontsize=16)

bbox_props = dict(boxstyle="square,pad=0.3", fc="w", ec="k", lw=0.72)

kw = dict(arrowprops=dict(arrowstyle="-", color="k"), bbox=bbox_props, zorder=3, va="center")

for i, p in enumerate(w2):

# print(i, p, sep="---")

text = ["城市酒店", "度假酒店", "城市酒店", "度假酒店"]

ang = (p.theta2 - p.theta1) / 2. + p.theta1

y = np.sin(np.deg2rad(ang))

x = np.cos(np.deg2rad(ang))

print(ang,x,y)

horizontalalignment = {-1: "right", 1: "left"}[int(np.sign(x))]

connectionstyle = "angle, angleA=0, angleB={}".format(ang)

kw["arrowprops"].update({"connectionstyle": connectionstyle})

'''

plt.annotate()

xy=(横坐标,纵坐标) 箭头尖端

xytext=(横坐标,纵坐标) 文字的坐标,指的是最左边的坐标

arrowprops= {facecolor= '箭头的颜色',shrink = '箭头缩小倍数' <1 收缩箭头}

horizontalalignment设置垂直对齐方式

bbox给标题增加外框

'''

plt.annotate(text[i], xy=(x, y), xytext=(1.15*np.sign(x), 1.2*y),

horizontalalignment=horizontalalignment, **kw, fontsize=18)

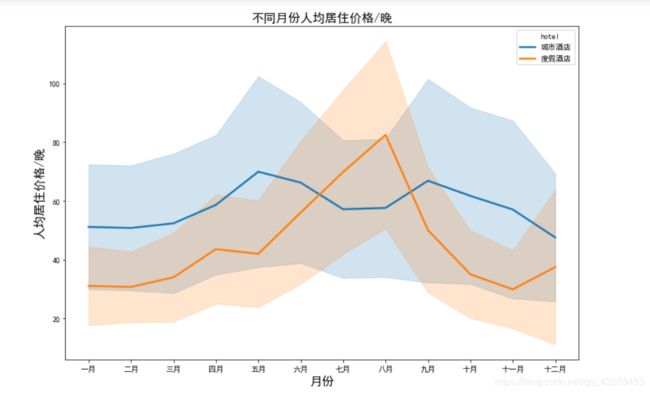

5.3酒店的人均价格

第二步 查看酒店的消费水平,可以查看酒店人均价格:

人均价格/晚= adr/(adults+children+babies)

# 从月份上看人均平均每晚价格

data_new["adr_pp"] = data_new["adr"] / (data_new["adults"] + data_new["children"]+ data_new["babies"])

full_data_guests = data_new.loc[data_new["is_canceled"] == 0] # only actual gusts

room_price_monthly = full_data_guests[["hotel", "arrival_date_month", "adr_pp"]].sort_values("arrival_date_month")

ordered_months = ["January", "February", "March", "April", "May", "June", "July", "August",

"September", "October", "November", "December"]

month_che = ["一月", "二月", "三月", "四月", "五月", "六月", "七月", "八月", "九月", "十月", "十一月", "十二月", ]

for en, che in zip(ordered_months, month_che):

room_price_monthly["arrival_date_month"].replace(en, che, inplace=True)

'''

categorical 在categories没有给出时,实际上是计算一个列表型数据中的类别数,即不重复项,它返回的是一个

CategoricalDtype 类型的对象,相当于在原来数据上附加上类别信息,具体的类别可以通过对应的序号表示可以使用

codes 和 categories 来查看pd.Categorical( list ).codes可以直接得到原始数据的对应的列表序号,通过这样可

以将类别信息转化成数值信息。

pandas.Categorical(values,categories = None,ordered = None,dtype = None,fastpath = False )[source]

values:类似列表。分类的值,如果给出了类别,不在类别中的值将替换为NaN。

categories(类别):索引式(唯一),可选。则按此类别分类。如果没有给出,则默认是values的去重。

ordered:布尔值(默认为False)。此分类是否被视为有序分类。如果没有给出,无序。

dtype:CategoricalDtype用于此分类的实例

'''

room_price_monthly["arrival_date_month"] = pd.Categorical(room_price_monthly["arrival_date_month"],

categories=month_che, ordered=True)

room_price_monthly["hotel"].replace("City Hotel", "城市酒店", inplace=True)

room_price_monthly["hotel"].replace("Resort Hotel", "度假酒店", inplace=True)

import seaborn as sns

plt.figure(figsize=(12, 8))

sns.lineplot(x="arrival_date_month", y="adr_pp", hue="hotel", data=room_price_monthly, hue_order=["城市酒店", "度假酒店"],

ci="sd", size="hotel", sizes=(2.5, 2.5))

plt.title("不同月份人均居住价格/晚", fontsize=16)

plt.xlabel("月份", fontsize=16)

plt.ylabel("人均居住价格/晚", fontsize=16)

可知:城市酒店在5月份和9月份人均价格有两个小高峰,在7-8月份价格下降;度假酒店在7-8月份人均价格处于高峰阶段

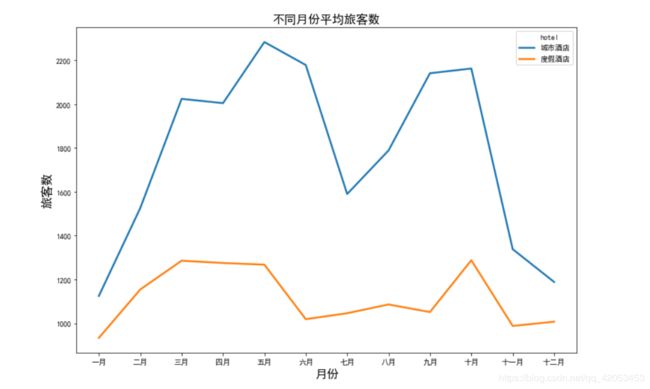

5.4平均每月到店人数

因为这个数据是2015年7月1日到2017年8月31日的,所以7,8月出现了3次,其余月份出现了2次,既然是计算平均每月到店人数,那么应该除去相应月份出现的次数。

# 查看月度人流量

rh_bookings_monthly = full_data_guests[full_data_guests.hotel=="Resort Hotel"].groupby("arrival_date_month")["hotel"].count()

ch_bookings_monthly = full_data_guests[full_data_guests.hotel=="City Hotel"].groupby("arrival_date_month")["hotel"].count()

rh_bookings_data = pd.DataFrame({"arrival_date_month": list(rh_bookings_monthly.index),

"hotel": "度假酒店",

"guests": list(rh_bookings_monthly.values)})

ch_bookings_data = pd.DataFrame({"arrival_date_month": list(ch_bookings_monthly.index),

"hotel": "城市酒店",

"guests": list(ch_bookings_monthly.values)})

full_booking_monthly_data = pd.concat([rh_bookings_data, ch_bookings_data], ignore_index=True)

ordered_months = ["January", "February", "March", "April", "May", "June", "July", "August",

"September", "October", "November", "December"]

month_che = ["一月", "二月", "三月", "四月", "五月", "六月", "七月", "八月", "九月", "十月", "十一月", "十二月"]

for en, che in zip(ordered_months, month_che):

full_booking_monthly_data["arrival_date_month"].replace(en, che, inplace=True)

full_booking_monthly_data["arrival_date_month"] = pd.Categorical(full_booking_monthly_data["arrival_date_month"],

categories=month_che, ordered=True)

full_booking_monthly_data.loc[(full_booking_monthly_data["arrival_date_month"]=="七月")|(full_booking_monthly_data["arrival_date_month"]=="八月"), "guests"] /= 3

full_booking_monthly_data.loc[~((full_booking_monthly_data["arrival_date_month"]=="七月")|(full_booking_monthly_data["arrival_date_month"]=="八月")), "guests"] /= 2

plt.figure(figsize=(12, 8))

sns.lineplot(x="arrival_date_month",

y="guests",

hue="hotel", hue_order=["城市酒店", "度假酒店"],

data=full_booking_monthly_data, size="hotel", sizes=(2.5, 2.5))

plt.title("不同月份平均旅客数", fontsize=16)

plt.xlabel("月份", fontsize=16)

plt.ylabel("旅客数", fontsize=16)

结合5.3可知:

1、城市酒店4-5月(春季)和9-10月(秋季)为预定旺季,房价也相应提高

2、度假酒店3-5月份(春季)和10月份(秋季)为预定旺季,房价稍有上浮

3、对于两家酒店来说6-8月份均为淡季,但发现度假酒店在7-8月淡季反而房价很高,远高于其他月份

4、11月-来年1月份(冬季)也是预定淡季

6.使用ML算法来预测顾客是否会取消预定

第一步:计算每个特征与"is_canceled"的相关性,由于有些是类别变量,所以不能参与计算

cancel_corr = data_new.corr()["is_canceled"]

cancel_corr.abs().sort_values(ascending=False)

可知:除了"is_canceled"外,前5个(从预定到到店时间,客户提出的特殊要求的数量,要求停车场,对预订进行的更改的数量,客户在当前预订之前取消的先前预订的数量)与"is_canceled"相关性较大

第二步 特征模型训练

建立base model,使用决策树,随机森林,逻辑回归、XGBC分类器,查看哪个训练结果更好

# for ML:

from sklearn.model_selection import train_test_split, KFold, cross_validate, cross_val_score

from sklearn.pipeline import Pipeline

from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import LabelEncoder, OneHotEncoder

from sklearn.impute import SimpleImputer

from sklearn.ensemble import RandomForestClassifier # 随机森林

from xgboost import XGBClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.tree import DecisionTreeClassifier

from sklearn.metrics import accuracy_score

import eli5 # Feature importance evaluation

#手动选择要包括的列

#为了使模型更通用并防止泄漏,排除了(预订更改、等待日、到达年份、指定房间类型、预订状态、国家/地区,列表)

#包括国家将提高准确性,但它也可能使模型不那么通用

num_features = ["lead_time","total_of_special_requests","required_car_parking_spaces",

"previous_cancellations","is_repeated_guest","adults","previous_bookings_not_canceled","agent",

"adr","babies","stays_in_weekend_nights","arrival_date_week_number","arrival_date_day_of_month",

"children","stays_in_week_nights"]

cat_features = ["hotel","arrival_date_month","meal","market_segment",

"distribution_channel","reserved_room_type","deposit_type","customer_type"]

#分离特征和预测值

features = num_features + cat_features

X = data_new.drop(["is_canceled"], axis=1)[features]

y = data_new["is_canceled"]

#预处理数值特征:

#对于大多数num cols,除了日期,0是最符合逻辑的填充值

#这里没有日期遗漏。

num_transformer = SimpleImputer(strategy="constant")

# 分类特征的预处理:

cat_transformer = Pipeline(steps=[("imputer", SimpleImputer(strategy="constant", fill_value="Unknown")),

("onehot", OneHotEncoder(handle_unknown='ignore'))])

# 数值和分类特征的束预处理:

preprocessor = ColumnTransformer(transformers=[("num", num_transformer, num_features),

("cat", cat_transformer, cat_features)])

# 定义要测试的模型:

base_models = [("DT_model", DecisionTreeClassifier(random_state=42)),

("RF_model", RandomForestClassifier(random_state=42,n_jobs=-1)),

("LR_model", LogisticRegression(random_state=42,n_jobs=-1,solver='liblinear')),

("XGB_model", XGBClassifier(random_state=42, n_jobs=-1))]

#将数据分成“kfold”部分进行交叉验证,

#使用shuffle确保数据的随机分布:

kfolds = 4 # 4 = 75% train, 25% validation

split = KFold(n_splits=kfolds, shuffle=True, random_state=42)

#对每个模型进行预处理、拟合、预测和评分:

for name, model in base_models:

#将数据和模型的预处理打包到管道中:

model_steps = Pipeline(steps=[('preprocessor', preprocessor),

('model', model)])

#获取每个模型的交叉验证分数:

cv_results = cross_val_score(model_steps,

X, y,

cv=split,

scoring="accuracy",

n_jobs=-1)

# output:

min_score = round(min(cv_results), 4)

max_score = round(max(cv_results), 4)

mean_score = round(np.mean(cv_results), 4)

std_dev = round(np.std(cv_results), 4)

print(f"{name} cross validation accuarcy score: {mean_score} +/- {std_dev} (std) min: {min_score}, max: {max_score}")

可知: RF算法的准确度更高,可以继续对其进行一些超参数的优化

# Enhanced RF model with the best parameters I found:

rf_model_enh = RandomForestClassifier(n_estimators=160,

max_features=0.4,

min_samples_split=2,

n_jobs=1,

random_state=42)

split = KFold(n_splits=10, shuffle=True, random_state=42)

model_pipe = Pipeline(steps=[('preprocessor', preprocessor),

('model', rf_model_enh)])

cv_results = cross_val_score(model_pipe,

X, y,

cv=split,

scoring="accuracy",

n_jobs=-1)

# output:

min_score = round(min(cv_results), 4)

max_score = round(max(cv_results), 4)

mean_score = round(np.mean(cv_results), 4)

std_dev = round(np.std(cv_results), 4)

print(f"Enhanced RF model cross validation accuarcy score: {mean_score} +/- {std_dev} (std) min: {min_score}, max: {max_score}")

![]()

可知: 模型提高了2%左右

第三步:查看影响模型权重较大的特征

# 查看影响模型权重较大的特征

# 拟合模型,以便可以访问值:

model_pipe.fit(X,y)

#需要所有(编码)功能的名称。

#从独热编码中获取列的名称:

onehot_columns = list(model_pipe.named_steps['preprocessor'].

named_transformers_['cat'].

named_steps['onehot'].

get_feature_names(input_features=cat_features))

#为完整列表添加num_功能。

#顺序必须与X的定义相同,其中num_特征是第一个:

feat_imp_list = num_features + onehot_columns

#显示10个最重要的功能,提供功能名称:

feat_imp_df = eli5.formatters.as_dataframe.explain_weights_df(

model_pipe.named_steps['model'],

feature_names=feat_imp_list)

feat_imp_df.head(10)

可知: 权重较大的三个特征是:从预定到到店时间,平均每日房价,押金类型不退款

研究这三个影响较大的特征对预定结果的影响

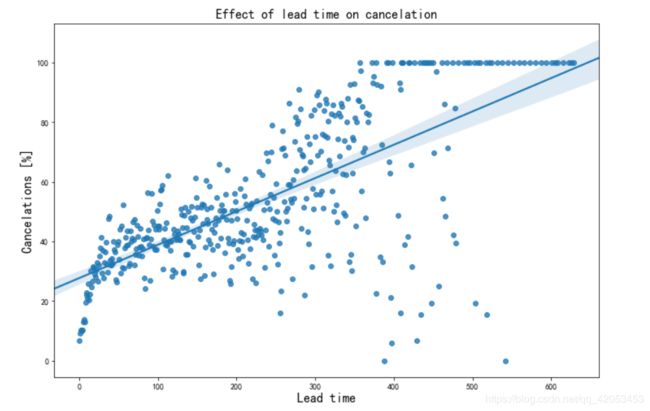

1、从预定到到店时间

# 查看从预定到离店时间特征的影响

import seaborn as sns

# group data for lead_time:

lead_cancel_data = data_new.groupby("lead_time")["is_canceled"].describe()

# use only lead_times wih more than 10 bookings for graph:

lead_cancel_data_10 = lead_cancel_data.loc[lead_cancel_data["count"] >= 10]

#show figure:

plt.figure(figsize=(12, 8))

x,y = pd.Series(lead_cancel_data_10.index, name="x_var"), pd.Series(lead_cancel_data_10["mean"].values * 100, name="y_var")

sns.regplot(x=x, y=lead_cancel_data_10["mean"].values * 100)

plt.title("Effect of lead time on cancelation", fontsize=16)

plt.xlabel("Lead time", fontsize=16)

plt.ylabel("Cancelations [%]", fontsize=16)

plt.show()

可知:到店日的前几日取消预定的人很少,随着距离预定日越长时间的取消预定的人数越多,提前一年预定的取消率也更大,这也符合人们的常识。

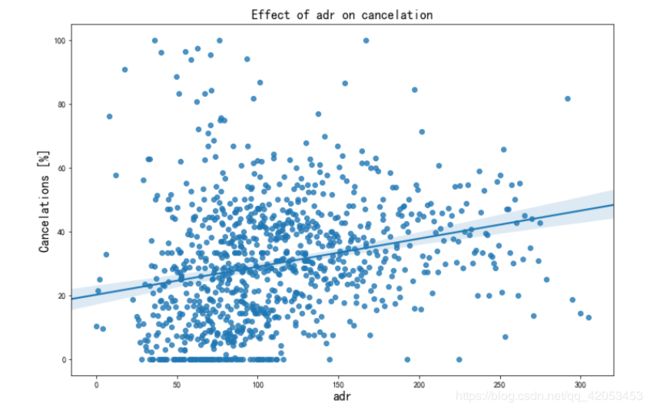

2.平均每日房价

# 查看平均每日房价特征的影响

adr_cancel_data = data_new.groupby("adr")["is_canceled"].describe()

# use only lead_times wih more than 10 bookings for graph:

adr_cancel_data_10 = adr_cancel_data.loc[adr_cancel_data["count"] > 10]

#show figure:

plt.figure(figsize=(12, 8))

x,y = pd.Series(adr_cancel_data_10.index, name="x_var"), pd.Series(adr_cancel_data_10["mean"].values * 100, name="y_var")

sns.regplot(x=x, y=adr_cancel_data_10["mean"].values * 100)

plt.title("Effect of lead time on cancelation", fontsize=16)

plt.xlabel("Lead time", fontsize=16)

plt.ylabel("Cancelations [%]", fontsize=16)

plt.show()

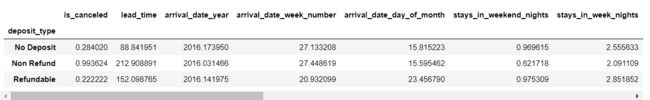

3、押金类型

# 查看无押金,有押金且不可退款,有押金且可退款对“取消预定”的影响

# group data for deposit_type:

deposit_cancel_data = data_new.groupby("deposit_type")["is_canceled"].describe()

#show figure:

plt.figure(figsize=(12, 8))

sns.barplot(x=deposit_cancel_data.index, y=deposit_cancel_data["mean"] * 100)

plt.title("Effect of deposit_type on cancelation", fontsize=16)

plt.xlabel("Deposit type", fontsize=16)

plt.ylabel("Cancelations [%]", fontsize=16)

plt.show()

可知:有押金且不退款的预定方式,取消率高达99%,这不符合人们的正常认知,也不符合逻辑,所以考虑数据来源是否正确,是否出现标签错误

4、针对这个疑问根据存款类型分组来查看所有数据平均值

deposit_mean_data = data_new.groupby("deposit_type").mean()

deposit_mean_data

可知有押金且不退款的特点是:

a、从预定至到店时间是无押金的2倍以上

b、重复的客人要少10倍以上

c、以前的取消次数要多10倍

d、当前预订之前未取消的先前预订的数量要少15倍

e、所需的停车位几乎为零

f、特殊要求非常罕见

总之:是那些提前很久预定而且不是以前住过的客人,也每没有什么特殊需求却预订、付款并多次取消。

5、排除这个特征再使用RF模型看看结果

# 去掉押金的影响

cat_features_non_dep = ["hotel","arrival_date_month","meal","market_segment",

"distribution_channel","reserved_room_type","customer_type"]

features_non_dep = num_features + cat_features_non_dep

X_non_dep = data_new.drop(["is_canceled"], axis=1)[features_non_dep]

y = data_new["is_canceled"]

# Bundle preprocessing for numerical and categorical features:

preprocessor_non_dep = ColumnTransformer(transformers=[("num", num_transformer, num_features),

("cat", cat_transformer, cat_features_non_dep)])

# Define model

rf_model_non_dep = RandomForestClassifier(n_estimators=160,

max_features=0.4,

min_samples_split=2,

n_jobs=-1,

random_state=42)

kfolds=4

split = KFold(n_splits=kfolds, shuffle=True, random_state=42)

model_pipe = Pipeline(steps=[('preprocessor', preprocessor_non_dep),

('model', rf_model_non_dep)])

cv_results = cross_val_score(model_pipe,

X_non_dep, y,

cv=split,

scoring="accuracy",

n_jobs=-1)

# output:

min_score = round(min(cv_results), 4)

max_score = round(max(cv_results), 4)

mean_score = round(np.mean(cv_results), 4)

std_dev = round(np.std(cv_results), 4)

print(f"RF model without deposit_type feature cross validation accuarcy score: {mean_score} +/- {std_dev} (std) min: {min_score}, max: {max_score}")

![]()

可知:去掉这个特征对最终的预测结果影响不大