Android with OpenCV: a first project (NDK, OpenCV, Android, official face-detection demo)

(Updated 2017.5.16; changes are marked in green)

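Prerequisites: the JDK, Eclipse with the Android development tools, and the CDT plugin (Eclipse C/C++) are already installed.

In a command prompt, enter: java -version. If it prints a version, the JDK is set up correctly.

In a command prompt, enter: android -version. If it prints a version, the Eclipse Android environment is set up correctly.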

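Step 1: Set up the NDK in Eclipse

1) Download the NDK

http://www.cnblogs.com/yaotong/archive/2011/01/25/1943615.html

The version I downloaded is android-ndk-r10e.

2) Configure the NDK

First set the environment variable; my NDK is installed on drive E.

The configuration mainly comes down to running ndk-build.cmd.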

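Google has streamlined the NDK workflow. On Windows, NDK versions before r7 could only be used with Cygwin installed. Starting with NDK r7, the Windows NDK ships an ndk-build.cmd script, so you can build with that script directly and Cygwin is no longer needed. You only need to add a Builder to the Eclipse Android project. That builder runs ndk-build.cmd (older setups ran Cygwin and passed ndk-build as the argument), so Eclipse compiles the native code automatically. (Quoted from the blog http://www.cnblogs.com/yejiurui/p/3476565.html)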

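3) Configure Builders (there are two ways to do this; I describe the first one first, although I find the second more convenient)

Method 1

Create a new Android project (mine is Demo_NDK).

Right-click the project and choose Properties at the bottom of the menu.

In the window that opens, select Builders.

Click the New button on the right; a dialog appears.

Click Program; a configuration dialog opens.

Then switch to the Refresh tab

and click Specify Resources on the right.

Click Finish.

Then go to the Build Options tab.

Again click Specify Resources on the right and select the project you just created.

Finally click OK to finish.

Method 2

Open the Window menu, choose Preferences, and configure it as follows.

Then right-click the project, choose Android Tools, and select Add Native Support to finish.

With the steps above, the NDK environment is set up.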

第二步:配置进行opencv在android运行的环境

1):到官网http://opencv.org/downloads.html下载sdk

我下载的是OpenCV-2.4.10-android-sdk

下载后在eclipse 导入android project

选中OpenCV Library -2.4.10 ----

当然你全部导入也行

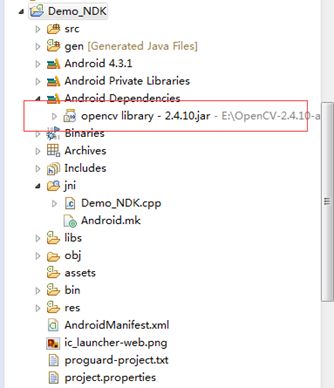

导入完成后如下

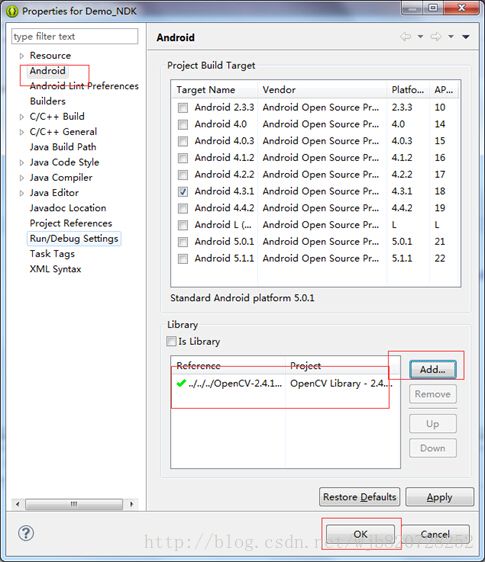

再右键点击刚才新建的项目选中下方Properties

点击OK 后,IDE 会自动 builder project 如果出现以下代表环境搭建好了

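Step 3:

With the two steps above done, I use the official demo opencv-sample-face-detection to show how OpenCV is tied to Android through the NDK.

Create the package, then create DetectionBasedTracker.java and FdActivity.java in it.

The structure is as follows.

DetectionBasedTracker.java declares the native methods (methods that are implemented in C++ and run natively).

The code is as follows.

Open a command prompt and change to the project's bin\classes directory (where the compiled .class files are).

Enter the command: javah org.opencv.samples.facedetect.DetectionBasedTracker

org.opencv.samples.facedetect is the package path.

DetectionBasedTracker is the class name.

This generates org_opencv_samples_facedetect_DetectionBasedTracker.h in the bin\classes directory. This header is the first file we need.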
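The naming that javah applies here can be sketched in plain Java. This is only an illustration under stated assumptions: JniNames and its methods are hypothetical helpers (not part of the JDK or the NDK), and overloaded native methods, which get an extra signature suffix, are not handled.

```java
// Hypothetical helper that reproduces the JNI naming rules for simple cases.
final class JniNames {
    // Escape one name component: '_' -> "_1", ';' -> "_2", '[' -> "_3",
    // ASCII letters and digits stay as they are, anything else -> "_0xxxx".
    static String escape(String component) {
        StringBuilder sb = new StringBuilder();
        for (char c : component.toCharArray()) {
            if (c == '_') {
                sb.append("_1");
            } else if (c == ';') {
                sb.append("_2");
            } else if (c == '[') {
                sb.append("_3");
            } else if (c < 128 && Character.isLetterOrDigit(c)) {
                sb.append(c);
            } else {
                sb.append(String.format("_0%04x", (int) c));
            }
        }
        return sb.toString();
    }

    // Turn a fully qualified class name into its mangled form ('.' becomes '_').
    static String mangledClass(String fullyQualifiedName) {
        String[] parts = fullyQualifiedName.split("\\.");
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < parts.length; i++) {
            if (i > 0) {
                sb.append('_');
            }
            sb.append(escape(parts[i]));
        }
        return sb.toString();
    }

    // Name of the header that javah writes for the class.
    static String headerFile(String fullyQualifiedName) {
        return mangledClass(fullyQualifiedName) + ".h";
    }

    // Name of the C/C++ function that implements a non-overloaded native method.
    static String nativeSymbol(String fullyQualifiedName, String method) {
        return "Java_" + mangledClass(fullyQualifiedName) + "_" + escape(method);
    }

    public static void main(String[] args) {
        String cls = "org.opencv.samples.facedetect.DetectionBasedTracker";
        System.out.println(headerFile(cls));
        System.out.println(nativeSymbol(cls, "nativeCreateObject"));
    }
}
```

The function names produced this way are the ones the .cpp file has to define, which is why the header is generated first and the implementation is written against it.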

Copy it into the project's jni directory.

Also create the Android.mk file (the build configuration).

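# Names the module described in this Android.mk
LOCAL_MODULE := Demo_NDK

This name identifies the shared library that the Java code loads:

// Load native library after(!) OpenCV initialization
System.loadLibrary("Demo_NDK");

LOCAL_C_INCLUDES := E:/OpenCV-2.4.10-android-sdk/sdk/native/jni/include

This sets the include path for the OpenCV headers. (This one cost me a lot of time: before I added this line, the OpenCV #include lines in the .cpp file below always failed with a "not found" error.)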

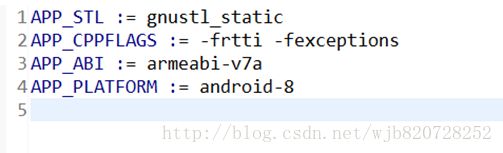
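How the module name relates to the library file can be shown with a small sketch. NativeLibName is a hypothetical helper, written under the assumption that ndk-build follows its documented rule of producing lib<module>.so and does not add a second lib prefix when the module name already starts with lib.

```java
// Hypothetical helper: maps between LOCAL_MODULE, the .so file, and the loadLibrary argument.
final class NativeLibName {
    // File name ndk-build is expected to produce for a given LOCAL_MODULE value.
    static String soFileFor(String localModule) {
        if (localModule.startsWith("lib")) {
            return localModule + ".so"; // an existing "lib" prefix is kept as-is
        }
        return "lib" + localModule + ".so";
    }

    // Argument to pass to System.loadLibrary for a given .so file name.
    static String loadNameFor(String soFile) {
        if (!soFile.startsWith("lib") || !soFile.endsWith(".so")) {
            throw new IllegalArgumentException("not a lib*.so name: " + soFile);
        }
        return soFile.substring("lib".length(), soFile.length() - ".so".length());
    }

    public static void main(String[] args) {
        String so = soFileFor("Demo_NDK");
        System.out.println(so + " is loaded with System.loadLibrary(\"" + loadNameFor(so) + "\")");
    }
}
```

If the string passed to System.loadLibrary does not match LOCAL_MODULE, the app fails at runtime with an UnsatisfiedLinkError, so the two names have to be kept in sync.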

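Next create the Application.mk file (also build configuration).

Then create org_opencv_samples_facedetect_DetectionBasedTracker.cpp and write the OpenCV code in it, implementing each of the six native methods declared in DetectionBasedTracker.java. The code is as follows.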

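// The header names are missing from the listing as published. The six below are an
// assumption based on what the code uses (generated JNI header, OpenCV 2.4 layout).
#include "org_opencv_samples_facedetect_DetectionBasedTracker.h"
#include <opencv2/core/core.hpp>
#include <opencv2/contrib/detection_based_tracker.hpp>
#include <string>
#include <vector>
#include <android/log.h>

#define LOG_TAG "FaceDetection/DetectionBasedTracker"
#define LOGD(...) ((void)__android_log_print(ANDROID_LOG_DEBUG, LOG_TAG, __VA_ARGS__))

using namespace std;
using namespace cv;

// Copy the detected rectangles into a Mat so that Java can read them as a MatOfRect.
inline void vector_Rect_to_Mat(vector<Rect>& v_rect, Mat& mat)
{
    mat = Mat(v_rect, true);
}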

JNIEXPORT jlong JNICALL Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeCreateObject
(JNIEnv* jenv, jclass, jstring jFileName, jint faceSize)
{
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeCreateObject enter");
    const char* jnamestr = jenv->GetStringUTFChars(jFileName, NULL);
    string stdFileName(jnamestr);
    // stdFileName holds its own copy, so the JNI buffer can be released right away.
    jenv->ReleaseStringUTFChars(jFileName, jnamestr);
    jlong result = 0;

    try
    {
        DetectionBasedTracker::Parameters DetectorParams;
        if (faceSize > 0)
            DetectorParams.minObjectSize = faceSize;
        // The tracker's address is handed back to Java as an opaque jlong handle.
        result = (jlong)new DetectionBasedTracker(stdFileName, DetectorParams);
    }
    catch(cv::Exception& e)
    {
        LOGD("nativeCreateObject caught cv::Exception: %s", e.what());
        jclass je = jenv->FindClass("org/opencv/core/CvException");
        if(!je)
            je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, e.what());
    }
    catch (...)
    {
        LOGD("nativeCreateObject caught unknown exception");
        jclass je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, "Unknown exception in JNI code of DetectionBasedTracker.nativeCreateObject()");
        return 0;
    }

    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeCreateObject exit");
    return result;
}

JNIEXPORT void JNICALL Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeDestroyObject
(JNIEnv* jenv, jclass, jlong thiz)
{
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeDestroyObject enter");
    try
    {
        // A zero handle means the object was never created or was already released.
        if(thiz != 0)
        {
            ((DetectionBasedTracker*)thiz)->stop();
            delete (DetectionBasedTracker*)thiz;
        }
    }
    catch(cv::Exception& e)
    {
        LOGD("nativeDestroyObject caught cv::Exception: %s", e.what());
        jclass je = jenv->FindClass("org/opencv/core/CvException");
        if(!je)
            je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, e.what());
    }
    catch (...)
    {
        LOGD("nativeDestroyObject caught unknown exception");
        jclass je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, "Unknown exception in JNI code of DetectionBasedTracker.nativeDestroyObject()");
    }
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeDestroyObject exit");
}

JNIEXPORT void JNICALL Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeStart
(JNIEnv* jenv, jclass, jlong thiz)
{
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeStart enter");
    try
    {
        ((DetectionBasedTracker*)thiz)->run();
    }
    catch(cv::Exception& e)
    {
        LOGD("nativeStart caught cv::Exception: %s", e.what());
        jclass je = jenv->FindClass("org/opencv/core/CvException");
        if(!je)
            je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, e.what());
    }
    catch (...)
    {
        LOGD("nativeStart caught unknown exception");
        jclass je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, "Unknown exception in JNI code of DetectionBasedTracker.nativeStart()");
    }
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeStart exit");
}

JNIEXPORT void JNICALL Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeStop
(JNIEnv* jenv, jclass, jlong thiz)
{
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeStop enter");
    try
    {
        ((DetectionBasedTracker*)thiz)->stop();
    }
    catch(cv::Exception& e)
    {
        LOGD("nativeStop caught cv::Exception: %s", e.what());
        jclass je = jenv->FindClass("org/opencv/core/CvException");
        if(!je)
            je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, e.what());
    }
    catch (...)
    {
        LOGD("nativeStop caught unknown exception");
        jclass je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, "Unknown exception in JNI code of DetectionBasedTracker.nativeStop()");
    }
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeStop exit");
}

JNIEXPORT void JNICALL Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeSetFaceSize
(JNIEnv* jenv, jclass, jlong thiz, jint faceSize)
{
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeSetFaceSize enter");
    try
    {
        if (faceSize > 0)
        {
            // Read the current parameters, change only the minimum size, and write them back.
            DetectionBasedTracker::Parameters DetectorParams =
                ((DetectionBasedTracker*)thiz)->getParameters();
            DetectorParams.minObjectSize = faceSize;
            ((DetectionBasedTracker*)thiz)->setParameters(DetectorParams);
        }
    }
    catch(cv::Exception& e)
    {
        LOGD("nativeSetFaceSize caught cv::Exception: %s", e.what());
        jclass je = jenv->FindClass("org/opencv/core/CvException");
        if(!je)
            je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, e.what());
    }
    catch (...)
    {
        LOGD("nativeSetFaceSize caught unknown exception");
        jclass je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, "Unknown exception in JNI code of DetectionBasedTracker.nativeSetFaceSize()");
    }
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeSetFaceSize exit");
}

JNIEXPORT void JNICALL Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeDetect
(JNIEnv* jenv, jclass, jlong thiz, jlong imageGray, jlong faces)
{
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeDetect enter");
    try
    {
        vector<Rect> RectFaces;
        // imageGray and faces are addresses of cv::Mat objects owned by the Java side.
        ((DetectionBasedTracker*)thiz)->process(*((Mat*)imageGray));
        ((DetectionBasedTracker*)thiz)->getObjects(RectFaces);
        vector_Rect_to_Mat(RectFaces, *((Mat*)faces));
    }
    catch(cv::Exception& e)
    {
        LOGD("nativeDetect caught cv::Exception: %s", e.what());
        jclass je = jenv->FindClass("org/opencv/core/CvException");
        if(!je)
            je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, e.what());
    }
    catch (...)
    {
        LOGD("nativeDetect caught unknown exception");
        jclass je = jenv->FindClass("java/lang/Exception");
        jenv->ThrowNew(je, "Unknown exception in JNI code of DetectionBasedTracker.nativeDetect()");
    }
    LOGD("Java_org_opencv_samples_facedetect_DetectionBasedTracker_nativeDetect exit");
}

Then complete the DetectionBasedTracker.java file:

package org.opencv.samples.facedetect;

import org.opencv.core.Mat;
import org.opencv.core.MatOfRect;

public class DetectionBasedTracker
{
    public DetectionBasedTracker(String cascadeName, int minFaceSize) {
        mNativeObj = nativeCreateObject(cascadeName, minFaceSize);
    }

    public void start() {
        nativeStart(mNativeObj);
    }

    public void stop() {
        nativeStop(mNativeObj);
    }

    public void setMinFaceSize(int size) {
        nativeSetFaceSize(mNativeObj, size);
    }

    public void detect(Mat imageGray, MatOfRect faces) {
        nativeDetect(mNativeObj, imageGray.getNativeObjAddr(), faces.getNativeObjAddr());
    }

    public void release() {
        nativeDestroyObject(mNativeObj);
        mNativeObj = 0;
    }

    // Address of the C++ DetectionBasedTracker object; 0 means "no object".
    private long mNativeObj = 0;

    private static native long nativeCreateObject(String cascadeName, int minFaceSize);
    private static native void nativeDestroyObject(long thiz);
    private static native void nativeStart(long thiz);
    private static native void nativeStop(long thiz);
    private static native void nativeSetFaceSize(long thiz, int size);
    private static native void nativeDetect(long thiz, long inputImage, long faces);
}
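The wrapper above keeps the address of the C++ object in mNativeObj and sets it to 0 in release(), which pairs with the thiz != 0 check in nativeDestroyObject. The sketch below mimics that lifecycle in pure Java so it can be run without the NDK. FakeNative and Tracker are hypothetical stand-ins for illustration, not the real classes.

```java
import java.util.HashSet;
import java.util.Set;

// Pure-Java imitation of the handle lifecycle; nothing here touches JNI.
final class HandleLifecycleSketch {
    // Stand-in for the native side: hands out fake "addresses" and tracks live ones.
    static final class FakeNative {
        private final Set<Long> live = new HashSet<>();
        private long next = 1;

        long create() {
            long handle = next++;
            live.add(handle);
            return handle;
        }

        void destroy(long handle) {
            if (handle != 0) { // mirrors the thiz != 0 check in nativeDestroyObject
                if (!live.remove(handle)) {
                    throw new IllegalStateException("double free of handle " + handle);
                }
            }
        }

        int liveCount() {
            return live.size();
        }
    }

    // Stand-in for the Java wrapper: owns one handle and zeroes it on release.
    static final class Tracker {
        private final FakeNative nat;
        private long handle;

        Tracker(FakeNative nat) {
            this.nat = nat;
            this.handle = nat.create();
        }

        void release() {
            nat.destroy(handle);
            handle = 0; // without this line a second release() would free the object twice
        }

        boolean isReleased() {
            return handle == 0;
        }
    }

    public static void main(String[] args) {
        FakeNative nat = new FakeNative();
        Tracker tracker = new Tracker(nat);
        tracker.release();
        tracker.release(); // harmless: the handle is already 0
        System.out.println("live objects: " + nat.liveCount());
    }
}
```

Note that only release() is protected this way. In the real wrapper, calling start(), stop() or detect() after release() would pass 0 to native code that dereferences it, so those calls should not be made on a released tracker.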

Next is FdActivity.java, the main screen. The code is as follows:

package org.opencv.samples.facedetect;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;

import org.opencv.android.BaseLoaderCallback;
import org.opencv.android.CameraBridgeViewBase;
import org.opencv.android.CameraBridgeViewBase.CvCameraViewFrame;
import org.opencv.android.CameraBridgeViewBase.CvCameraViewListener2;
import org.opencv.android.LoaderCallbackInterface;
import org.opencv.android.OpenCVLoader;
import org.opencv.core.Core;
import org.opencv.core.Mat;
import org.opencv.core.MatOfRect;
import org.opencv.core.Rect;
import org.opencv.core.Scalar;
import org.opencv.core.Size;
import org.opencv.objdetect.CascadeClassifier;

import android.app.Activity;
import android.content.Context;
import android.content.pm.ActivityInfo;
import android.os.Bundle;
import android.util.Log;
import android.view.Menu;
import android.view.MenuItem;
import android.view.WindowManager;

import com.example.demo_ndk.R;

public class FdActivity extends Activity implements CvCameraViewListener2 {

    private static final String TAG = "OCVSample::Activity";
    private static final Scalar FACE_RECT_COLOR = new Scalar(0, 255, 0, 255);
    public static final int JAVA_DETECTOR = 0;
    public static final int NATIVE_DETECTOR = 1;

    private MenuItem mItemFace50;
    private MenuItem mItemFace40;
    private MenuItem mItemFace30;
    private MenuItem mItemFace20;
    private MenuItem mItemType;

    private Mat mRgba;
    private Mat mGray;
    private File mCascadeFile;
    private CascadeClassifier mJavaDetector;
    private DetectionBasedTracker mNativeDetector;

    private int mDetectorType = JAVA_DETECTOR;
    private String[] mDetectorName;

    private float mRelativeFaceSize = 0.2f;
    private int mAbsoluteFaceSize = 0;

    // Each frame delivered by the camera is processed in onCameraFrame,
    // the callback of mOpenCvCameraView.
    private CameraBridgeViewBase mOpenCvCameraView;

    private BaseLoaderCallback mLoaderCallback = new BaseLoaderCallback(this) {
        @Override
        public void onManagerConnected(int status) {
            switch (status) {
                case LoaderCallbackInterface.SUCCESS:
                {
                    Log.i(TAG, "OpenCV loaded successfully");

                    // Load native library after(!) OpenCV initialization
                    System.loadLibrary("Demo_NDK");

                    try {
                        // load cascade file from application resources
                        InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface);
                        // This loads the face-detection cascade. lbpcascade_frontalface.xml is an XML
                        // classifier file that ships with OpenCV under opencv/source/data/. It has been
                        // copied into the raw resources so that Android can reach it. To build your own
                        // cascade you would train on a large data set with opencv_haartraining, which
                        // produces the XML classifier file.
                        File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
                        mCascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");
                        FileOutputStream os = new FileOutputStream(mCascadeFile);

                        byte[] buffer = new byte[4096];
                        int bytesRead;
                        while ((bytesRead = is.read(buffer)) != -1) {
                            os.write(buffer, 0, bytesRead);
                        }
                        is.close();
                        os.close();

                        mJavaDetector = new CascadeClassifier(mCascadeFile.getAbsolutePath());
                        if (mJavaDetector.empty()) {
                            Log.e(TAG, "Failed to load cascade classifier");
                            mJavaDetector = null;
                        } else
                            Log.i(TAG, "Loaded cascade classifier from " + mCascadeFile.getAbsolutePath());

                        mNativeDetector = new DetectionBasedTracker(mCascadeFile.getAbsolutePath(), 0);

                        cascadeDir.delete();

                    } catch (IOException e) {
                        e.printStackTrace();
                        Log.e(TAG, "Failed to load cascade. Exception thrown: " + e);
                    }

                    mOpenCvCameraView.enableView();
                } break;
                default:
                {
                    super.onManagerConnected(status);
                } break;
            }
        }
    };

    public FdActivity() {
        mDetectorName = new String[2];
        mDetectorName[JAVA_DETECTOR] = "Java";
        mDetectorName[NATIVE_DETECTOR] = "Native (tracking)";

        Log.i(TAG, "Instantiated new " + this.getClass());
    }

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle savedInstanceState) {
        Log.i(TAG, "called onCreate");
        super.onCreate(savedInstanceState);
        getWindow().addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);

        // Set the capture orientation
        /*
         System default:                    ActivityInfo.SCREEN_ORIENTATION_UNSPECIFIED
         Lock to portrait:                  ActivityInfo.SCREEN_ORIENTATION_PORTRAIT
         Lock to landscape:                 ActivityInfo.SCREEN_ORIENTATION_LANDSCAPE
         Follow the user's preference:      ActivityInfo.SCREEN_ORIENTATION_USER
         Same as the activity beneath this: ActivityInfo.SCREEN_ORIENTATION_BEHIND
         Ignore the sensor:                 ActivityInfo.SCREEN_ORIENTATION_NOSENSOR
         Follow the sensor:                 ActivityInfo.SCREEN_ORIENTATION_SENSOR
        */
        this.setRequestedOrientation(ActivityInfo.SCREEN_ORIENTATION_LANDSCAPE);

        setContentView(R.layout.face_detect_surface_view);

        mOpenCvCameraView = (CameraBridgeViewBase) findViewById(R.id.fd_activity_surface_view);
        mOpenCvCameraView.setCvCameraViewListener(this);
    }

    @Override
    public void onPause()
    {
        super.onPause();
        if (mOpenCvCameraView != null)
            mOpenCvCameraView.disableView();
    }

    @Override
    public void onResume()
    {
        super.onResume();
        OpenCVLoader.initAsync(OpenCVLoader.OPENCV_VERSION_2_4_3, this, mLoaderCallback);
    }

    public void onDestroy() {
        super.onDestroy();
        mOpenCvCameraView.disableView();
    }

    public void onCameraViewStarted(int width, int height) {
        mGray = new Mat();
        mRgba = new Mat();
    }

    public void onCameraViewStopped() {
        mGray.release();
        mRgba.release();
    }

    public Mat onCameraFrame(CvCameraViewFrame inputFrame) {

        // The original color frame captured by the camera
        mRgba = inputFrame.rgba();
        // The grayscale frame, used for face detection below
        mGray = inputFrame.gray();

        if (mAbsoluteFaceSize == 0) {
            int height = mGray.rows();
            if (Math.round(height * mRelativeFaceSize) > 0) {
                mAbsoluteFaceSize = Math.round(height * mRelativeFaceSize);
            }
            mNativeDetector.setMinFaceSize(mAbsoluteFaceSize);
        }

        MatOfRect faces = new MatOfRect();

        if (mDetectorType == JAVA_DETECTOR) {
            if (mJavaDetector != null)
                // Call OpenCV's detectMultiScale(). The arguments mean:
                /*
                 mGray is the input image to search. faces receives the detections (position,
                 width, height). 1.1 is the scale factor by which the image is shrunk at each
                 step. The first 2 is minNeighbors: a candidate must be confirmed by at least
                 2 neighboring detections to count as a real target (nearby pixels and different
                 window sizes all respond to the same object). The second 2 is really the constant
                 CV_HAAR_SCALE_IMAGE, which means the image is scaled rather than the classifier.
                 The last two Size arguments are the minimum and maximum object size.
                */
                mJavaDetector.detectMultiScale(mGray, faces, 1.1, 2, 2, // TODO: objdetect.CV_HAAR_SCALE_IMAGE
                        new Size(mAbsoluteFaceSize, mAbsoluteFaceSize), new Size());
        }
        else if (mDetectorType == NATIVE_DETECTOR) {
            if (mNativeDetector != null)
                mNativeDetector.detect(mGray, faces);
        }
        else {
            Log.e(TAG, "Detection method is not selected!");
        }

        Rect[] facesArray = faces.toArray();

        for (int i = 0; i < facesArray.length; i++)
            // Draw a green rectangle on the original frame mRgba for every detected face
            Core.rectangle(mRgba, facesArray[i].tl(), facesArray[i].br(), FACE_RECT_COLOR, 3);

        // Return the processed frame; it is shown directly in the JavaCameraView.
        return mRgba;
    }

    @Override
    public boolean onCreateOptionsMenu(Menu menu) {
        Log.i(TAG, "called onCreateOptionsMenu");
        mItemFace50 = menu.add("Face size 50%");
        mItemFace40 = menu.add("Face size 40%");
        mItemFace30 = menu.add("Face size 30%");
        mItemFace20 = menu.add("Face size 20%");
        mItemType = menu.add(mDetectorName[mDetectorType]);
        return true;
    }

    @Override
    public boolean onOptionsItemSelected(MenuItem item) {
        Log.i(TAG, "called onOptionsItemSelected; selected item: " + item);
        if (item == mItemFace50)
            setMinFaceSize(0.5f);
        else if (item == mItemFace40)
            setMinFaceSize(0.4f);
        else if (item == mItemFace30)
            setMinFaceSize(0.3f);
        else if (item == mItemFace20)
            setMinFaceSize(0.2f);
        else if (item == mItemType) {
            int tmpDetectorType = (mDetectorType + 1) % mDetectorName.length;
            item.setTitle(mDetectorName[tmpDetectorType]);
            setDetectorType(tmpDetectorType);
        }
        return true;
    }

    private void setMinFaceSize(float faceSize) {
        mRelativeFaceSize = faceSize;
        mAbsoluteFaceSize = 0;
    }

    private void setDetectorType(int type) {
        if (mDetectorType != type) {
            mDetectorType = type;

            if (type == NATIVE_DETECTOR) {
                Log.i(TAG, "Detection Based Tracker enabled");
                mNativeDetector.start();
            } else {
                Log.i(TAG, "Cascade detector enabled");
                mNativeDetector.stop();
            }
        }
    }
}

Finally, configure the AndroidManifest.xml file:

<supports-screens android:resizeable="true"
    android:smallScreens="true"
    android:normalScreens="true"
    android:largeScreens="true"
    android:anyDensity="true" />

<uses-permission android:name="android.permission.CAMERA"/>

<uses-feature android:name="android.hardware.camera" android:required="false"/>
<uses-feature android:name="android.hardware.camera.autofocus" android:required="false"/>
<uses-feature android:name="android.hardware.camera.front" android:required="false"/>
<uses-feature android:name="android.hardware.camera.front.autofocus" android:required="false"/>
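Two small calculations in FdActivity are easy to check in isolation: turning the relative face size into a pixel size for the current frame, and cycling between the Java and native detectors from the menu. The sketch below restates them outside Android. FaceSizeSketch and its method names are hypothetical and only mirror the arithmetic in the activity.

```java
// Plain-Java restatement of two calculations from FdActivity; no Android or OpenCV needed.
final class FaceSizeSketch {
    // Minimum face size in pixels for a frame of the given height.
    // If the rounded value is not positive, the current value is kept,
    // as in onCameraFrame.
    static int absoluteFaceSize(int frameHeight, float relativeFaceSize, int current) {
        int candidate = Math.round(frameHeight * relativeFaceSize);
        return candidate > 0 ? candidate : current;
    }

    // Index of the next detector, wrapping around after the last one,
    // as in onOptionsItemSelected.
    static int nextDetector(int currentType, int detectorCount) {
        return (currentType + 1) % detectorCount;
    }

    public static void main(String[] args) {
        System.out.println("min face size at height 480, 20%: " + absoluteFaceSize(480, 0.2f, 0));
        System.out.println("detector after 0 of 2: " + nextDetector(0, 2));
    }
}
```

Because setMinFaceSize resets mAbsoluteFaceSize to 0, the pixel size is recomputed on the next frame after the user picks a different percentage from the menu.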
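That completes the project. The result when run is as follows.

The source code has been uploaded to: http://download.csdn.net/detail/wjb820728252/9725892

These are the pitfalls I ran into over this period. I hope this helps people who are just getting started. Thanks!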