Playing with QML: OpenGL programming, YUV display, Canvas3D, three.js, VideoOutput, and QQuickItem rendering

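I have some free time at the moment, so I am learning a bit of QML programming. Today's goals:

1. 3D rendering in QML

2. Displaying YUV420p frames in QML

Straight to the code and the screenshot!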

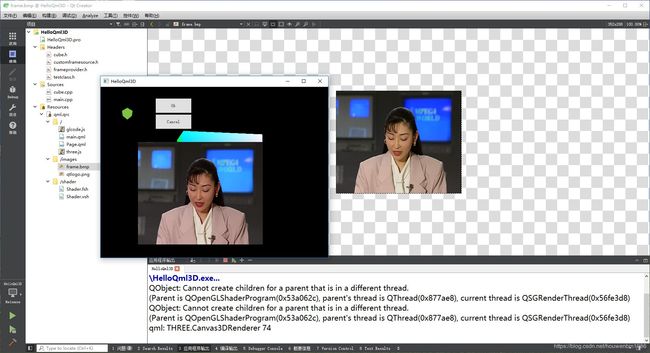

The result is shown in the screenshot above.

Project configuration (.pro file):

QT += quick multimedia

CONFIG += c++11

# The following define makes your compiler emit warnings if you use

# any feature of Qt which as been marked deprecated (the exact warnings

# depend on your compiler). Please consult the documentation of the

# deprecated API in order to know how to port your code away from it.

DEFINES += QT_DEPRECATED_WARNINGS

# You can also make your code fail to compile if you use deprecated APIs.

# In order to do so, uncomment the following line.

# You can also select to disable deprecated APIs only up to a certain version of Qt.

#DEFINES += QT_DISABLE_DEPRECATED_BEFORE=0x060000 # disables all the APIs deprecated before Qt 6.0.0

SOURCES += \

main.cpp \

cube.cpp

RESOURCES += qml.qrc

LIBS += -lOpenGL32

# Additional import path used to resolve QML modules in Qt Creator's code model

QML_IMPORT_PATH =

# Additional import path used to resolve QML modules just for Qt Quick Designer

QML_DESIGNER_IMPORT_PATH =

# Default rules for deployment.

qnx: target.path = /tmp/$${TARGET}/bin

else: unix:!android: target.path = /opt/$${TARGET}/bin

!isEmpty(target.path): INSTALLS += target

HEADERS += \

testclass.h \

cube.h \

frameprovider.h \

customframesource.h

Main UI (main.qml):

import QtQuick 2.4

import QtCanvas3D 1.1

import QtQuick.Window 2.2

import QtQuick.Controls 2.2

import QtQuick.Layouts 1.3

import QtMultimedia 5.9

//

import Union.Lotto.TestClass 1.0

import OpenGLCube 1.0

//

import "glcode.js" as GLCode

Window {

title: qsTr("HelloQml3D")

width: 640

height: 480

visible: true

TestClass {

id: testID

}

ColumnLayout

{

//

RowLayout

{

//

Canvas3D

{

id: canvas3d

//anchors.fill: parent

width: 150

height: 150

focus: true

onInitializeGL:

{

GLCode.initializeGL(canvas3d);

}

onPaintGL:

{

GLCode.paintGL(canvas3d);

}

onResizeGL:

{

GLCode.resizeGL(canvas3d);

}

}//Canvas3D

ColumnLayout

{

//

Button

{

text: "Ok"

onClicked:

{

console.time("wholeFunction");

console.log("Ok clicked")

testclass.startSlots();

console.log("---- c++ message (", testclass.getName(), Qt.formatDateTime(new Date(),"yyyy-MM-dd hh:mm:ss.zzz"));

console.timeEnd("wholeFunction");

}

}

Button

{

text: "Cancel"

onClicked:

{

console.log("Cancel clicked")

testID.startSlots()

}

}

}//ColumnLayout

}//RowLayout

RowLayout

{

//

Cube

{

id: cube

//anchors.fill: parent

width: 100

height: 100

//

ParallelAnimation

{

running: true

NumberAnimation

{

target: cube

property: "rotateAngle"

from: 0

to: 360

duration: 5000

easing.type: Easing.InOutQuad

}

Vector3dAnimation

{

target: cube

property: "axis"

from: Qt.vector3d(0,1,0)

to: Qt.vector3d(1,0,0)

duration: 5000

}

loops: Animation.Infinite

}//end ParallelAnimation

}//end Cube

VideoOutput

{

id: display

//anchors.top: parent.top

//anchors.bottom: parent.bottom

width: 100

height: 100

source: frameProvider

}//VideoOutput

}//RowLayout

}//ColumnLayout

}

Main entry point (main.cpp):

#include <QGuiApplication>

#include <QQmlApplicationEngine>

#include <QQmlContext>

//

#include "testclass.h"

#include "cube.h"

#include "frameprovider.h"

#include "customframesource.h"

int main(int argc, char *argv[])

{

QCoreApplication::setAttribute(Qt::AA_EnableHighDpiScaling);

QGuiApplication app(argc, argv);

// Approach 1: register the C++ types so QML can instantiate them

qmlRegisterType<TestClass>("Union.Lotto.TestClass",

1,0,

"TestClass");

//

qmlRegisterType<Cube>("OpenGLCube",

1,0,

"Cube");

//

TestClass test_instance;

//

FrameProvider* frameProvider = new FrameProvider();

CustomFramesource source(352, 288, QVideoFrame::Format_YUV420P);

// Set the correct format for the video surface (Make sure your selected format is supported by the surface)

frameProvider->setFormat(source.width(), source.height(), source.format());

// Connect your frame source with the provider

QObject::connect(&source, SIGNAL(newFrameAvailable(const QVideoFrame &)), frameProvider, SLOT(onNewVideoContentReceived(const QVideoFrame &)));

//

QQmlApplicationEngine engine;

engine.rootContext()->setContextProperty("testclass",&test_instance); // Approach 2: expose an existing instance as a context property

engine.rootContext()->setContextProperty("frameProvider", frameProvider);

engine.load(QUrl(QStringLiteral("qrc:/main.qml")));

if (engine.rootObjects().isEmpty())

{

return -1;

}

//

source.startDataMaker();

return app.exec();

}
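The source above is created as 352x288 in `Format_YUV420P`, and its buffer is later sized as `width * height * 3 / 2`. As a rough illustration of where that number comes from, here is a minimal Qt-free sketch of the plane layout of a tightly packed YUV420P (I420) buffer. `I420Layout` and `i420Layout` are hypothetical names that do not appear in the project, and the sketch assumes even dimensions and no row padding.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper, not part of the project: sizes and byte offsets of the
// three planes in a tightly packed YUV420P (I420) buffer.
struct I420Layout {
    std::size_t ySize;       // one luma byte per pixel
    std::size_t chromaSize;  // one U (or V) byte per 2x2 block of pixels
    std::size_t uOffset;     // the U plane starts right after the Y plane
    std::size_t vOffset;     // the V plane starts right after the U plane
    std::size_t total;       // ySize + 2 * chromaSize == width * height * 3 / 2
};

inline I420Layout i420Layout(int width, int height)
{
    // Assumption: even dimensions, so every 2x2 block is complete.
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);

    I420Layout l;
    l.ySize = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    l.chromaSize = l.ySize / 4;
    l.uOffset = l.ySize;
    l.vOffset = l.ySize + l.chromaSize;
    l.total = l.ySize + 2 * l.chromaSize;
    return l;
}
```

For 352x288 this gives a 101376-byte Y plane followed by two 25344-byte chroma planes, 152064 bytes in total.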

FrameProvider.h

#ifndef FRAMEPROVIDER_H

#define FRAMEPROVIDER_H

#include <QObject>

#include <QAbstractVideoSurface>

#include <QVideoSurfaceFormat>

#include <QVideoFrame>

class FrameProvider : public QObject

{

Q_OBJECT

//

Q_PROPERTY(QAbstractVideoSurface *videoSurface READ videoSurface WRITE setVideoSurface)

public:

QAbstractVideoSurface* videoSurface() const

{

return m_surface;

}

private:

QAbstractVideoSurface *m_surface = NULL;

QVideoSurfaceFormat m_format;

public:

void setVideoSurface(QAbstractVideoSurface *surface)

{

if (m_surface && m_surface != surface && m_surface->isActive())

{

m_surface->stop();

}

m_surface = surface;

if (m_surface && m_format.isValid())

{

m_format = m_surface->nearestFormat(m_format);

m_surface->start(m_format);

}

}

void setFormat(int width, int height, int format)

{

QSize size(width, height);

QVideoSurfaceFormat vsformat(size, (QVideoFrame::PixelFormat)format);

m_format = vsformat;

if (m_surface)

{

if (m_surface->isActive())

{

m_surface->stop();

}

m_format = m_surface->nearestFormat(m_format);

m_surface->start(m_format);

}

}

public slots:

void onNewVideoContentReceived(const QVideoFrame &frame)

{

if (m_surface)

m_surface->present(frame);

}

};

#endif // FRAMEPROVIDER_H

CustomFrameSource.h:

#ifndef CUSTOMFRAMESOURCE_H

#define CUSTOMFRAMESOURCE_H

#include <QObject>

#include <QTimer>

#include <QImage>

#include <QFile>

#include <QVideoFrame>

//

class CustomFramesource : public QObject

{

Q_OBJECT

public:

explicit CustomFramesource(int width, int height, int format)

{

m_width = width;

m_height = height;

m_format = format; // QVideoFrame::Format_YUV420P

//

m_size = m_width*m_height* 3 / 2;

m_yuv = new unsigned char[m_size];

memset(m_yuv, 0, m_size);

//

QImage image(":/images/frame.bmp");

image = image.scaled(m_width, m_height);

image = image.convertToFormat(QImage::Format_RGB888); // Important: the converters below expect 3 bytes per pixel

image = image.mirrored();

//image.save("out.bmp");

//

RGB2YUV(image.bits(), image.width(), image.height(), m_yuv);

//rgb2YCbCr420(image.bits(), image.width(), image.height(), m_yuv);

//

//QFile srcYuv("d:/akiyo_cif.yuv");

//if (srcYuv.open(QFile::ReadOnly))

//{

// srcYuv.read((char*)m_yuv, m_size);

// srcYuv.close();

//}

//

QFile target("./out.yuv");

if (target.open(QFile::WriteOnly))

{

target.write((char*)m_yuv, m_size);

target.close();

}

}

~CustomFramesource()

{

stopDataMaker();

//

if (m_yuv)

{

delete[] m_yuv;

m_yuv = nullptr;

}

}

public:

int width()

{

return m_width;

}

int height()

{

return m_height;

}

int format()

{

return m_format;

}

//

void startDataMaker()

{

connect(&m_srcTimer, &QTimer::timeout, [=]

{

this->onSlotFrameGenerator();

});

m_srcTimer.start(1000);

}

void stopDataMaker()

{

m_srcTimer.stop();

}

signals:

void newFrameAvailable(const QVideoFrame& frame);

public slots:

void onSlotFrameGenerator()

{

makeFrame(m_size, m_yuv);

}

public:

void makeFrame(int size, unsigned char* yuv) // adapted from another source

{

QVideoFrame frame(size, QSize(m_width, m_height), m_width, (QVideoFrame::PixelFormat)m_format);

if (frame.map(QAbstractVideoBuffer::WriteOnly))

{

memcpy(frame.bits(), yuv, size);

frame.setStartTime(0);

frame.unmap();

//

emit newFrameAvailable(frame);

}

}

private:

QImage* greyScale(QImage* origin)

{

QImage* newImage = new QImage(origin->width(), origin->height(), QImage::Format_ARGB32);

//

for (int y = 0; y < origin->height(); y++)

{

QRgb* line = (QRgb *)origin->scanLine(y);

for(int x = 0; x < origin->width(); x++)

{

// Greyscale: use the arithmetic mean of the R, G and B values

int average = (qRed(line[x]) + qGreen(line[x]) + qBlue(line[x]))/3;

newImage->setPixel(x,y, qRgb(average, average, average));

}

}

return newImage;

}

// Gives poor results

bool rgb2YCbCr420(uchar* bgrBuf, int width, int height, uchar* yuv)

{

// Size of the YUV array: the Y (luma) plane takes len bytes, U and V take len/4 bytes each.

int ylen = width * height;

int ulen = width * height / 4;

int vlen = ulen;

//

int len = ylen + ulen + vlen;

//

memset(yuv, 0, len);

//

uchar y, u, v;

uchar *pBgrBuf = nullptr;

//

for (int j = 0; j< height; j++)

{

//

pBgrBuf = bgrBuf + width * (height - 1 - j) * 3 ;

//

for (int i = 0; i < width; i++)

{

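// Mask off the alpha value of ARGB

//int rgb = pixels[i * width + j] & 0x00FFFFFF;

// The pixel colour order is BGR; extract the channels with shifts.

//int b = rgb & 0xFF;//r

//int g = (rgb >> 8) & 0xFF;//g

//int r = (rgb >> 16) & 0xFF;//b

//

uchar b = *(pBgrBuf++); // byte 0 of the pixel (R when the input is Format_RGB888)

uchar g = *(pBgrBuf++); // byte 1 of the pixel (G)

uchar r = *(pBgrBuf++); // byte 2 of the pixel (B when the input is Format_RGB888)

// Apply the conversion formula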

y = ((66 * r + 129 * g + 25 * b + 128) >> 8) + 16;

u = ((-38 * r - 74 * g + 112 * b + 128) >> 8) + 128;

v = ((112 * r - 94 * g - 18 * b + 128) >> 8) + 128;

// Clamp

y = y < 16 ? 16 : (y > 255 ? 255 : y);

u = u < 0 ? 0 : (u > 255 ? 255 : u);

v = v < 0 ? 0 : (v > 255 ? 255 : v);

// Store (note: U and V are written interleaved here, not as two separate planes)

yuv[j * width + i] = y;

yuv[ylen + (j >> 1) * width + (i & ~1) + 0] = u;

yuv[ylen + (j >> 1) * width + (i & ~1) + 1] = v;

}

}

//

return true;

}

// Gives good results

bool RGB2YUV(uchar* bgrBuf, int nWidth, int nHeight, uchar* yuvBuf)

{

int i, j;

unsigned char *bufY, *bufU, *bufV, *bufRGB;

//

uint ylen = nWidth * nHeight;

uint ulen = (nWidth * nHeight)/4;

uint vlen = ulen;

uint len = ylen + ulen + vlen;

//

memset(yuvBuf, 0, len);

//

bufY = yuvBuf; // Y plane

bufV = yuvBuf + ylen; // first chroma plane (receives the v values)

bufU = yuvBuf + ylen + ulen; // second chroma plane (receives the u values)

//

unsigned char y, u, v, r, g, b;

//

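// Assumption: rows and channel bytes are read exactly as in rgb2YCbCr420 above.

for (j = 0; j < nHeight; j++)

{

bufRGB = bgrBuf + nWidth * (nHeight - 1 - j) * 3;

for (i = 0; i < nWidth; i++)

{

b = *(bufRGB++);

g = *(bufRGB++);

r = *(bufRGB++);

y = (unsigned char)( ( 66 * r + 129 * g + 25 * b + 128) >> 8) + 16 ;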

u = (unsigned char)( ( -38 * r - 74 * g + 112 * b + 128) >> 8) + 128 ;

v = (unsigned char)( ( 112 * r - 94 * g - 18 * b + 128) >> 8) + 128 ;

//

*(bufY++) = qMax((uchar)0, qMin(y, (uchar)255));

//

if (j%2==0 && i%2 ==0)

{

if (u>255)

{

u=255;

}

if (u<0)

{

u = 0;

}

*(bufU++) =u;

// Store the U sample

}

else

{

// Store the V sample

if (i%2==0)

{

if (v>255)

{

v = 255;

}

if (v<0)

{

v = 0;

}

*(bufV++) =v;

}

}

}

}

//

return true;

}

private:

int m_width = 640;

int m_height = 480;

int m_format = QVideoFrame::Format_YUV420P;

//

unsigned char* m_yuv = nullptr;

int m_size = 0;

private:

QTimer m_srcTimer;

};

#endif // CUSTOMFRAMESOURCE_H
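For comparison with the two converters in this header, here is a minimal Qt-free sketch of an RGB888 to YUV420P (I420) conversion using the same integer coefficients. It is not the code used in the project: `rgb888ToI420` and `clampToByte` are hypothetical names, the input is assumed to be tightly packed R, G, B bytes with the top row first and even dimensions, and both chroma values are taken from the top-left pixel of each 2x2 block. Values are clamped as `int` before narrowing, because range checks on an `unsigned char` can never fire, and the +128 chroma offset is folded in before the shift so that no negative value is shifted.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Clamp while the value is still an int, then narrow to a byte.
static inline std::uint8_t clampToByte(int v)
{
    return static_cast<std::uint8_t>(v < 0 ? 0 : (v > 255 ? 255 : v));
}

// Hypothetical stand-alone converter (a sketch, not the project's RGB2YUV).
// Input : width * height * 3 bytes, order R,G,B, top row first, no row padding.
// Output: Y plane, then U plane, then V plane (planar I420).
std::vector<std::uint8_t> rgb888ToI420(const std::uint8_t* rgb, int width, int height)
{
    assert(rgb != nullptr);
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);

    const std::size_t w = static_cast<std::size_t>(width);
    const std::size_t ySize = w * static_cast<std::size_t>(height);
    const std::size_t cSize = ySize / 4;

    std::vector<std::uint8_t> out(ySize + 2 * cSize);
    std::uint8_t* yPlane = out.data();
    std::uint8_t* uPlane = yPlane + ySize;
    std::uint8_t* vPlane = uPlane + cSize;

    for (int j = 0; j < height; ++j) {
        const std::uint8_t* p = rgb + static_cast<std::size_t>(j) * w * 3;
        for (int i = 0; i < width; ++i, p += 3) {
            const int r = p[0], g = p[1], b = p[2];

            // Luma for every pixel.
            yPlane[static_cast<std::size_t>(j) * w + i] =
                clampToByte(((66 * r + 129 * g + 25 * b + 128) >> 8) + 16);

            // One U and one V sample per 2x2 block.
            if (j % 2 == 0 && i % 2 == 0) {
                const std::size_t c = static_cast<std::size_t>(j / 2) * (w / 2) + i / 2;
                // 32768 == 128 << 8: the +128 offset applied before the shift.
                uPlane[c] = clampToByte((-38 * r - 74 * g + 112 * b + 128 + 32768) >> 8);
                vPlane[c] = clampToByte((112 * r - 94 * g - 18 * b + 128 + 32768) >> 8);
            }
        }
    }
    return out;
}
```

The returned vector has the same size as `m_yuv` (`width * height * 3 / 2` bytes), so under these assumptions it could be copied into a frame the same way `makeFrame` copies `m_yuv`.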

The core of the YUV display is the two header files above.