Running ORB_SLAM2 in real time with a Hikvision camera on Windows 10 and VS2013

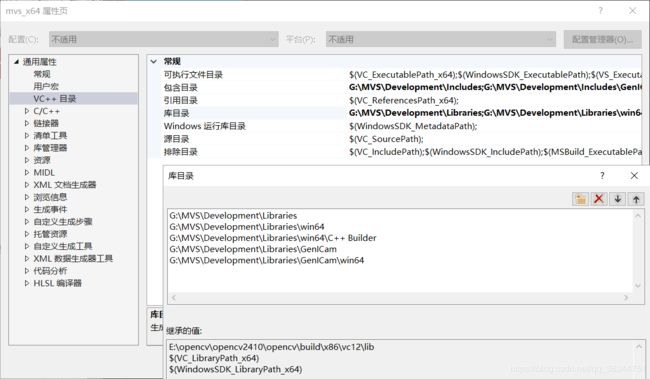

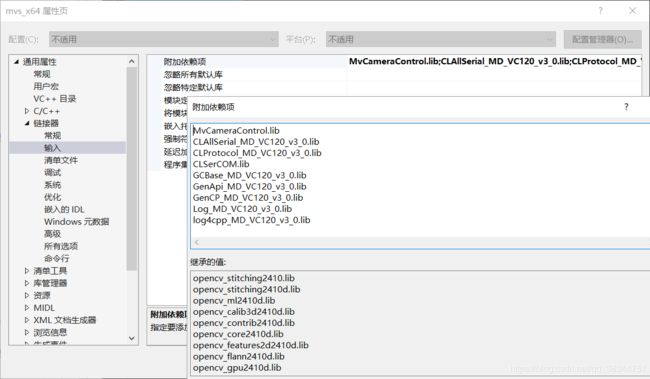

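Several setup steps are needed before writing any code. Download the Hikvision SDK from the official website, then add the header and library files under its Development folder to the project's property sheet so that the code can call the SDK functions directly.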

Include Directories:

Library Directories:

Additional Dependencies:

On the code side, only mono_kitti.cc needs to change. Replace its contents with the following:

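#pragma comment(lib, "MvCameraControl.lib")

// Headers needed by the code below.
#include <cstdio>    // printf
#include <cstdlib>   // malloc, free
#include <iostream>  // std::cout, std::cerr

#include <opencv2/core/core.hpp>  // cv::Mat

#include "MvCameraControl.h"  // Hikvision camera SDK
#include "System.h"           // ORB_SLAM2::System
#include "string.h"           // memset

unsigned int g_nPayloadSize = 0;

using namespace std;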

enum CONVERT_TYPE
{
    OpenCV_Mat = 0, // Most of the time, we use 'Mat' format to store image data after OpenCV V2.1
};

// Print the discovered device information to the user
bool PrintDeviceInfo(MV_CC_DEVICE_INFO* pstMVDevInfo)
{
    if (NULL == pstMVDevInfo)
    {
        printf("The Pointer of pstMVDevInfo is NULL!\n");
        return false;
    }

    if (pstMVDevInfo->nTLayerType == MV_USB_DEVICE)
    {
        printf("UserDefinedName: %s\n", pstMVDevInfo->SpecialInfo.stUsb3VInfo.chUserDefinedName);
        printf("Serial Number: %s\n", pstMVDevInfo->SpecialInfo.stUsb3VInfo.chSerialNumber);
        printf("Device Number: %d\n\n", pstMVDevInfo->SpecialInfo.stUsb3VInfo.nDeviceNumber);
    }
    else
    {
        // Only USB3 devices are described here
        printf("Not supported.\n");
    }

    return true;
}

// Swap the red and blue channels in place (packed RGB8 to BGR8, the order OpenCV expects)
int RGB2BGR(unsigned char* pRgbData, unsigned int nWidth, unsigned int nHeight)
{
    if (NULL == pRgbData)
    {
        return MV_E_PARAMETER;
    }

    for (unsigned int j = 0; j < nHeight; j++)
    {
        for (unsigned int i = 0; i < nWidth; i++)
        {
            unsigned char red = pRgbData[j * (nWidth * 3) + i * 3];
            pRgbData[j * (nWidth * 3) + i * 3] = pRgbData[j * (nWidth * 3) + i * 3 + 2];
            pRgbData[j * (nWidth * 3) + i * 3 + 2] = red;
        }
    }

    return MV_OK;
}
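The channel swap in RGB2BGR can be tried on its own with a tiny buffer, without the camera or the SDK. The following is a minimal sketch under the same layout assumption RGB2BGR makes (tightly packed 3-byte pixels, no row padding); `SwapRedBlue` is a hypothetical name used only for this illustration.

```cpp
#include <cstddef>
#include <utility>

// Hypothetical helper (not part of the SDK or ORB_SLAM2): swaps the first and
// third byte of every packed 3-byte pixel in place. Returns false if the
// buffer pointer is null, true otherwise.
bool SwapRedBlue(unsigned char* data, std::size_t width, std::size_t height)
{
    if (data == nullptr)
    {
        return false;
    }

    // With no row padding the image is just width * height consecutive pixels.
    const std::size_t pixelCount = width * height;
    for (std::size_t p = 0; p < pixelCount; ++p)
    {
        std::swap(data[p * 3], data[p * 3 + 2]);
    }
    return true;
}
```

For a 2x1 image holding a pure red pixel followed by a pure blue one in RGB order (`255,0,0, 0,0,255`), the call leaves `0,0,255, 255,0,0` in the buffer, which is the same two colors in BGR order.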

// Convert the data stream to Mat format
bool Convert2Mat(MV_FRAME_OUT_INFO_EX* pstImageInfo, unsigned char * pData)
{
    cv::Mat srcImage;

    if (pstImageInfo->enPixelType == PixelType_Gvsp_Mono8)
    {
        srcImage = cv::Mat(pstImageInfo->nHeight, pstImageInfo->nWidth, CV_8UC1, pData);
    }
    else if (pstImageInfo->enPixelType == PixelType_Gvsp_RGB8_Packed)
    {
        RGB2BGR(pData, pstImageInfo->nWidth, pstImageInfo->nHeight);
        srcImage = cv::Mat(pstImageInfo->nHeight, pstImageInfo->nWidth, CV_8UC3, pData);
    }
    else
    {
        printf("unsupported pixel format\n");
        return false;
    }

    if (NULL == srcImage.data)
    {
        return false;
    }

    // Save the converted image to a local file (disabled)
    // try {
    //#if defined (VC9_COMPILE)
    //     cvSaveImage("MatImage.jpg", &(IplImage(srcImage)));
    //#else
    //     cv::imwrite("MatImage.jpg", srcImage);
    //#endif
    // }
    // catch (cv::Exception& ex) {
    //     fprintf(stderr, "Exception saving image to bmp format: %s\n", ex.what());
    // }

    srcImage.release();

    return true;
}
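Convert2Mat wraps the SDK buffer in a cv::Mat without copying it, so the Mat is only valid if the buffer really holds height × width × channels bytes. A size check along those lines could look like the sketch below. It is independent of OpenCV and the SDK; `ExpectedFrameBytes` and `BufferFitsFrame` are hypothetical helper names, and the layout (tightly packed rows, one byte per channel) is the same assumption the Mono8 and RGB8_Packed branches rely on.

```cpp
#include <cstddef>

// Hypothetical helper: number of bytes in a tightly packed 8-bit image.
std::size_t ExpectedFrameBytes(std::size_t width, std::size_t height, std::size_t channels)
{
    return width * height * channels;
}

// Hypothetical helper: true if a buffer of bufferBytes bytes is large enough
// to hold a non-empty image of the given dimensions.
bool BufferFitsFrame(std::size_t bufferBytes, std::size_t width,
                     std::size_t height, std::size_t channels)
{
    if (width == 0 || height == 0 || channels == 0)
    {
        return false;
    }
    return ExpectedFrameBytes(width, height, channels) <= bufferBytes;
}
```

A 640x480 Mono8 frame needs 307200 bytes and the same frame in RGB8 needs 921600, so a buffer sized for Mono8 would fail the check if the camera were switched to RGB8 output.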

int main(int argc, char **argv)
{
    // Only the vocabulary and settings paths are used; frames come from the camera through the SDK.
    if (argc < 3)
    {
        cerr << endl << "Usage: ./path_to_PF_ORB path_to_vocabulary path_to_settings" << endl;
        return 1;
    }

    int nRet = MV_OK;
    void* handle = NULL;

    // Create SLAM system. It initializes all system threads and gets ready to process frames.
    ORB_SLAM2::System SLAM(argv[1], argv[2], ORB_SLAM2::System::MONOCULAR, true);

    cout << endl << "-------" << endl;
    cout << "Start processing sequence ..." << endl;

    // Main loop

    do
    {
        // Enumerate devices
        MV_CC_DEVICE_INFO_LIST stDeviceList;
        memset(&stDeviceList, 0, sizeof(MV_CC_DEVICE_INFO_LIST));

        nRet = MV_CC_EnumDevices(MV_GIGE_DEVICE | MV_USB_DEVICE, &stDeviceList);
        if (MV_OK != nRet)
        {
            printf("Enum Devices fail! nRet [0x%x]\n", nRet);
            break;
        }

        if (stDeviceList.nDeviceNum > 0)
        {
            for (unsigned int i = 0; i < stDeviceList.nDeviceNum; i++)
            {
                printf("[device %d]:\n", i);
                MV_CC_DEVICE_INFO* pDeviceInfo = stDeviceList.pDeviceInfo[i];
                if (NULL == pDeviceInfo)
                {
                    break;
                }
                PrintDeviceInfo(pDeviceInfo);
            }
        }
        else
        {
            printf("Find No Devices!\n");
            break;
        }

        // Select the device to connect to (fixed to the first device; the prompt is disabled)
        //printf("Please Input camera index:");
        unsigned int nIndex = 0;
        //scanf("%d", &nIndex);

        if (nIndex >= stDeviceList.nDeviceNum)
        {
            printf("Input error!\n");
            break;
        }

        // Create a handle for the selected device
        nRet = MV_CC_CreateHandle(&handle, stDeviceList.pDeviceInfo[nIndex]);
        if (MV_OK != nRet)
        {
            printf("Create Handle fail! nRet [0x%x]\n", nRet);
            break;
        }

        // Open device
        nRet = MV_CC_OpenDevice(handle);
        if (MV_OK != nRet)
        {
            printf("Open Device fail! nRet [0x%x]\n", nRet);
            break;
        }

        // Detect the optimal network packet size (only applies to GigE cameras)
        if (stDeviceList.pDeviceInfo[nIndex]->nTLayerType == MV_GIGE_DEVICE)
        {
            int nPacketSize = MV_CC_GetOptimalPacketSize(handle);
            if (nPacketSize > 0)
            {
                nRet = MV_CC_SetIntValue(handle, "GevSCPSPacketSize", nPacketSize);
                if (nRet != MV_OK)
                {
                    printf("Warning: Set Packet Size fail nRet [0x%x]!\n", nRet);
                }
            }
            else
            {
                printf("Warning: Get Packet Size fail nRet [0x%x]!\n", nPacketSize);
            }
        }

        // Turn trigger mode off so the camera streams continuously
        nRet = MV_CC_SetEnumValue(handle, "TriggerMode", 0);
        if (MV_OK != nRet)
        {
            printf("Set Trigger Mode fail! nRet [0x%x]\n", nRet);
            break;
        }

        // Get payload size (the number of bytes needed to hold one frame)
        MVCC_INTVALUE stParam;
        memset(&stParam, 0, sizeof(MVCC_INTVALUE));
        nRet = MV_CC_GetIntValue(handle, "PayloadSize", &stParam);
        if (MV_OK != nRet)
        {
            printf("Get PayloadSize fail! nRet [0x%x]\n", nRet);
            break;
        }
        g_nPayloadSize = stParam.nCurValue;

        // Start grabbing images
        nRet = MV_CC_StartGrabbing(handle);
        if (MV_OK != nRet)
        {
            printf("Start Grabbing fail! nRet [0x%x]\n", nRet);
            break;
        }

        // Data conversion (the Convert2Mat call is disabled; the conversion is done inline below)
        //bool bConvertRet = false;
        //bConvertRet = Convert2Mat(&stImageInfo, pData);

        // Allocate the frame buffer once and reuse it for every frame,
        // instead of allocating a new buffer per frame and never freeing it.
        unsigned char * pData = (unsigned char *)malloc(sizeof(unsigned char) * (g_nPayloadSize));
        if (pData == NULL)
        {
            printf("Allocate memory failed.\n");
        }

        int timeStamps = 0;

        // The loop is skipped if the allocation failed and runs until a frame cannot be processed.
        for (; pData != NULL; timeStamps++)
        {
            cv::Mat srcImage;

            MV_FRAME_OUT_INFO_EX stImageInfo = { 0 };
            memset(&stImageInfo, 0, sizeof(MV_FRAME_OUT_INFO_EX));

            // Get one frame from the camera with timeout=1000ms
            nRet = MV_CC_GetOneFrameTimeout(handle, pData, g_nPayloadSize, &stImageInfo, 1000);
            if (nRet == MV_OK)
            {
                printf("Get One Frame: Width[%d], Height[%d], nFrameNum[%d]\n",
                    stImageInfo.nWidth, stImageInfo.nHeight, stImageInfo.nFrameNum);
            }
            else
            {
                printf("No data[0x%x]\n", nRet);
                break;
            }

            // Wrap the buffer in a cv::Mat header (no copy is made)
            if (stImageInfo.enPixelType == PixelType_Gvsp_Mono8)
            {
                srcImage = cv::Mat(stImageInfo.nHeight, stImageInfo.nWidth, CV_8UC1, pData);
            }
            else if (stImageInfo.enPixelType == PixelType_Gvsp_RGB8_Packed)
            {
                RGB2BGR(pData, stImageInfo.nWidth, stImageInfo.nHeight);
                srcImage = cv::Mat(stImageInfo.nHeight, stImageInfo.nWidth, CV_8UC3, pData);
            }
            else
            {
                // Leave the loop so the camera is stopped and closed below
                printf("unsupported pixel format\n");
                break;
            }

            if (NULL == srcImage.data)
            {
                break;
            }

            // Pass the image to the SLAM system; the frame index serves as the timestamp
            SLAM.TrackMonocular(srcImage, timeStamps);
        }

        free(pData);
        pData = NULL;

        // print result
        /* if (bConvertRet)
        {
            printf("OpenCV format convert finished.\n");
            free(pData);
            pData = NULL;
        }
        else
        {
            printf("OpenCV format convert failed.\n");
            free(pData);
            pData = NULL;
            break;
        }*/

        // Stop grabbing images
        nRet = MV_CC_StopGrabbing(handle);
        if (MV_OK != nRet)
        {
            printf("Stop Grabbing fail! nRet [0x%x]\n", nRet);
            break;
        }

        // Close device
        nRet = MV_CC_CloseDevice(handle);
        if (MV_OK != nRet)
        {
            printf("Close Device fail! nRet [0x%x]\n", nRet);
            break;
        }

        // Destroy handle
        nRet = MV_CC_DestroyHandle(handle);
        if (MV_OK != nRet)
        {
            printf("Destroy Handle fail! nRet [0x%x]\n", nRet);
            break;
        }
    } while (0);

    // If any step failed, make sure the handle is still released
    if (nRet != MV_OK)
    {
        if (handle != NULL)
        {
            MV_CC_DestroyHandle(handle);
            handle = NULL;
        }
    }

    // Stop all SLAM threads, then save the keyframe trajectory
    SLAM.Shutdown();
    SLAM.SaveKeyFrameTrajectoryTUM("KeyFrameTrajectory.txt");

    return 0;
}
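The listing passes the frame counter to `TrackMonocular` as the timestamp. That is enough for tracking to run, but the times written to KeyFrameTrajectory.txt are then frame indices rather than seconds. One possible alternative is to pass elapsed wall-clock time instead. The sketch below assumes nothing beyond the C++11 standard library; `FrameClock` is a hypothetical helper name and is not part of ORB_SLAM2 or the camera SDK.

```cpp
#include <chrono>

// Hypothetical helper: reports the seconds elapsed since construction as a
// double, which is the type TrackMonocular takes for its timestamp argument.
class FrameClock
{
public:
    FrameClock() : start_(std::chrono::steady_clock::now()) {}

    // Elapsed time since construction, in seconds.
    double Seconds() const
    {
        const std::chrono::duration<double> elapsed =
            std::chrono::steady_clock::now() - start_;
        return elapsed.count();
    }

private:
    std::chrono::steady_clock::time_point start_;
};
```

To use it, construct one `FrameClock` before the grab loop and pass its `Seconds()` value as the second argument of `SLAM.TrackMonocular` in place of the counter.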