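Crawling Douban Data with a Java Web Crawler

Introduction

A web crawler (also called a web spider or web robot, and in the FOAF community more often a web chaser) is a program or script that automatically fetches information from the World Wide Web according to a set of rules. Less common names include ant, automatic indexer, emulator, and worm.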

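A web crawler automatically retrieves web pages: it downloads pages from the World Wide Web on behalf of a search engine and is one of the engine's key components. A traditional crawler starts from the URLs of one or more seed pages, extracts the URLs found on those pages, and, while crawling, keeps pulling new URLs from the current page into a queue until a stop condition is met. In today's big-data era, crawlers help us collect more data and filter it down to the useful parts.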
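The seed-and-queue loop described above can be sketched in a few lines of Java. This is only an illustration under stated assumptions: the class name `FrontierSketch`, the method `crawl`, and the in-memory `linkGraph` (standing in for "download the page and extract its links") are all hypothetical and are not part of the project built later in this article.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;

// Hypothetical illustration of a crawl frontier; not part of the Douban project.
class FrontierSketch {

    /**
     * Visits pages breadth-first from the seed URLs and stops once maxPages
     * pages have been visited or the queue is empty (the stop condition).
     * linkGraph maps a URL to the URLs found on that page.
     */
    static List<String> crawl(List<String> seeds, Map<String, List<String>> linkGraph, int maxPages) {
        Queue<String> frontier = new ArrayDeque<>();
        Set<String> seen = new HashSet<>();
        for (String seed : seeds) {
            if (seen.add(seed)) {
                frontier.add(seed);
            }
        }
        List<String> visited = new ArrayList<>();
        while (!frontier.isEmpty() && visited.size() < maxPages) {
            String url = frontier.poll();
            visited.add(url);
            // Queue every link that has not been seen before
            for (String link : linkGraph.getOrDefault(url, Collections.<String>emptyList())) {
                if (seen.add(link)) {
                    frontier.add(link);
                }
            }
        }
        return visited;
    }

    public static void main(String[] args) {
        Map<String, List<String>> graph = new HashMap<>();
        graph.put("a", Arrays.asList("b", "c"));
        graph.put("b", Arrays.asList("c", "d"));
        graph.put("c", Arrays.asList("a"));
        System.out.println(crawl(Arrays.asList("a"), graph, 3)); // prints [a, b, c]
    }
}
```

The `seen` set is what keeps the crawler from looping forever when pages link back to each other.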

Analyzing the Douban Site

Next, let's analyze the Douban site to see how its data can be crawled.

URL

https://movie.douban.com/tag/#/

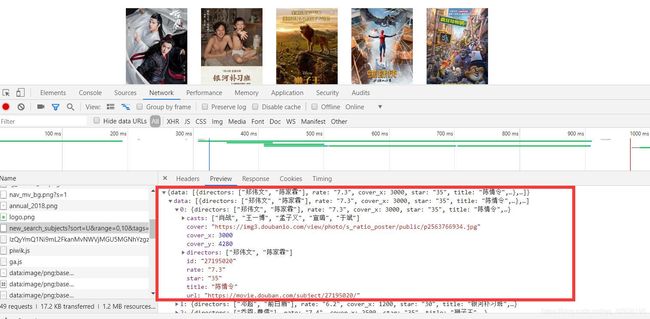

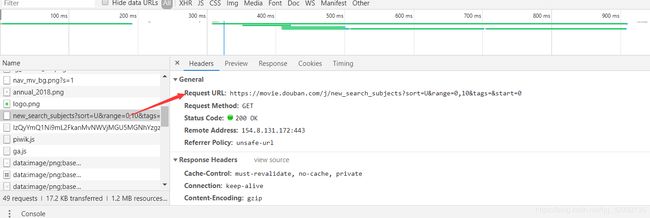

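Press F12 in Chrome and open the Network tab. Among the requests you will find the following URL, which returns the movie data as JSON: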

https://movie.douban.com/j/new_search_subjects?sort=U&range=0,10&tags=&start=0
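The `start` parameter at the end of this URL is the record offset, so paging through the list amounts to requesting the same URL with increasing offsets. Here is a minimal sketch of generating those URLs. The class `PageUrlSketch` and method `pageUrls` are hypothetical names, and the page size of 20 is an assumption taken from the step used by the main loop later in this article.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that lists the URLs the crawler would request.
class PageUrlSketch {

    static final String BASE =
            "https://movie.douban.com/j/new_search_subjects?sort=U&range=0,10&tags=&start=";

    /** Builds one URL per page, with offsets 0, pageSize, 2 * pageSize, ... below total. */
    static List<String> pageUrls(int total, int pageSize) {
        List<String> urls = new ArrayList<>();
        for (int start = 0; start < total; start += pageSize) {
            urls.add(BASE + start);
        }
        return urls;
    }

    public static void main(String[] args) {
        // 50 records at 20 per page -> offsets 0, 20, 40
        for (String url : pageUrls(50, 20)) {
            System.out.println(url);
        }
    }
}
```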

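Next, we can create a Maven project to do the crawling.

The Maven project structure is shown in the figure below:

These are the project's Maven dependencies. The project uses the MyBatis persistence framework; if it is new to you, read up on it first (its usage is similar to Hibernate). The database is MySQL.

pom.xml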

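    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>com.liuting</groupId>
        <artifactId>java_Crawler</artifactId>
        <packaging>war</packaging>
        <version>0.0.1-SNAPSHOT</version>
        <name>java_Crawler Maven Webapp</name>
        <url>http://maven.apache.org</url>
        <dependencies>
            <dependency>
                <groupId>junit</groupId>
                <artifactId>junit</artifactId>
                <version>4.12</version>
                <scope>test</scope>
            </dependency>
            <dependency>
                <groupId>javax.servlet</groupId>
                <artifactId>javax.servlet-api</artifactId>
                <version>4.0.1</version>
                <scope>provided</scope>
            </dependency>
            <dependency>
                <groupId>org.json</groupId>
                <artifactId>json</artifactId>
                <version>20160810</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>fastjson</artifactId>
                <version>1.2.47</version>
            </dependency>
            <dependency>
                <groupId>mysql</groupId>
                <artifactId>mysql-connector-java</artifactId>
                <version>5.1.44</version>
            </dependency>
            <dependency>
                <groupId>org.mybatis</groupId>
                <artifactId>mybatis</artifactId>
                <version>3.5.1</version>
            </dependency>
        </dependencies>
        <build>
            <finalName>java_Crawler</finalName>
            <plugins>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <version>3.7.0</version>
                    <configuration>
                        <source>1.8</source>
                        <target>1.8</target>
                        <encoding>UTF-8</encoding>
                    </configuration>
                </plugin>
            </plugins>
        </build>
    </project>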

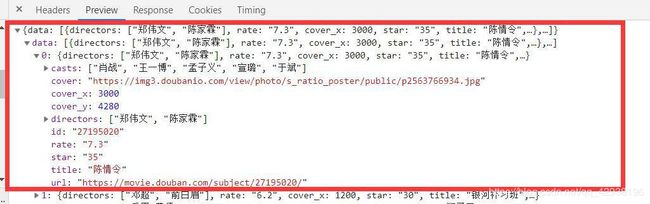

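First, we create an entity class in the model package. Its fields mirror those of a Douban movie, that is, the fields of the JSON objects returned by the Douban movie request.

Entity class

Movie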

    package com.liuting.model;

    public class Movie {

        private String id;        // movie id
        private String directors; // directors
        private String title;     // title
        private String cover;     // cover image
        private String rate;      // rating
        private String casts;     // cast

        public String getId() {
            return id;
        }

        public void setId(String id) {
            this.id = id;
        }

        public String getDirectors() {
            return directors;
        }

        public void setDirectors(String directors) {
            this.directors = directors;
        }

        public String getTitle() {
            return title;
        }

        public void setTitle(String title) {
            this.title = title;
        }

        public String getCover() {
            return cover;
        }

        public void setCover(String cover) {
            this.cover = cover;
        }

        public String getRate() {
            return rate;
        }

        public void setRate(String rate) {
            this.rate = rate;
        }

        public String getCasts() {
            return casts;
        }

        public void setCasts(String casts) {
            this.casts = casts;
        }
    }

MovieMapper (interface)

    package com.liuting.mapper;

    import java.util.List;

    import com.liuting.model.Movie;

    public interface MovieMapper {

        // Inserts one movie record
        void insert(Movie movie);

        // Returns all stored movies
        List<Movie> findAll();
    }

Next, write the configuration files under the resource directory.

config.properties

    #oracle9i
    #driver=oracle.jdbc.driver.OracleDriver
    #url=jdbc:oracle:thin:@localhost:1521:ora9
    #user=test
    #pwd=test

    #sql2005
    #driver=com.microsoft.sqlserver.jdbc.SQLServerDriver
    #url=jdbc:sqlserver://localhost:1423;DatabaseName=test
    #user=sa
    #pwd=sa

    #sql2000
    #driver=com.microsoft.jdbc.sqlserver.SQLServerDriver
    #url=jdbc:microsoft:sqlserver://localhost:1433;databaseName=unit6DB
    #user=sa
    #pwd=888888

    #mysql5
    driver=com.mysql.jdbc.Driver
    url=jdbc:mysql://127.0.0.1:3306/test?useUnicode=true&characterEncoding=utf8&serverTimezone=GMT
    username=root
    password=123

MovieMapper.xml (the mapping file)

    INSERT INTO movie(id,title,cover,rate,casts,directors)
    VALUES (#{id},#{title},#{cover},#{rate},#{casts},#{directors})
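Only the SQL of the mapping file survives in the listing above; the surrounding XML elements are missing. A plausible reconstruction is sketched below. It assumes the usual MyBatis convention that the `namespace` is the fully qualified name of the mapper interface and that each statement `id` matches a method name. The `findAll` statement is not in the original listing at all and is included only as a guess at what would back the interface method.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE mapper
        PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN"
        "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
<!-- Reconstructed wrapper: only the INSERT statement text comes from the original listing -->
<mapper namespace="com.liuting.mapper.MovieMapper">

    <insert id="insert" parameterType="com.liuting.model.Movie">
        INSERT INTO movie(id,title,cover,rate,casts,directors)
        VALUES (#{id},#{title},#{cover},#{rate},#{casts},#{directors})
    </insert>

    <!-- Assumption: a simple select backing MovieMapper.findAll() -->
    <select id="findAll" resultType="com.liuting.model.Movie">
        SELECT id,title,cover,rate,casts,directors FROM movie
    </select>

</mapper>
```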

mybatis-config.xml (the MyBatis configuration file)
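The contents of this file were not preserved in the listing. As a rough sketch of what it likely needs, the configuration below loads the connection settings from config.properties and registers the mapper file. The environment id `development`, the `POOLED` data source type, and the mapper resource path are assumptions; adjust the path to wherever MovieMapper.xml actually sits on the classpath.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE configuration
        PUBLIC "-//mybatis.org//DTD Config 3.0//EN"
        "http://mybatis.org/dtd/mybatis-3-config.dtd">
<configuration>
    <!-- Load driver, url, username and password from config.properties -->
    <properties resource="config.properties"/>

    <environments default="development">
        <environment id="development">
            <transactionManager type="JDBC"/>
            <dataSource type="POOLED">
                <property name="driver" value="${driver}"/>
                <property name="url" value="${url}"/>
                <property name="username" value="${username}"/>
                <property name="password" value="${password}"/>
            </dataSource>
        </environment>
    </environments>

    <mappers>
        <!-- Assumed location of the mapping file -->
        <mapper resource="com/liuting/mapper/MovieMapper.xml"/>
    </mappers>
</configuration>
```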

The utility class we need

GetJson

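    package com.liuting.test;

    import java.io.ByteArrayOutputStream;
    import java.io.IOException;
    import java.io.InputStream;
    import java.net.HttpURLConnection;
    import java.net.MalformedURLException;
    import java.net.URL;

    import org.json.JSONObject;

    public class GetJson {

        /**
         * Requests the URL and parses the response body.
         * comefrom = 1: the body is plain JSON.
         * comefrom = 2: the JSON is wrapped in a pair of parentheses.
         * Returns null if the request fails or the status code is not 200.
         */
        public JSONObject getHttpJson(String url, int comefrom) throws Exception {
            try {
                URL realUrl = new URL(url);
                HttpURLConnection connection = (HttpURLConnection) realUrl.openConnection();
                connection.setRequestProperty("accept", "*/*");
                connection.setRequestProperty("connection", "Keep-Alive");
                connection.setRequestProperty("user-agent", "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1;SV1)");
                // Open the actual connection
                connection.connect();
                // Request succeeded
                if (connection.getResponseCode() == 200) {
                    // try-with-resources closes both streams even if reading fails
                    try (InputStream is = connection.getInputStream();
                         ByteArrayOutputStream baos = new ByteArrayOutputStream()) {
                        // A small read buffer is enough; the original allocated 10 MB
                        byte[] buffer = new byte[8192];
                        int len;
                        while ((len = is.read(buffer)) != -1) {
                            baos.write(buffer, 0, len);
                        }
                        // Decode as UTF-8 explicitly so Chinese text does not depend on the platform charset
                        String jsonString = baos.toString("UTF-8");
                        // Convert the text into a JSON object
                        return getJsonString(jsonString, comefrom);
                    }
                }
            } catch (MalformedURLException e) {
                e.printStackTrace();
            } catch (IOException ex) {
                ex.printStackTrace();
            }
            return null;
        }

        public JSONObject getJsonString(String str, int comefrom) throws Exception {
            JSONObject jo = null;
            if (comefrom == 1) {
                return new JSONObject(str);
            } else if (comefrom == 2) {
                // NOTE: the original listing is cut off inside this branch ("for(int i=0;i").
                // The rest of the branch is a reconstruction, assuming the JSON sits
                // between the first "(" and the last ")" of the response.
                int indexStart = -1;
                // Character processing: find the opening parenthesis
                for (int i = 0; i < str.length(); i++) {
                    if (str.charAt(i) == '(') {
                        indexStart = i;
                        break;
                    }
                }
                int indexEnd = str.lastIndexOf(')');
                if (indexStart >= 0 && indexEnd > indexStart) {
                    jo = new JSONObject(str.substring(indexStart + 1, indexEnd));
                }
            }
            return jo;
        }
    }

Finally, we write a launcher class for the Douban crawl that inserts the crawled data into the database.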

Main

    package com.liuting.test;

    import java.io.IOException;
    import java.io.InputStream;
    import java.util.List;

    import org.apache.ibatis.io.Resources;
    import org.apache.ibatis.session.SqlSession;
    import org.apache.ibatis.session.SqlSessionFactory;
    import org.apache.ibatis.session.SqlSessionFactoryBuilder;
    import org.json.JSONArray;
    import org.json.JSONObject;

    import com.alibaba.fastjson.JSON;
    import com.liuting.mapper.MovieMapper;
    import com.liuting.model.Movie;

    public class Main {

        public static void main(String[] args) {
            String resource = "mybatis-config.xml"; // path of the MyBatis configuration file
            InputStream inputStream;
            try {
                inputStream = Resources.getResourceAsStream(resource); // read the configuration file
            } catch (IOException e) {
                e.printStackTrace();
                return; // nothing can be done without the configuration
            }
            SqlSessionFactory sqlSessionFactory = new SqlSessionFactoryBuilder().build(inputStream); // build the MyBatis session factory
            // try-with-resources closes the session when crawling ends
            try (SqlSession sqlSession = sqlSessionFactory.openSession()) {
                MovieMapper movieMapper = sqlSession.getMapper(MovieMapper.class); // get the mapper (DAO) from MyBatis
                int start;      // offset of the first record on the current page
                int total = 0;  // number of records fetched so far
                int end = 9979; // 9979 records in total
                for (start = 0; start <= end; start += 20) {
                    try {
                        String address = "https://movie.douban.com/j/new_search_subjects?sort=U&range=0,10&tags=&start=" + start;
                        JSONObject dayLine = new GetJson().getHttpJson(address, 1);
                        System.out.println("start:" + start);
                        if (dayLine == null) {
                            continue; // the request failed; skip this page
                        }
                        JSONArray json = dayLine.getJSONArray("data");
                        List<Movie> list = JSON.parseArray(json.toString(), Movie.class);
                        for (Movie movie : list) {
                            movieMapper.insert(movie);
                            sqlSession.commit();
                        }
                        total += list.size();
                        System.out.println("Crawling in progress, records fetched so far: " + total);
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                }
            }
        }
    }
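The loop above hardcodes the total of 9979 records and sends requests back to back. One possible variation, sketched below, stops as soon as a page comes back empty and pauses between requests. Everything here is hypothetical: `PagingLoopSketch`, `fetchAll`, and the `fetchPage` function (which stands in for "request one page and parse it into a list") are illustration-only names, and the pause length is an arbitrary choice.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.IntFunction;

// Hypothetical paging loop; fetchPage stands in for the HTTP request plus JSON parsing.
class PagingLoopSketch {

    /**
     * Calls fetchPage with offsets 0, pageSize, 2 * pageSize, ... and collects the results.
     * Stops at the first empty page, at maxItems, or when the thread is interrupted.
     */
    static <T> List<T> fetchAll(IntFunction<List<T>> fetchPage, int pageSize, int maxItems, long pauseMillis) {
        List<T> all = new ArrayList<>();
        for (int start = 0; start < maxItems; start += pageSize) {
            List<T> page = fetchPage.apply(start);
            if (page == null || page.isEmpty()) {
                break; // no more data
            }
            all.addAll(page);
            try {
                Thread.sleep(pauseMillis); // be polite to the server between requests
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }
        return all;
    }

    public static void main(String[] args) {
        // Fake data source with 45 records, served 20 at a time
        List<Integer> data = new ArrayList<>();
        for (int i = 0; i < 45; i++) {
            data.add(i);
        }
        IntFunction<List<Integer>> fake = start -> start >= data.size()
                ? Collections.<Integer>emptyList()
                : data.subList(start, Math.min(start + 20, data.size()));
        System.out.println(fetchAll(fake, 20, 1000, 0L).size()); // prints 45
    }
}
```

In the real crawler, `fetchPage` would wrap the call to `GetJson.getHttpJson` and the `JSON.parseArray` conversion, returning an empty list when the request fails.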