OpenStack -- Nova components and the message queue

Nova components

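Nova is the compute fabric controller of an OpenStack cloud: every activity in the lifecycle of an instance in the cloud is handled by Nova. This makes Nova a platform responsible for managing compute resources, networking, authorization, and the scalability the cloud needs. Nova itself provides no virtualization capability, however; instead it interacts with the supported hypervisors through APIs such as the libvirt API. Nova exposes its services through a web services API that is compatible with the Amazon Web Services (AWS) EC2 API.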

Main components of Nova

The Nova cloud architecture consists of the following main components:

API Server (nova-api)

Message Queue (rabbit-mq server)

Compute Workers (nova-compute)

Network Controller (nova-network)

Volume Worker (nova-volume)

Scheduler (nova-scheduler)

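Related introduction: https://www.jb51.net/article/96997.htm

Message Queue

A message is data that is transferred between applications.

A message queue is a way for applications to communicate: the sender returns as soon as the message has been sent, and the messaging system is responsible for delivering it reliably. A producer only publishes messages to the queue and does not care who picks them up; a consumer only takes messages from the queue and does not care who produced them. A message queue is therefore an asynchronous collaboration mechanism between applications.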
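The decoupling described above can be sketched with Python's standard library alone. This is only an illustration under simplifying assumptions: `queue.Queue` stands in for the messaging system, and the names `run_demo` and `consumer` are made up for the example.

```python
import queue
import threading


def run_demo():
    """Producer and consumer share only the queue; neither knows the other."""
    mq = queue.Queue()      # stands in for the messaging system
    received = []

    def consumer():
        while True:
            msg = mq.get()  # blocks until a message is available
            if msg is None:  # sentinel: the producer has finished
                break
            received.append(msg)

    worker = threading.Thread(target=consumer)
    worker.start()

    # The producer publishes and moves on; put() does not wait for the consumer.
    for i in range(3):
        mq.put({"id": i, "body": "hello"})
    mq.put(None)

    worker.join()
    return received


if __name__ == "__main__":
    print(run_demo())
```

A real broker adds persistence, routing, and acknowledgements on top of this basic hand-off.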

The RabbitMQ message queue

Quoted from: https://blog.csdn.net/whoamiyang/article/details/54954780


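Concepts:

Broker: provides a transport service. Its role is to maintain a route from producer to consumer and to make sure data is delivered in the specified way.

Exchange: the message exchange. It decides by which rules a message is routed, and to which queue.

Queue: a buffer that stores messages. Every message is delivered to one or more queues.

Binding: ties an exchange and a queue together according to a routing rule.

Routing Key: the key an exchange uses to decide where to deliver a message.

vhost: virtual host. One broker can contain several vhosts, which are used to separate the permissions of different users.

Producer: the program that publishes messages.

Consumer: the program that receives messages.

Channel: a message channel. Several channels can be opened within each client connection.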

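Exchanges

Quoted from: https://blog.csdn.net/jincm13/article/details/38705719

By lifetime, exchanges fall into three main kinds: durable, transient, and auto-delete.

A durable exchange persists in the RabbitMQ server. It is not removed by a system restart or by the termination of an application, and its data is kept on disk.

A transient exchange lives in memory and disappears when the system shuts down.

An auto-delete exchange is removed automatically once it is no longer in use (in RabbitMQ, when its last binding is removed), which typically happens when the application that used it terminates. This frees server resources.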

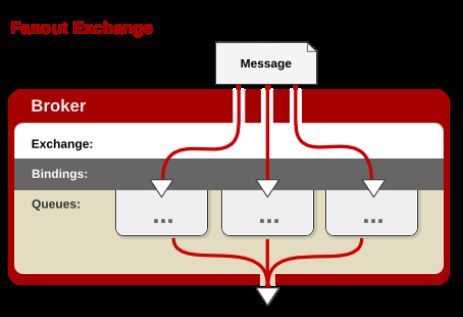

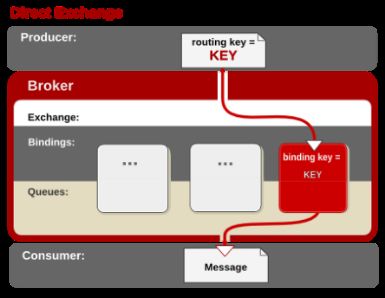

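By the way they route published messages, exchanges can also be divided into three common types: fanout, direct, and topic.

A fanout exchange does not inspect the Routing Key of the messages it receives; it forwards every message to all queues bound to it. It forwards fastest but is the least secure, because a consumer can receive messages that are not meant for it.

A direct exchange requires an exact match between the Routing Key and the Binding Key. For example, a message with Routing Key = Cloud is forwarded only to queues bound with Binding Key = Cloud. Forwarding is fast and security is good, but it lacks flexibility and needs a lot of configuration.

A topic exchange is the most flexible. It matches the Routing Key of a message against Binding Key patterns and forwards the message to every queue whose binding matches. Binding Keys support wildcards: "*" matches exactly one word and "#" matches zero or more words. There is also a fourth type, headers, which matches on the message headers instead of the routing key. Apart from that, a headers exchange behaves just like a direct exchange, but it performs much worse and is rarely used today.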
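The wildcard rules for topic exchanges can be made concrete with a small matcher. This is a minimal sketch of the matching rule as described above, not RabbitMQ's actual implementation; the function name `topic_match` is an assumption made for the example, and keys are taken to be dot-separated words.

```python
def topic_match(binding_key, routing_key):
    """Return True if routing_key matches the binding_key pattern.

    '*' stands for exactly one word, '#' for zero or more words.
    """
    pattern = binding_key.split(".")
    words = routing_key.split(".")

    def match(p, w):
        if p == len(pattern):
            # Pattern exhausted: succeed only if every word was consumed.
            return w == len(words)
        if pattern[p] == "#":
            # '#' either absorbs nothing, or absorbs one word and stays active.
            return match(p + 1, w) or (w < len(words) and match(p, w + 1))
        if w == len(words):
            return False
        if pattern[p] == "*" or pattern[p] == words[w]:
            return match(p + 1, w + 1)
        return False

    return match(0, 0)


if __name__ == "__main__":
    for key in ("compute", "compute.host1", "compute.host1.extra"):
        print(key, topic_match("compute.*", key), topic_match("compute.#", key))
```

With a binding key of `compute.*` only two-word keys such as `compute.host1` match, while `compute.#` also accepts `compute` on its own and any longer key that starts with `compute`.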

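Queues

Quoted from: https://blog.csdn.net/jincm13/article/details/38705719

Like exchanges, message queues can be durable, transient, or auto-delete.

A durable queue is not removed by a system restart or by the termination of an application; its data is kept on disk.

A transient queue stops working when the server shuts down.

An auto-delete queue is deleted by the server automatically once no application is using it any more.

A queue stores messages in memory, on disk, or in a combination of both.

Queues are created by consumer applications and are mainly used to store and forward the messages sent by exchanges.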

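Bindings

A binding associates a message queue with an exchange. It is a routing rule that connects an exchange to a queue by means of a routing key, so an exchange can be thought of as a routing table made up of bindings.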

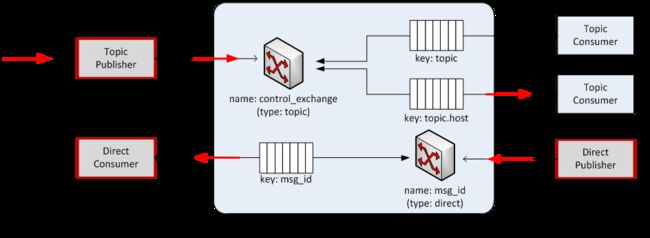
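The "routing table made up of bindings" idea can be sketched in a few lines. The class below is a toy model written for this article, not a RabbitMQ client: `ToyExchange`, `bind`, and `route` are hypothetical names, and only the direct and fanout rules are modelled.

```python
class ToyExchange:
    """An exchange modelled as nothing more than a list of bindings."""

    def __init__(self, kind):
        if kind not in ("direct", "fanout"):
            raise ValueError("unsupported exchange type: %s" % kind)
        self.kind = kind
        self.bindings = []  # (binding_key, queue_name) pairs: the routing table

    def bind(self, queue_name, binding_key=""):
        """Add one routing rule connecting this exchange to a queue."""
        self.bindings.append((binding_key, queue_name))

    def route(self, routing_key):
        """Return the names of the queues a message would be delivered to."""
        if self.kind == "fanout":
            # Fanout ignores the routing key entirely.
            return [queue for _, queue in self.bindings]
        # Direct requires an exact match of routing key and binding key.
        return [queue for key, queue in self.bindings if key == routing_key]


if __name__ == "__main__":
    direct = ToyExchange("direct")
    direct.bind("q_cloud", "Cloud")
    direct.bind("q_other", "Other")
    print(direct.route("Cloud"))
```

Routing a message is then just a lookup over the bindings, which is why adding or removing a binding changes delivery without touching producers or consumers.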

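Message queues in Nova

The components inside Nova communicate with each other through RPC (remote procedure calls), which are carried over a message queue using AMQP (Advanced Message Queuing Protocol).

AMQP is an open standard application-layer protocol designed for message-oriented middleware, and RabbitMQ is an open-source implementation of it. OpenStack can use other implementations, such as ActiveMQ, but most OpenStack releases use RabbitMQ.

Nova answers requests asynchronously, using a callback that fires when the response is received. Because the communication is asynchronous, no user is left stuck waiting for a long time. This matters because many API calls are expected to be time-consuming, for example launching an instance or uploading an image.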
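The callback pattern can be illustrated with the standard library. This sketch is not Nova code: `slow_launch` is a hypothetical stand-in for a long-running operation, and a thread pool plays the part of the remote worker.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_launch(name):
    """Hypothetical long-running operation, such as launching an instance."""
    time.sleep(0.1)
    return "%s launched" % name


def demo():
    notifications = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        future = pool.submit(slow_launch, "vm-1")
        # The callback fires when the result arrives; the caller does not block here.
        future.add_done_callback(lambda f: notifications.append(f.result()))
        accepted = "request accepted"  # the caller can answer the user right away
    # Leaving the with-block waits for the worker, so the callback has run by now.
    return accepted, notifications


if __name__ == "__main__":
    print(demo())
```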

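Ways of sending RPC messages

Every Nova component connects to the message server. A component may be a message sender (such as API or Scheduler) or a message receiver (such as compute, volume, or network). There are two main ways to send a message: rpc.call and rpc.cast.

For example, when a user wants to launch an instance:

1. nova-api, acting as the message producer, wraps the "launch instance" request in an AMQP message and places it on the message queue through the topic exchange, using rpc.call;

2. nova-compute, acting as the message consumer, receives the message and performs the operation through the underlying virtualization software;

3. once the virtual machine has started, nova-compute, now acting as a producer, places an "instance launched" message on the corresponding reply queue through the direct exchange;

4. nova-api, acting as a consumer, receives that message and notifies the user.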
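The four steps above amount to a request queue plus a per-caller reply queue. The following sketch imitates that round trip with in-process queues; it is not oslo.messaging, and the names `request_queue`, `server_loop`, `call`, and the `start_instance` handler are assumptions made for the example.

```python
import queue
import threading
import uuid

request_queue = queue.Queue()  # plays the role of the topic queue


def server_loop(handlers):
    """Consume requests and send a reply to the caller's reply queue."""
    while True:
        msg = request_queue.get()
        if msg is None:  # sentinel used to stop the demo server
            break
        result = handlers[msg["method"]](**msg["args"])
        msg["reply_queue"].put({"msg_id": msg["msg_id"], "result": result})


def call(method, timeout=5, **args):
    """Two-way request: block until the matching reply arrives."""
    reply_queue = queue.Queue()  # plays the role of the direct reply queue
    msg_id = uuid.uuid4().hex
    request_queue.put({"method": method, "args": args,
                       "msg_id": msg_id, "reply_queue": reply_queue})
    reply = reply_queue.get(timeout=timeout)
    if reply["msg_id"] != msg_id:
        raise RuntimeError("reply does not belong to this request")
    return reply["result"]


def demo():
    handlers = {"start_instance": lambda name: "%s is ACTIVE" % name}
    server = threading.Thread(target=server_loop, args=(handlers,))
    server.start()
    try:
        return call("start_instance", name="vm-1")
    finally:
        request_queue.put(None)
        server.join()


if __name__ == "__main__":
    print(demo())
```

The message id is what lets a caller pair a reply with its request when several calls are in flight.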

rpc.cast

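rpc.cast: a one-way request. The message is sent to the message queue and no result is returned.

Take the nova-conductor service invoking build_and_run_instance (create and start an instance) on the nova-compute service as an example:

1. The nova-conductor service sends an RPC request to the compute queue of the message queue service. The request ends there; it does not wait for a final reply.

2. The nova-compute service obtains the message from the compute queue by way of the topic exchange and processes it.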
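A cast differs from a call only in that nothing comes back. The sketch below shows that with an in-process queue; it is an illustration rather than Nova or oslo.messaging code, and `compute_queue`, `cast`, and `compute_worker` are hypothetical names.

```python
import queue
import threading

compute_queue = queue.Queue()  # stand-in for the "compute" queue


def cast(method, **args):
    """One-way request: enqueue the message and return without waiting."""
    compute_queue.put({"method": method, "args": args})


def compute_worker(built):
    """Consume messages from the queue and act on them; no reply is sent."""
    while True:
        msg = compute_queue.get()
        if msg is None:  # sentinel used to stop the demo worker
            break
        if msg["method"] == "build_and_run_instance":
            built.append(msg["args"]["instance"])


def demo():
    built = []
    worker = threading.Thread(target=compute_worker, args=(built,))
    worker.start()
    result = cast("build_and_run_instance", instance="vm-1")
    compute_queue.put(None)
    worker.join()
    return result, built


if __name__ == "__main__":
    print(demo())
```

Because the sender never learns the outcome from the cast itself, any success or failure has to be reported through some other channel, such as a state change the caller can observe later.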

RPC calls when creating an instance

    # Start from the create function in nova/compute/api.py:
    def create(self, context, instance_type,
               image_href, kernel_id=None, ramdisk_id=None,
               min_count=None, max_count=None,
               display_name=None, display_description=None,
               key_name=None, key_data=None, security_group=None,
               availability_zone=None, forced_host=None, forced_node=None,
               user_data=None, metadata=None, injected_files=None,
               admin_password=None, block_device_mapping=None,
               access_ip_v4=None, access_ip_v6=None, requested_networks=None,
               config_drive=None, auto_disk_config=None, scheduler_hints=None,
               legacy_bdm=True, shutdown_terminate=False,
               check_server_group_quota=False):
        return self._create_instance(...)  # arguments elided in this excerpt

=>

    def _create_instance(self, context, instance_type,
                         image_href, kernel_id, ramdisk_id,
                         min_count, max_count,
                         display_name, display_description,
                         key_name, key_data, security_groups,
                         availability_zone, user_data, metadata, injected_files,
                         admin_password, access_ip_v4, access_ip_v6,
                         requested_networks, config_drive,
                         block_device_mapping, auto_disk_config, filter_properties,
                         reservation_id=None, legacy_bdm=True, shutdown_terminate=False,
                         check_server_group_quota=False):
        self.compute_task_api.build_instances(context,
                instances=instances, image=boot_meta,
                filter_properties=filter_properties,
                admin_password=admin_password,
                injected_files=injected_files,
                requested_networks=requested_networks,
                security_groups=security_groups,
                block_device_mapping=block_device_mapping,
                legacy_bdm=False)
        return (instances, reservation_id)

    # compute_task_api is an instance of conductor's ComputeTaskAPI class, which queues up compute tasks
    self.compute_task_api = conductor.ComputeTaskAPI()

    class ComputeTaskAPI(object):
        """ComputeTask API that queues up compute tasks for nova-conductor."""

        def __init__(self):
            # This class holds an rpcapi.ComputeTaskAPI member that acts as the RPC client
            self.conductor_compute_rpcapi = rpcapi.ComputeTaskAPI()

    class ComputeTaskAPI(object):
        """Client side of the conductor 'compute' namespaced RPC API"""

        def __init__(self):
            super(ComputeTaskAPI, self).__init__()
            # The oslo_messaging library wraps the low-level RPC machinery;
            # the target holds the parameters that describe the message destination
            target = messaging.Target(topic=CONF.conductor.topic,
                                      namespace='compute_task',
                                      version='1.0')
            self.client = rpc.get_client(target, serializer=serializer)

    def get_client(target, version_cap=None, serializer=None):
        return messaging.RPCClient(TRANSPORT,
                                   target,
                                   version_cap=version_cap,
                                   serializer=serializer)

    class RPCClient(object):
        """A class for invoking methods on remote servers.

        The RPCClient class is responsible for sending method invocations to remote
        servers via a messaging transport."""

=>

    def build_instances(self, context, instances, image,
                        filter_properties, admin_password, injected_files,
                        requested_networks, security_groups, block_device_mapping,
                        legacy_bdm=True):
        # Calls the build_instances function of the ComputeTaskManager class
        utils.spawn_n(self._manager.build_instances, context,
                      instances=instances, image=image,
                      filter_properties=filter_properties,
                      admin_password=admin_password, injected_files=injected_files,
                      requested_networks=requested_networks,
                      security_groups=security_groups,
                      block_device_mapping=block_device_mapping,
                      legacy_bdm=legacy_bdm)

    # The compute task manager class, which manages these operations
    class ComputeTaskManager(base.Base):
        """Namespace for compute methods.

        This class presents an rpc API for nova-conductor under the 'compute_task'
        namespace. The methods here are compute operations that are invoked
        by the API service. These methods see the operation to completion, which
        may involve coordinating activities on multiple compute nodes.
        """

=>

    def build_instances(self, context, instances, image, filter_properties,
                        admin_password, injected_files, requested_networks,
                        security_groups, block_device_mapping=None, legacy_bdm=True):
        # Calls build_and_run_instance on the ComputeAPI class; ComputeAPI is the compute RPC client
        self.compute_rpcapi.build_and_run_instance(context,
                instance=instance, host=host['host'], image=image,
                request_spec=request_spec,
                filter_properties=local_filter_props,
                admin_password=admin_password,
                injected_files=injected_files,
                requested_networks=requested_networks,
                security_groups=security_groups,
                block_device_mapping=bdms, node=host['nodename'],
                limits=host['limits'])

    self.compute_rpcapi = compute_rpcapi.ComputeAPI()

    class ComputeAPI(object):
        """Client side of the compute rpc API."""

=>

    def build_and_run_instance(self, ctxt, instance, host, image, request_spec,
                               filter_properties, admin_password=None, injected_files=None,
                               requested_networks=None, security_groups=None,
                               block_device_mapping=None, node=None, limits=None):
        version = '4.0'
        cctxt = self.client.prepare(server=host, version=version)
        # Calls the cast method: send the request without waiting for a response
        cctxt.cast(ctxt, 'build_and_run_instance', instance=instance,
                   image=image, request_spec=request_spec,
                   filter_properties=filter_properties,
                   admin_password=admin_password,
                   injected_files=injected_files,
                   requested_networks=requested_networks,
                   security_groups=security_groups,
                   block_device_mapping=block_device_mapping, node=node,
                   limits=limits)

=>

    def prepare(self, exchange=_marker, topic=_marker, namespace=_marker,
                version=_marker, server=_marker, fanout=_marker,
                timeout=_marker, version_cap=_marker, retry=_marker):
        """Prepare a method invocation context.

        Use this method to override client properties for an individual method
        invocation."""
        return self._prepare(self,
                             exchange, topic, namespace,
                             version, server, fanout,
                             timeout, version_cap, retry)

    def _prepare(cls, base,
                 exchange=_marker, topic=_marker, namespace=_marker,
                 version=_marker, server=_marker, fanout=_marker,
                 timeout=_marker, version_cap=_marker, retry=_marker):
        """Prepare a method invocation context. See RPCClient.prepare()."""
        return _CallContext(base.transport, target,
                            base.serializer,
                            timeout, version_cap, retry)

    # A context class used for making the invocation
    class _CallContext(object):

        _marker = object()

        def __init__(self, transport, target, serializer,
                     timeout=None, version_cap=None, retry=None):
            self.conf = transport.conf

            self.transport = transport
            self.target = target
            self.serializer = serializer
            self.timeout = timeout
            self.retry = retry
            self.version_cap = version_cap

            super(_CallContext, self).__init__()

=>

    # Its cast method is called
    def cast(self, ctxt, method, **kwargs):
        """Invoke a method and return immediately. See RPCClient.cast()."""
        msg = self._make_message(ctxt, method, kwargs)
        ctxt = self.serializer.serialize_context(ctxt)

        if self.version_cap:
            self._check_version_cap(msg.get('version'))
        try:
            self.transport._send(self.target, ctxt, msg, retry=self.retry)
        # (the except clause is not shown in this excerpt)

=>

    # Build the message
    def _make_message(self, ctxt, method, args):
        msg = dict(method=method)

        msg['args'] = dict()
        for argname, arg in six.iteritems(args):
            msg['args'][argname] = self.serializer.serialize_entity(ctxt, arg)

        if self.target.namespace is not None:
            msg['namespace'] = self.target.namespace
        if self.target.version is not None:
            msg['version'] = self.target.version

        return msg
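To see what such a message looks like on the wire, the same logic can be reproduced outside oslo.messaging. The sketch below is a standalone re-creation for illustration only: `IdentitySerializer` and `make_message` are hypothetical names, the serializer simply passes values through, and the argument values are made up.

```python
class IdentitySerializer:
    """Hypothetical serializer that returns every value unchanged."""

    def serialize_entity(self, ctxt, entity):
        return entity


def make_message(method, args, namespace=None, version=None,
                 serializer=None, ctxt=None):
    """Build the RPC payload: method name, serialized args, optional metadata."""
    serializer = serializer or IdentitySerializer()
    msg = {"method": method, "args": {}}
    for argname, arg in args.items():
        msg["args"][argname] = serializer.serialize_entity(ctxt, arg)
    # namespace and version are included only when the target defines them
    if namespace is not None:
        msg["namespace"] = namespace
    if version is not None:
        msg["version"] = version
    return msg


example = make_message("build_and_run_instance",
                       {"instance": "vm-1", "node": "node-1"},
                       version="4.0")

if __name__ == "__main__":
    print(example)
```

The payload is therefore a plain dictionary naming the remote method and its keyword arguments, which is what the server-side dispatcher later inspects.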

=>

    # A class provided by the oslo_messaging library; in practice it fronts an AMQP implementation such as RabbitMQ
    class Transport(object):
        """A messaging transport.

        This is a mostly opaque handle for an underlying messaging transport
        driver.

        It has a single 'conf' property which is the cfg.ConfigOpts instance used
        to construct the transport object.
        """

        # The send function; underneath it calls the driver's send function,
        # and the driver is backed by an implementation library such as kombu
        def _send(self, target, ctxt, message, wait_for_reply=None, timeout=None,
                  retry=None):
            if not target.topic:
                raise exceptions.InvalidTarget('A topic is required to send',
                                               target)
            return self._driver.send(target, ctxt, message,
                                     wait_for_reply=wait_for_reply,
                                     timeout=timeout, retry=retry)

=>

...

    # Server side
    # Start the service
    server = service.Service.create(binary='nova-scheduler',
                                    topic=CONF.scheduler_topic)

    # Calls create on the Service class
    def create(cls, host=None, binary=None, topic=None, manager=None,
               report_interval=None, periodic_enable=None,
               periodic_fuzzy_delay=None, periodic_interval_max=None,
               db_allowed=True):
        """Instantiates class and passes back application object."""
        # Runs the constructor of this class
        service_obj = cls(host, binary, topic, manager,
                          report_interval=report_interval,
                          periodic_enable=periodic_enable,
                          periodic_fuzzy_delay=periodic_fuzzy_delay,
                          periodic_interval_max=periodic_interval_max,
                          db_allowed=db_allowed)

        return service_obj

    # service.start
    def start(self):
        target = messaging.Target(topic=self.topic, server=self.host)
        # The endpoints hold the methods that can be invoked remotely
        endpoints = [
            self.manager,
            baserpc.BaseRPCAPI(self.manager.service_name, self.backdoor_port)
        ]
        endpoints.extend(self.manager.additional_endpoints)

        serializer = objects_base.NovaObjectSerializer()

        self.rpcserver = rpc.get_server(target, endpoints, serializer)
        self.rpcserver.start()

    # Obtain the RPC server
    def get_server(target, endpoints, serializer=None):
        assert TRANSPORT is not None
        serializer = RequestContextSerializer(serializer)
        return messaging.get_rpc_server(TRANSPORT,
                                        target,
                                        endpoints,
                                        executor='eventlet',
                                        serializer=serializer)

    def get_rpc_server(transport, target, endpoints,
                       executor='blocking', serializer=None):
        """Construct an RPC server.

        The executor parameter controls how incoming messages will be received and
        dispatched. By default, the most simple executor is used - the blocking
        executor."""
        dispatcher = rpc_dispatcher.RPCDispatcher(target, endpoints, serializer)
        return msg_server.MessageHandlingServer(transport, dispatcher, executor)

    # The dispatcher
    class RPCDispatcher(dispatcher.DispatcherBase):
        """A message dispatcher which understands RPC messages.

        A MessageHandlingServer is constructed by passing a callable dispatcher
        which is invoked with context and message dictionaries each time a message
        is received.

        RPCDispatcher is one such dispatcher which understands the format of RPC
        messages. The dispatcher looks at the namespace, version and method values
        in the message and matches those against a list of available endpoints.

        Endpoints may have a target attribute describing the namespace and version
        of the methods exposed by that object. All public methods on an endpoint
        object are remotely invokable by clients."""
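The core of that dispatch step, looking up the method named in the message on a list of endpoints, can be sketched as follows. This is a deliberately reduced illustration and not the oslo.messaging implementation: it ignores namespace and version matching, and `ToyDispatcher` and `ComputeEndpoint` are hypothetical names.

```python
class ToyDispatcher:
    """Find the method named in a message on one of the endpoints and call it."""

    def __init__(self, endpoints):
        self.endpoints = endpoints

    def dispatch(self, ctxt, message):
        method = message["method"]
        args = message.get("args", {})
        if method.startswith("_"):
            # Only public methods are treated as remotely invokable.
            raise AttributeError("private methods are not remotely invokable")
        for endpoint in self.endpoints:
            func = getattr(endpoint, method, None)
            if callable(func):
                return func(ctxt, **args)
        raise AttributeError("no endpoint exposes %r" % method)


class ComputeEndpoint:
    """Hypothetical endpoint exposing a single public method."""

    def build_and_run_instance(self, ctxt, instance):
        return "building %s" % instance


if __name__ == "__main__":
    dispatcher = ToyDispatcher([ComputeEndpoint()])
    message = {"method": "build_and_run_instance", "args": {"instance": "vm-1"}}
    print(dispatcher.dispatch({}, message))
```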

    # The message handling server
    class MessageHandlingServer(service.ServiceBase, _OrderedTaskRunner):
        """Server for handling messages.

        Connect a transport to a dispatcher that knows how to process the
        message using an executor that knows how the app wants to create
        new tasks."""

        def __init__(self, transport, dispatcher, executor='blocking'):
            """Construct a message handling server.

            The dispatcher parameter is a callable which is invoked with context
            and message dictionaries each time a message is received.

            The executor parameter controls how incoming messages will be received
            and dispatched. By default, the most simple executor is used - the
            blocking executor.

            :param transport: the messaging transport
            :type transport: Transport
            :param dispatcher: a callable which is invoked for each method
            :type dispatcher: callable
            :param executor: name of message executor - for example
                             'eventlet', 'blocking'
            :type executor: str
            """
            self.conf = transport.conf
            self.conf.register_opts(_pool_opts)

            self.transport = transport
            self.dispatcher = dispatcher
            self.executor_type = executor

            self.listener = None

            try:
                mgr = driver.DriverManager('oslo.messaging.executors',
                                           self.executor_type)
            except RuntimeError as ex:
                raise ExecutorLoadFailure(self.executor_type, ex)

            self._executor_cls = mgr.driver
            self._work_executor = None
            self._poll_executor = None

            self._started = False

            super(MessageHandlingServer, self).__init__()

    # server.start
    def start(self, override_pool_size=None):
        """Start handling incoming messages.

        This method causes the server to begin polling the transport for
        incoming messages and passing them to the dispatcher. Message
        processing will continue until the stop() method is called.

        The executor controls how the server integrates with the applications
        I/O handling strategy - it may choose to poll for messages in a new
        process, thread or co-operatively scheduled coroutine or simply by
        registering a callback with an event loop. Similarly, the executor may
        choose to dispatch messages in a new thread, coroutine or simply the
        current thread.
        """
        # Warn that restarting will be deprecated
        if self._started:
            LOG.warning(_LW('Restarting a MessageHandlingServer is inherently '
                            'racy. It is deprecated, and will become a noop '
                            'in a future release of oslo.messaging. If you '
                            'need to restart MessageHandlingServer you should '
                            'instantiate a new object.'))
        self._started = True

        try:
            self.listener = self.dispatcher._listen(self.transport)
        except driver_base.TransportDriverError as ex:
            raise ServerListenError(self.target, ex)

        executor_opts = {}

        if self.executor_type == "threading":
            executor_opts["max_workers"] = (
                override_pool_size or self.conf.executor_thread_pool_size
            )
        elif self.executor_type == "eventlet":
            eventletutils.warn_eventlet_not_patched(
                expected_patched_modules=['thread'],
                what="the 'oslo.messaging eventlet executor'")
            executor_opts["max_workers"] = (
                override_pool_size or self.conf.executor_thread_pool_size
            )

        self._work_executor = self._executor_cls(**executor_opts)
        self._poll_executor = self._executor_cls(**executor_opts)

        return lambda: self._poll_executor.submit(self._runner)

    @ordered(after='start')
    def stop(self):
        """Stop handling incoming messages.

        Once this method returns, no new incoming messages will be handled by
        the server. However, the server may still be in the process of handling
        some messages, and underlying driver resources associated to this
        server are still in use. See 'wait' for more details.
        """
        self.listener.stop()
        self._started = False

    # Wait for message processing to complete
    @ordered(after='stop')
    def wait(self):
        """Wait for message processing to complete.

        After calling stop(), there may still be some existing messages
        which have not been completely processed. The wait() method blocks
        until all message processing has completed.

        Once it's finished, the underlying driver resources associated to this
        server are released (like closing useless network connections).
        """
        self._poll_executor.shutdown(wait=True)
        self._work_executor.shutdown(wait=True)

        # Close listener connection after processing all messages
        self.listener.cleanup()

    # Runs message dispatching and handling
    def _runner(self):
        while self._started:
            incoming = self.listener.poll(
                timeout=self.dispatcher.batch_timeout,
                prefetch_size=self.dispatcher.batch_size)

            if incoming:
                self._submit_work(self.dispatcher(incoming))

        # listener is stopped but we need to process all already consumed
        # messages
        while True:
            incoming = self.listener.poll(
                timeout=self.dispatcher.batch_timeout,
                prefetch_size=self.dispatcher.batch_size)

            if incoming:
                self._submit_work(self.dispatcher(incoming))
            else:
                return
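The two-phase loop in `_runner`, polling while started and then draining whatever was already consumed, can be tried out in isolation. The class below is a simplified stand-in built on `queue.Queue` and a thread; `ToyServer` and its attributes are hypothetical, and it handles messages inline instead of submitting them to an executor.

```python
import queue
import threading


class ToyServer:
    """Poll-and-drain loop modelled on the structure of _runner."""

    def __init__(self):
        self.incoming = queue.Queue()  # stands in for the transport listener
        self.handled = []
        self._started = False
        self._thread = None

    def _poll(self, timeout=0.05):
        """Return the next message, or None if nothing arrives in time."""
        try:
            return self.incoming.get(timeout=timeout)
        except queue.Empty:
            return None

    def _runner(self):
        # Phase 1: keep polling for as long as the server is started.
        while self._started:
            msg = self._poll()
            if msg is not None:
                self.handled.append(msg)
        # Phase 2: stopped, but finish the messages that are already queued.
        while True:
            msg = self._poll()
            if msg is None:
                return
            self.handled.append(msg)

    def start(self):
        self._started = True
        self._thread = threading.Thread(target=self._runner)
        self._thread.start()

    def stop(self):
        self._started = False

    def wait(self):
        self._thread.join()


if __name__ == "__main__":
    server = ToyServer()
    server.start()
    for i in range(5):
        server.incoming.put(i)
    server.stop()
    server.wait()
    print(server.handled)
```

The second loop is what makes stop() followed by wait() safe: messages that were queued before the stop are still processed before the runner exits. In this toy version `None` is reserved to mean "nothing received", so it cannot be sent as a message.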