风控特征学习笔记

总体业务建模流程:

1、将业务抽象为分类or回归问题

2、定义标签,得到y

3、选取合适的样本,并匹配出全部的信息作为特征的来源

4、特征工程 + 模型训练 + 模型评价与调优(相互之间可能会有交互)

5、输出模型报告

6、上线与监控

什么是特征?

在机器学习的背景下,特征是用来解释现象发生的单个特性或一组特性。 当这些特性转换为某种可度量的形式时,它们被称为特征。

举个例子,假设你有一个学生列表,这个列表里包含每个学生的姓名、学习小时数、IQ和之前考试的总分数。现在,有一个新学生,你知道他/她的学习小时数和IQ,但他/她的考试分数缺失,你需要估算他/她可能获得的考试分数。

在这里,你需要用IQ和study_hours构建一个估算分数缺失值的预测模型。所以,IQ和study_hours就成了这个模型的特征。

特征工程可能包含的内容:

1、基础特征构造

2、数据预处理

3、特征衍生

4、特征变换

5、特征筛选

这是一个完整的特征工程流程,但不是唯一的流程,每个过程都有可能会交换顺序。

一、基础特征构造

""" 预览数据 """

import pandas as pd

import numpy as np

df_train = pd.read_csv('train.csv')

df_train.head(3)"""查看数据基本情况"""

df_train.shape

df_train.info()

df_train.describe()

"""可以画3D图对数据进行可视化,例子下面所示"""

from pyecharts import Bar3D

bar3d = Bar3D("2018年申请人数分布", width=1200, height=600)

x_axis = [

"12a", "1a", "2a", "3a", "4a", "5a", "6a", "7a", "8a", "9a", "10a", "11a",

"12p", "1p", "2p", "3p", "4p", "5p", "6p", "7p", "8p", "9p", "10p", "11p"

]

y_axis = [

"Saturday", "Friday", "Thursday", "Wednesday", "Tuesday", "Monday", "Sunday"

]

data = [

[0, 0, 5], [0, 1, 1], [0, 2, 0], [0, 3, 0], [0, 4, 0], [0, 5, 0],

[0, 6, 0], [0, 7, 0], [0, 8, 0], [0, 9, 0], [0, 10, 0], [0, 11, 2],

[0, 12, 4], [0, 13, 1], [0, 14, 1], [0, 15, 3], [0, 16, 4], [0, 17, 6],

[0, 18, 4], [0, 19, 4], [0, 20, 3], [0, 21, 3], [0, 22, 2], [0, 23, 5],

[1, 0, 7], [1, 1, 0], [1, 2, 0], [1, 3, 0], [1, 4, 0], [1, 5, 0],

[1, 6, 0], [1, 7, 0], [1, 8, 0], [1, 9, 0], [1, 10, 5], [1, 11, 2],

[1, 12, 2], [1, 13, 6], [1, 14, 9], [1, 15, 11], [1, 16, 6], [1, 17, 7],

[1, 18, 8], [1, 19, 12], [1, 20, 5], [1, 21, 5], [1, 22, 7], [1, 23, 2],

[2, 0, 1], [2, 1, 1], [2, 2, 0], [2, 3, 0], [2, 4, 0], [2, 5, 0],

[2, 6, 0], [2, 7, 0], [2, 8, 0], [2, 9, 0], [2, 10, 3], [2, 11, 2],

[2, 12, 1], [2, 13, 9], [2, 14, 8], [2, 15, 10], [2, 16, 6], [2, 17, 5],

[2, 18, 5], [2, 19, 5], [2, 20, 7], [2, 21, 4], [2, 22, 2], [2, 23, 4],

[3, 0, 7], [3, 1, 3], [3, 2, 0], [3, 3, 0], [3, 4, 0], [3, 5, 0],

[3, 6, 0], [3, 7, 0], [3, 8, 1], [3, 9, 0], [3, 10, 5], [3, 11, 4],

[3, 12, 7], [3, 13, 14], [3, 14, 13], [3, 15, 12], [3, 16, 9], [3, 17, 5],

[3, 18, 5], [3, 19, 10], [3, 20, 6], [3, 21, 4], [3, 22, 4], [3, 23, 1],

[4, 0, 1], [4, 1, 3], [4, 2, 0], [4, 3, 0], [4, 4, 0], [4, 5, 1],

[4, 6, 0], [4, 7, 0], [4, 8, 0], [4, 9, 2], [4, 10, 4], [4, 11, 4],

[4, 12, 2], [4, 13, 4], [4, 14, 4], [4, 15, 14], [4, 16, 12], [4, 17, 1],

[4, 18, 8], [4, 19, 5], [4, 20, 3], [4, 21, 7], [4, 22, 3], [4, 23, 0],

[5, 0, 2], [5, 1, 1], [5, 2, 0], [5, 3, 3], [5, 4, 0], [5, 5, 0],

[5, 6, 0], [5, 7, 0], [5, 8, 2], [5, 9, 0], [5, 10, 4], [5, 11, 1],

[5, 12, 5], [5, 13, 10], [5, 14, 5], [5, 15, 7], [5, 16, 11], [5, 17, 6],

[5, 18, 0], [5, 19, 5], [5, 20, 3], [5, 21, 4], [5, 22, 2], [5, 23, 0],

[6, 0, 1], [6, 1, 0], [6, 2, 0], [6, 3, 0], [6, 4, 0], [6, 5, 0],

[6, 6, 0], [6, 7, 0], [6, 8, 0], [6, 9, 0], [6, 10, 1], [6, 11, 0],

[6, 12, 2], [6, 13, 1], [6, 14, 3], [6, 15, 4], [6, 16, 0], [6, 17, 0],

[6, 18, 0], [6, 19, 0], [6, 20, 1], [6, 21, 2], [6, 22, 2], [6, 23, 6]

]

range_color = ['#313695', '#4575b4', '#74add1', '#abd9e9', '#e0f3f8', '#ffffbf',

'#fee090', '#fdae61', '#f46d43', '#d73027', '#a50026']

bar3d.add(

"",

x_axis,

y_axis,

[[d[1], d[0], d[2]] for d in data],

is_visualmap=True,

visual_range=[0, 20],

visual_range_color=range_color,

grid3d_width=200,

grid3d_depth=80,

is_grid3d_rotate=True, # 自动旋转

grid3d_rotate_speed=180, # 旋转速度

)

bar3d二、数据预处理

缺失值-主要用到的两个包:1、pandas fillna 2、sklearn Imputer

"""均值填充"""

df_train['Age'].fillna(value=df_train['Age'].mean()).sample(5)

""" 另一种均值填充的方式 """

from sklearn.preprocessing import Imputer

imp = Imputer(missing_values='NaN', strategy='mean', axis=0)

age = imp.fit_transform(df_train[['Age']].values).copy()

df_train.loc[:,'Age'] = df_train['Age'].fillna(value=df_train['Age'].mean()).copy()

df_train.head(5)

数值型 - 数值缩放

"""取对数等变换"""

import numpy as np

log_age = df_train['Age'].apply(lambda x:np.log(x))

df_train.loc[:,'log_age'] = log_age

df_train.head(5)

""" 幅度缩放,最大最小值缩放到[0,1]区间内 """

from sklearn.preprocessing import MinMaxScaler

mm_scaler = MinMaxScaler()

fare_trans = mm_scaler.fit_transform(df_train[['Fare']])

""" 幅度缩放,将每一列的数据标准化为正态分布 """

from sklearn.preprocessing import StandardScaler

std_scaler = StandardScaler()

fare_std_trans = std_scaler.fit_transform(df_train[['Fare']])

""" 中位数或者四分位数去中心化数据,对异常值不敏感 """

from sklearn.preprocessing import robust_scale

fare_robust_trans = robust_scale(df_train[['Fare','Age']])

""" 将同一行数据规范化,前面的同一变为1以内也可以达到这样的效果 """

from sklearn.preprocessing import Normalizer

normalizer = Normalizer()

fare_normal_trans = normalizer.fit_transform(df_train[['Age','Fare']])

fare_normal_trans

统计值

""" 最大最小值 """

max_age = df_train['Age'].max()

min_age = df_train["Age"].min()

""" 分位数,极值处理,我们最粗暴的方法就是将前后1%的值替换成前后两个端点的值 """

age_quarter_01 = df_train['Age'].quantile(0.01)

print(age_quarter_01)

age_quarter_99 = df_train['Age'].quantile(0.99)

print(age_quarter_99)

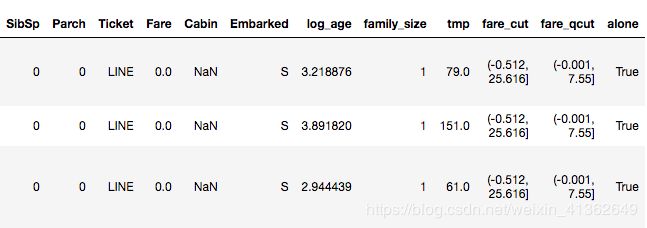

""" 四则运算 """

df_train.loc[:,'family_size'] = df_train['SibSp']+df_train['Parch']+1

df_train.head(2)

df_train.loc[:,'tmp'] = df_train['Age']*df_train['Pclass'] + 4*df_train['family_size']

df_train.head(2)

""" 多项式特征 """

from sklearn.preprocessing import PolynomialFeatures

poly = PolynomialFeatures(degree=2)

df_train[['SibSp','Parch']].head()

poly_fea = poly.fit_transform(df_train[['SibSp','Parch']])

pd.DataFrame(poly_fea,columns = poly.get_feature_names()).head()

""" 等距切分 """

df_train.loc[:, 'fare_cut'] = pd.cut(df_train['Fare'], 20)

df_train.head(2)

""" 等频切分 """

df_train.loc[:,'fare_qcut'] = pd.qcut(df_train['Fare'], 10)

df_train.head(2)

""" badrate 曲线 """

df_train = df_train.sort_values('Fare')

alist = list(set(df_train['fare_qcut']))

badrate = {}

for x in alist:

a = df_train[df_train.fare_qcut == x]

bad = a[a.label == 1]['label'].count()

good = a[a.label == 0]['label'].count()

badrate[x] = bad/(bad+good)

f = zip(badrate.keys(),badrate.values())

f = sorted(f,key = lambda x : x[1],reverse = True )

badrate = pd.DataFrame(f)

badrate.columns = pd.Series(['cut','badrate'])

badrate = badrate.sort_values('cut')

print(badrate.head())

badrate.plot('cut','badrate')

""" 一般采取等频分箱,很少等距分箱,等距分箱可能造成样本非常不均匀 """

""" 一般分5-6箱,保证badrate曲线从非严格递增转化为严格递增曲线 """

""" OneHot encoding/独热向量编码 """

""" 一般像男、女这种二分类categories类型的数据采取独热向量编码, 转化为0、1 主要用到 pd.get_dummies """

embarked_oht = pd.get_dummies(df_train[['Embarked']])

embarked_oht.head(2)

fare_qcut_oht = pd.get_dummies(df_train[['fare_qcut']])

fare_qcut_oht.head(2)

时间型 日期处理

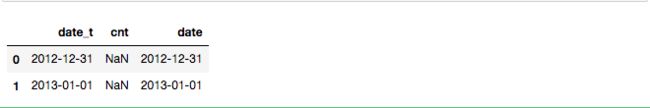

car_sales = pd.read_csv('car_data.csv')

car_sales.head(2)

car_sales.loc[:,'date'] = pd.to_datetime(car_sales['date_t'])

car_sales.head(2)

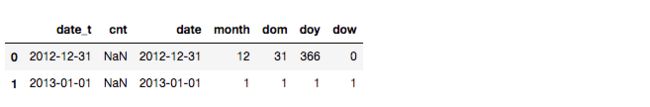

""" 取出关键时间信息 """

""" 月份 """

car_sales.loc[:,'month'] = car_sales['date'].dt.month

car_sales.head()

""" 几号 """

car_sales.loc[:,'dom'] = car_sales['date'].dt.day

""" 一年当中第几天 """

car_sales.loc[:,'doy'] = car_sales['date'].dt.dayofyear

""" 星期几 """

car_sales.loc[:,'dow'] = car_sales['date'].dt.dayofweek

car_sales.head(2)文本型数据

from pyecharts import WordCloud

name = [

'bupt', '金融', '涛涛', '实战', '人长得帅' ,

'机器学习', '深度学习', '异常检测', '知识图谱', '社交网络', '图算法',

'迁移学习', '不均衡学习', '瞪噔', '数据挖掘', '哈哈',

'集成算法', '模型融合','python', '聪明']

value = [

10000, 6181, 4386, 4055, 2467, 2244, 1898, 1484, 1112,

965, 847, 582, 555, 550, 462, 366, 360, 282, 273, 265]

wordcloud = WordCloud(width=800, height=600)

wordcloud.add("", name, value, word_size_range=[30, 80])""" 词袋模型 """

from sklearn.feature_extraction.text import CountVectorizer

vectorizer = CountVectorizer()

corpus = [

'This is a very good class',

'students are very very very good',

'This is the third sentence',

'Is this the last doc',

'PS teacher Mei is very very handsome'

]

X = vectorizer.fit_transform(corpus)

X.toarray() """ one-hot 编码"""vec = CountVectorizer(ngram_range=(1,3))

X_ngram = vec.fit_transform(corpus)

X_ngram.toarray()""" TF-IDF """

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf_vec = TfidfVectorizer()

tfidf_X = tfidf_vec.fit_transform(corpus)

tfidf_vec.get_feature_names()

tfidf_X.toarray()组合特征

""" 根据条件去判断获取组合特征 """

df_train.loc[:,'alone'] = (df_train['SibSp']==0)&(df_train['Parch']==0)

df_train.head(3)""" 词云图可以直观的反应哪些词作用权重比较大 """

from sklearn.feature_extraction.text import CountVectorizer

vectorizer = CountVectorizer()

corpus = [

'This is a very good class',

'students are very very very good',

'This is the third sentence',

'Is this the last doc',

'teacher Mei is very very handsome'

]

X = vectorizer.fit_transform(corpus)

L = []

for item in list(X.toarray()):

L.append(list(item))

value = [0 for i in range(len(L[0]))]

for i in range(len(L[0])):

for j in range(len(L)):

value[i] += L[j][i]

from pyecharts import WordCloud

wordcloud = WordCloud(width=800,height=500)

#这里是需要做的

wordcloud.add('',vectorizer.get_feature_names(),value,word_size_range=[20,100])

wordcloud

三、特征衍生

data = pd.read_excel('textdata.xlsx')

data.head()""" ft 和 gt 表示两个变量名 1-12 表示对应12个月中每个月的相应数值 """

""" 基于时间序列进行特征衍生 """

""" 最近p个月,inv>0的月份数 inv表示传入的变量名 """

def Num(data,inv,p):

df=data.loc[:,inv+'1':inv+str(p)]

auto_value=np.where(df>0,1,0).sum(axis=1)

return data,inv+'_num'+str(p),auto_value

data_new = data.copy()

for p in range(1,12):

for inv in ['ft','gt']:

data_new,columns_name,values=Num(data_new,inv,p)

data_new[columns_name]=values

# -*- coding:utf-8 -*-

'''

@Author : wangtao

@Time : 19/9/3 下午6:28

@desc : 构建时间序列衍生特征

'''

import numpy as np

import pandas as pd

class time_series_feature(object):

def __init__(self):

pass

def Num(self,data,inv,p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,inv大于0的月份个数

"""

df = data.loc[:,inv+'1':inv+str(p)]

auto_value = np.where(df > 0,1,0).sum(axis=1)

return inv+'_num'+str(p),auto_value

def Nmz(self,data,inv,p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,inv=0的月份个数

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = np.where(df == 0, 1, 0).sum(axis=1)

return inv + '_nmz' + str(p), auto_value

def Evr(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,inv>0的月份数是否>=1

"""

df = data.loc[:, inv + '1':inv + str(p)]

arr = np.where(df > 0, 1, 0).sum(axis=1)

auto_value = np.where(arr, 1, 0)

return inv + '_evr' + str(p), auto_value

def Avg(self,data,inv, p):

"""

:param p:

:return: 最近p个月,inv均值

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = np.nanmean(df, axis=1)

return inv + '_avg' + str(p), auto_value

def Tot(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,inv和

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = np.nansum(df, axis=1)

return inv + '_tot' + str(p), auto_value

def Tot2T(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近(2,p+1)个月,inv和 可以看出该变量的波动情况

"""

df = data.loc[:, inv + '2':inv + str(p + 1)]

auto_value = df.sum(1)

return inv + '_tot2t' + str(p), auto_value

def Max(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,inv最大值

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = np.nanmax(df, axis=1)

return inv + '_max' + str(p), auto_value

def Min(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,inv最小值

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = np.nanmin(df, axis=1)

return inv + '_min' + str(p), auto_value

def Msg(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,最近一次inv>0到现在的月份数

"""

df = data.loc[:, inv + '1':inv + str(p)]

df_value = np.where(df > 0, 1, 0)

auto_value = []

for i in range(len(df_value)):

row_value = df_value[i, :]

if row_value.max() <= 0:

indexs = '0'

auto_value.append(indexs)

else:

indexs = 1

for j in row_value:

if j > 0:

break

indexs += 1

auto_value.append(indexs)

return inv + '_msg' + str(p), auto_value

def Msz(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,最近一次inv=0到现在的月份数

"""

df = data.loc[:, inv + '1':inv + str(p)]

df_value = np.where(df == 0, 1, 0)

auto_value = []

for i in range(len(df_value)):

row_value = df_value[i, :]

if row_value.max() <= 0:

indexs = '0'

auto_value.append(indexs)

else:

indexs = 1

for j in row_value:

if j > 0:

break

indexs += 1

auto_value.append(indexs)

return inv + '_msz' + str(p), auto_value

def Cav(self,data,inv, p):

"""

:param p:

:return: 当月inv/(最近p个月inv的均值)

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = df[inv + '1'] / np.nanmean(df, axis=1)

return inv + '_cav' + str(p), auto_value

def Cmn(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 当月inv/(最近p个月inv的最小值)

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = df[inv + '1'] / np.nanmin(df, axis=1)

return inv + '_cmn' + str(p), auto_value

def Mai(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,每两个月间的inv的增长量的最大值

"""

arr = np.array(data.loc[:, inv + '1':inv + str(p)])

auto_value = []

for i in range(len(arr)):

df_value = arr[i, :]

value_lst = []

for k in range(len(df_value) - 1):

minus = df_value[k] - df_value[k + 1]

value_lst.append(minus)

auto_value.append(np.nanmax(value_lst))

return inv + '_mai' + str(p), auto_value

def Mad(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,每两个月间的inv的减少量的最大值

"""

arr = np.array(data.loc[:, inv + '1':inv + str(p)])

auto_value = []

for i in range(len(arr)):

df_value = arr[i, :]

value_lst = []

for k in range(len(df_value) - 1):

minus = df_value[k + 1] - df_value[k]

value_lst.append(minus)

auto_value.append(np.nanmax(value_lst))

return inv + '_mad' + str(p), auto_value

def Std(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,inv的标准差

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = np.nanvar(df, axis=1)

return inv + '_std' + str(p), auto_value

def Cva(self,data,inv, p):

"""

:param p:

:return: 最近p个月,inv的变异系数

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = np.nanmean(df, axis=1) / np.nanvar(df, axis=1)

return inv + '_cva' + str(p), auto_value

def Cmm(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: (当月inv) - (最近p个月inv的均值)

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = df[inv + '1'] - np.nanmean(df, axis=1)

return inv + '_cmm' + str(p), auto_value

def Cnm(self,data,inv, p):

"""

:param p:

:return: (当月inv) - (最近p个月inv的最小值)

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = df[inv + '1'] - np.nanmin(df, axis=1)

return inv + '_cnm' + str(p), auto_value

def Cxm(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: (当月inv) - (最近p个月inv的最大值)

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = df[inv + '1'] - np.nanmax(df, axis=1)

return inv + '_cxm' + str(p), auto_value

def Cxp(self,data,inv, p):

"""

:param p:

:return: ( (当月inv) - (最近p个月inv的最大值) ) / (最近p个月inv的最大值) )

"""

df = data.loc[:, inv + '1':inv + str(p)]

temp = np.nanmin(df, axis=1)

auto_value = (df[inv + '1'] - temp) / temp

return inv + '_cxp' + str(p), auto_value

def Ran(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月,inv的极差

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = np.nanmax(df, axis=1) - np.nanmin(df, axis=1)

return inv + '_ran' + str(p), auto_value

def Nci(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近min( Time on book,p )个月中,后一个月相比于前一个月增长了的月份数

"""

arr = np.array(data.loc[:, inv + '1':inv + str(p)])

auto_value = []

for i in range(len(arr)):

df_value = arr[i, :]

value_lst = []

for k in range(len(df_value) - 1):

minus = df_value[k] - df_value[k + 1]

value_lst.append(minus)

value_ng = np.where(np.array(value_lst) > 0, 1, 0).sum()

auto_value.append(np.nanmax(value_ng))

return inv + '_nci' + str(p), auto_value

def Ncd(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近min( Time on book,p )个月中,后一个月相比于前一个月减少了的月份数

"""

arr = np.array(data.loc[:, inv + '1':inv + str(p)])

auto_value = []

for i in range(len(arr)):

df_value = arr[i, :]

value_lst = []

for k in range(len(df_value) - 1):

minus = df_value[k] - df_value[k + 1]

value_lst.append(minus)

value_ng = np.where(np.array(value_lst) < 0, 1, 0).sum()

auto_value.append(np.nanmax(value_ng))

return inv + '_ncd' + str(p), auto_value

def Ncn(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近min( Time on book,p )个月中,相邻月份inv 相等的月份数

"""

arr = np.array(data.loc[:, inv + '1':inv + str(p)])

auto_value = []

for i in range(len(arr)):

df_value = arr[i, :]

value_lst = []

for k in range(len(df_value) - 1):

minus = df_value[k] - df_value[k + 1]

value_lst.append(minus)

value_ng = np.where(np.array(value_lst) == 0, 1, 0).sum()

auto_value.append(np.nanmax(value_ng))

return inv + '_ncn' + str(p), auto_value

def Bup(self,data,inv, p):

"""

:param p:

:return:

desc:If 最近min( Time on book,p )个月中,对任意月份i ,都有 inv[i] > inv[i+1] 即严格递增,且inv > 0则flag = 1 Else flag = 0

"""

arr = np.array(data.loc[:, inv + '1':inv + str(p)])

auto_value = []

for i in range(len(arr)):

df_value = arr[i, :]

index = 0

for k in range(len(df_value) - 1):

if df_value[k] > df_value[k + 1]:

break

index = + 1

if index == p:

value = 1

else:

value = 0

auto_value.append(value)

return inv + '_bup' + str(p), auto_value

def Pdn(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return:

desc: If 最近min( Time on book,p )个月中,对任意月份i ,都有 inv[i] < inv[i+1] ,即严格递减,且inv > 0则flag = 1 Else flag = 0

"""

arr = np.array(data.loc[:, inv + '1':inv + str(p)])

auto_value = []

for i in range(len(arr)):

df_value = arr[i, :]

index = 0

for k in range(len(df_value) - 1):

if df_value[k + 1] > df_value[k]:

break

index = + 1

if index == p:

value = 1

else:

value = 0

auto_value.append(value)

return inv + '_pdn' + str(p), auto_value

def Trm(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近min( Time on book,p )个月,inv的修建均值

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = []

for i in range(len(df)):

trm_mean = list(df.loc[i, :])

trm_mean.remove(np.nanmax(trm_mean))

trm_mean.remove(np.nanmin(trm_mean))

temp = np.nanmean(trm_mean)

auto_value.append(temp)

return inv + '_trm' + str(p), auto_value

def Cmx(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 当月inv / 最近p个月的inv中的最大值

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = (df[inv + '1'] - np.nanmax(df, axis=1)) / np.nanmax(df, axis=1)

return inv + '_cmx' + str(p), auto_value

def Cmp(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: ( 当月inv - 最近p个月的inv均值 ) / inv均值

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = (df[inv + '1'] - np.nanmean(df, axis=1)) / np.nanmean(df, axis=1)

return inv + '_cmp' + str(p), auto_value

def Cnp(self,data,inv, p):

"""

:param p:

:return: ( 当月inv - 最近p个月的inv最小值 ) /inv最小值

"""

df = data.loc[:, inv + '1':inv + str(p)]

auto_value = (df[inv + '1'] - np.nanmin(df, axis=1)) / np.nanmin(df, axis=1)

return inv + '_cnp' + str(p), auto_value

def Msx(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近min( Time on book,p )个月取最大值的月份距现在的月份数

"""

df = data.loc[:, inv + '1':inv + str(p)]

df['_max'] = np.nanmax(df, axis=1)

for i in range(1, p + 1):

df[inv + str(i)] = list(df[inv + str(i)] == df['_max'])

del df['_max']

df_value = np.where(df == True, 1, 0)

auto_value = []

for i in range(len(df_value)):

row_value = df_value[i, :]

indexs = 1

for j in row_value:

if j == 1:

break

indexs += 1

auto_value.append(indexs)

return inv + '_msx' + str(p), auto_value

def Rpp(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 近p个月的均值/((p,2p)个月的inv均值)

"""

df1 = data.loc[:, inv + '1':inv + str(p)]

value1 = np.nanmean(df1, axis=1)

df2 = data.loc[:, inv + str(p):inv + str(2 * p)]

value2 = np.nanmean(df2, axis=1)

auto_value = value1 / value2

return inv + '_rpp' + str(p), auto_value

def Dpp(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: 最近p个月的均值 - ((p,2p)个月的inv均值)

"""

df1 = data.loc[:, inv + '1':inv + str(p)]

value1 = np.nanmean(df1, axis=1)

df2 = data.loc[:, inv + str(p):inv + str(2 * p)]

value2 = np.nanmean(df2, axis=1)

auto_value = value1 - value2

return inv + '_dpp' + str(p), auto_value

def Mpp(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: (最近p个月的inv最大值)/ (最近(p,2p)个月的inv最大值)

"""

df1 = data.loc[:, inv + '1':inv + str(p)]

value1 = np.nanmax(df1, axis=1)

df2 = data.loc[:, inv + str(p):inv + str(2 * p)]

value2 = np.nanmax(df2, axis=1)

auto_value = value1 / value2

return inv + '_mpp' + str(p), auto_value

def Npp(self,data,inv, p):

"""

:param data:

:param inv:

:param p:

:return: (最近p个月的inv最小值)/ (最近(p,2p)个月的inv最小值)

"""

df1 = data.loc[:, inv + '1':inv + str(p)]

value1 = np.nanmin(df1, axis=1)

df2 = data.loc[:, inv + str(p):inv + str(2 * p)]

value2 = np.nanmin(df2, axis=1)

auto_value = value1 / value2

return inv + '_npp' + str(p), auto_value

def auto_var(self,data_new,inv,p):

"""

:param data:

:param inv:

:param p:

:return: 批量调用双参数函数

"""

try:

columns_name, values = self.Num(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Nmz(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Evr(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Avg(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Tot(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Tot2T(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Max(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Max(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Min(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Msg(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Msz(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Cav(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Cmn(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Std(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Cva(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Cmm(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Cnm(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Cxm(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Cxp(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Ran(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Nci(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Ncd(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Ncn(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Pdn(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Cmx(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Cmp(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Cnp(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Msx(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Nci(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Trm(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Bup(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Mai(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Mad(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Rpp(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Dpp(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Mpp(data_new,inv, p)

data_new[columns_name] = values

columns_name, values = self.Npp(data_new,inv, p)

data_new[columns_name] = values

except:

pass

return data_new

if __name__ == "__main__":

file_dir = ""

file_name = "textdata.xlsx"

data_ = pd.read_excel(file_dir + file_name)

auto_var2 = time_series_feature()

for p in range(1,12):

for inv in ['ft','gt']:

data_ = auto_var2.auto_var(data_,inv,p)四、特征筛选

常用特征选择三种方法:

1、Filter

移除低方差的特征 (Removing features with low variance)

单变量特征选择 (Univariate feature selection)

2、Wrapper

递归特征消除 (Recursive Feature Elimination)

3、Embedded

使用SelectFromModel选择特征 (Feature selection using SelectFromModel)

将特征选择过程融入pipeline (Feature selection as part of a pipeline)

当数据预处理完成后,我们需要选择有意义的特征输入机器学习的算法和模型进行训练。

通常来说,从两个方面考虑来选择特征:

1、特征是否发散

如果一个特征不发散,例如方差接近于0,也就是说样本在这个特征上基本上没有差异,这个特征对于样本的区分并没有什么用。

2、特征与目标的相关性

这点比较显见,与目标相关性高的特征,应当优选选择。除移除低方差法外,可从相关性考虑

根据特征选择的形式又可以将特征选择方法分为3种:

Filter:过滤法,按照发散性或者相关性对各个特征进行评分,设定阈值或者待选择阈值的个数,选择特征。

Wrapper:包装法,根据目标函数(通常是预测效果评分),每次选择若干特征,或者排除若干特征。

Embedded:嵌入法,先使用某些机器学习的算法和模型进行训练,得到各个特征的权值系数,根据系数从大到小选择特征。类似于Filter方法,但是是通过训练来确定特征的优劣。

特征选择主要有两个目的:

减少特征数量、降维,使模型泛化能力更强,减少过拟合;

增强对特征和特征值之间的理解。

拿到数据集,一个特征选择方法,往往很难同时完成这两个目的

Filter

1)移除低方差的特征 (Removing features with low variance)

假设某特征的特征值只有0和1,并且在所有输入样本中,95%的实例的该特征取值都是1,那就可以认为这个特征作用不大。

如果100%都是1,那这个特征就没意义了。当特征值都是离散型变量的时候这种方法才能用,如果是连续型变量,就需要将连续变量离散化之后才能用。

而且实际当中,一般不太会有95%以上都取某个值的特征存在,所以这种方法虽然简单但是不太好用。可以把它作为特征选择的预处理,

先去掉那些取值变化小的特征,然后再从接下来提到的的特征选择方法中选择合适的进行进一步的特征选择。

from sklearn.feature_selection import VarianceThreshold

X = [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 1], [0, 1, 0], [0, 1, 1]]

sel = VarianceThreshold(threshold=(.8 * (1 - .8)))

sel.fit_transform(X)2)单变量特征选择 (Univariate feature selection)

单变量特征选择的原理是分别单独的计算每个变量的某个统计指标,根据该指标来判断哪些变量重要,剔除那些不重要的变量。

对于分类问题(y离散),可采用:

卡方检验

f_classif

mutual_info_classif

互信息

对于回归问题(y连续),可采用:

皮尔森相关系数

f_regression,

mutual_info_regression

最大信息系数

这种方法比较简单,易于运行,易于理解,通常对于理解数据有较好的效果(但对特征优化、提高泛化能力来说不一定有效)。

SelectKBest 移除得分前 k 名以外的所有特征(取top k)

SelectPercentile 移除得分在用户指定百分比以后的特征(取top k%)

对每个特征使用通用的单变量统计检验: 假正率(false positive rate) SelectFpr, 伪发现率(false discovery rate) SelectFdr, 或族系误差率 SelectFwe.

GenericUnivariateSelect 可以设置不同的策略来进行单变量特征选择。同时不同的选择策略也能够使用超参数寻优,从而让我们找到最佳的单变量特征选择策略。

Notice:

The methods based on F-test estimate the degree of linear dependency between two random variables.

(F检验用于评估两个随机变量的线性相关性)

On the other hand, mutual information methods can capture any kind of statistical dependency, but being nonparametric, they require more samples for accurate estimation.

(另一方面,互信息的方法可以捕获任何类型的统计依赖关系,但是作为一个非参数方法,估计准确需要更多的样本)

卡方(Chi2)检验

经典的卡方检验是检验定性自变量对定性因变量的相关性。

比如,我们可以对样本进行一次chi2 测试来选择最佳的两项特征:

from sklearn.datasets import load_iris

from sklearn.feature_selection import SelectKBest

from sklearn.feature_selection import chi2

iris = load_iris()

X, y = iris.data, iris.target

print(X.shape)

X_new = SelectKBest(chi2, k=2).fit_transform(X, y)

print(X_new.shape)Pearson相关系数 (Pearson Correlation)

皮尔森相关系数是一种最简单的,能帮助理解特征和响应变量之间关系的方法,

该方法衡量的是变量之间的线性相关性,结果的取值区间为[-1,1],-1表示完全的负相关,+1表示完全的正相关,0表示没有线性相关

import numpy as np

from scipy.stats import pearsonr

np.random.seed(0)

size = 300

x = np.random.normal(0, 1, size)

""" pearsonr(x, y)的输入为特征矩阵和目标向量,能够同时计算 相关系数 和p-value. """

print("Lower noise", pearsonr(x, x + np.random.normal(0, 1, size)))

print("Higher noise", pearsonr(x, x + np.random.normal(0, 10, size)))

""" 比较了变量在加入噪音之前和之后的差异。当噪音比较小的时候,相关性很强,p-value很低 """

""" 使用Pearson相关系数主要是为了看特征之间的相关性,而不是和因变量之间的。 """Wrapper

递归特征消除 (Recursive Feature Elimination)

递归消除特征法使用一个基模型来进行多轮训练,每轮训练后,移除若干权值系数的特征,再基于新的特征集进行下一轮训练。

对特征含有权重的预测模型(例如,线性模型对应参数coefficients),RFE通过递归减少考察的特征集规模来选择特征。

首先,预测模型在原始特征上训练,每个特征指定一个权重。之后,那些拥有最小绝对值权重的特征被踢出特征集。如此往复递归,直至剩余的特征数量达到所需的特征数量。

RFECV 通过交叉验证的方式执行RFE,以此来选择最佳数量的特征:对于一个数量为d的feature的集合,他的所有的子集的个数是2的d次方减1(包含空集)。

指定一个外部的学习算法,比如SVM之类的。通过该算法计算所有子集的validation error。选择error最小的那个子集作为所挑选的特征。

from sklearn.feature_selection import RFE

from sklearn.ensemble import RandomForestClassifier

from sklearn.datasets import load_iris

rf = RandomForestClassifier()

iris=load_iris()

X,y=iris.data,iris.target

rfe = RFE(estimator=rf, n_features_to_select=3)

X_rfe = rfe.fit_transform(X,y)

X_rfe.shapeEmbedded

使用SelectFromModel选择特征 (Feature selection using SelectFromModel)

基于L1的特征选择 (L1-based feature selection)

使用L1范数作为惩罚项的线性模型(Linear models)会得到稀疏解:大部分特征对应的系数为0。

当你希望减少特征的维度以用于其它分类器时,可以通过 feature_selection.SelectFromModel 来选择不为0的系数。

特别指出,常用于此目的的稀疏预测模型有 linear_model.Lasso(回归), linear_model.LogisticRegression 和 svm.LinearSVC(分类)

from sklearn.feature_selection import SelectFromModel

from sklearn.svm import LinearSVC

lsvc = LinearSVC(C=0.01, penalty="l1", dual=False).fit(X,y)

model = SelectFromModel(lsvc, prefit=True)

X_embed = model.transform(X)

X_embed.shape首先来回顾一下我们在业务中的模型会遇到什么问题。

1、模型效果不好:大概率数据有问题

2、训练集效果好,跨时间测试(一般测试样本是训练数据的1/10)效果不好:

测试数据分布与训练数据不太一样导致的,说明选入特征变量有问题波动比较大,查看分析比较波动的特征变量

3、跨时间测试效果也好,上线之后效果不好:线下和线上和变量的逻辑出了问题,线下特征信息可能包含未来变量

4、上线之后效果还好,几周之后分数分布开始下滑:说明模型效果不行,说明一两个变量在跨时间上效果比较差

5、一两个月内都比较稳定,突然分数分布骤降:可能是外部因素,如运营部门一些操作或国家政策导致

6、没有明显问题,但模型每个月逐步失效:

然后我们来考虑一下业务所需要的变量是什么。

变量必须对模型有贡献,也就是说必须能对客群加以区分

逻辑回归要求变量之间线性无关

逻辑回归评分卡也希望变量呈现单调趋势

(有一部分也是业务原因,但从模型角度来看,单调变量未必一定比有转折的变量好)

客群在每个变量上的分布稳定,分布迁移无可避免,但不能波动太大

为此我们从上述方法中找到最贴合当前使用场景的几种方法。

from statsmodels.stats.outliers_influence import variance_inflation_factor

import numpy as np

data = [[1,2,3,4,5],

[2,4,6,8,9],

[1,1,1,1,1],

[2,4,6,4,7]]

X = np.array(data).T

variance_inflation_factor(X,0)3)单调性

- bivar图

""" 等频切分 """

df_train.loc[:,'fare_qcut'] = pd.qcut(df_train['Fare'], 10)

df_train.head()

df_train = df_train.sort_values('Fare')

alist = list(set(df_train['fare_qcut']))

badrate = {}

for x in alist:

a = df_train[df_train.fare_qcut == x]

bad = a[a.label == 1]['label'].count()

good = a[a.label == 0]['label'].count()

badrate[x] = bad/(bad+good)

f = zip(badrate.keys(),badrate.values())

f = sorted(f,key = lambda x : x[1],reverse = True )

badrate = pd.DataFrame(f)

badrate.columns = pd.Series(['cut','badrate'])

badrate = badrate.sort_values('cut')

print(badrate)

badrate.plot('cut','badrate')def var_PSI(dev_data, val_data):

dev_cnt, val_cnt = sum(dev_data), sum(val_data)

if dev_cnt * val_cnt == 0:

return None

PSI = 0

for i in range(len(dev_data)):

dev_ratio = dev_data[i] / dev_cnt

val_ratio = val_data[i] / val_cnt + 1e-10

psi = (dev_ratio - val_ratio) * math.log(dev_ratio/val_ratio)

PSI += psi

return PSI

注意分箱的数量将会影响着变量的PSI值。

PSI并不只可以对模型来求,对变量来求也一样。只需要对跨时间分箱的数据分别求PSI即可。

import pandas as pd

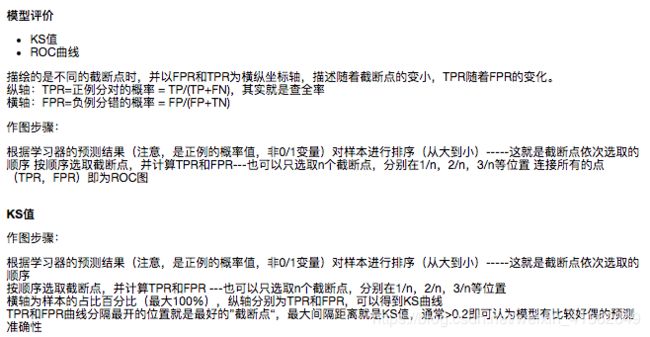

from sklearn.metrics import roc_auc_score,roc_curve,auc

from sklearn.model_selection import train_test_split

from sklearn import metrics

from sklearn.linear_model import LogisticRegression

import numpy as np

import random

import math

data = pd.read_csv(file_dir + 'data.txt')

data.head()""" 看一下月份分布,我们用最后一个月做为跨时间验证集合 """

data.obs_mth.unique()

train = data[data.obs_mth != '2018-11-30'].reset_index().copy()

val = data[data.obs_mth == '2018-11-30'].reset_index().copy()

feature_lst = ['person_info','finance_info','credit_info','act_info','td_score','jxl_score','mj_score','rh_score']

x = train[feature_lst]

y = train['bad_ind']

val_x = val[feature_lst]

val_y = val['bad_ind']

lr_model = LogisticRegression(C=0.1)

lr_model.fit(x,y)

y_pred = lr_model.predict_proba(x)[:,1]

fpr_lr_train,tpr_lr_train,_ = roc_curve(y,y_pred)

train_ks = abs(fpr_lr_train - tpr_lr_train).max()

print('train_ks : ',train_ks)

y_pred = lr_model.predict_proba(val_x)[:,1]

fpr_lr,tpr_lr,_ = roc_curve(val_y,y_pred)

val_ks = abs(fpr_lr - tpr_lr).max()

print('val_ks : ',val_ks)

from matplotlib import pyplot as plt

plt.plot(fpr_lr_train,tpr_lr_train,label = 'train LR')

plt.plot(fpr_lr,tpr_lr,label = 'evl LR')

plt.plot([0,1],[0,1],'k--')

plt.xlabel('False positive rate')

plt.ylabel('True positive rate')

plt.title('ROC Curve')

plt.legend(loc = 'best')

plt.show()""" 做特征筛选 """

from statsmodels.stats.outliers_influence import variance_inflation_factor

X = np.array(x)

for i in range(X.shape[1]):

print(variance_inflation_factor(X,i))import lightgbm as lgb

from sklearn.model_selection import train_test_split

train_x,test_x,train_y,test_y = train_test_split(x,y,random_state=0,test_size=0.2)

def lgb_test(train_x,train_y,test_x,test_y):

clf =lgb.LGBMClassifier(boosting_type = 'gbdt',

objective = 'binary',

metric = 'auc',

learning_rate = 0.1,

n_estimators = 24,

max_depth = 5,

num_leaves = 20,

max_bin = 45,

min_data_in_leaf = 6,

bagging_fraction = 0.6,

bagging_freq = 0,

feature_fraction = 0.8,

)

clf.fit(train_x,train_y,eval_set = [(train_x,train_y),(test_x,test_y)],eval_metric = 'auc')

return clf,clf.best_score_['valid_1']['auc'],

lgb_model , lgb_auc = lgb_test(train_x,train_y,test_x,test_y)

feature_importance = pd.DataFrame({'name':lgb_model.booster_.feature_name(),

'importance':lgb_model.feature_importances_}).sort_values(by=['importance'],ascending=False)

feature_importance

feature_lst = ['person_info','finance_info','credit_info','act_info']

x = train[feature_lst]

y = train['bad_ind']

val_x = val[feature_lst]

val_y = val['bad_ind']

lr_model = LogisticRegression(C=0.1,class_weight='balanced')

lr_model.fit(x,y)

y_pred = lr_model.predict_proba(x)[:,1]

fpr_lr_train,tpr_lr_train,_ = roc_curve(y,y_pred)

train_ks = abs(fpr_lr_train - tpr_lr_train).max()

print('train_ks : ',train_ks)

y_pred = lr_model.predict_proba(val_x)[:,1]

fpr_lr,tpr_lr,_ = roc_curve(val_y,y_pred)

val_ks = abs(fpr_lr - tpr_lr).max()

print('val_ks : ',val_ks)

from matplotlib import pyplot as plt

plt.plot(fpr_lr_train,tpr_lr_train,label = 'train LR')

plt.plot(fpr_lr,tpr_lr,label = 'evl LR')

plt.plot([0,1],[0,1],'k--')

plt.xlabel('False positive rate')

plt.ylabel('True positive rate')

plt.title('ROC Curve')

plt.legend(loc = 'best')

plt.show()

# 系数

print('变量名单:',feature_lst)

print('系数:',lr_model.coef_)

print('截距:',lr_model.intercept_)

"""报告"""

model = lr_model

row_num, col_num = 0, 0

bins = 20

Y_predict = [s[1] for s in model.predict_proba(val_x)]

Y = val_y

nrows = Y.shape[0]

lis = [(Y_predict[i], Y[i]) for i in range(nrows)]

ks_lis = sorted(lis, key=lambda x: x[0], reverse=True)

bin_num = int(nrows/bins+1)

bad = sum([1 for (p, y) in ks_lis if y > 0.5])

good = sum([1 for (p, y) in ks_lis if y <= 0.5])

bad_cnt, good_cnt = 0, 0

KS = []

BAD = []

GOOD = []

BAD_CNT = []

GOOD_CNT = []

BAD_PCTG = []

BADRATE = []

dct_report = {}

for j in range(bins):

ds = ks_lis[j*bin_num: min((j+1)*bin_num, nrows)]

bad1 = sum([1 for (p, y) in ds if y > 0.5])

good1 = sum([1 for (p, y) in ds if y <= 0.5])

bad_cnt += bad1

good_cnt += good1

bad_pctg = round(bad_cnt/sum(val_y),3)

badrate = round(bad1/(bad1+good1),3)

ks = round(math.fabs((bad_cnt / bad) - (good_cnt / good)),3)

KS.append(ks)

BAD.append(bad1)

GOOD.append(good1)

BAD_CNT.append(bad_cnt)

GOOD_CNT.append(good_cnt)

BAD_PCTG.append(bad_pctg)

BADRATE.append(badrate)

dct_report['KS'] = KS

dct_report['BAD'] = BAD

dct_report['GOOD'] = GOOD

dct_report['BAD_CNT'] = BAD_CNT

dct_report['GOOD_CNT'] = GOOD_CNT

dct_report['BAD_PCTG'] = BAD_PCTG

dct_report['BADRATE'] = BADRATE

val_repot = pd.DataFrame(dct_report)

val_repot

""" 映射分数 """

#['person_info','finance_info','credit_info','act_info']

def score(person_info,finance_info,credit_info,act_info):

xbeta = person_info * ( 3.49460978) + finance_info * ( 11.40051582 ) + credit_info * (2.45541981) + act_info * ( -1.68676079) --0.34484897

score = 650-34* (xbeta)/math.log(2)

return score

val['score'] = val.apply(lambda x : score(x.person_info,x.finance_info,x.credit_info,x.act_info) ,axis=1)

fpr_lr,tpr_lr,_ = roc_curve(val_y,val['score'])

val_ks = abs(fpr_lr - tpr_lr).max()

print('val_ks : ',val_ks)

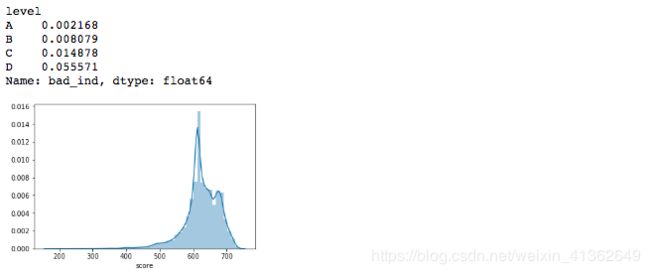

#对应评级区间

def level(score):

level = 0

if score <= 600:

level = "D"

elif score <= 640 and score > 600 :

level = "C"

elif score <= 680 and score > 640:

level = "B"

elif score > 680 :

level = "A"

return level

val['level'] = val.score.map(lambda x : level(x) )

val.level.groupby(val.level).count()/len(val)

""" 画图展示区间分布情况 """

import seaborn as sns

sns.distplot(val.score,kde=True)

val = val.sort_values('score',ascending=True).reset_index(drop=True)

df2=val.bad_ind.groupby(val['level']).sum()

df3=val.bad_ind.groupby(val['level']).count()

print(df2/df3)