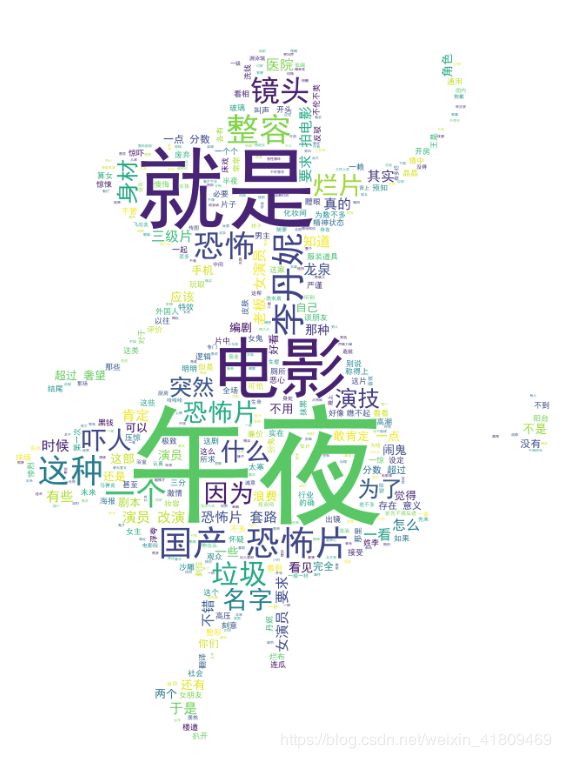

用Python爬取豆瓣首页所有电影名称、每部电影影评及生成词云

1.爬取环境:

- window 7

- Chrome 浏览器

- 注册豆瓣、注册超级鹰

2.安装第三方库:安装第三方库:

- 主程序用到的库有

import sys, time

import pytesseract

from selenium import webdriver

from PIL import Image, ImageEnhance

from chaojiying import Chaojiying_Client - 生成词云的程序用的库有

import os

import os.path

import jieba

from wordcloud import WordCloud

import matplotlib.pyplot as plt

from scipy.misc import imread

import re

3.技术难点:

- python+selenium环境搭建

安装selenium

安装浏览器对应的驱动(推荐用Chrome),驱动程序要与浏览器的版本对应, 将下载的chrome驱动程序chromedriver.exe复制到chrome浏览器的安装目录下,也就是appication目录下,如:chrome的安装路径是:C:\Users\admin\AppData\Local\Google\Chrome\Application

那么将下载的驱动程序chromedriver.exe复制到: C:\Users\admin\AppData\Local\Google\Chrome\Application

路径下,同时将此路径增加到环境变量path中(我的电脑–》右键——》属性——》高级系统设置——》环境变量——》系统变量——》path)中。 - 解决豆瓣对影评数据限制的问题。解决的办法用横扫登录,这就涉及验证码识别,推荐用超级鹰平台进行验证码识别(关注其微信公众号可获得1000题分,够用了)。需要注册,下载对应python的程序,获取ID

4.程序构成。

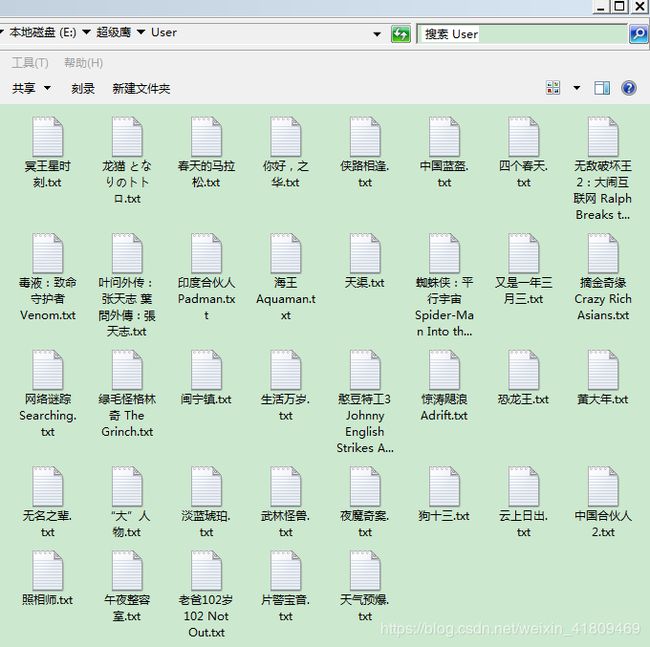

一个是主程序,用于爬取豆瓣电影时模拟登录,获取识别的验证码,最新电影的影评并存储为txt文件;一个是分程序,由主程序调用,用于分词和词云展示。

主程序完整代码

import sys, time

import pytesseract

from selenium import webdriver

from PIL import Image, ImageEnhance

from chaojiying import Chaojiying_Client

from wordclouds import WordAnanlysis #词云库

class Crawler(object):

def __init__(self):

executable_path='C://Users/Administrator/AppData/Local/Google/Chrome/Application/chromedriver.exe' # 驱动

self.driver = webdriver.Chrome(executable_path=executable_path)

#print(self.driver) #打开窗

self.username = '豆瓣登录用户名' # 豆瓣用户名

self.pwd = '豆瓣登录密码' # 密码

self.login_url = "https://accounts.douban.com/login" # 豆瓣登录url

self.base_url = "https://movie.douban.com/" # 豆瓣主页

self.dir = "E://超级鹰/User/" # 爬取的数据存放的目录

self.cjy = Chaojiying_Client('用户名','密码','ID') # 已注册过,超级鹰验证码识别接口

self.verify_image = 'E://超级鹰/input/zxy.png' # 处理前的验证码图片地址

self.result_image ='E://超级鹰/onput/zxy.png' # 处理后的验证码图片地址

def do_login(self):

self.driver.get(self.login_url)

try:

# 判断是否有验证码

img_url = self.driver.find_element_by_xpath('//*[@id="captcha_image"]').get_attribute("src")

print('111',img_url)

self.driver.get(img_url)

self.driver.get_screenshot_as_file(self.verify_image) # 截图保存

self.driver.back()

code = self.getCode(self.verify_image) # 获取验证码

print('222',code)

captcha = self.driver.find_element_by_xpath('//*[@id="captcha_field"]')

captcha.send_keys(code)

except:

Exception

pass

username = self.driver.find_element_by_xpath('//*[@id="email"]')

username.send_keys(self.username)

pwd = self.driver.find_element_by_xpath('//*[@id="password"]')

pwd.send_keys(self.pwd)

submit = self.driver.find_element_by_name('login')

submit.click()

# 获取识别的验证码

def getCode(self, imagePath):

im_origin = Image.open(imagePath)

box = (392, 268, 643, 308)

im_region = im_origin.crop(box) # 截取部分区域

im_region.convert('L') # 图像加强,二值化

sharpness = ImageEnhance.Contrast(im_region) # 对比度加强

im_result = sharpness.enhance(2.0) # 增加饱和度

im_result.save(self.result_image) # 保存处理后的验证码图片

time.sleep(3)

# 开始识别验证码

im = open(self.result_image, 'rb').read()

dict = self.cjy.PostPic(im, 1006)

code = dict['pic_str'].strip()

return code

# 获取电影影评列表

def get_movies_url(self):

self.driver.get(self.base_url)

list = self.driver.find_elements_by_xpath('//*[@id="screening"]/div[2]/ul/li')

length = len(list)

dict = {}

for i in range(0, length):

list = self.driver.find_element_by_xpath('//*[@id="screening"]/div[2]/ul').find_elements_by_class_name(

"ui-slide-item")

if list[i].get_attribute("data-title") is not None:

movie_name = list[i].get_attribute("data-title").strip()

movie_url = list[i].find_element_by_class_name("poster").find_element_by_tag_name("a").get_attribute(

"href")

# 去详情页查找影评的url

self.driver.get(movie_url)

comment_url = self.driver.find_element_by_xpath(

'//*[@id="comments-section"]/div[1]/h2/span/a').get_attribute("href")

# 构造dict

if movie_name not in dict:

dict.update({movie_name: comment_url})

# 返回父页面

self.driver.back()

return dict

#获取评论

def get_comment(self, dict):

if dict is not None and len(dict) > 0:

for k, v in dict.items():

#file_path = self.dir + encode(k.replace(":", "") + ".txt")

file_path = self.dir + k.replace(":", "") + ".txt"

file = open(file_path, "a")

# 获取评论

base_comment_url = v[0:v.find("?") + 1]

# 分页获取

for i in range(0, 25):

url = base_comment_url + "start=" + str(i * 20) + "&limit=20&sort=new_score&status=P&percent_type="

self.driver.get(url)

try:

comments = self.driver.find_element_by_xpath('//*[@id="comments"]').find_elements_by_class_name(

"comment-item")

for item in comments:

content = item.find_element_by_tag_name("p").text

file.write(content + "\n")

except:

Exception

break

file.close()

if __name__ == "__main__":

path='E://超级鹰/User/'

pathp='E://超级鹰/picture/'

crawler = Crawler()

crawler.do_login()

dict = crawler.get_movies_url()

crawler.get_comment(dict)

print('正在生成词云,请稍候!')

w=WordAnanlysis(path,pathp)

w.word()

分程序(wordclouds.py)

#词云展示

import os

import os.path

import jieba

from wordcloud import WordCloud

import matplotlib.pyplot as plt

from scipy.misc import imread

import re

class WordAnanlysis:

def __init__(self,path,pathp):

self.path=path

self.files = os.listdir(path)

self.pathp=pathp

def word(self):

font ='simhei.ttf'

# 背景图片路径

backgroud = imread("fbbb1.png",'r')

# 停用词

stopwords = {}.fromkeys([line.strip() for line in open('stopwords.txt','rb')])

for file in self.files:

txt_path = self.path + file

# 读取txt文件

f = open(txt_path, 'r')

text = f.readlines()

f.close()

final= ''

for seg in text:

if seg not in stopwords:

final += seg

filtrate= re.compile(r'[\u4e00-\u9fa5]')

filterdata = re.findall(filtrate,final)

filterdata=''.join(filterdata)

cut_text2 = " ".join(jieba.cut(filterdata,cut_all=False))

if len(cut_text2)==0:

continue

# 词云准备

cloud = WordCloud(

max_words=1000, #最多显示的词汇量

background_color="white",#背景颜色设置

mask=backgroud,

margin=2 ,#设置页面边缘

min_font_size=4, #显示最小字号

scale=1, #缩放倍数

stopwords=None, #停止词设置,修正词云图时需要

random_state=42,

max_font_size=150,#最大字号

font_path=font, #字体路径,中文需要

)

# 生成词云

word_cloud = cloud.generate(cut_text2)

# 显示词云

plt.imshow(word_cloud)

plt.axis("off")

#plt.show()

cloud.to_file(self.pathp + file.replace("txt", "png"))

if __name__=='__main__':

path ='E://超级鹰/User/'

pathp='E://超级鹰/picture/'

w=WordAnanlysis(path,pathp)

w.word()