Setting Up Hadoop in Single-Node and Pseudo-Distributed Mode

Lab environment: RHEL 7.3 virtual machine

| Host | Role |
|---|---|
| server1 | Hadoop |
| Physical host | Testing |

1. Setting up single-node Hadoop

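Single-node (standalone) mode runs Hadoop as one Java process that reads and writes the local file system directly, using ordinary directories under the Hadoop installation. Pseudo-distributed mode differs in that the HDFS daemons (NameNode, DataNode) run as separate processes and the data is stored in HDFS, a file system separate from those local directories. Together these daemons form a distributed file system, but because they all run on the same machine, the setup is called pseudo-distributed.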

Single-node mode is suitable for testing and development.

1. Create the hadoop user

Place all Hadoop-related installation packages in the hadoop user's home directory and change their owner and group to hadoop.

[root@server1 ~]# useradd hadoop

[root@server1 ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: The password is shorter than 8 characters

Retype new password:

passwd: all authentication tokens updated successfully.

[root@server1 ~]# id hadoop

uid=1001(hadoop) gid=1001(hadoop) groups=1001(hadoop)

[root@server1 ~]# ls

hadoop-2.10.0.tar.gz jdk-8u121-linux-x64.rpm

[root@server1 ~]# mv * /home/hadoop/

[root@server1 ~]# su - hadoop

[hadoop@server1 ~]$ ls

hadoop-2.10.0.tar.gz jdk-8u121-linux-x64.rpm

[root@server1 ~]# cd /home/hadoop/

[root@server1 hadoop]# chown hadoop.hadoop hadoop-2.10.0.tar.gz

[root@server1 hadoop]# chown hadoop.hadoop jdk-8u121-linux-x64.rpm

[root@server1 hadoop]# su - hadoop

Last login: Sun Dec 22 06:17:24 CST 2019 on pts/0

[hadoop@server1 ~]$ ls

hadoop-2.10.0.tar.gz jdk-8u121-linux-x64.rpm

[hadoop@server1 ~]$ ll

total 546732

-r-------- 1 hadoop hadoop 392115733 Dec 22 06:14 hadoop-2.10.0.tar.gz

-rw-r--r-- 1 hadoop hadoop 167733100 Dec 22 06:13 jdk-8u121-linux-x64.rpm

2. Extract the archive

Extract the archive, create a symbolic link to it, and install the JDK.

[hadoop@server1 ~]$ tar zxf hadoop-2.10.0.tar.gz

[hadoop@server1 ~]$ ls

hadoop-2.10.0 jdk-8u121-linux-x64.rpm

hadoop-2.10.0.tar.gz

[hadoop@server1 ~]$ ln -s hadoop-2.10.0 hadoop

[hadoop@server1 ~]$ ls

hadoop hadoop-2.10.0.tar.gz

hadoop-2.10.0 jdk-8u121-linux-x64.rpm

[root@server1 hadoop]# yum install jdk-8u121-linux-x64.rpm

[root@server1 ~]# cp -r /usr/java/jdk1.8.0_121/ java/

[root@server1 ~]# ls

java jdk-8u121-linux-x64.rpm

[root@server1 ~]# mv java/ /home/hadoop/

[root@server1 ~]# exit

logout

[hadoop@server1 ~]$ ls

hadoop hadoop-2.10.0 hadoop-2.10.0.tar.gz java

[root@server1 hadoop]# chown -R hadoop.hadoop java

[root@server1 hadoop]# ll

total 382928

lrwxrwxrwx 1 hadoop hadoop 13 Dec 22 07:08 hadoop -> hadoop-2.10.0

drwxr-xr-x 9 hadoop hadoop 149 Oct 23 03:23 hadoop-2.10.0

-r-------- 1 hadoop hadoop 392115733 Dec 22 06:52 hadoop-2.10.0.tar.gz

drwxr-xr-x 9 hadoop hadoop 268 Dec 22 07:11 java

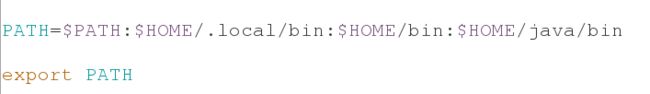

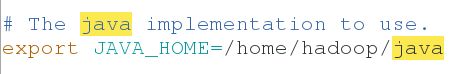

3. Edit the environment variable files

[hadoop@server1 ~]$ vim .bash_profile

[hadoop@server1 ~]$ source .bash_profile
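The lines actually added to `.bash_profile` appear only in the screenshots. A minimal sketch of what they might contain, assuming the JDK copy lives at `~/java` and Hadoop is reached through the `~/hadoop` symlink created in step 2 (the helper variable `PROFILE` is hypothetical):

```shell
#!/bin/bash
# Sketch: append Java/Hadoop settings to the hadoop user's login profile.
# Assumption: JDK copied to ~/java and Hadoop symlinked at ~/hadoop (step 2).
PROFILE="$HOME/.bash_profile"

# Append only once, so re-running this does not duplicate the lines.
# The quoted 'EOF' keeps $HOME/$PATH literal; they expand at login time.
if ! grep -q '^export JAVA_HOME=' "$PROFILE" 2>/dev/null; then
  cat >> "$PROFILE" <<'EOF'
export JAVA_HOME=$HOME/java
export PATH=$PATH:$JAVA_HOME/bin:$HOME/hadoop/bin
EOF
fi
```

After `source .bash_profile`, `java -version` is a quick way to confirm the JDK is on the `PATH`.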

Edit `etc/hadoop/hadoop-env.sh` to declare the Java installation (`JAVA_HOME`):

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ cd etc/

[hadoop@server1 etc]$ cd hadoop/

[hadoop@server1 hadoop]$ vim hadoop-env.sh
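Daemons started over SSH do not necessarily read the login profile, so `JAVA_HOME` is usually set explicitly in `hadoop-env.sh`. A non-interactive sketch of the same edit, assuming Hadoop lives at `~/hadoop` and the JDK copy at `/home/hadoop/java` (the variable names are illustrative):

```shell
#!/bin/bash
# Sketch: set JAVA_HOME in hadoop-env.sh without opening an editor.
# Assumptions: Hadoop at ~/hadoop, JDK copied to /home/hadoop/java.
CONF_DIR="$HOME/hadoop/etc/hadoop"
ENV_FILE="$CONF_DIR/hadoop-env.sh"
JDK_DIR=/home/hadoop/java

mkdir -p "$CONF_DIR" && touch "$ENV_FILE"
if grep -q '^export JAVA_HOME=' "$ENV_FILE"; then
  # Replace the existing line (Hadoop 2.x typically ships
  # "export JAVA_HOME=${JAVA_HOME}").
  sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$JDK_DIR|" "$ENV_FILE"
else
  # No active JAVA_HOME line (e.g. only a commented example): append one.
  echo "export JAVA_HOME=$JDK_DIR" >> "$ENV_FILE"
fi
```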

[hadoop@server1 hadoop]$ pwd

/home/hadoop/hadoop

[hadoop@server1 hadoop]$ ls

bin include libexec NOTICE.txt sbin

etc lib LICENSE.txt README.txt share

[hadoop@server1 hadoop]$ mkdir input

[hadoop@server1 hadoop]$ ls

bin include lib LICENSE.txt README.txt share

etc input libexec NOTICE.txt sbin

[hadoop@server1 hadoop]$ cp etc/hadoop/*.xml input/

[hadoop@server1 hadoop]$ ls input/

capacity-scheduler.xml hdfs-site.xml kms-site.xml

core-site.xml httpfs-site.xml yarn-site.xml

hadoop-policy.xml kms-acls.xml

4. Standalone operation (useful for debugging): run an example program

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.10.0.jar grep input output 'dfs[a-z.]+'

[hadoop@server1 hadoop]$ cd output/

[hadoop@server1 output]$ ls

part-r-00000 _SUCCESS

[hadoop@server1 output]$ cat *

1 dfsadmin
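The `grep` example counts how often each match of the regular expression `dfs[a-z.]+` occurs in the input files and writes the results sorted by count, one `count<TAB>match` pair per line. A rough local cross-check with standard tools (a plain shell pipeline, not Hadoop's implementation; `count_matches` is a hypothetical name):

```shell
#!/bin/bash
# Sketch: approximate the MapReduce "grep" example with grep/sort/uniq.
# Prints "count match" pairs, most frequent first.
count_matches() {
  # LC_ALL=C keeps [a-z] to plain lowercase ASCII letters.
  LC_ALL=C grep -ohE 'dfs[a-z.]+' "$@" | sort | uniq -c | sort -rn
}
```

Running `count_matches input/*.xml` in the Hadoop directory should report the same `dfsadmin` match. Note that MapReduce refuses to write into an existing output directory, so remove `output/` before re-running the Hadoop job.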

At this point the basic single-node setup is complete; next comes the pseudo-distributed setup.

2. Setting up a pseudo-distributed file system

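Set up passwordless SSH to this machine. `start-dfs.sh` starts the daemons over SSH, and in pseudo-distributed mode the SSH target is this same node.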

[hadoop@server1 hadoop]$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa): Created directory '/home/hadoop/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

07:f2:e1:ae:b2:36:83:55:3b:e5:24:85:04:51:1e:e8 hadoop@server1

The key's randomart image is:

+--[ RSA 2048]----+

| o*+. |

| ..... |

| . o.o |

| E o+oo |

| . *S . |

| . o... |

| o .. |

| . = . |

| ..=. |

+-----------------+

[hadoop@server1 ~]$ ssh-copy-id localhost

The authenticity of host 'localhost (::1)' can't be established.

ECDSA key fingerprint is b9:2d:17:ae:2d:07:7c:3a:d6:39:32:ac:72:ad:8b:7f.

Are you sure you want to continue connecting (yes/no)? yes

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@localhost's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'localhost'"

and check to make sure that only the key(s) you wanted were added.
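`ssh-copy-id` essentially appends the public key to `~/.ssh/authorized_keys` on the target host. On a single host the same result can be reached by hand, which is the approach in the Apache single-node guide (`cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys` followed by `chmod 0600`). A sketch wrapped in a reusable function (`authorize_key` is a hypothetical name):

```shell
#!/bin/bash
# Sketch: manual equivalent of "ssh-copy-id localhost".
# $1 = public key file, $2 = .ssh directory (defaults to ~/.ssh).
authorize_key() {
  local pub="$1" ssh_dir="${2:-$HOME/.ssh}"
  local keys="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"
  touch "$keys"
  # Append the key only if that exact line is not already present.
  grep -qxF "$(cat "$pub")" "$keys" || cat "$pub" >> "$keys"
  # With the default StrictModes, sshd rejects key files writable by others.
  chmod 600 "$keys"
}
```

Usage: `authorize_key ~/.ssh/id_rsa.pub`; afterwards `ssh localhost` should log in without a password prompt.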

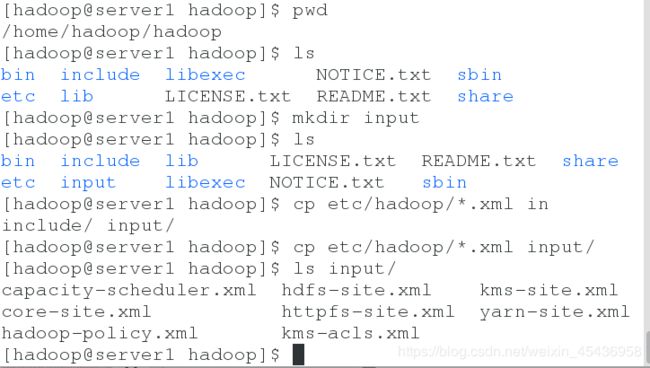

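The workers file may contain either `localhost` or an IP address. (Hadoop 3.x calls this file `workers`; Hadoop 2.x, including the 2.10.0 release installed in part 1, reads `slaves` instead, as the directory listing below shows.)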

To make later experiments easier, the IP address is used here.
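Writing the file from the shell instead of `vim` could look like the sketch below. The IP `172.25.24.1` is server1's address as it appears in the `start-dfs.sh` output later in this section; `HOSTS_FILE` is a hypothetical helper variable for picking the file name that matches the Hadoop version.

```shell
#!/bin/bash
# Sketch: list this host as the only worker (DataNode) host.
# Assumption: configuration directory is ~/hadoop/etc/hadoop.
CONF_DIR="$HOME/hadoop/etc/hadoop"
WORKER_IP=172.25.24.1   # server1's address in this lab; adjust as needed
HOSTS_FILE=workers      # Hadoop 3.x name; use "slaves" on Hadoop 2.x

mkdir -p "$CONF_DIR"
echo "$WORKER_IP" > "$CONF_DIR/$HOSTS_FILE"
```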

[hadoop@server1 ~]$ cd hadoop/

[hadoop@server1 hadoop]$ ls

bin etc include input lib libexec LICENSE.txt NOTICE.txt output README.txt sbin share

[hadoop@server1 hadoop]$ cd etc/

[hadoop@server1 etc]$ ls

hadoop

[hadoop@server1 etc]$ cd hadoop/

[hadoop@server1 hadoop]$ ls

capacity-scheduler.xml httpfs-env.sh mapred-env.sh

configuration.xsl httpfs-log4j.properties mapred-queues.xml.template

container-executor.cfg httpfs-signature.secret mapred-site.xml.template

core-site.xml httpfs-site.xml slaves

hadoop-env.cmd kms-acls.xml ssl-client.xml.example

hadoop-env.sh kms-env.sh ssl-server.xml.example

hadoop-metrics2.properties kms-log4j.properties yarn-env.cmd

hadoop-metrics.properties kms-site.xml yarn-env.sh

hadoop-policy.xml log4j.properties yarn-site.xml

hdfs-site.xml mapred-env.cmd

[hadoop@server1 hadoop]$ vim workers

[hadoop@server1 hadoop]$ vim hdfs-site.xml

Set the replication factor to 1, because only this one node runs a DataNode process.

Also point the master node (the NameNode address) at this machine.

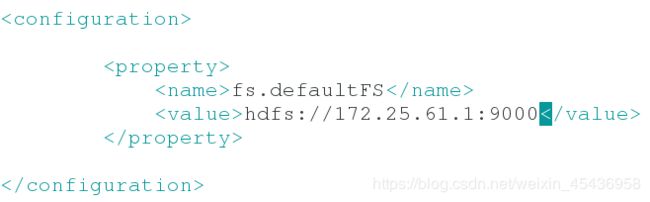
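The screenshot's contents are not reproduced in text. A minimal `hdfs-site.xml` with a replication factor of 1 might look like this (written through a heredoc here; the same XML can be typed in `vim`). This sketch assumes the configuration directory is `~/hadoop/etc/hadoop` and overwrites the existing file:

```shell
#!/bin/bash
# Sketch: minimal hdfs-site.xml for a single DataNode.
# Assumption: configuration directory is ~/hadoop/etc/hadoop.
CONF_DIR="$HOME/hadoop/etc/hadoop"
mkdir -p "$CONF_DIR"
cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <!-- Only one DataNode exists, so keep a single copy of every block. -->
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
```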

[hadoop@server1 hadoop]$ vim core-site.xml
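In `core-site.xml`, the master (NameNode) address is set through `fs.defaultFS`. The sketch below assumes `hdfs://localhost:9000`, the value used in the Apache single-node guide; the log line `Starting namenodes on [localhost]` further down suggests `localhost` was used in this setup, but the port is an assumption.

```shell
#!/bin/bash
# Sketch: point the default file system at a NameNode on this host.
# Assumptions: config dir ~/hadoop/etc/hadoop; port 9000 as in the Apache guide.
CONF_DIR="$HOME/hadoop/etc/hadoop"
mkdir -p "$CONF_DIR"
cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <!-- NameNode RPC address on this host. -->
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
```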

[hadoop@server1 hadoop]$ cd ..

[hadoop@server1 etc]$ cd ..

[hadoop@server1 hadoop]$ bin/hdfs namenode -format
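Formatting should normally happen only once: re-running `hdfs namenode -format` generates a new cluster ID, and a DataNode that still holds the old ID then fails to start with an "Incompatible clusterIDs" error. A guard sketch (`needs_format` is a hypothetical helper), assuming the default NameNode storage location `/tmp/hadoop-<user>/dfs/name`, which applies unless `hadoop.tmp.dir` or `dfs.namenode.name.dir` has been changed:

```shell
#!/bin/bash
# Sketch: report whether a NameNode storage directory still needs formatting.
# A formatted directory contains current/VERSION (which records the clusterID).
needs_format() {
  local name_dir="$1"
  [ ! -f "$name_dir/current/VERSION" ]
}
```

Usage: `needs_format "/tmp/hadoop-$USER/dfs/name" && bin/hdfs namenode -format`.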

Start the services:

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [server1]

server1: Warning: Permanently added 'server1,172.25.24.1' (ECDSA) to the list of known hosts.
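`start-dfs.sh` prints little, so it is worth confirming that the daemons actually came up. `jps`, which ships with the JDK, lists running Java processes as `PID Name` lines; a small filter over its output (`check_hdfs_daemons` is a hypothetical name):

```shell
#!/bin/bash
# Sketch: read `jps` output on stdin and check for the three HDFS daemons.
# Returns non-zero and names each daemon that is missing.
check_hdfs_daemons() {
  local out missing=0 d
  out=$(cat)
  for d in NameNode DataNode SecondaryNameNode; do
    # Compare the whole process-name column, so "NameNode" does not
    # accidentally match "SecondaryNameNode".
    if ! printf '%s\n' "$out" | awk '{print $2}' | grep -qx "$d"; then
      echo "missing: $d"
      missing=1
    fi
  done
  return $missing
}
```

Usage: `jps | check_hdfs_daemons && echo "HDFS is up"`. If a daemon is missing, its log under the `logs/` directory of the Hadoop installation is the first place to look.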

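Create the HDFS directories and upload the data. Relative HDFS paths such as `input` resolve to the user's home directory `/user/<username>` (here `/user/hadoop`), which is why that directory is created first.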

[hadoop@server1 ~]$ cd hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir input

[hadoop@server1 hadoop]$ bin/hdfs dfs -put etc/hadoop/*.xml input

[hadoop@server1 hadoop]$ ls

bin include lib LICENSE.txt NOTICE.txt README.txt share

etc input libexec logs output sbin

Delete the local copy of the input, so that the next job can only read its input from HDFS:

[hadoop@server1 hadoop]$ rm -rf input

[hadoop@server1 hadoop]$ ls

bin include libexec logs output sbin

etc lib LICENSE.txt NOTICE.txt README.txt share

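Run the example job again, this time reading its input from HDFS. The jar name must match the installed release: the command below uses the 3.0.3 examples jar, whereas the installation from part 1 is 2.10.0 (`share/hadoop/mapreduce/hadoop-mapreduce-examples-2.10.0.jar`).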

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.0.3.jar grep input output 'dfs[a-z.]+'

[hadoop@server1 hadoop]$ bin/hdfs dfs -ls

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2019-12-22 10:18 input

drwxr-xr-x - hadoop supergroup 0 2019-12-22 10:24 output

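Compare the output stored in HDFS with the local output. The HDFS run also finds `dfs.replication`, because the `hdfs-site.xml` uploaded to HDFS now contains that property; the local `output/` directory is left over from the standalone run, when `hdfs-site.xml` did not yet contain it: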

[hadoop@server1 hadoop]$ bin/hdfs dfs -cat output/*

1 dfsadmin

1 dfs.replication

[hadoop@server1 hadoop]$ cat output/*

1 dfsadmin